Processing 40 TB of code from 10 million projects on a dedicated server with Go for $100

I wrote the command line tool Sloc Cloc and Code (scc), which is now refined and maintained by many great people. It counts lines of code and comments, and estimates the complexity of files inside a directory. The complexity estimate needs a good sample to be useful. The tool works by counting branching statements in the code. But what does that number mean? A statement such as “This file has complexity 10” is not very useful without context. To fix this, I ran scc on all the source code on the Internet. This would also let me find edge cases that I had not considered in the tool itself: a powerful brute-force test.

But running the test on all the source code in the world requires a lot of computing resources, which is an interesting experience in its own right. So I decided to write everything down, and this article is the result.

In short, I downloaded and processed a lot of sources.

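The raw numbers:

- 9,985,051 total repositories
- 9,100,083 repositories with at least one file
- 884,968 empty repositories (without files)
- 3,500,000,000 files in all repositories
- 40,736,530,379,778 bytes processed (40 TB)
- 1,086,723,618,560 lines identified
- 816,822,273,469 lines recognized as code
- 124,382,152,510 blank lines
- 145,519,192,581 lines of comments
- Total complexity according to scc rules: 71,884,867,919
- 2 new bugs found in scc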

One detail first. There are not quite 10 million projects, as the attention-grabbing title claims. I fell about 15,000 short, so I rounded up. I apologize for this.

It took about five weeks to download everything, run it through scc and save all the data. It then took a little more than 49 hours to process the 1 TB of JSON and get the results below.

Also note that I may have made mistakes in some calculations. I will report any error promptly if one is found, and provide the dataset.

Table of contents

- Methodology
- Presentation and calculation of results
- Cost
- Data sources
- How many files are in a repository?
- What is the language breakdown?
- How many files are in a repository per language?
- How many lines of code are in a typical file per language?
- What is the average file complexity in each language?
- What is the average number of comments per file in each language?
- What are the most common file names?
- How many repositories are missing a license?
- Which languages have the most comments?
- How many projects use multiple .gitignore files?
- Which language has the most curses?
- The largest files by number of lines in each language
- What is the most complex file in each language?
- What is the most complex file relative to its number of lines?
- What is the most commented file in each language?
- How many “pure” projects are there?
- Projects with TypeScript but not JavaScript
- Does anyone use CoffeeScript and TypeScript?
- What is the typical path length in each language?
- YAML or YML?
- Upper, lower, or mixed case?
- Factories in Java
- .ignore files
- Ideas for the future
- Why do all this?
- Unprocessed / processed files

Methodology

Since launching searchcode.com, I have accumulated a collection of more than 7,000,000 projects hosted on git, mercurial, subversion and so on. So why not process them? Working with git is usually the easiest option, so this time I ignored mercurial and subversion and exported a complete list of git projects. It turns out I was actually tracking 12 million git repositories, and I probably need to update the home page to reflect this.

So now I had 12 million git repositories to download and process.

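When you run scc, you can choose JSON output and save it to a file on disk:

scc --format json --output myfile.json main.go

The result looks like this (for a single file):

[
  {
    "Blank": 115,
    "Bytes": 0,
    "Code": 423,
    "Comment": 30,
    "Complexity": 40,
    "Count": 1,
    "Files": [
      {
        "Binary": false,
        "Blank": 115,
        "Bytes": 20396,
        "Callback": null,
        "Code": 423,
        "Comment": 30,
        "Complexity": 40,
        "Content": null,
        "Extension": "go",
        "Filename": "main.go",
        "Hash": null,
        "Language": "Go",
        "Lines": 568,
        "Location": "main.go",
        "PossibleLanguages": [ "Go" ],
        "WeightedComplexity": 0
      }
    ],
    "Lines": 568,
    "Name": "Go",
    "WeightedComplexity": 0
  }
]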

For a larger example, see the results for the redis project: redis.json. All the results below were derived from output like this, without any additional data.
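
To make the shape of scc's JSON output concrete, here is a minimal Go sketch that decodes it. The type names `LanguageSummary` and `FileJob` and the helper `parseSummaries` are assumptions chosen for this illustration. Only the JSON field names come from the output shown in this article, and only a subset of them is mapped.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// FileJob maps a subset of the per-file fields (hypothetical type name).
type FileJob struct {
	Filename   string
	Language   string
	Location   string
	Lines      int64
	Code       int64
	Comment    int64
	Blank      int64
	Complexity int64
	Bytes      int64
}

// LanguageSummary maps a subset of the per-language fields (hypothetical type name).
type LanguageSummary struct {
	Name       string
	Count      int64
	Lines      int64
	Code       int64
	Comment    int64
	Blank      int64
	Complexity int64
	Files      []FileJob
}

// parseSummaries decodes one JSON document into per-language summaries.
// Fields that are not mapped above are silently ignored by encoding/json.
func parseSummaries(data []byte) ([]LanguageSummary, error) {
	var out []LanguageSummary
	err := json.Unmarshal(data, &out)
	return out, err
}

func main() {
	sample := []byte(`[{"Name":"Go","Count":1,"Lines":568,"Code":423,"Complexity":40,
		"Files":[{"Filename":"main.go","Language":"Go","Lines":568,"Bytes":20396}]}]`)
	summaries, err := parseSummaries(sample)
	if err != nil {
		panic(err)
	}
	for _, s := range summaries {
		fmt.Printf("%s: %d file(s), %d code lines, complexity %d\n", s.Name, s.Count, s.Code, s.Complexity)
	}
}
```

Every aggregate discussed later can be computed from values of this shape, one repository at a time.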

Keep in mind that scc usually classifies languages by file extension (unless the extension is shared between languages, as with Verilog and Coq). So if you save an HTML file with a .java extension, it will be counted as a Java file. This is usually not a problem, because why would you do that? But at large scale the problem becomes noticeable. I discovered this later, when some files turned out to be disguised with a different extension.

Some time ago, I wrote code for generating scc-based GitHub badges. Since that process needed to cache its results, I changed it slightly to cache them as JSON in AWS S3.

With the badge code running in AWS Lambda, I took the exported list of projects, wrote about 15 lines of Python to clean up the format so it matched what my lambda expected, and made requests to it. Using Python multiprocessing, I ran the requests in 32 parallel processes to get reasonable throughput from the endpoint.

Everything worked brilliantly. However, there were two problems. The first was cost. The second was that lambda has a 30-second timeout behind API Gateway / ALB, so it cannot process large repositories fast enough. I knew this was not the most economical solution, but I thought the price would be about $100, which I could live with. After processing a million repositories I checked, and the cost was about $60. Since I was not happy with the prospect of a final AWS bill of about $700, I decided to reconsider. Keep in mind that this was mostly the storage and CPU used to collect all this information. Any processing and export of the data would have increased the price significantly.

Since I was already on AWS, a quick solution would have been to dump the URLs as messages into SQS and pull them out with EC2 or Fargate instances for processing. Then scale like crazy. But despite my day-to-day experience with AWS, I have always believed in the principles of Taco Bell programming. Besides, there were only 12 million repositories, so I decided to implement a simpler (cheaper) solution.

Running the computation locally was not possible due to the terrible internet in Australia. However, searchcode.com runs on dedicated servers from Hetzner and uses them rather sparingly. These are fairly powerful quad-core i7 machines with 32 GB of RAM, often with 2 TB of storage (usually unused). They normally have plenty of spare computing power. For example, the front-end server spends most of its time calculating the square root of zero. So why not run the processing there?

This is not quite Taco Bell programming, which relies on bash and GNU tools. I wrote a simple Go program that runs 32 goroutines, which read data from a channel and spawn git and scc subprocesses before writing the JSON output to S3. I actually wrote the solution in Python first, but having to install pip dependencies on my clean server seemed like a bad idea, and the system crashed in strange ways that I did not want to debug.
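
The worker layout described above can be sketched roughly as follows. This is an illustration, not the program that was actually run: `processAll` and the `process` callback are hypothetical names, and the callback stands in for the real work of cloning with git, running scc and uploading the JSON.

```go
package main

import (
	"fmt"
	"sync"
)

// processAll fans the repository URLs out to `workers` goroutines that all
// read from a single channel. It returns how many URLs succeeded and failed.
func processAll(urls []string, workers int, process func(url string) bool) (ok, failed int) {
	jobs := make(chan string)
	var wg sync.WaitGroup
	var mu sync.Mutex // guards ok and failed

	for i := 0; i < workers; i++ {
		wg.Add(1)
		go func() {
			defer wg.Done()
			for url := range jobs {
				success := process(url)
				mu.Lock()
				if success {
					ok++
				} else {
					failed++
				}
				mu.Unlock()
			}
		}()
	}

	for _, u := range urls {
		jobs <- u
	}
	close(jobs) // lets the workers finish once the queue is drained
	wg.Wait()
	return ok, failed
}

func main() {
	urls := []string{"https://example.com/a.git", "https://example.com/b.git"}
	ok, failed := processAll(urls, 32, func(url string) bool {
		// A real worker would start git and scc here, for example with os/exec.
		fmt.Println("processing", url)
		return true
	})
	fmt.Println("ok:", ok, "failed:", failed)
}
```

An unbuffered channel keeps the producer from running ahead of the workers, so memory use stays flat no matter how long the URL list is.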

Running all this on the server produced the following metrics in htop. The several running git / scc processes (scc does not appear in this screenshot) suggested that everything was working as expected, which the results in S3 confirmed.

Presentation and calculation of results

I recently read these articles, which gave me the idea of borrowing their format for presenting the information. I also wanted to add jQuery DataTables to the large tables, to allow sorting and searching / filtering of the results. So in the original article you can click on the headings to sort and use the search field to filter.

The size of the data to be processed raised another question. How do you process 10 million JSON files that occupy a little more than 1 TB of disk space in an S3 bucket?

My first thought was AWS Athena. But since it would cost something like $2.50 per query for a dataset of this size, I quickly started looking for an alternative. That said, if you keep the data there and rarely query it, this may be the cheapest solution.

I posted the question in our corporate chat (why solve problems alone?).

One idea was to dump the data into a large SQL database. However, this means loading the data into the database and then running queries over it several times. Plus, the structure of the data means multiple tables, which means foreign keys and indexes to get a reasonable level of performance. This seems wasteful, because we could simply process the data as we read it from disk, in a single pass. I was also worried about building such a large database. With data alone, it would be more than 1 TB in size before adding indexes.

Seeing how I had produced the JSON in a simple way, I thought, why not process the results in the same way? Of course, there is one problem. Pulling 1 TB of data out of S3 costs a lot, and if the program crashes, that is annoying. To reduce costs, I wanted to pull all the files down locally and keep them for further processing. A good tip: it is better not to store many small files in one directory. It is bad for runtime performance, and file systems do not like it.

My answer to this was another simple Go program that pulls the files from S3 and stores them in a tar file. I could then process that file again and again. The processing itself is done by a very ugly Go program that works through the tar file, so that I can re-run queries without pulling the data from S3 each time. I did not bother with goroutines here, for two reasons. First, I did not want to max out the server, so I limited the heavy CPU work to one core (another core was mostly kept busy reading the tar file). Second, I wanted to guarantee thread safety.
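
The tar approach can be sketched with Go's standard `archive/tar` package. This is a minimal illustration under assumptions, not the original program: `writeTar`, `walkTar` and `roundTrip` are hypothetical names, and an in-memory buffer stands in for the file on disk and for the S3 download.

```go
package main

import (
	"archive/tar"
	"bytes"
	"fmt"
	"io"
)

// writeTar stores each named JSON document as one entry in a tar stream.
func writeTar(w io.Writer, files map[string][]byte) error {
	tw := tar.NewWriter(w)
	for name, data := range files {
		hdr := &tar.Header{Name: name, Mode: 0o644, Size: int64(len(data))}
		if err := tw.WriteHeader(hdr); err != nil {
			return err
		}
		if _, err := tw.Write(data); err != nil {
			return err
		}
	}
	return tw.Close()
}

// walkTar reads the archive sequentially, one entry at a time, in a single pass.
func walkTar(r io.Reader, visit func(name string, data []byte)) error {
	tr := tar.NewReader(r)
	for {
		hdr, err := tr.Next()
		if err == io.EOF {
			return nil // end of archive
		}
		if err != nil {
			return err
		}
		data, err := io.ReadAll(tr)
		if err != nil {
			return err
		}
		visit(hdr.Name, data)
	}
}

// roundTrip writes the files to an in-memory archive and reads them back,
// returning the size of every entry by name.
func roundTrip(files map[string][]byte) (map[string]int, error) {
	var buf bytes.Buffer
	if err := writeTar(&buf, files); err != nil {
		return nil, err
	}
	sizes := make(map[string]int)
	err := walkTar(&buf, func(name string, data []byte) { sizes[name] = len(data) })
	return sizes, err
}

func main() {
	sizes, err := roundTrip(map[string][]byte{"redis.json": []byte(`[]`)})
	if err != nil {
		panic(err)
	}
	fmt.Println(sizes)
}
```

Because a tar file is read front to back, the single-pass, single-reader design described above falls out naturally.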

With that done, I needed a set of questions to answer. Once again I used the hive mind and roped in my colleagues while I came up with ideas of my own. The result of this meeting of minds is presented below.

You can find all the code that I used to process the JSON, including the code for local processing and the ugly Python script that I used to turn it into something useful for this article. Please do not comment on it. I know the code is ugly, and it was written for a one-off task, since I am unlikely ever to look at it again.

If you want to see code that I wrote for general use, look at the scc sources.

Cost

I spent about $60 on compute while trying to work with lambda. I have not yet looked at the S3 storage cost, but it should be close to $25, depending on the size of the data. This does not include transfer costs, which I did not track either. Note that I emptied the bucket when I was finished with it, so this is not an ongoing cost.

But after a while I abandoned AWS anyway. So what would the real cost be if I wanted to do it again?

All the software is free and open source. So there is nothing to worry about there.

In my case the cost would be zero, since I used the “free” computing power left over from searchcode.com. However, not everyone has such spare resources. So let's assume that someone else wants to repeat this and has to rent a server.

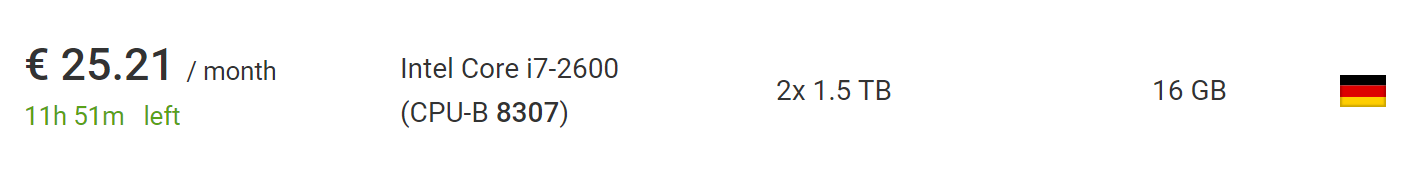

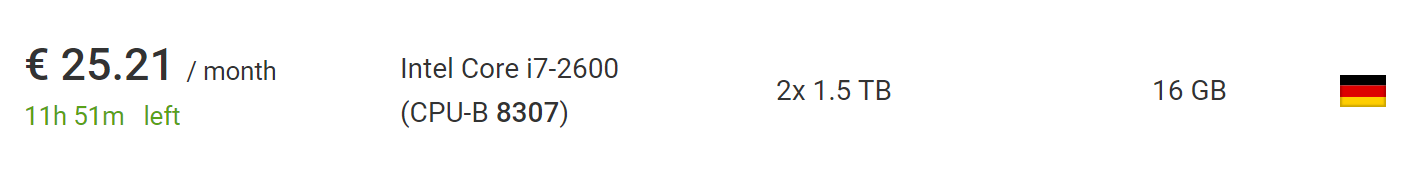

This can be done for €73 using the cheapest new dedicated server from Hetzner, including the setup fee for a new server. If you wait and dig through the auction section, you can find much cheaper servers with no setup fee. At the time of writing, I found a machine that is perfect for this project for €25.21 a month with no setup fee.

Even better, outside the European Union the VAT is removed from this price, so you can safely knock off another 10%.

So if you set up such a server from scratch with my software, it will cost up to $100 in the end, and more likely up to $50 if you are a little patient or lucky. This assumes that you use the server for less than two months, which is enough for downloading and processing. It also leaves enough time to obtain a list of 10 million repositories.

If I had used a gzipped tar (which is actually not that difficult), I could process 10 times as many repositories on the same machine, and the resulting file would still be small enough to fit on the same HDD. The process could take several months, though, because the download would take longer.

Going far beyond 100 million repositories, however, would require some kind of sharding. Still, it is safe to say that you could repeat the process at my scale, or a much larger one, on the same hardware without much effort or many code changes.

Data sources

Here is how many projects came from each of the three sources: GitHub, Bitbucket and GitLab. Note that this is before empty repositories were excluded, so the total exceeds the number of repositories that were actually processed and counted in the tables that follow.

I apologize to the GitHub / Bitbucket / GitLab staff if you are reading this. If my script caused any problems (although I doubt it), I owe you a drink of your choice when we meet.

How many files are in a repository?

Let's move on to the real questions, starting with a simple one. How many files are in the average repository? Do most projects have only a couple of files, or more? After looping through the repositories, we get the following chart:

Here the X axis shows buckets by number of files, and the Y axis shows the number of projects with that many files. I limited the horizontal axis to a thousand files, because beyond that the graph hugs the axis.

It looks like most repositories have fewer than 200 files.

But what about a view up to the 95th percentile, which shows the real picture? It turns out that the vast majority (95%) of projects have fewer than 1,000 files, while 90% of projects have fewer than 300 files and 85% have fewer than 200.
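
As an illustration of how such percentile cut-offs can be derived from a list of per-repository file counts, here is a small Go sketch using the nearest-rank method. The function name `percentile` and the sample data are assumptions. The article does not say which percentile definition was used.

```go
package main

import (
	"fmt"
	"sort"
)

// percentile returns the smallest value v in counts such that at least
// p percent of all values are <= v (nearest-rank method).
// counts must not be empty and p is expected to be between 1 and 100.
func percentile(counts []int, p int) int {
	sorted := append([]int(nil), counts...) // copy so the caller's slice is untouched
	sort.Ints(sorted)
	rank := (p*len(sorted) + 99) / 100 // integer ceil(p/100 * n)
	if rank < 1 {
		rank = 1
	}
	if rank > len(sorted) {
		rank = len(sorted)
	}
	return sorted[rank-1]
}

func main() {
	// Made-up file counts for ten repositories; one outlier dominates the tail.
	fileCounts := []int{1, 2, 3, 4, 5, 6, 7, 8, 9, 1000}
	for _, p := range []int{50, 90, 95} {
		fmt.Printf("p%d = %d files\n", p, percentile(fileCounts, p))
	}
}
```

The single outlier only shows up at the 95th percentile, which is why the chart is cut off before the long tail.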

If you want to build a chart yourself and do it better than I did, here is a link to the raw data in JSON.

What is the language breakdown?

For example, if a Java file is found, we increase the Java project count by one, and do nothing for a second Java file in the same project. This gives a quick idea of which languages are most commonly used. Unsurprisingly, the most common languages include markdown, .gitignore and plaintext.
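
The counting rule above (each language counts at most once per project) can be sketched in Go as follows. The function name `countProjectsPerLanguage` and the input shape, one slice of per-file language names per project, are assumptions made for this example.

```go
package main

import "fmt"

// countProjectsPerLanguage returns, for every language, the number of
// projects that contain at least one file in that language.
func countProjectsPerLanguage(projects [][]string) map[string]int {
	counts := make(map[string]int)
	for _, fileLanguages := range projects {
		seen := make(map[string]struct{}) // languages already counted for this project
		for _, lang := range fileLanguages {
			if _, done := seen[lang]; done {
				continue // a second Java file does not count again
			}
			seen[lang] = struct{}{}
			counts[lang]++
		}
	}
	return counts
}

func main() {
	projects := [][]string{
		{"Java", "Java", "Markdown"},
		{"Markdown", "gitignore"},
	}
	fmt.Println(countProjectsPerLanguage(projects))
}
```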

Markdown is the most frequently used language. It is seen in more than 6 million projects, which is about two thirds of the total. This makes sense, since almost all projects include a README.md, which is rendered as HTML on repository pages.

How many files are in a repository per language?

This is an addition to the previous table, but averaged by the number of files for each language in a repository. That is, how many Java files exist on average across all projects that contain Java?

How many lines of code are in a typical file per language?

I think it is still interesting to see which languages have the largest files on average. Using the arithmetic mean produces abnormally high numbers because of files like sqlite.c, which is included in many repositories and combines many files into one, although nobody ever works on that one large file (I hope!).

Therefore, I calculated an average of the medians. However, languages with absurdly high values, such as Bosque and JavaScript, still remained.

So I thought, why not try a different tack? At the suggestion of Darrell (a Kablamo resident and an excellent data scientist), I made one small change to the arithmetic mean: files over 5,000 lines are dropped to remove the anomalies.
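
A minimal Go sketch of that adjusted mean is shown below. The function name `meanLinesBelow` and the sample values are assumptions. The only thing taken from the text is the rule that files above a line threshold are left out of the arithmetic mean.

```go
package main

import "fmt"

// meanLinesBelow returns the arithmetic mean of the line counts, ignoring
// every file that has more than maxLines lines. It returns 0 if no file is left.
func meanLinesBelow(lines []int64, maxLines int64) float64 {
	var sum, n int64
	for _, l := range lines {
		if l > maxLines {
			continue // treat very large files as anomalies
		}
		sum += l
		n++
	}
	if n == 0 {
		return 0
	}
	return float64(sum) / float64(n)
}

func main() {
	// Three ordinary files and one amalgamated file of 250,000 lines (made-up values).
	lines := []int64{100, 200, 300, 250000}
	fmt.Println("plain mean:   ", meanLinesBelow(lines, 1<<62))
	fmt.Println("filtered mean:", meanLinesBelow(lines, 5000))
}
```

With the outlier included the mean is dominated by one file. With the 5,000-line cut-off it reflects the files people actually edit.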

What is the average file complexity in each language?

What is the average file complexity for each language?

In fact, complexity ratings cannot be compared directly between languages, as the scc README itself notes.

So languages cannot be compared with each other here, although it can be done between similar languages, such as Java and C.

This is a more valuable metric for individual files in the same language. It lets you answer the question “Is the file I am working on simpler or more complex than average?”

I should mention that I welcome suggestions on how to improve this metric in scc. A contribution usually only needs to add a few keywords to the languages.json file, so any programmer can help.

What is the average number of comments per file in each language?

What is the average number of comments in files in each language?

Perhaps the question can be rephrased: in which language do developers write the most comments, presumably assuming the reader will not understand the code?

What are the most common file names?

Which file names are most common across all codebases, ignoring extension and case?

If you had asked me beforehand, I would have said: README, main, index, license. The results match my assumptions pretty well, although there are plenty of interesting things in there. I have no idea why some of these file names turn up in so many projects.

That makefile is the most common name surprised me a bit, but then I remembered that it is used in many new JavaScript projects. Another interesting thing to note: it seems that jQuery is still going strong, and the reports of its death are greatly exaggerated. It is in fourth place on the list.

Please note that due to memory constraints I made this process slightly less accurate. After every 100 projects, I checked the map and deleted the file names that had occurred fewer than 10 times. They could reappear in a later batch, and if they then reached the threshold they stayed in the list. Some results may therefore be slightly off, if a common name happened to be rare in the first batches of repositories before becoming common. In short, these are not absolute numbers, but they should be close enough.

I could have used a prefix tree (trie) to “squeeze” the space and get absolute numbers, but I did not feel like writing one, so I slightly abused the map to save enough memory and get a result. It would be quite interesting to try a prefix tree later, though.
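
The pruning trick described above can be sketched in Go like this. The type `nameCounter`, its fields and its methods are hypothetical names. The batch size of 100 projects and the threshold of 10 occurrences follow the description in the text, and the file names are assumed to be normalized (extension removed, lower-cased) before they are passed in.

```go
package main

import "fmt"

// nameCounter counts file names while periodically dropping rare ones,
// trading a little accuracy for bounded memory use.
type nameCounter struct {
	counts   map[string]int
	projects int // projects seen so far
	every    int // prune after this many projects
	minCount int // names seen fewer times than this are dropped when pruning
}

func newNameCounter(every, minCount int) *nameCounter {
	return &nameCounter{counts: make(map[string]int), every: every, minCount: minCount}
}

// addProject records the file names of one project and prunes when due.
func (c *nameCounter) addProject(names []string) {
	for _, n := range names {
		c.counts[n]++
	}
	c.projects++
	if c.projects%c.every != 0 {
		return
	}
	for name, count := range c.counts {
		if count < c.minCount {
			delete(c.counts, name) // deleting during range is safe in Go
		}
	}
}

func main() {
	c := newNameCounter(100, 10)
	for i := 0; i < 100; i++ {
		names := []string{"readme"}
		if i == 0 {
			names = append(names, "rare-name")
		}
		c.addProject(names)
	}
	fmt.Println(c.counts) // only names that reached the threshold survive the prune
}
```

A name that is rare early on loses its partial count at each prune, which is exactly the source of the small error mentioned above.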

How many repositories are missing a license?

This one is very interesting. How many repositories have at least some explicit license file? Note that the absence of a license file here does not mean the project has no license, since it may exist in the README file or be indicated through SPDX comment tags in the source lines. It simply means that scc could not find an explicit license file using its own criteria. Currently, files named “license”, “licence”, “copying”, “copying3”, “unlicense”, “unlicence”, “license-mit”, “licence-mit” or “copyright” are treated as license files.

Unfortunately, the vast majority of repositories do not have a license. I would say that there are many reasons why any software needs a license, but someone else has already said it for me.

How many projects use multiple .gitignore files?

Some may not know this, but a git project can contain several .gitignore files. With that in mind, how many projects use multiple .gitignore files? And while we are at it, how many have none at all?

I found a rather interesting project with 25,794 .gitignore files in the repository. The next highest result was 2,547. I have no idea what is going on there. I had a brief look: they seem to be used to get directories checked in, but I cannot confirm this.

Returning to the data, here is a graph of repositories with up to 20 .gitignore files, which covers 99% of all projects.

As expected, most projects have 0 or 1 .gitignore files. This is confirmed by a massive tenfold drop in the number of projects with 2 files. What surprised me was how many projects have more than one .gitignore file. The long tail in this case is especially long.

I was curious why some projects have thousands of such files. One of the main culprits is the forks of https://github.com/PhantomX/slackbuilds: each of them has about 2,547 .gitignore files. Other repositories with more than a thousand .gitignore files are listed below.

Which language has the most curses?

This section is not an exact science; it belongs to the class of natural language processing problems. Searching for swear words or offensive terms in a given list of file names will never be precise. A plain substring search turns up many perfectly ordinary files. So I took a list of curses and checked whether any file in each project starts with one of these words followed by a dot. This means that a file whose name is exactly such a word plus an extension is counted, while a file whose name merely begins with or contains the word is not. However, this misses many variations and other equally crude names.

My list contains some leetspeak spellings, to catch some of the interesting cases. Full list here.
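
The matching rule (a file name counts only if it starts with a listed word immediately followed by a dot) can be sketched in Go as follows. The function name `startsWithTermDot` is hypothetical, the word list here uses harmless placeholders rather than the real list, and lower-casing the file name before matching is an assumption.

```go
package main

import (
	"fmt"
	"strings"
)

// startsWithTermDot reports whether the lower-cased file name starts with
// one of the terms immediately followed by a dot.
func startsWithTermDot(filename string, terms []string) bool {
	lower := strings.ToLower(filename)
	for _, term := range terms {
		if strings.HasPrefix(lower, term+".") {
			return true
		}
	}
	return false
}

func main() {
	terms := []string{"darn", "heck"} // placeholder words, not the real list
	for _, name := range []string{"darn.go", "Heck.java", "darnit.go", "undarn.py"} {
		fmt.Printf("%-10s counted=%v\n", name, startsWithTermDot(name, terms))
	}
}
```

Requiring the dot right after the word is what keeps ordinary names that merely contain a listed word out of the count, at the price of missing longer variations.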

Although this is not entirely accurate, as already mentioned, the results are incredibly interesting to look at. Let's start with the list of languages with the most curses. The result should probably be weighed against the total amount of code in each language. So here are the leaders.

Interesting! My first thought was: “Oh, those naughty C developers!” But despite the large number of such files, they write so much code that the percentage of curses gets lost in the total. However, it is pretty clear that Dart developers have a few choice words in their arsenal! If you know a Dart programmer, you can shake their hand.

I also wanted to know which curses are used most often. Let's look at our collective dirty mind. Some of the top ones I found could pass for normal names (if you squint), but most of the rest would certainly raise eyebrows among colleagues and draw a few comments in pull requests.

Note that some of the more offensive words on the list did have matching file names, which I find pretty shocking. Fortunately, they are not very common and do not appear in the list above, which is limited to names that occur more than 100 times. I hope these files exist only for testing allow / deny lists and the like.

The largest files by number of lines in each language

As expected, plaintext, SQL, XML, JSON and CSV take the top positions: they usually contain metadata, database dumps and the like.

Note: some of the links below may not work because of some extra information added when the files were created. Most should work, but for some you might need to modify the URL slightly.

What is the most complex file in each language?

Once again, these values are not directly comparable with each other, but it is interesting to see what is considered the most complex in each language.

Some of these files are absolute monsters. For example, consider the most complex C++ file I found, COLLADASaxFWLColladaParserAutoGen15PrivateValidation.cpp: it is 28.3 megabytes of compiler hell (and, fortunately, it seems to be generated automatically).

Note: some of the links below may not work because of some extra information added when the files were created. Most should work, but for some you might need to modify the URL slightly.

What is the most complex file relative to its number of lines?

It sounds good in theory, but in practice anything minified or without line breaks distorts the results and makes them meaningless. Therefore, I am not publishing the results of these calculations. However, I created a ticket in scc to support minification detection, so that such files can be removed from the results.

You can probably draw some conclusions from the available data, but I want all scc users to benefit from this feature.

What is the most commented file in each language?

I have no idea what valuable information you can learn from this, but it is interesting to see.

Note: some of the links below may not work because of some extra information added when the files were created. Most should work, but for some you might need to modify the URL slightly.

How many “pure” projects are there?

By “pure” I mean projects written purely in one language. Of course, this is not very interesting in itself, so let's look at it in context. As it turns out, the vast majority of projects have fewer than 25 languages, and most have fewer than ten.

The peak in the graph below is at four languages.

Of course, a pure project can contain only one programming language, but it will also contain supporting formats such as markdown, json, yml, css and .gitignore, which scc counts as well. It is probably reasonable to assume that any project with fewer than five languages is “pure” (for some level of purity), and that is just over half of the total dataset. Of course, your definition of purity may differ from mine, so you can pick any number you like.

What surprises me is a strange bump around 34-35 languages. I have no reasonable explanation for where it comes from, and it probably deserves a separate investigation.

Projects with TypeScript but not JavaScript

Ah, the modern world of TypeScript. But among TypeScript projects, how many are written purely in that language?

I must admit, I am a little surprised by this number. Although I understand that mixing JavaScript with TypeScript is quite common, I would have expected more projects to be purely in the newfangled language. It is possible, though, that a more recent set of repositories would show a dramatically higher number.

Does anyone use CoffeeScript and TypeScript?

I have a feeling that some TypeScript developers feel sick at the very thought of it. If it helps them, I can assume that most of these projects are programs like scc, with examples of all languages for testing purposes.

What is the typical path length in each language?

Given that you can either dump all the files you need into one directory or create a directory hierarchy, what is the typical path length and number of directories?

To find out, I counted the number of slashes in the path of each file and took the average. I did not know what to expect here, except that Java might be at the top of the list, since it usually has long file paths.
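
The slash-counting approach can be sketched in a few lines of Go. The function names `pathDepth` and `averageDepth` and the sample paths are assumptions for illustration, and forward slashes are assumed as the separator.

```go
package main

import (
	"fmt"
	"strings"
)

// pathDepth counts the slashes in a file location, i.e. how many
// directories deep the file sits below the repository root.
func pathDepth(location string) int {
	return strings.Count(location, "/")
}

// averageDepth returns the mean number of slashes over all locations,
// or 0 for an empty input.
func averageDepth(locations []string) float64 {
	if len(locations) == 0 {
		return 0
	}
	total := 0
	for _, loc := range locations {
		total += pathDepth(loc)
	}
	return float64(total) / float64(len(locations))
}

func main() {
	javaFiles := []string{
		"src/main/java/com/example/App.java",
		"src/test/java/com/example/AppTest.java",
	}
	goFiles := []string{"main.go", "processor/workers.go"}
	fmt.Println("Java:", averageDepth(javaFiles))
	fmt.Println("Go:  ", averageDepth(goFiles))
}
```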

YAML or YML?

There was once a “discussion” on Slack about whether to use .yaml or .yml. Many fell on both sides.

The debate may finally (?) end. Although I suspect that some will still prefer to die on this hill.

Upper, lower, or mixed case?

What case is used for file names? Since the extension is still included, we can expect mostly mixed case.

Which, of course, is not very interesting, because file extensions are usually lowercase. What if we ignore the extensions?

Not what I expected. Again mostly mixed, but I would have thought that lower case would be more popular.
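
One way such a classification could be done is sketched below in Go. The function names `classifyCase` and `stripExt`, the category labels, and the handling of names without any letters are all assumptions. The article does not describe the exact rules that were used.

```go
package main

import (
	"fmt"
	"path"
	"unicode"
)

// classifyCase returns "upper", "lower" or "mixed" depending on the letters
// in name, or "none" if the name contains no cased letters at all.
func classifyCase(name string) string {
	hasUpper, hasLower := false, false
	for _, r := range name {
		if unicode.IsUpper(r) {
			hasUpper = true
		}
		if unicode.IsLower(r) {
			hasLower = true
		}
	}
	switch {
	case hasUpper && hasLower:
		return "mixed"
	case hasUpper:
		return "upper"
	case hasLower:
		return "lower"
	default:
		return "none"
	}
}

// stripExt removes the final extension, so "README.md" becomes "README".
// Note that a dotfile such as ".gitignore" becomes an empty string.
func stripExt(name string) string {
	return name[:len(name)-len(path.Ext(name))]
}

func main() {
	for _, name := range []string{"README.md", "main.go", "MainActivity.java"} {
		fmt.Printf("%-18s with ext: %-6s without ext: %s\n",
			name, classifyCase(name), classifyCase(stripExt(name)))
	}
}
```

The README.md case shows why the extension matters: the lowercase extension alone is enough to turn an upper-case name into a mixed one.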

Factories in Java

This was another idea that colleagues came up with while looking at some old Java code. I thought, why not add a check for any Java code where Factory, FactoryFactory or FactoryFactoryFactory appears in the name? The idea is to estimate the number of such factories.

So just over 2% of the Java code turned out to be a factory or a factoryfactory. Fortunately, no factoryfactoryfactory was found. Perhaps this joke will finally die, although I am sure that at least one serious third-level recursive multifactory is still running somewhere in some Java 5 monolith, making more money every day than I have seen in my entire career.

.ignore files

The idea of .ignore files was developed by burntsushi and ggreer in a discussion on Hacker News. It is perhaps one of the best examples of “competing” open source tools working together with good results and getting it done in record time. It has become the de facto standard for marking code that tools should ignore.

scc also honors .ignore rules, and it can count the files too. Let's see how well the idea has spread.

Ideas for the future

I would like to do some more analysis in the future. It would be nice to scan for things like AWS AKIA keys and the like. I would also like to expand the coverage of Bitbucket and GitLab projects and analyze each separately, to see whether development teams from different fields tend to gather on each.

If I ever repeat the project, I would like to overcome the following shortcomings and take into account the following ideas.

Why do all this?

Well, I can take some of this information and use it in my searchcode.com search engine and in scc. At the very least, it gives me some useful data points. In many ways the project was conceived for this purpose. It is also very useful for comparing your own project with others. It was also an interesting way to spend a few days solving some interesting problems. And a good reliability check for scc.

In addition, I am currently working on a tool that helps lead developers or managers analyze code: search for specific languages, large files, flaws and so on, on the assumption that you need to analyze several repositories. You feed in some code, and the tool tells you how maintainable it is and what skills are needed to maintain it. This is useful when deciding whether to buy a code base or take over its maintenance, or to get an idea of what your own development team is producing. In theory, this should help teams scale through shared resources. Something like AWS Macie, but for code, is what I am working on. I need this myself for everyday work, and I suspect others may find a use for such a tool. At least, that is the theory.

Perhaps it would be worth putting here some form of registration for those interested ...

Unprocessed / processed files

If anyone wants to do their own analysis and make corrections, here is a link to the processed files (20 MB). If anyone wants to host the raw files publicly, let me know. It is an 83 GB tar.gz, with just over 1 TB inside. The content consists of just over 9 million JSON files of various sizes.

Update: several kind souls offered to host the file. The locations are listed below:

This tar.gz file is hosted thanks to CNCF, which provides the server to xet7 from the Wekan project.

For a larger example, see the results for the redis project: redis.json . All the results below are obtained from such a result without any additional data.

It should be borne in mind that scc usually classifies languages based on the extension (unless the extension is common, for example, Verilog and Coq). Thus, if you save an HTML file with the java extension, it will be considered a java file. This is usually not a problem, because why do this? But, of course, on a large scale, the problem becomes noticeable. I discovered this later when some files were disguised as a different extension.

Some time ago, I wrote code for generating scc-based github tags . Since the process needed to cache the results, I changed it a bit to cache them in JSON format on AWS S3.

With the code for labels in AWS on lambda, I took an exported list of projects, wrote about 15 python lines to clear the format to match my lambda, and made a request to it. Using python multiprocessing, I parallelized requests to 32 processes so that the endpoint responds quickly enough.

Everything worked brilliantly. However, the problem was, firstly, in cost, and secondly, lambda has an 30-second timeout for API Gateway / ALB, so it cannot process large repositories fast enough. I knew that this was not the most economical solution, but I thought that the price would be about $ 100, which I would put up with. After processing a million repositories, I checked - and the cost was about $ 60. Since I was not happy with the prospect of a final AWS account of $ 700, I decided to reconsider my decision. Keep in mind that this was basically the storage and CPU that were used to collect all this information. Any processing and export of data significantly increased the price.

Since I was already on AWS, the quick solution would have been to dump the URLs as messages into SQS and pull them out with EC2 or Fargate instances for processing, then scale like crazy. But despite my day-to-day experience with AWS, I have always believed in the principles of Taco Bell programming. Besides, there were only 12 million repositories, so I decided to implement a simpler (cheaper) solution.

Running the job locally was not an option because of the terrible internet in Australia. However, searchcode.com runs on dedicated servers from Hetzner, and it uses them rather sparingly. These are fairly powerful quad-core i7 machines with 32 GB of RAM, often with 2 TB of (mostly unused) storage, and they usually have plenty of spare computing power. The front-end server, for example, spends most of its time calculating the square root of zero. So why not run the processing there?

This is not quite Taco Bell programming, since I did not use bash and GNU tools. Instead, I wrote a simple Go program that starts 32 goroutines, which read data from a channel and spawn git and scc subprocesses before writing the output as JSON to S3. I actually wrote the solution in Python first, but having to install pip dependencies on my clean server seemed like a bad idea, and the system crashed in strange ways that I did not want to debug.

Running all this on the server produced the following metrics in htop, and the several running git / scc processes (scc does not appear in this screenshot) suggested that everything was working as expected, which the results in S3 confirmed.

Presentation and calculation of results

I recently read these articles, so I decided to borrow the format of those posts for presenting the information. I also wanted to add jQuery DataTables to the large tables so that the results can be sorted and searched / filtered. In the original article, you can therefore click on the headings to sort and use the search field to filter.

The size of the data to be processed raised another question. How to process 10 million JSON files, occupying a little more than 1 TB of disk space in the S3 bucket?

The first thought was AWS Athena. But since it would cost something like $ 2.50 per query for such a dataset, I quickly started looking for an alternative. However, if you save the data there and rarely process it, this may be the cheapest solution.

I posted the question in our company chat (why solve problems alone?).

One idea was to dump the data into a large SQL database. However, this means loading the data into the database and then running queries over it several times. Plus, the structure of the data means multiple tables, which means foreign keys and indexes to get a reasonable level of performance. This seems wasteful, because we could just process the data as we read it from disk, in a single pass. I was also worried about building such a large database. The data alone would be more than 1 TB before adding any indexes.

Seeing as I had produced the JSON in a simple way, I thought, why not process the results in the same way? There is one problem, of course: pulling 1 TB of data out of S3 costs a lot, and if the program crashes, that gets annoying. To reduce costs, I wanted to pull all the files down locally and keep them for further processing. A good tip: it is better not to store lots of small files in one directory. It is terrible for runtime performance, and file systems do not like it.

My answer to this was another simple Go program that pulls the files from S3 and saves them in a tar file, which I could then process again and again. The processing itself is done by a very ugly Go program that reads the tar file, so I can re-run the queries without pulling the data from S3 each time. I did not bother with goroutines here, for two reasons. Firstly, I did not want to max out the server, so I limited the heavy CPU work to a single core (a second core was mostly tied up reading the tar file). Secondly, I wanted to guarantee thread safety.

Once this was done, I needed a set of questions to answer. I again drew on the collective mind and roped in my colleagues while coming up with ideas of my own. The result of this meeting of minds is presented below.

You can find all the code that I used to process the JSON, including the code for local processing, and the ugly Python script that I used to prepare something useful for this article. Please do not comment on it: I know the code is ugly, and it was written for a one-off task, so I am unlikely to ever look at it again.

If you want to see the code that I wrote for general use, look at the scc sources .

Cost

I spent about $60 on compute while trying to work with Lambda. I have not yet looked at the S3 storage cost, but it should be close to $25, depending on the size of the data. This does not include data transfer costs, which I have not looked at either. Note that I emptied the bucket when I was finished with it, so this is not an ongoing cost.

But after a while I still abandoned AWS. So what is the real cost if I wanted to do it again?

All the software used is free, both free of charge and free as in freedom. So there is nothing to worry about there.

In my case, the cost would be zero, since I used the “free” computing power left from searchcode.com. However, not everyone has such free resources. Therefore, let's assume that the other person wants to repeat this and must raise the server.

This can be done for €73 using the cheapest new dedicated server from Hetzner, including the setup fee for a new server. If you are willing to wait and dig through the auction section, you can find much cheaper servers with no setup fee. At the time of writing, I found a machine that is perfect for this project for €25.21 a month with no setup fee.

Even better, outside the European Union VAT is removed from this price, so you can safely take off another 10%.

So if you set up such a service from scratch using my software, it will ultimately cost up to $100, or more likely up to $50 if you are a little patient or lucky. This assumes that you use the server for less than two months, which is enough for downloading and processing. It also leaves enough time to get a list of 10 million repositories.

If I had used a compressed tar file (which is actually not that difficult), I could have processed 10 times more repositories on the same machine, and the resulting file would still be small enough to fit on the same HDD. The process could take several months, though, because the download would take longer.

Going far beyond 100 million repositories, however, would require some kind of sharding. Nevertheless, it is safe to say that you could repeat the process at my scale, or a much larger one, on the same hardware without much effort or code changes.

Data sources

Here is how many projects came from each of the three sources: GitHub, Bitbucket, and GitLab. Note that this is before empty repositories were excluded, so the total exceeds the number of repositories that were actually processed and counted in the following tables.

| Source | Number of projects |
|---|---|
| github | 9,680,111 |
| bitbucket | 248,217 |
| gitlab | 56,722 |

My apologies to the GitHub / Bitbucket / GitLab staff if you are reading this. If my script caused any problems (although I doubt it), I owe you a drink of your choice when we meet.

How many files are in the repository?

Let's move on to the real questions, starting with a simple one. How many files are in the average repository? Do most projects have only a couple of files, or more? After looping through the repositories, we get the following chart:

Here, the X axis shows buckets by number of files, and the Y axis shows the number of projects with that many files. The horizontal axis is limited to a thousand files, because beyond that the line sits too close to the axis.

It seems that most repositories have fewer than 200 files.

But what about plotting up to the 95th percentile, which shows the real picture? It turns out that the vast majority (95%) of projects have fewer than 1,000 files, while 90% of projects have fewer than 300 files and 85% have fewer than 200.
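For readers who want to reproduce this kind of cut-off, the sketch below computes percentiles of per-project file counts with the nearest-rank method. The function name `percentile` and the sample counts are made up for illustration, and the source does not say which percentile definition was actually used.

```go
package main

import (
	"fmt"
	"sort"
)

// percentile returns the nearest-rank p-th percentile (p from 1 to 100) of
// counts: the smallest value such that at least p% of the values are less
// than or equal to it. Integer arithmetic avoids rounding surprises.
func percentile(counts []int, p int) int {
	if len(counts) == 0 {
		return 0
	}
	sorted := append([]int(nil), counts...) // do not reorder the caller's slice
	sort.Ints(sorted)
	rank := (p*len(sorted) + 99) / 100 // ceil(p/100 * n)
	if rank < 1 {
		rank = 1
	}
	return sorted[rank-1]
}

func main() {
	// Hypothetical file counts for ten projects.
	counts := []int{3, 12, 1, 250, 40, 7, 980, 18, 5, 60}
	for _, p := range []int{50, 85, 90, 95} {
		fmt.Printf("p%d = %d files\n", p, percentile(counts, p))
	}
}
```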

If you want to build a chart yourself and do it better than me, here is a link to the raw data in JSON .

What is the language breakdown?

Each language is counted at most once per project. For example, when the first Java file in a project is identified, the number of projects containing Java goes up by one, and a second Java file in the same project changes nothing. This gives a quick idea of which languages are most commonly used. Unsurprisingly, the most common languages include Markdown, .gitignore, and plain text.
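The once-per-project counting rule can be sketched as follows. The function name `countProjectsPerLanguage` and the input shape (one slice of per-file language names per project) are assumptions made for this example.

```go
package main

import "fmt"

// countProjectsPerLanguage takes, for every project, the language of each of
// its files, and returns how many projects contain each language. A language
// is counted once per project regardless of how many files use it.
func countProjectsPerLanguage(projects [][]string) map[string]int {
	totals := make(map[string]int)
	for _, fileLanguages := range projects {
		seen := make(map[string]bool)
		for _, lang := range fileLanguages {
			if seen[lang] {
				continue // a second Java file in the same project adds nothing
			}
			seen[lang] = true
			totals[lang]++
		}
	}
	return totals
}

func main() {
	projects := [][]string{
		{"Java", "Java", "Markdown"},
		{"Markdown", "gitignore"},
	}
	totals := countProjectsPerLanguage(projects)
	fmt.Println("Java:", totals["Java"], "Markdown:", totals["Markdown"])
}
```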

Markdown is the most frequently used language; it appears in more than 6 million projects, which is about two thirds of the total. This makes sense, since almost all projects include a README.md, which is rendered as HTML on repository pages.

Full list

| Language | Number of projects |
|---|---|
| Markdown | 6,041,849 |
| gitignore | 5,471,254 |
| Plain text | 3,553,325 |
| Javascript | 3,408,921 |
| HTML | 3,397,596 |
| CSS | 3,037,754 |
| License | 2,597,330 |
| XML | 2,218,846 |
| JSON | 1,903,569 |
| YAML | 1,860,523 |
| Python | 1,424,505 |
| Shell | 1,395,199 |
| Ruby | 1,386,599 |
| Java | 1,319,091 |
| C header | 1,259,519 |
| Makefile | 1,215,586 |
| Rakefile | 1,006,022 |
| Php | 992,617 |
| Properties file | 909,631 |
| Svg | 804,946 |
| C | 791,773 |
| C++ | 715,269 |
| Batch | 645,442 |
| Sass | 535,341 |
| Autoconf | 505,347 |
| Objective C | 503,932 |
| CoffeeScript | 435,133 |
| SQL | 413,739 |
| Perl | 390,775 |
| C# | 380,841 |
| ReStructuredText | 356,922 |
| MSBuild | 354,212 |
| LESS | 281,286 |
| Csv | 275,143 |
| C++ Header | 199,245 |
| CMake | 173,482 |
| Patch | 169,078 |
| Assembly | 165,587 |
| XML Schema | 148,511 |
| m4 | 147,204 |
| JavaServer Pages | 142,605 |
| Vim script | 134,156 |
| Scala | 132,454 |
| Objective C++ | 127,797 |
| Gradle | 126,899 |
| Module definition | 120,181 |
| Bazel | 114,842 |
| R | 113,770 |
| ASP.NET | 111,431 |
| Go template | 111,263 |
| Document Type Definition | 109,710 |
| Gherkin specification | 107,187 |
| Smarty template | 106,668 |
| Jade | 105,903 |
| Happy | 105,631 |
| Emacs lisp | 105,620 |
| Prolog | 102,792 |
| Go | 99,093 |
| Lua | 98,232 |
| Bash | 95,931 |
| D | 94,400 |
| ActionScript | 93,066 |
| TeX | 84,841 |
| Powershell | 80,347 |
| Awk | 79,870 |
| Groovy | 75,796 |
| Lex | 75,335 |
| nuspec | 72,478 |
| sed | 70,454 |
| Puppet | 67,732 |
| Org | 67,703 |
| Clojure | 67,145 |
| XAML | 65,135 |
| TypeScript | 62,556 |
| Systemd | 58,197 |
| Haskell | 58,162 |
| Xcode Config | 57,173 |
| Boo | 55,318 |
| LaTeX | 55,093 |
| Zsh | 55,044 |
| Stylus | 54,412 |
| Razor | 54,102 |
| Handlebars | 51,893 |
| Erlang | 49,475 |
| HEX | 46,442 |
| Protocol buffers | 45,254 |
| Mustache | 44,633 |
| ASP | 43,114 |
| Extensible Stylesheet Language Transformations | 42,664 |
| Twig template | 42,273 |
| Processing | 41,277 |
| Dockerfile | 39,664 |
| Swig | 37,539 |
| LD Script | 36,307 |
| FORTRAN Legacy | 35,889 |
| Scons | 35,373 |
| Scheme | 34,982 |
| Alex | 34,221 |
| TCL | 33,766 |
| Android Interface Definition Language | 33,000 |
| Ruby HTML | 32,645 |
| Device tree | 31,918 |
| Expect | 30,249 |
| Cabal | 30,109 |
| Unreal script | 29,113 |
| Pascal | 28,439 |
| GLSL | 28,417 |
| Intel hex | 27,504 |
| Alloy | 27,142 |
| Freemarker template | 26,456 |
| IDL | 26,079 |
| Visual basic for applications | 26,061 |
| Macromedia eXtensible Markup Language | 24,949 |
| F# | 24,373 |
| Cython | 23,858 |
| Jupyter | 23,577 |
| Forth | 22,108 |
| Visual basic | 21,909 |
| Lisp | 21,242 |
| OCaml | 20,216 |
| Rust | 19,286 |
| Fish | 18,079 |
| Monkey C | 17,753 |
| Ada | 17,253 |
| SAS | 17,031 |
| Dart | 16,447 |
| TypeScript Typings | 16,263 |
| SystemVerilog | 15,541 |
| Thrift | 15,390 |
| C shell | 14,904 |
| Fragment shader file | 14,572 |
| Vertex Shader File | 14,312 |
| QML | 13,709 |
| Coldfusion | 13,441 |
| Elixir | 12,716 |
| Haxe | 12,404 |
| Jinja | 12,274 |
| JSX | 12,194 |
| Specman e | 12,071 |
| FORTRAN Modern | 11,460 |
| PKGBUILD | 11,398 |
| ignore | 11,287 |
| Mako | 10,846 |
| TOML | 10,444 |
| SKILL | 10,048 |
| Asciidoc | 9,868 |
| Swift | 9,679 |
| Buildstream | 9,198 |
| ColdFusion CFScript | 8,614 |
| Stata | 8,296 |
| Creole | 8,030 |
| Basic | 7,751 |
| V | 7,560 |
| VHDL | 7,368 |
| Julia | 7,070 |
| ClojureScript | 7,018 |
| Closure template | 6,269 |
| AutoHotKey | 5,938 |
| Wolfram | 5,764 |
| Docker ignore | 5,555 |
| Korn shell | 5,541 |
| Arvo | 5,364 |
| Coq | 5,068 |
| SRecode Template | 5,019 |
| Game maker language | 4,557 |
| Nix | 4,216 |
| Vala | 4,110 |
| COBOL | 3,946 |
| Varnish configuration | 3,882 |
| Kotlin | 3,683 |
| Bitbake | 3,645 |
| GDScript | 3,189 |
| Standard ML (SML) | 3,143 |
| Jenkins buildfile | 2,822 |
| Xtend | 2,791 |
| Abap | 2,381 |
| Modula3 | 2,376 |
| Nim | 2,273 |
| Verilog | 2,013 |
| Elm | 1,849 |
| Brainfuck | 1,794 |
| Ur/Web | 1,741 |
| Opalang | 1,367 |
| GN | 1,342 |
| Taskpaper | 1,330 |
| Ceylon | 1,265 |
| Crystal | 1,259 |
| Agda | 1,182 |
| Vue | 1,139 |
| LOLCODE | 1,101 |
| Hamlet | 1,071 |
| Robot framework | 1,062 |
| MUMPS | 940 |
| Emacs dev env | 937 |
| Cargo lock | 905 |
| Flow9 | 839 |
| Idris | 804 |
| Julius | 765 |
| Oz | 764 |
| Q# | 695 |
| Lucius | 627 |
| Meson | 617 |
| F* | 614 |
| ATS | 492 |
| PSL Assertion | 483 |
| Bitbucket pipeline | 418 |
| PureScript | 370 |
| Report Definition Language | 313 |
| Isabelle | 296 |
| Jai | 286 |
| MQL4 | 271 |
| Ur/Web Project | 261 |
| Alchemist | 250 |
| Cassius | 213 |
| Softbridge basic | 207 |
| MQL Header | 167 |
| JSONL | 146 |
| Lean | 104 |
| Spice netlist | 100 |
| Madlang | 97 |
| Luna | 91 |
| Pony | 86 |
| MQL5 | 46 |
| Wren | 33 |
| Just | 30 |
| QCL | 27 |
| Zig | 21 |
| SPDX | 20 |
| Futhark | 16 |
| Dhall | 15 |
| FIDL | 14 |
| Bosque | 14 |
| Janet | 13 |
| Game maker project | 6 |
| Polly | 6 |
| Verilog Args File | 2 |

How many files are in the repository by language?

This complements the previous table, but shows the average number of files per language in a repository. That is, how many Java files are there on average across all projects that contain Java?
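One way to read this definition is: for each language, sum its file counts over all projects and divide by the number of projects that contain it at all. The sketch below does that; the function name `averageFilesPerLanguage` and the input shape (a map from language to file count per project) are assumptions for this example.

```go
package main

import "fmt"

// averageFilesPerLanguage takes one map per project (language -> number of
// files in that project) and returns, for every language, the mean number of
// files among the projects that contain that language.
func averageFilesPerLanguage(projects []map[string]int) map[string]float64 {
	fileTotals := make(map[string]int)
	projectTotals := make(map[string]int)
	for _, project := range projects {
		for lang, files := range project {
			fileTotals[lang] += files
			projectTotals[lang]++ // projects without the language are not counted
		}
	}
	averages := make(map[string]float64, len(fileTotals))
	for lang, files := range fileTotals {
		averages[lang] = float64(files) / float64(projectTotals[lang])
	}
	return averages
}

func main() {
	projects := []map[string]int{
		{"Java": 10, "Markdown": 1},
		{"Java": 20},
		{"Markdown": 1},
	}
	averages := averageFilesPerLanguage(projects)
	fmt.Printf("Java: %.1f, Markdown: %.1f\n", averages["Java"], averages["Markdown"])
}
```

A plain mean like this is pulled upwards by a few very large repositories, which may explain outliers such as the five-digit averages for C and C header in the table.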

Full list

| Language | Average number of files |
|---|---|
| Abap | 1.0008927583699165 |
| ASP | 1.6565139917314107 |
| ASP.NET | 346.88867258489296 |
| ATS | 7.888545610390882 |
| Awk | 5.098807478952136 |
| ActionScript | 15.682562363539644 |
| Ada | 7.265376817272021 |
| Agda | 1.2669381110755398 |
| Alchemist | 7.437307493090622 |
| Alex | 20.152479318023637 |
| Alloy | 1.0000000894069672 |
| Android Interface Definition Language | 3.1133707938643074 |
| Arvo | 9.872687772928423 |
| Asciidoc | 14.645389421879814 |
| Assembly | 1049.6270518312476 |
| AutoHotKey | 1.5361384288472488 |
| Autoconf | 33.99728695464163 |
| Bash | 3.7384110335355545 |
| Basic | 5.103623499110781 |
| Batch | 3.943513588378872 |
| Bazel | 1.0013122734382187 |
| Bitbake | 1.0878349272366024 |
| Bitbucket pipeline | 1 |
| Boo | 5.321822367969364 |
| Bosque | 1.28173828125 |
| Brainfuck | 1.3141119785974242 |
| Buildstream | 1.4704635441667189 |
| C | 15610.17972307699 |
| C header | 14103.33936083782 |
| C shell | 3.1231084093649315 |
| C# | 45.804460355773394 |
| C++ | 30.416980313492328 |
| C++ Header | 8.313450764990089 |
| CMake | 37.2566873554469 |
| COBOL | 3.129408853490878 |
| CSS | 5.332398714337156 |
| Csv | 8.370432089241898 |
| Cabal | 1.0078125149013983 |
| Cargo lock | 1.0026407549221519 |
| Cassius | 4.657169356495984 |
| Ceylon | 7.397692655679642 |
| Clojure | 8.702303821528872 |
| ClojureScript | 5.384518778099244 |
| Closure template | 1.0210028022356945 |
| CoffeeScript | 45.40906609668401 |
| Coldfusion | 13.611857060674573 |
| ColdFusion CFScript | 40.42554202020521 |
| Coq | 10.903652047164622 |
| Creole | 1.000122070313864 |
| Crystal | 3.8729367926098117 |
| Cython | 1.9811811237515262 |
| D | 529.2562750397005 |
| Dart | 1.5259554297822313 |
| Device tree | 586.4119588123021 |
| Dhall | 5.072265625 |
| Docker ignore | 1.0058596283197403 |
| Dockerfile | 1.7570825852789156 |
| Document Type Definition | 2.2977520758534693 |
| Elixir | 8.916658446524252 |
| Elm | 1.6702759813968946 |
| Emacs dev env | 15.720268315288969 |
| Emacs lisp | 11.378847912292201 |
| Erlang | 3.4764894379621607 |
| Expect | 2.8863991651091614 |
| Extensible Stylesheet Language Transformations | 1.2042068607534995 |
| F# | 1.2856606249320954 |
| F* | 32.784058919015 |
| FIDL | 1.8441162109375 |
| FORTRAN Legacy | 11.37801716560221 |
| FORTRAN Modern | 27.408192558594685 |
| Fish | 1.1282354207617833 |
| Flow9 | 5.218046229973186 |
| Forth | 10.64736177807574 |
| Fragment Shader File | 3.648087980622546 |
| Freemarker Template | 8.397226930409037 |
| Futhark | 4.671875 |
| GDScript | 3.6984173692608313 |
| GLSL | 1.6749061330076334 |
| GN | 1.0193083210608163 |
| Game Maker Language | 3.6370866431049604 |
| Game Maker Project | 1.625 |
| Gherkin Specification | 60.430588516231666 |
| Go | 115.23482489228113 |
| Go Template | 28.011342078505013 |
| Gradle | 5.628880473160033 |
| Groovy | 6.697367294187844 |
| HEX | 22.477003537989486 |
| HTML | 4.822243456786672 |
| Hamlet | 50.297887645777536 |
| Handlebars | 36.60120978679127 |
| Happy | 5.820573911044464 |
| Haskell | 8.730027121836951 |
| Haxe | 20.00590981880653 |
| IDL | 79.38510300176867 |
| Idris | 1.524684997890027 |
| Intel HEX | 113.25178379632708 |
| Isabelle | 1.8903018088753136 |
| JAI | 1.4865150753259275 |
| JSON | 6.507823973898348 |
| JSONL | 1.003931049286678 |
| JSX | 4.6359645801363465 |
| Jade | 5.353279289700571 |
| Janet | 1.0390625 |
| Java | 118.86142228014006 |
| Javascript | 140.56079100796154 |
| JavaServer Pages | 2.390251418283771 |
| Jenkins Buildfile | 1.0000000000582077 |
| Jinja | 4.574843152310338 |
| Julia | 6.672268339671913 |
| Julius | 2.2510109380818903 |
| Jupyter | 13.480476117239338 |
| Just | 1.736882857978344 |
| Korn Shell | 1.5100887455636172 |
| Kotlin | 3.9004723322169363 |
| LD Script | 16.59996086864524 |
| LESS | 39.6484785300563 |
| Lex | 5.892075421476933 |
| LOLCODE | 1.0381496530137617 |
| LaTeX | 5.336103768010524 |
| Lean | 1.6653789470747329 |
| License | 5.593879701111845 |
| Lisp | 33.15947937896521 |
| Lua | 24.796117625764612 |
| Lucius | 6.5742989471450155 |
| Luna | 4.437807061133055 |
| MQL Header | 13.515527575704464 |
| MQL4 | 6.400151428436254 |
| MQL5 | 46.489316522221515 |
| MSBuild | 4.8321384193507875 |
| MUMPS | 8.187699062741014 |
| Macromedia eXtensible Markup Language | 2.1945287114300807 |
| Madlang | 3.7857666909751373 |
| Makefile | 1518.1769808494607 |
| Mako | 3.410234685769436 |
| Markdown | 45.687500000234245 |
| Meson | 32.45071679724949 |
| Modula3 | 1.1610784588847318 |
| Module-Definition | 4.9327688042002595 |
| Monkey C | 3.035163164383345 |
| Mustache | 19.052714578803542 |
| Nim | 1.202213335585401 |
| Nix | 2.7291879559930488 |
| OCaml | 3.7135029841909697 |
| Objective C | 4.9795510788040005 |
| Objective C++ | 2.2285232767506264 |
| Opalang | 1.9975597794742732 |
| Org | 5.258117805392903 |
| Oz | 22.250069644336204 |
| Php | 199.17870638869982 |
| PKGBUILD | 7.50632295051949 |
| PSL Assertion | 3.0736406530442473 |
| Pascal | 90.55238627885495 |
| Patch | 25.331829692384225 |
| Perl | 27.46770444081142 |
| Plain text | 1119.2375825397799 |
| Polly | 1 |
| Pony | 3.173291031071342 |
| Powershell | 6.629884642978543 |
| Processing | 9.139907354078636 |
| Prolog | 1.816763080890156 |
| Properties File | 2.1801967863634255 |
| Protocol Buffers | 2.0456253005879304 |
| Puppet | 43.424491631161054 |
| PureScript | 4.063801504037935 |
| Python | 22.473917606983292 |
| Q# | 5.712939431518483 |
| QCL | 7.590678825974464 |
| QML | 1.255201818986247 |
| R | 2.3781868952970115 |
| Rakefile | 14.856192677576413 |
| Razor | 62.79058974450959 |
| ReStructuredText | 11.63852408056825 |
| Report Definition Language | 23.065085061465403 |
| Robot Framework | 2.6260137148703535 |
| Ruby | 554.0134362337432 |
| Ruby HTML | 24.091116656979562 |
| Rust | 2.3002003813895207 |
| SAS | 1.0032075758254648 |
| SKILL | 1.9229039972029645 |
| SPDX | 2.457843780517578 |
| SQL | 2.293643752864969 |
| SRecode Template | 20.688193360975845 |
| Svg | 4.616972531365432 |
| Sass | 42.92418345584642 |
| Scala | 1.5957851387966393 |
| Scheme | 10.480490204644848 |
| Scons | 2.1977062552968114 |
| Shell | 41.88552208947577 |
| Smarty Template | 6.90615223295527 |
| Softbridge Basic | 22.218602385698304 |
| Specman e | 2.719783829645327 |
| Spice Netlist | 2.454830619852739 |
| Standard ML (SML) | 3.7598713626650295 |
| Stata | 2.832579915520368 |
| Stylus | 7.903926412469745 |
| Swift | 54.175594149331914 |
| Swig | 2.3953681161240747 |
| SystemVerilog | 7.120705494624247 |
| Systemd | 80.83254275520476 |
| TCL | 46.9378307136513 |
| TOML | 1.0316491217260413 |
| TaskPaper | 1.0005036006351133 |
| TeX | 8.690789447558961 |
| Thrift | 1.620168483240211 |
| Twig Template | 18.33051814392764 |
| TypeScript | 1.2610517452930048 |
| TypeScript Typings | 2.3638072576034137 |
| Unreal Script | 2.9971615019965148 |
| Ur/Web | 3.420488425604595 |
| Ur/Web Project | 1.8752869585517504 |
| V | 1.8780624768245784 |
| VHDL | 5.764059992075602 |
| Vala | 42.22072166146626 |
| Varnish Configuration | 1.9899734258599446 |
| Verilog | 1.443359777332832 |
| Verilog Args File | 25.5 |
| Vertex Shader File | 2.4700152399875077 |
| Vim Script | 3.2196359799822662 |
| Visual basic | 119.8397831247842 |
| Visual Basic for Applications | 2.5806381264096503 |
| Vue | 249.0557418123258 |
| Wolfram | 1.462178856761796 |
| Wren | 227.4526259500999 |
| XAML | 2.6149608174399264 |
| XCode Config | 6.979387911493798 |
| XML | 146.10128153519918 |
| XML Schema | 6.832042266604565 |
| Xtend | 2.87054940757827 |
| YAML | 6.170148717655746 |
| Zig | 1.071681022644043 |
| Zsh | 2.6295064863912088 |
| gitignore | 6.878908416722053 |
| ignore | 1.0210649380633772 |
| m4 | 57.5969985568356 |
| nuspec | 3.245791111381787 |
| sed | 1.3985770380241234 |

How many lines of code in a typical language file?

I think it is also interesting to see which languages have the largest files on average. Using the arithmetic mean produces abnormally high numbers because of files like sqlite.c, which is included in many repositories and combines many files into one, but which nobody ever works on as a single large file (I hope!).

Therefore, I calculated the average of the median. However, languages with absurdly high values, such as Bosque and JavaScript, still remained.

So I thought, why not try a different approach? At the suggestion of Darrell (Kablamo's resident, and excellent, data scientist), I made one small change to the arithmetic mean: files over 5,000 lines are dropped to remove the anomalies.
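To see why the cut-off matters, here is a small sketch that compares a plain mean, a mean that ignores files over a limit, and a median. The function names and the sample line counts are invented for illustration; only the 5,000-line limit comes from the text.

```go
package main

import (
	"fmt"
	"sort"
)

// meanBelow returns the arithmetic mean of the values that do not exceed
// limit. Files longer than the limit are dropped as anomalies.
func meanBelow(lines []int, limit int) float64 {
	sum, n := 0, 0
	for _, l := range lines {
		if l > limit {
			continue
		}
		sum += l
		n++
	}
	if n == 0 {
		return 0
	}
	return float64(sum) / float64(n)
}

// median returns the middle value, or the mean of the two middle values.
func median(lines []int) float64 {
	if len(lines) == 0 {
		return 0
	}
	sorted := append([]int(nil), lines...)
	sort.Ints(sorted)
	mid := len(sorted) / 2
	if len(sorted)%2 == 1 {
		return float64(sorted[mid])
	}
	return float64(sorted[mid-1]+sorted[mid]) / 2
}

func main() {
	// Three ordinary files and one huge amalgamated file.
	lines := []int{100, 200, 300, 250000}
	fmt.Println("plain mean:  ", meanBelow(lines, int(^uint(0)>>1)))
	fmt.Println("mean < 5000: ", meanBelow(lines, 5000))
	fmt.Println("median:      ", median(lines))
}
```

With these made-up numbers the plain mean is dominated by the single huge file, while the trimmed mean stays close to what a typical file looks like.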

Full list

| Language | Mean (files < 5,000 lines) | Median |
|---|---|---|
| ABAP | 139 | 36 |
| ASP | 513 | 170 |
| ASP.NET | 315 | 148 |
| ATS | 945 | 1,411 |
| AWK | 431 | 774 |
| ActionScript | 950 | 2,676 |
| Ada | 1,179 | 13 |
| Agda | 466 | 89 |
| Alchemist | 1,040 | 1,463 |
| Alex | 479 | 204 |
| Alloy | 72 | 66 |
| Android Interface Definition Language | 119 | 190 |
| Arvo | 257 | 1,508 |
| AsciiDoc | 519 | 1,724 |
| Assembly | 993 | 225 |
| AutoHotKey | 360 | 23 |
| Autoconf | 495 | 144 |
| BASH | 425 | 26 |
| Basic | 476 | 847 |
| Batch | 178 | 208 |
| Bazel | 226 | 20 |
| Bitbake | 436 | 10 |
| Bitbucket Pipeline | 19 | 13 |
| Boo | 898 | 924 |
| Bosque | 58 | 199,238 |
| Brainfuck | 141 | 177 |
| BuildStream | 1,955 | 2,384 |
| C | 1,052 | 5,774 |
| C Header | 869 | 126,460 |
| C Shell | 128 | 77 |
| C# | 1,215 | 1,138 |
| C++ | 1,166 | 232 |
| C++ Header | 838 | 125 |
| CMake | 750 | 15 |
| COBOL | 422 | 24 |
| CSS | 729 | 103 |
| Csv | 411 | 12 |
| Cabal | 116 | 13 |
| Cargo Lock | 814 | 686 |
| Cassius | 124 | 634 |
| Ceylon | 207 | 15 |
| Clojure | 521 | 19 |
| ClojureScript | 504 | 195 |
| Closure Template | 343 | 75 |
| CoffeeScript | 342 | 168 |
| ColdFusion | 686 | 5 |
| ColdFusion CFScript | 1,231 | 1,829 |
| Coq | 560 | 29,250 |
| Creole | 85 | 20 |
| Crystal | 973 | 119 |
| Cython | 853 | 1,738 |
| D | 397 | 10 |
| Dart | 583 | 500 |
| Device Tree | 739 | 44,002 |
| Dhall | 124 | 99 |
| Docker ignore | 10 | 2 |
| Dockerfile | 76 | 17 |
| Document Type Definition | 522 | 1,202 |
| Elixir | 402 | 192 |
| Elm | 438 | 121 |
| Emacs Dev Env | 646 | 755 |
| Emacs Lisp | 653 | 15 |
| Erlang | 930 | 203 |
| Expect | 419 | 195 |
| Extensible Stylesheet Language Transformations | 442 | 600 |
| F# | 384 | 64 |
| F* | 335 | 65 |
| FIDL | 655 | 1,502 |
| FORTRAN Legacy | 277 | 1,925 |
| FORTRAN Modern | 636 | 244 |
| Fish | 168 | 74 |
| Flow9 | 368 | 32 |
| Forth | 256 | 62 |
| Fragment Shader File | 309 | 11 |
| Freemarker Template | 522 | 20 |
| Futhark | 175 | 257 |
| GDScript | 401 | 1 |
| GLSL | 380 | 29 |
| GN | 950 | 8,866 |
| Game Maker Language | 710 | 516 |
| Game Maker Project | 1,290 | 374 |
| Gherkin Specification | 516 | 2,386 |
| Go | 780 | 558 |
| Go Template | 411 | 25,342 |
| Gradle | 228 | 22 |
| Groovy | 734 | 13 |
| HEX | 1,002 | 17,208 |
| HTML | 556 | 1,814 |
| Hamlet | 220 | 70 |
| Handlebars | 506 | 3,162 |
| Happy | 1,617 | 0 |
| Haskell | 656 | 17 |
| Haxe | 865 | 9,607 |
| IDL | 386 | 210 |
| Idris | 285 | 42 |
| Intel HEX | 1,256 | 106,650 |
| Isabelle | 792 | 1,736 |
| JAI | 268 | 41 |
| JSON | 289 | 39 |
| JSONL | 43 | 2 |
| JSX | 393 | 24 |
| Jade | 299 | 192 |
| Janet | 508 | 32 |
| Java | 1,165 | 697 |
| Javascript | 894 | 73,979 |
| JavaServer Pages | 644 | 924 |
| Jenkins Buildfile | 79 | 6 |
| Jinja | 465 | 3,914 |
| Julia | 539 | 1,031 |
| Julius | 113 | 12 |
| Jupyter | 1,361 | 688 |
| Just | 62 | 72 |
| Korn Shell | 427 | 776 |
| Kotlin | 554 | 169 |
| LD Script | 521 | 439 |
| LESS | 1,086 | 17 |
| Lex | 1,014 | 214 |
| LOLCODE | 129 | 4 |
| LaTeX | 895 | 7,482 |
| Lean | 181 | 9 |
| License | 266 | 20 |
| Lisp | 746 | 1,201 |
| Lua | 820 | 559 |
| Lucius | 284 | 445 |
| Luna | 85 | 48 |
| MQL Header | 793 | 10,337 |
| MQL4 | 799 | 3,168 |
| MQL5 | 384 | 631 |
| MSBuild | 558 | 160 |
| MUMPS | 924 | 98,191 |
| Macromedia eXtensible Markup Language | 500 | 20 |
| Madlang | 368 | 340 |
| Makefile | 309 | 20 |
| Mako | 269 | 243 |
| Markdown | 206 | 10 |
| Meson | 546 | 205 |
| Modula3 | 162 | 17 |
| Module-Definition | 489 | 7 |
| Monkey C | 140 | 28 |
| Mustache | 298 | 8,083 |
| Nim | 352 | 3 |
| Nix | 240 | 78 |
| OCaml | 718 | 68 |
| Objective C | 1,111 | 17,103 |
| Objective C++ | 903 | 244 |
| Opalang | 151 | 29 |
| Org | 523 | 24 |
| Oz | 360 | 7,132 |
| Php | 964 | 14,660 |
| PKGBUILD | 131 | 19 |
| PSL Assertion | 149 | 108 |
| Pascal | 1,044 | 497 |
| Patch | 676 | 12 |
| Perl | 762 | 11 |
| Plain text | 352 | 841 |
| Polly | 12 | 26 |
| Pony | 338 | 42,488 |
| Powershell | 652 | 199 |
| Processing | 800 | 903 |
| Prolog | 282 | 6 |
| Properties File | 184 | 18 |
| Protocol Buffers | 576 | 8,080 |
| Puppet | 499 | 660 |
| PureScript | 598 | 363 |
| Python | 879 | 258 |
| Q# | 475 | 5,417 |
| QCL | 548 | 3 |
| QML | 815 | 6,067 |
| R | 566 | 20 |
| Rakefile | 122 | 7 |
| Razor | 713 | 1,842 |
| ReStructuredText | 735 | 5,049 |
| Report Definition Language | 1,389 | 34,337 |
| Robot Framework | 292 | 115 |
| Ruby | 739 | 4,942 |
| Ruby HTML | 326 | 192 |
| Rust | 1,007 | 4 |
| SAS | 233 | 65 |
| SKILL | 526 | 123 |
| SPDX | 1,242 | 379 |
| SQL | 466 | 143 |
| SRecode Template | 796 | 534 |
| Svg | 796 | 1,538 |
| Sass | 682 | 14,653 |
| Scala | 612 | 661 |
| Scheme | 566 | 6 |
| Scons | 545 | 6,042 |
| Shell | 304 | 4 |
| Smarty Template | 392 | 15 |
| Softbridge Basic | 2,067 | 3 |
| Specman e | 127 | 0 |
| Spice Netlist | 906 | 1,465 |
| Standard ML (SML) | 478 | 75 |
| Stata | 200 | 12 |
| Stylus | 505 | 214 |
| Swift | 683 | 663 |
| Swig | 1,031 | 4,540 |
| SystemVerilog | 563 | 830 |
| Systemd | 127 | 26 |
| TCL | 774 | 42,396 |
| TOML | 100 | 17 |
| TaskPaper | 37 | 7 |
| TeX | 804 | 905 |
| Thrift | 545 | 329 |
| Twig Template | 713 | 9,907 |
| TypeScript | 461 | 10 |
| TypeScript Typings | 1,465 | 236,866 |
| Unreal Script | 795 | 927 |
| Ur/Web | 429 | 848 |
| Ur/Web Project | 33 | 26 |
| V | 704 | 5,711 |
| VHDL | 952 | 1,452 |
| Vala | 603 | 2 |
| Varnish Configuration | 203 | 77 |
| Verilog | 198 | 2 |
| Verilog Args File | 456 | 481 |
| Vertex Shader File | 168 | 74 |
| Vim Script | 555 | 25 |
| Visual basic | 738 | 1,050 |
| Visual Basic for Applications | 979 | 936 |
| Vue | 732 | 242 |
| Wolfram | 940 | 973 |
| Wren | 358 | 279,258 |
| XAML | 703 | 24 |
| XCode Config | 200 | 11 |
| XML | 605 | 1,033 |
| XML Schema | 1,008 | 248 |
| Xtend | 710 | 120 |
| YAML | 165 | 47,327 |
| Zig | 188 | 724 |
| Zsh | 300 | 9 |
| gitignore | 33 | 3 |
| ignore | 6 | 2 |
| m4 | 959 | 807 |
| nuspec | 187 | 193 |
| sed | 82 | 33 |

Average file complexity in each language?

What is the average file complexity for each language?

In fact, complexity ratings cannot be directly compared between languages. An excerpt from the scc README itself:

The complexity score is just a number that can only be matched between files in the same language. It should not be used to compare languages directly. The reason is that it is calculated by looking for branch and loop operators for each file.

Thus, languages cannot be compared with each other here, although this can be done between similar languages such as Java and C, for example.

This is a more valuable metric for individual files in the same language. Thus, you can answer the question “Is this file I'm working with easier or more complicated than the average?”

I should mention that I am happy to receive suggestions on how to improve this metric in scc. A contribution usually only requires adding a few keywords to the languages.json file, so any programmer can help.
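As a very rough illustration of the idea of counting branch and loop operators, here is a naive sketch. It is not how scc is implemented: the token list is a made-up example for a Go-like language, and plain substring counting also matches tokens inside comments and strings, which a real counter would need to skip.

```go
package main

import (
	"fmt"
	"strings"
)

// complexity counts how often any of the given branch or loop tokens occurs
// in source. This is a deliberately naive approximation.
func complexity(source string, tokens []string) int {
	total := 0
	for _, token := range tokens {
		total += strings.Count(source, token)
	}
	return total
}

func main() {
	// Hypothetical token list; a real per-language keyword list would differ.
	tokens := []string{"if ", "for ", "switch ", "case ", "&&", "||"}
	source := "if a && b {\n\tfor i := 0; i < n; i++ {\n\t}\n}\n"
	fmt.Println("complexity:", complexity(source, tokens))
}
```

Since each language has its own set of keywords, a score like this only makes sense when comparing files written in the same language, which matches the caveat in the README excerpt.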

Full list

| Language | |

|---|---|

| ABAP | 11,180740488380376 |

| ASP | 11,536947250366211 |

| ASP.NET | 2,149275320643484 |

| ATS | 0,7621728432717677 |

| AWK | 0 |

| ActionScript | 22,088579905848178 |

| Ada | 13,69141626294931 |

| Agda | 0,19536590785719454 |

| Alchemist | 0,3423442907696928 |

| Alex | 0 |

| Alloy | 6,9999997997656465 |

| Android Interface Definition Language | 0 |

| Arvo | 0 |

| AsciiDoc | 0 |

| Assembly | 1,5605608227976997 |

| AutoHotKey | 423,87785756399626 |

| Autoconf | 1,5524294972419739 |

| BASH | 7,500000094871363 |

| Basic | 1,0001350622574257 |

| Batch | 1,4136352496767306 |

| Bazel | 6,523681727119303 |

| Bitbake | 0,00391388021490391 |

| Bitbucket Pipeline | 0 |

| Boo | 65,67764583729533 |

| Bosque | 236,79837036132812 |

| Brainfuck | 27,5516445041791 |

| BuildStream | 0 |

| C | 220,17236548200242 |

| C Header | 0,027589923237434522 |

| C Shell | 1,4911166269191476 |

| C # | 1,0994400597744005 |

| C ++ | 215,23628287682845 |

| C++ Header | 2,2893104921677154 |

| CMake | 0,887660006199008 |

| COBOL | 0,018726348891789816 |

| CSS | 6,317460331175305E-176 |

| Csv | 0 |

| Cabal | 3,6547924155738194 |

| Cargo Lock | 0 |

| Cassius | 0 |

| Ceylon | 21,664400369259404 |

| Clojure | 0,00009155273437716484 |

| ClojureScript | 0,5347588658332859 |

| Closure Template | 0,503426091716392 |

| CoffeeScript | 0,02021490140137264 |

| ColdFusion | 6,851776515250336 |

| ColdFusion CFScript | 22,287403080299764 |

| Coq | 3,3282556015266307 |

| Creole | 0 |

| Crystal | 1,6065794006138856 |

| Cython | 42,87412906489837 |

| D | 0 |

| Dart | 2,1264450684815657 |

| Device Tree | 0 |

| Dhall | 0 |

| Docker ignore | 0 |

| Dockerfile | 6,158891172385556 |

| Document Type Definition | 0 |

| Elixir | 0,5000612735793482 |

| Elm | 5,237952479502043 |

| Emacs Dev Env | 1,2701271416728307E-61 |

| Emacs Lisp | 0,19531250990197657 |

| Erlang | 0,08028322620528387 |

| Expect | 0,329944610851471 |

| Extensible Stylesheet Language Transformations | 0 |

| F# | 0,32300702900710193 |

| F* | 9,403954876643223E-38 |

| FIDL | 0,12695312593132269 |

| FORTRAN Legacy | 0,8337643985574195 |

| FORTRAN Modern | 7,5833590276411185 |

| Fish | 1,3386242155247368 |

| Flow9 | 34,5 |

| Forth | 2,4664166555765066 |

| Fragment Shader File | 0,0003388836600090293 |

| Freemarker Template | 10,511094652522283 |

| Futhark | 0,8057891242233386 |

| GDScript | 10,750000000022537 |

| GLSL | 0,6383056697891334 |

| GN | 22,400601854287807 |

| Game Maker Language | 4,709514207365569 |

| Game Maker Project | 0 |

| Gherkin Specification | 0,4085178437480328 |

| Go | 50,06279203974034 |

| Go Template | 2,3866690339840662E-153 |

| Gradle | 0 |

| Groovy | 3,2506868488244898 |

| HEX | 0 |

| HTML | 0 |

| Hamlet | 0,25053861103978114 |

| Handlebars | 1,6943764911351036E-21 |

| Happy | 0 |

| Haskell | 28,470107150053625 |

| Haxe | 66,52873523714804 |

| IDL | 7,450580598712868E-9 |

| Idris | 17,77642903881352 |

| Intel HEX | 0 |

| Isabelle | 0,0014658546850726184 |

| JAI | 7,749968137734008 |

| JSON | 0 |

| JSONL | 0 |

| JSX | 0,3910405338329044 |

| Jade | 0,6881713929215119 |

| Janet | 0 |

| Java | 297,22908150612085 |

| JavaScript | 1,861130583340945 |

| JavaServer Pages | 7,24235416213196 |

| Jenkins Buildfile | 0 |

| Jinja | 0,6118526458846931 |

| Julia | 5,779676990326951 |

| Julius | 3,7432448068125277 |

| Jupyter | 0 |

| Just | 1,625490248219907 |

| Korn Shell | 11,085027896435056 |

| Kotlin | 5,467347841779503 |

| LD Script | 6,538079182471746E-26 |

| LESS | 0 |

| Lex | 0 |

| LOLCODE | 5,980839657708373 |

| LaTeX | 0 |

| Lean | 0,0019872561135834133 |

| License | 0 |

| Lisp | 4,033602018074421 |

| Lua | 44,70686769972825 |

| Lucius | 0 |

| Luna | 0 |

| MQL Header | 82,8036524637758 |

| MQL4 | 2,9989408299408566 |

| MQL5 | 32,84198718928553 |

| MSBuild | 2,9802322387695312E-8 |

| MUMPS | 5,767955578948634E-17 |

| Macromedia eXtensible Markup Language | 0 |

| Madlang | 8,25 |

| Makefile | 3,9272747722381812E-90 |

| Mako | 0,007624773579836673 |

| Markdown | 0 |

| Meson | 0,3975182396400463 |

| Modula3 | 0,7517121883916386 |

| Module-Definition | 0,25000000023283153 |

| Monkey C | 9,838715311259486 |

| Mustache | 0,00004191328599945435 |

| Nim | 0,04812580073302998 |

| Nix | 25,500204694250044 |

| OCaml | 16,92218069843716 |

| Objective C | 65,08967337175548 |

| Objective C++ | 10,886891531550603 |

| Opalang | 1,3724696160763994E-8 |

| Org | 28,947825231747235 |

| Oz | 6,260657086070324 |

| PHP | 2,8314653639690874 |

| PKGBUILD | 0 |

| PSL Assertion | 0,5009768009185791 |

| Pascal | 4 |

| Patch | 0 |

| Perl | 48,16959255514553 |

| Plain text | 0 |

| Polly | 0 |

| Pony | 4,91082763671875 |

| Powershell | 0,43151378893449877 |

| Processing | 9,691001653621564 |

| Prolog | 0,5029296875147224 |

| Properties File | 0 |

| Protocol Buffers | 0,07128906529847256 |

| Puppet | 0,16606500436341776 |

| PureScript | 1,3008141816356456 |

| Python | 11,510142201304832 |

| Q# | 5,222080192729404 |

| QCL | 13,195626304795667 |

| QML | 0,3208023407643109 |

| R | 0,40128818821921775 |

| Rakefile | 2,75786388297917 |

| Razor | 0,5298294073055322 |

| ReStructuredText | 0 |

| Report Definition Language | 0 |

| Robot Framework | 0 |

| Ruby | 7,8611656283491795 |

| Ruby HTML | 1,3175727506823756 |

| Rust | 8,62646485221385 |

| SAS | 0,5223999023437882 |

| SKILL | 0,4404907226562501 |

| SPDX | 0 |

| SQL | 0,00001537799835205078 |

| SRecode Template | 0,18119949102401853 |

| SVG | 1,7686873200833423E-74 |

| Sass | 7,002974651049148E-113 |

| Scala | 17,522343645163424 |

| Scheme | 0,00003147125255509322 |

| Scons | 25,56868253610655 |

| Shell | 6,409446969197895 |

| Smarty Template | 53,06143077491294 |

| Softbridge Basic | 7,5 |

| Specman e | 0,0639350358484781 |

| Spice Netlist | 1,3684555315672042E-48 |

| Standard ML (SML) | 24,686901116754818 |

| Stata | 1,5115316917094068 |

| Stylus | 0,3750006556512421 |

| Swift | 0,5793484510104517 |

| Swig | 0 |

| SystemVerilog | 0,250593163372906 |

| Systemd | 0 |

| TCL | 96,5072605676113 |

| TOML | 0,0048828125000002776 |

| TaskPaper | 0 |

| TeX | 54,0588040258797 |

| Thrift | 0 |

| Twig Template | 2,668124511961211 |

| TypeScript | 9,191392608918255 |

| TypeScript Typings | 6,1642456222327375 |

| Unreal Script | 2,7333421227943004 |

| Ur/Web | 16,51621568240534 |

| Ur/Web Project | 0 |

| V | 22,50230618938804 |

| VHDL | 18,05495198571289 |

| Vala | 147,2761703068509 |

| Varnish Configuration | 0 |

| Verilog | 5,582400367711671 |

| Verilog Args File | 0 |

| Vertex Shader File | 0,0010757446297590262 |

| Vim Script | 2,4234658314493798 |

| Visual Basic | 0,0004882812500167852 |

| Visual Basic for Applications | 4,761343429454877 |

| Vue | 0,7529517744621779 |

| Wolfram | 0,0059204399585724215 |

| Wren | 0,08593750013097715 |

| XAML | 6,984919309616089E-10 |

| XCode Config | 0 |

| XML | 0 |

| XML Schema | 0 |

| Xtend | 2,8245844719990547 |

| YAML | 0 |

| Zig | 1,0158334437942358 |

| Zsh | 1,81697392626756 |

| gitignore | 0 |

| ignore | 0 |

| m4 | 0 |

| nuspec | 0 |

| sed | 22,91158285739948 |

Average number of comments for files in each language?

What is the average number of comments in files in each language?

Perhaps the question can be rephrased: developers in which language write the most comments, presumably because they assume the reader will not understand the code?
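One plain way to arrive at a per-language figure like this is an arithmetic mean over per-file comment counts. The sketch below assumes a hypothetical `fileStat` record holding only a language name and a comment-line count. It is an illustration, not necessarily the calculation used to produce the numbers in this article.

```go
package main

import "fmt"

// fileStat is a hypothetical, trimmed-down per-file record:
// the language of the file and how many comment lines it has.
type fileStat struct {
	Language string
	Comment  int
}

// meanComments returns the arithmetic mean of comment lines per file,
// keyed by language.
func meanComments(files []fileStat) map[string]float64 {
	sums := make(map[string]int)
	counts := make(map[string]int)
	for _, f := range files {
		sums[f.Language] += f.Comment
		counts[f.Language]++
	}
	means := make(map[string]float64, len(sums))
	for lang, total := range sums {
		means[lang] = float64(total) / float64(counts[lang])
	}
	return means
}

func main() {
	// Made-up sample data, purely for demonstration.
	files := []fileStat{
		{"Go", 4},
		{"Go", 8},
		{"Python", 10},
	}
	fmt.Println(meanComments(files))
}
```

Keeping separate sums and counts means the result does not depend on the order in which files are processed.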

Full list

| Language | Comments |

|---|---|

| ABAP | 56,3020026683825 |

| ASP | 24,67145299911499 |

| ASP.NET | 9,140447860406259E-11 |

| ATS | 41,89465025163305 |

| AWK | 11,290069486393975 |

| ActionScript | 31,3568633027012 |

| Ada | 61,269572412982384 |

| Agda | 2,4337660860304755 |

| Alchemist | 2,232399710231226E-103 |

| Alex | 0 |

| Alloy | 0,000002207234501959681 |

| Android Interface Definition Language | 26,984662160277367 |

| Arvo | 0 |

| AsciiDoc | 0 |

| Assembly | 2,263919769706678E-72 |

| AutoHotKey | 15,833985920534857 |

| Autoconf | 0,47779749499136687 |

| BASH | 34,15625059662068 |

| Basic | 1,4219117348874069 |

| Batch | 1,0430908205926455 |

| Bazel | 71,21859817579139 |

| Bitbake | 0,002480246487177871 |

| Bitbucket Pipeline | 0,567799577547725 |

| Boo | 5,03128187009327 |

| Bosque | 0,125244140625 |

| Brainfuck | 0 |

| BuildStream | 12,84734197699206 |

| C | 256,2839210573451 |

| C Header | 184,88885430308878 |

| C Shell | 5,8409870392823375 |

| C# | 30,96563720101839 |

| C++ | 44,61584829131642 |

| C++ Header | 27,578790410119197 |

| CMake | 1,7564333047949374 |

| COBOL | 0,7503204345703562 |

| CSS | 4,998773531463529 |

| CSV | 0 |

| Cabal | 4,899812531420634 |

| Cargo Lock | 0,0703125 |

| Cassius | 0,07177734654413487 |

| Ceylon | 3,6406326349824667 |

| Clojure | 0,0987220821845421 |

| ClojureScript | 0,6025725119252456 |

| Closure Template | 17,078124673988057 |

| CoffeeScript | 1,6345682790069884 |

| ColdFusion | 33,745563628665096 |

| ColdFusion CFScript | 13,566947396771592 |

| Coq | 20,3222774725393 |

| Creole | 0 |

| Crystal | 6,0308081267588145 |

| Cython | 21,0593019957583 |

| D | 0 |

| Dart | 4,634361584097128 |

| Device Tree | 33,64898256434121 |

| Dhall | 1,0053101042303751 |

| Docker ignore | 8,003553375601768E-11 |

| Dockerfile | 4,526245545632278 |

| Document Type Definition | 0 |

| Elixir | 8,0581139370409 |

| Elm | 24,73191350743249 |

| Emacs Dev Env | 2,74822998046875 |

| Emacs Lisp | 12,168370702306452 |

| Erlang | 16,670030919109056 |

| Expect | 3,606161126133445 |

| Extensible Stylesheet Language Transformations | 0 |

| F# | 0,5029605040200058 |

| F* | 5,33528354690743E-27 |

| FIDL | 343,0418392068642 |

| FORTRAN Legacy | 8,121405267242158 |

| FORTRAN Modern | 171,32042583820953 |

| Fish | 7,979248739519377 |

| Flow9 | 0,5049991616979244 |

| Forth | 0,7578125 |

| Fragment Shader File | 0,2373057885016209 |

| Freemarker Template | 62,250244379050855 |

| Futhark | 0,014113984877253714 |

| GDScript | 31,14457228694065 |

| GLSL | 0,2182627061047912 |

| GN | 17,443267241931284 |

| Game Maker Language | 3,9815753922640824 |

| Game Maker Project | 0 |

| Gherkin Specification | 0,0032959059321794604 |

| Go | 6,464829990599041 |

| Go Template | 4,460169822267483E-251 |

| Gradle | 0,5374194774415457 |

| Groovy | 32,32068506016523 |

| HEX | 0 |

| HTML | 0,16671794164614084 |

| Hamlet | 4,203293477836184E-24 |

| Handlebars | 0,9389737429747177 |

| Happy | 0 |

| Haskell | 20,323476462551376 |