Python + Keras + LSTM: do a text translator in half an hour

Hi, Habr.

In the previous part, I looked at creating a simple text recognition based on a neural network. Today we will use a similar approach and write an automatic translator of texts from English to German.

For those who are interested in how this works, details are under the cut.

Note : this project of using a neural network for translation is exclusively educational, therefore the question “why” is not considered. Just for fun. I do not set out to prove that this or that method is better or worse, it was just interesting to check what happens. The method used below is, of course, simplified, but I hope no one hopes that we will write a second Lingvo in half an hour.

A file found on the network containing English and German phrases separated by tabs was used as the source dataset. A set of phrases looks something like this:

The file contains 192 thousand lines and has a size of 13 MB. We load the text into memory and break the data into two blocks, for English and German words.

We also converted all the words to lowercase and removed the punctuation marks.

The next step is to prepare the data for the neural network. The network does not know what words are, and works exclusively with numbers. Fortunately for us, keras already has a Tokenizer class built in, which replaces words in sentences with digital codes.

Its use is simply illustrated with an example:

The phrase “to be or not to be” will be replaced by the array [1 2 3 4 1 2 0 0], where it is not difficult to guess, 1 = to, 2 = be, 3 = or, 4 = not. We can already submit these data to the neural network.

Our data is digitally ready. We divide the array into two blocks for input (English lines) and output (German lines) data. We will also prepare a separate unit for validating the learning process.

Now we can create a model of a neural network and run its training. As you can see, the neural network contains LSTM layers having memory cells. Although it would probably work on a “regular” network, those who wish can check on their own.

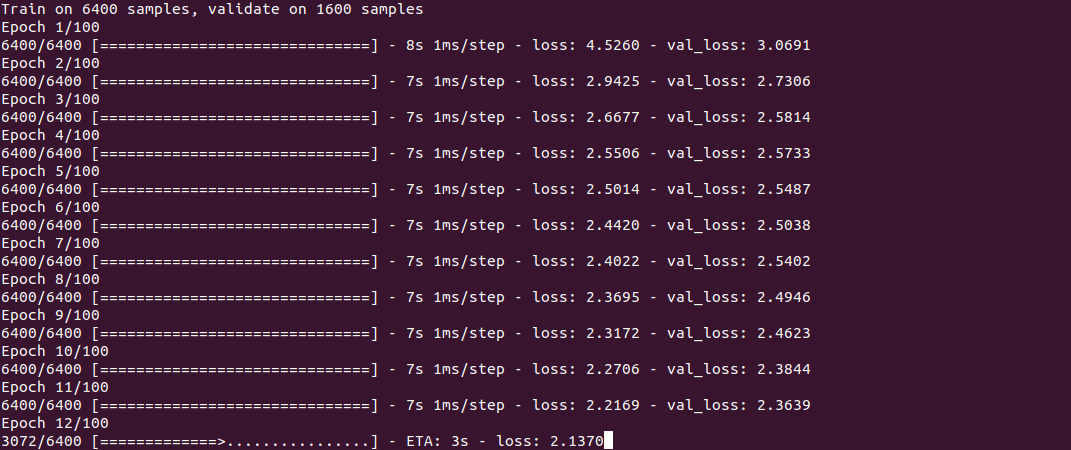

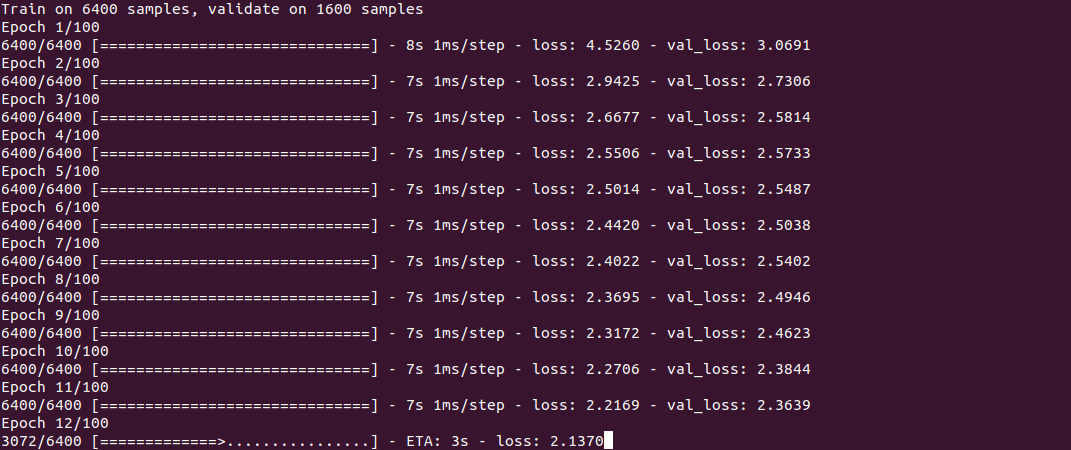

The training itself looks something like this:

The process, as you can see, is not fast, and takes about half an hour on a Core i7 + GeForce 1060 for a set of 30 thousand lines. At the end of the training (it needs to be done only once), the model is saved to a file, and then it can be reused.

To get the translation, we use the predict_classes function, to the input of which we submit a few simple phrases. The get_word function is used to invert words to numbers.

Now, actually, the most curious thing is the results. It is interesting to see how the neural network learns and “remembers” the correspondence between English and German phrases. I specifically took 2 phrases easier and 2 harder to see the difference.

5 minutes of training

“The weather is nice today” - “das ist ist tom”

“My name is tom” - “wie für tom tom”

"How old are you" - "wie geht ist es"

"Where is the nearest shop" - "wo ist der"

As you can see, so far there are few “hits”. A fragment of the phrase “how old are you” confused the neural network with the phrase “how are you” and produced the translation “wie geht ist es” (how are you?). In the phrase “where is ...” the neural network identified only the verb where and issued the translation “wo ist der” (where is it?), Which, in principle, is not meaningless. In general, it also translates into a German newcomer in group A1;)

10 minutes of training

“The weather is nice today” - “das haus ist bereit”

“My name is tom” - “mein heiße heiße tom”

"How old are you" - "wie alt sind sie"

"Where is the nearest shop" - "wo ist paris"

Some progress is visible. The first phrase is completely out of place. In the second phrase, the neural network “learned” the verb heißen (called), but “mein heiße heiße tom” is still incorrect, although you can already guess the meaning. The third phrase is already correct. In the fourth, the correct first part is “wo ist”, but the nearest shop was for some reason replaced by paris.

30 minutes of training

“The weather is nice today” - “das ist ist aus”

"My name is tom" - "" tom "ist mein name"

"How old are you" - "wie alt sind sie"

"Where is the nearest shop" - "wo ist der"

As you can see, the second phrase has become correct, although the design looks somewhat unusual. The third phrase is correct, but the 1st and 4th phrases have not yet been "learned." With thisin order to save electricity, I finished the process.

As you can see, in principle, this works. I would like to memorize a new language with such speed :) Of course, the result is not perfect so far, but training on a full set of 190 thousand lines would take more than one hour.

For those who want to experiment on their own, the source code is under the spoiler. The program theoretically can use any pair of languages, not only English and German (the file should be in UTF-8 encoding). The issue of translation quality also remains open, there is something to test.

The dictionary itself is too large to attach to the article, link in the comments.

As usual, all successful experiments.

In the previous part, I looked at creating a simple text recognition based on a neural network. Today we will use a similar approach and write an automatic translator of texts from English to German.

For those who are interested in how this works, details are under the cut.

Note : this project of using a neural network for translation is exclusively educational, therefore the question “why” is not considered. Just for fun. I do not set out to prove that this or that method is better or worse, it was just interesting to check what happens. The method used below is, of course, simplified, but I hope no one hopes that we will write a second Lingvo in half an hour.

Data collection

A file found on the network containing English and German phrases separated by tabs was used as the source dataset. A set of phrases looks something like this:

Hi. Hallo! Hi. Grüß Gott! Run! Lauf! Wow! Potzdonner! Wow! Donnerwetter! Fire! Feuer! Help! Hilfe! Help! Zu Hülf! Stop! Stopp! Wait! Warte! Go on. Mach weiter. Hello! Hallo! I ran. Ich rannte. I see. Ich verstehe. ...

The file contains 192 thousand lines and has a size of 13 MB. We load the text into memory and break the data into two blocks, for English and German words.

def read_text(filename): with open(filename, mode='rt', encoding='utf-8') as file: text = file.read() sents = text.strip().split('\n') return [i.split('\t') for i in sents] data = read_text("deutch.txt") deu_eng = np.array(data) deu_eng = deu_eng[:30000,:] print("Dictionary size:", deu_eng.shape) # Remove punctuation deu_eng[:,0] = [s.translate(str.maketrans('', '', string.punctuation)) for s in deu_eng[:,0]] deu_eng[:,1] = [s.translate(str.maketrans('', '', string.punctuation)) for s in deu_eng[:,1]] # convert text to lowercase for i in range(len(deu_eng)): deu_eng[i,0] = deu_eng[i,0].lower() deu_eng[i,1] = deu_eng[i,1].lower()

We also converted all the words to lowercase and removed the punctuation marks.

The next step is to prepare the data for the neural network. The network does not know what words are, and works exclusively with numbers. Fortunately for us, keras already has a Tokenizer class built in, which replaces words in sentences with digital codes.

Its use is simply illustrated with an example:

from keras.preprocessing.text import Tokenizer from keras.preprocessing.sequence import pad_sequences s = "To be or not to be" eng_tokenizer = Tokenizer() eng_tokenizer.fit_on_texts([s]) seq = eng_tokenizer.texts_to_sequences([s]) seq = pad_sequences(seq, maxlen=8, padding='post') print(seq)

The phrase “to be or not to be” will be replaced by the array [1 2 3 4 1 2 0 0], where it is not difficult to guess, 1 = to, 2 = be, 3 = or, 4 = not. We can already submit these data to the neural network.

Neural network training

Our data is digitally ready. We divide the array into two blocks for input (English lines) and output (German lines) data. We will also prepare a separate unit for validating the learning process.

# split data into train and test set train, test = train_test_split(deu_eng, test_size=0.2, random_state=12) # prepare training data trainX = encode_sequences(eng_tokenizer, eng_length, train[:, 0]) trainY = encode_sequences(deu_tokenizer, deu_length, train[:, 1]) # prepare validation data testX = encode_sequences(eng_tokenizer, eng_length, test[:, 0]) testY = encode_sequences(deu_tokenizer, deu_length, test[:, 1])

Now we can create a model of a neural network and run its training. As you can see, the neural network contains LSTM layers having memory cells. Although it would probably work on a “regular” network, those who wish can check on their own.

def make_model(in_vocab, out_vocab, in_timesteps, out_timesteps, n): model = Sequential() model.add(Embedding(in_vocab, n, input_length=in_timesteps, mask_zero=True)) model.add(LSTM(n)) model.add(Dropout(0.3)) model.add(RepeatVector(out_timesteps)) model.add(LSTM(n, return_sequences=True)) model.add(Dropout(0.3)) model.add(Dense(out_vocab, activation='softmax')) model.compile(optimizer=optimizers.RMSprop(lr=0.001), loss='sparse_categorical_crossentropy') return model eng_vocab_size = len(eng_tokenizer.word_index) + 1 deu_vocab_size = len(deu_tokenizer.word_index) + 1 eng_length, deu_length = 8, 8 model = make_model(eng_vocab_size, deu_vocab_size, eng_length, deu_length, 512) num_epochs = 40 model.fit(trainX, trainY.reshape(trainY.shape[0], trainY.shape[1], 1), epochs=num_epochs, batch_size=512, validation_split=0.2, callbacks=None, verbose=1) model.save('en-de-model.h5')

The training itself looks something like this:

The process, as you can see, is not fast, and takes about half an hour on a Core i7 + GeForce 1060 for a set of 30 thousand lines. At the end of the training (it needs to be done only once), the model is saved to a file, and then it can be reused.

To get the translation, we use the predict_classes function, to the input of which we submit a few simple phrases. The get_word function is used to invert words to numbers.

model = load_model('en-de-model.h5') def get_word(n, tokenizer): if n == 0: return "" for word, index in tokenizer.word_index.items(): if index == n: return word return "" phrs_enc = encode_sequences(eng_tokenizer, eng_length, ["the weather is nice today", "my name is tom", "how old are you", "where is the nearest shop"]) preds = model.predict_classes(phrs_enc) print("Preds:", preds.shape) print(preds[0]) print(get_word(preds[0][0], deu_tokenizer), get_word(preds[0][1], deu_tokenizer), get_word(preds[0][2], deu_tokenizer), get_word(preds[0][3], deu_tokenizer)) print(preds[1]) print(get_word(preds[1][0], deu_tokenizer), get_word(preds[1][1], deu_tokenizer), get_word(preds[1][2], deu_tokenizer), get_word(preds[1][3], deu_tokenizer)) print(preds[2]) print(get_word(preds[2][0], deu_tokenizer), get_word(preds[2][1], deu_tokenizer), get_word(preds[2][2], deu_tokenizer), get_word(preds[2][3], deu_tokenizer)) print(preds[3]) print(get_word(preds[3][0], deu_tokenizer), get_word(preds[3][1], deu_tokenizer), get_word(preds[3][2], deu_tokenizer), get_word(preds[3][3], deu_tokenizer))

results

Now, actually, the most curious thing is the results. It is interesting to see how the neural network learns and “remembers” the correspondence between English and German phrases. I specifically took 2 phrases easier and 2 harder to see the difference.

5 minutes of training

“The weather is nice today” - “das ist ist tom”

“My name is tom” - “wie für tom tom”

"How old are you" - "wie geht ist es"

"Where is the nearest shop" - "wo ist der"

As you can see, so far there are few “hits”. A fragment of the phrase “how old are you” confused the neural network with the phrase “how are you” and produced the translation “wie geht ist es” (how are you?). In the phrase “where is ...” the neural network identified only the verb where and issued the translation “wo ist der” (where is it?), Which, in principle, is not meaningless. In general, it also translates into a German newcomer in group A1;)

10 minutes of training

“The weather is nice today” - “das haus ist bereit”

“My name is tom” - “mein heiße heiße tom”

"How old are you" - "wie alt sind sie"

"Where is the nearest shop" - "wo ist paris"

Some progress is visible. The first phrase is completely out of place. In the second phrase, the neural network “learned” the verb heißen (called), but “mein heiße heiße tom” is still incorrect, although you can already guess the meaning. The third phrase is already correct. In the fourth, the correct first part is “wo ist”, but the nearest shop was for some reason replaced by paris.

30 minutes of training

“The weather is nice today” - “das ist ist aus”

"My name is tom" - "" tom "ist mein name"

"How old are you" - "wie alt sind sie"

"Where is the nearest shop" - "wo ist der"

As you can see, the second phrase has become correct, although the design looks somewhat unusual. The third phrase is correct, but the 1st and 4th phrases have not yet been "learned." With this

Conclusion

As you can see, in principle, this works. I would like to memorize a new language with such speed :) Of course, the result is not perfect so far, but training on a full set of 190 thousand lines would take more than one hour.

For those who want to experiment on their own, the source code is under the spoiler. The program theoretically can use any pair of languages, not only English and German (the file should be in UTF-8 encoding). The issue of translation quality also remains open, there is something to test.

keras_translate.py

import os # os.environ["CUDA_VISIBLE_DEVICES"] = "-1" # Force CPU os.environ['TF_CPP_MIN_LOG_LEVEL'] = '3' # 0 = all messages are logged, 3 - INFO, WARNING, and ERROR messages are not printed import string import re import numpy as np import pandas as pd from keras.models import Sequential from keras.layers import Dense, LSTM, Embedding, RepeatVector from keras.preprocessing.text import Tokenizer from keras.callbacks import ModelCheckpoint from keras.preprocessing.sequence import pad_sequences from keras.models import load_model from keras import optimizers from sklearn.model_selection import train_test_split import matplotlib.pyplot as plt pd.set_option('display.max_colwidth', 200) # Read raw text file def read_text(filename): with open(filename, mode='rt', encoding='utf-8') as file: text = file.read() sents = text.strip().split('\n') return [i.split('\t') for i in sents] data = read_text("deutch.txt") deu_eng = np.array(data) deu_eng = deu_eng[:30000,:] print("Dictionary size:", deu_eng.shape) # Remove punctuation deu_eng[:,0] = [s.translate(str.maketrans('', '', string.punctuation)) for s in deu_eng[:,0]] deu_eng[:,1] = [s.translate(str.maketrans('', '', string.punctuation)) for s in deu_eng[:,1]] # Convert text to lowercase for i in range(len(deu_eng)): deu_eng[i,0] = deu_eng[i,0].lower() deu_eng[i,1] = deu_eng[i,1].lower() # Prepare English tokenizer eng_tokenizer = Tokenizer() eng_tokenizer.fit_on_texts(deu_eng[:, 0]) eng_vocab_size = len(eng_tokenizer.word_index) + 1 eng_length = 8 # Prepare Deutch tokenizer deu_tokenizer = Tokenizer() deu_tokenizer.fit_on_texts(deu_eng[:, 1]) deu_vocab_size = len(deu_tokenizer.word_index) + 1 deu_length = 8 # Encode and pad sequences def encode_sequences(tokenizer, length, lines): # integer encode sequences seq = tokenizer.texts_to_sequences(lines) # pad sequences with 0 values seq = pad_sequences(seq, maxlen=length, padding='post') return seq # Split data into train and test set train, test = train_test_split(deu_eng, test_size=0.2, random_state=12) # Prepare training data trainX = encode_sequences(eng_tokenizer, eng_length, train[:, 0]) trainY = encode_sequences(deu_tokenizer, deu_length, train[:, 1]) # Prepare validation data testX = encode_sequences(eng_tokenizer, eng_length, test[:, 0]) testY = encode_sequences(deu_tokenizer, deu_length, test[:, 1]) # Build NMT model def make_model(in_vocab, out_vocab, in_timesteps, out_timesteps, n): model = Sequential() model.add(Embedding(in_vocab, n, input_length=in_timesteps, mask_zero=True)) model.add(LSTM(n)) model.add(Dropout(0.3)) model.add(RepeatVector(out_timesteps)) model.add(LSTM(n, return_sequences=True)) model.add(Dropout(0.3)) model.add(Dense(out_vocab, activation='softmax')) model.compile(optimizer=optimizers.RMSprop(lr=0.001), loss='sparse_categorical_crossentropy') return model print("deu_vocab_size:", deu_vocab_size, deu_length) print("eng_vocab_size:", eng_vocab_size, eng_length) # Model compilation (with 512 hidden units) model = make_model(eng_vocab_size, deu_vocab_size, eng_length, deu_length, 512) # Train model num_epochs = 250 history = model.fit(trainX, trainY.reshape(trainY.shape[0], trainY.shape[1], 1), epochs=num_epochs, batch_size=512, validation_split=0.2, callbacks=None, verbose=1) # plt.plot(history.history['loss']) # plt.plot(history.history['val_loss']) # plt.legend(['train','validation']) # plt.show() model.save('en-de-model.h5') # Load model model = load_model('en-de-model.h5') def get_word(n, tokenizer): if n == 0: return "" for word, index in tokenizer.word_index.items(): if index == n: return word return "" phrs_enc = encode_sequences(eng_tokenizer, eng_length, ["the weather is nice today", "my name is tom", "how old are you", "where is the nearest shop"]) print("phrs_enc:", phrs_enc.shape) preds = model.predict_classes(phrs_enc) print("Preds:", preds.shape) print(preds[0]) print(get_word(preds[0][0], deu_tokenizer), get_word(preds[0][1], deu_tokenizer), get_word(preds[0][2], deu_tokenizer), get_word(preds[0][3], deu_tokenizer)) print(preds[1]) print(get_word(preds[1][0], deu_tokenizer), get_word(preds[1][1], deu_tokenizer), get_word(preds[1][2], deu_tokenizer), get_word(preds[1][3], deu_tokenizer)) print(preds[2]) print(get_word(preds[2][0], deu_tokenizer), get_word(preds[2][1], deu_tokenizer), get_word(preds[2][2], deu_tokenizer), get_word(preds[2][3], deu_tokenizer)) print(preds[3]) print(get_word(preds[3][0], deu_tokenizer), get_word(preds[3][1], deu_tokenizer), get_word(preds[3][2], deu_tokenizer), get_word(preds[3][3], deu_tokenizer)) print()

The dictionary itself is too large to attach to the article, link in the comments.

As usual, all successful experiments.

All Articles