Why is Kaldi good for speech recognition?

Why am I (and I hope you) interested in speech recognition? Firstly, this direction is one of the most popular in comparison with other tasks of computer linguistics, since speech recognition technology is now used almost everywhere - from recognizing a simple yes / no in the bank’s automatic call center to the ability to support “small talk” in “Smart column” like “Alice”. Secondly, in order for the speech recognition system to be of high quality, it is necessary to find the most effective tools for creating and configuring such a system (this article is devoted to one of such tools). Finally, the undoubted “plus” of choosing a specialization in the field of speech recognition for me personally is that for research in this area it is necessary to have both programmer and linguistic skills. This is very stimulating, forcing to acquire knowledge in different disciplines.

Why Kaldi, after all, are there other frameworks for speech recognition?

To answer this question, it is worth considering the existing analogues and the algorithms and technologies used by them (the algorithms used in Kaldi are described further in the article):

- CMU Sphinx

CMU Sphinx (not to be confused with the Sphinx search engine!) Is a speech recognition system created by developers from Carnegie Mellon University and consisting of various modules for extracting speech features, speech recognition (including on mobile devices) and training for such recognition. CMU Sphinx uses hidden Markov models at the acoustic-phonetic recognition level and statistical N-gram models at the linguistic recognition level. The system also has a number of interesting features: recognition of long speech (for example, transcripts or sound recordings of an interview), the ability to connect a large dictionary to hundreds of thousands of word forms, etc. It is important to note that the system is constantly evolving, with each version, recognition quality and performance are improved . Also there are cross-platform and convenient documentation. Among the minuses of using this system, it is possible to single out the inability to start CMU Sphinx “out of the box”, because even solving simple problems requires knowledge on adapting the acoustic model, in the field of language modeling, etc. - Julius

Julius has been developed by Japanese developers since 1997, and now the project is supported by Advanced Science, Technology & Management Research Institute of Kyoto. The model is based on N-grams and context-sensitive hidden Markov models, the system is able to recognize speech in real time. The disadvantages are distribution only for the Japanese language model (although there is a VoxForge project that creates acoustic models for other languages, in particular for the English language) and the lack of stable updates. - RWTH ASR

The model has been developed by specialists from the Rhine-Westphalian Technical University since 2001, consists of several libraries and tools written in C ++. The project also includes installation documentation, various training systems, templates, acoustic models, language models, support for neural networks, etc. At the same time, the RWTH ASR is practically cross-platform and has a low speed. - Htk

HTK (Hidden Markov Model Toolkit) is a set of speech recognition tools that was created at Cambridge University in 1989. Toolkit based on hidden Markov models is most often used as an additional tool for creating speech recognition systems (for example, this framework is used by Julius developers). Despite the fact that the source code is publicly available, the use of HTK to create systems for end users is prohibited by the license, which is why the toolkit is not popular right now. The system also has a relatively low speed and accuracy.

In the article “Comparative analysis of open source speech recognition systems” ( https://research-journal.org/technical/sravnitelnyj-analiz-sistem-raspoznavaniya-rechi-s-otkrytym-kodom/ ), a study was conducted during which all the systems were trained in an English language case (160 hours) and applied in a small 10-hour test case. As a result, it turned out that Kaldi has the highest recognition accuracy, slightly outperforming its competitors in terms of speed. Also, the Kaldi system is able to provide the user with the richest selection of algorithms for different tasks and is very convenient to use. At the same time, emphasis is placed on the fact that work with documentation may be inconvenient for an inexperienced user, as It is designed for speech recognition professionals. But in general, Kaldi is more suitable for scientific research than its analogues.

How to install Kaldi

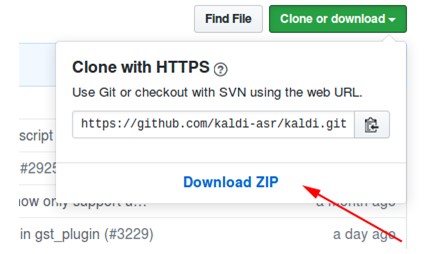

- Download the archive from the repository at https://github.com/kaldi-asr/kaldi :

- Unpack the archive, go to kaldi-master / tools / extras.

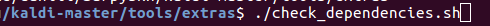

- We execute ./check_dependencies.sh:

If after that you see not “all ok”, then open the file kaldi-master / tools / INSTALL and follow the instructions there. - We execute make (being in kaldi-master / tools, not in kaldi-master / tools / extras):

- Go to kaldi-master / src.

- We run ./configure --shared, and you can configure the installation with or without CUDA technology by specifying the path to the installed CUDA (./configure --cudatk-dir = / usr / local / cuda-8.0) or change the initial value “yes "To" no "(./ configure —use-cuda = no) respectively.

If at the same time you see:

either you did not follow step 4, or you need to download and install OpenFst yourself: http://www.openfst.org/twiki/bin/view/FST/FstDownload . - We make make depend.

- We execute make -j. It is recommended that you enter the correct number of processor cores that you will use when building, for example, make -j 2.

- As a result, we get:

An example of using a model with Kaldi installed

As an example, I used the kaldi-ru model version 0.6, you can download it from this link :

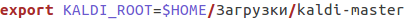

- After downloading, go to the file kaldi-ru-0.6 / decode.sh and specify the path to the installed Kaldi, it looks like this for me:

- We launch the model, indicating the file in which the speech is to be recognized. You can use the file decoder-test.wav, this is a special file for the test, it is already in this folder:

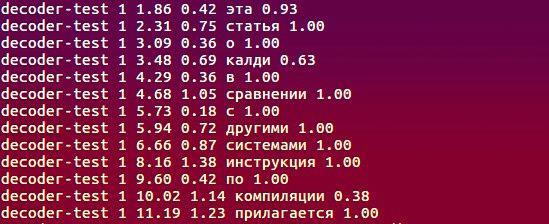

- And here is what the model recognized:

What algorithms are used, what underlies the work?

Full information about the project can be found at http://kaldi-asr.org/doc/ , here I will highlight a few key points:

- Either acoustic MFCC (Mel-Frequency Cepstral Coefficients) or slightly less popular PLPs (Perceptual Linear prediction - see H. Hermansky, “Perceptual linear predictive (PLP) analysis of speech” ) are used to extract acoustic features from the input signal. In the first method, the spectrum of the original signal is converted from the Hertz scale to a chalk scale, and then cepstral coefficients are calculated using the inverse cosine transform (https://habr.com/en/post/140828/). The second method is based on the regression representation of speech: a signal model is constructed that describes the prediction of the current signal sample by a linear combination - the product of known samples of input and output signals and linear prediction coefficients. The task of calculating the signs of speech is reduced to finding these coefficients under certain conditions.

- The acoustic modeling module includes hidden Markov models (HMM), a mixture model of Gaussian distributions (GMM), deep neural networks, namely Time-Delay Neural Networks (TDNN).

- Language modeling is carried out using a finite state machine, or FST (finite-state transducer). FST encodes a mapping from an input character sequence to an output character sequence, while there are weights for the transition that determine the likelihood of calculating the input character in the output.

- Decoding takes place using the forward-reverse algorithm.

About creating the kaldi-ru-0.6 model

For the Russian language, there is a pre-trained recognition model created by Nikolai Shmyryov, also known on many sites and forums as nsh .

- To extract features, the MFCC method was used, and the acoustic-phonetic model itself is based on neural networks of the TDNN type.

- The training sample was the soundtracks of videos in Russian, downloaded from YouTube.

- To create a language model, we used the CMUdict dictionary and exactly the vocabulary that was in the training set. Due to the fact that the dictionary contained similar pronunciations of different words, it was decided to assign the meaning of “probability” to each word and normalize them.

- To learn the language model, the RNNLM framework (recurrent neural network language models) was used, based, as the name implies, on recurrent neural networks (instead of the good old N-grams).

Comparison with Google Speech API and Yandex Speech Kit

Surely, one of the readers, when reading the previous paragraphs, had a question: okay, that Kaldi is superior to its direct counterparts, we figured out, but what about recognition systems from Google and Yandex? Maybe the relevance of the frameworks described earlier is doubtful if there are tools from these two giants? The question is really good, so let's test, and as a dataset we will take notes and the corresponding text transcripts from the notorious VoxForge .

As a result, after each system recognized 3677 sound files, I received the following WER (Word Error Rate) values:

Summing up, we can say that all systems coped with the task at approximately the same level, and Kaldi was not much inferior to the Yandex Speech Kit and the Google Speech API. But at the same time, the Yandex Speech Kit and the Google Speech API are “black boxes” that work somewhere far, far away on other people's servers and are not accessible for tuning, but Kaldi can be adapted to the specifics of the task at hand - characteristic vocabulary (professionalism, jargon, colloquial slang), pronunciation features, etc. And all this for free and without SMS! The system is a kind of designer, which we can all use to create something unusual and interesting.

I work in the laboratory of LAPDiMO NSU:

Website: https://bigdata.nsu.ru/

VK Group: https://vk.com/lapdimo

All Articles