Excellent Quantum Excellence FAQ by Scott Aaronson

A couple of days ago a draft article from Google on their achievement of quantum superiority in a superconducting quantum computer leaked to the network. The text itself was quickly removed, and rumors and assumptions, including erroneous ones, multiplied around it. The author of the post is Professor Scott Aaronson , one of the leading specialists in quantum algorithms, and has an excellent blog. In the last post, he answers the main questions about quantum superiority.

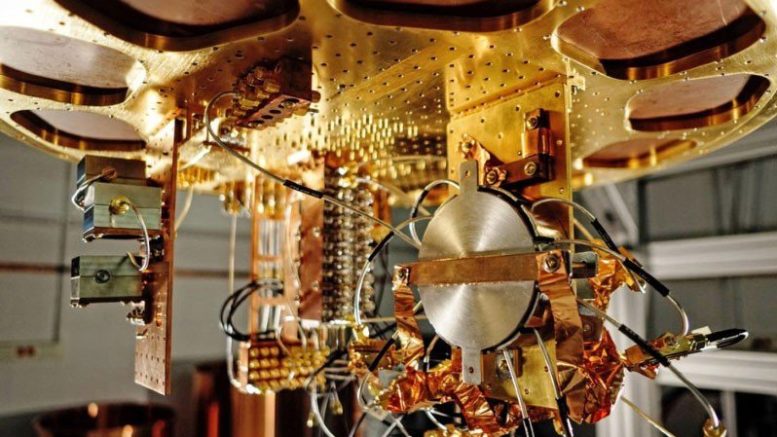

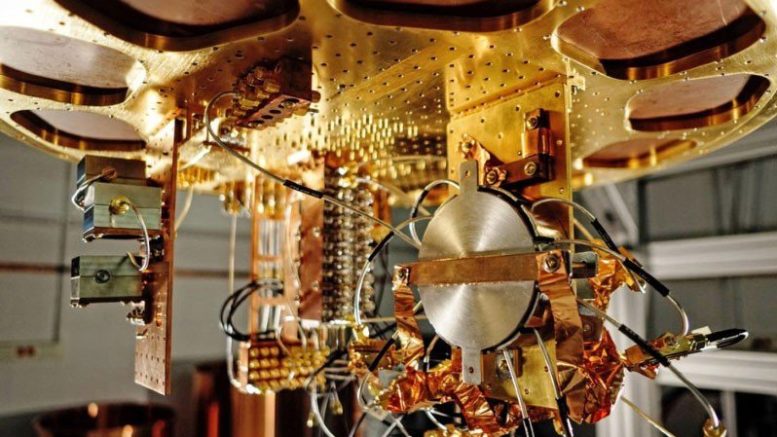

You've already seen stories - in the Financial Times , Technology Review , CNET , Facebook, Reddit, Twitter, or elsewhere - that talk about a group from Google that achieved quantum superiority with a 53-qubit superconducting computer. These stories are easy to find, I will not attach them here, since not one of them should have existed yet.

As the whole world knows now, Google is actually preparing a big statement on quantum superiority, which is planned in conjunction with a scientific article in a cool magazine (which choice is small - one of two). I hope this happens within a month.

Meanwhile, NASA, where some of the authors of the article works, accidentally published an old version of the article on a public site. She was there for a short time, but long enough to be in the Financial Times, my mail and a million other places. Speculation without facts about its significance is expected to multiply.

It seems that the world was deprived of the pure moment of the "moon landing", where the extended Church-Thuring thesis is destroyed by experimental evidence during a press conference. It will be more like the flight of the Wright brothers - about which rumors and half-truths seeped into public space between 1903 and 1908, when Will and Orville finally decided to demonstrate their flight. (This time, fortunately, everything will be clarified much faster!)

This post is not an official statement or confirmation of anything. Although the lightning bolts are already visible, the thunder belongs to a group from Google, in time and place of their choice.

Rather, I want to stop the flow of misinformation and offer here, as a blogger and “public intellectual,” my Scott's Supreme Quantum Supremacy FAQ. You know, in case you suddenly happen to be curious about quantum superiority, or you always wanted to know what would happen if suddenly some search company from Mountain View or from somewhere else hypothetically announced that quantum superiority was achieved.

Without further ado, here it is:

IN 1. What is quantum computational excellence?

Often shortened to simply “quantum superiority”, the term refers to the use of a quantum computer to solve a special set of problems, the solution of which on a classical computer using any known algorithm would take orders of magnitude longer - and not for some random reasons, but from due to asymptotic quantum complexity. The emphasis here is on being as sure as possible that the problem was really solved in a quantum way and really classically unattainable, and ideally it will allow to achieve computation acceleration in a short time (with noisy, non-universal quantum computers that we already have there or will be soon). If the task is also useful for something, so much the better, but it is not necessary. The Wright plane and the Fermi woodpile were not useful on their own.

IN 2. If Google really achieved quantum superiority, does this mean that now there is no cracking code , as the recent Democratic candidate for the United States Democratic candidate Andrew Young?

No, it doesn’t (although I still like Young's candidacy).

There are two problems. Firstly, the devices created by Google, IBM and others have 50-100 qubits, and no error correction. Hacking RSA using the Shore algorithm will require several thousand logical qubits. With well-known error correction methods, millions of physical qubits, and better quality than we have now, can easily be needed for this. It does not seem to me that anyone is close to this, and we have no idea how soon they will be able to get close to this.

And the second problem is that even in the hypothetical future of QC with error correction, we will be able to break only some codes, but not all, at least as far as we know now. Coincidentally, the public keys that can be cracked include most of the algorithms that we use to secure the Internet: RSA, Diffie-Hellman, elliptical cryptography, etc. But cryptography based on private keys should not be affected. And there are also cryptosystems for public keys (for example, based on gratings) that no one knows how to crack using QC even after 20+ years of trying, and there are attempts to switch to these systems. For example, see my letter to Rebecca Goldstein .

IN 3. What kind of calculations does Google plan to do (or have already done), which are considered classically complex.

Of course, I can tell you, but I feel silly at the same time. The calculation is this: the experimenter generates a random quantum circuit C (i.e., a random sequence of 1-qubit and 2-qubit - between the nearest neighbors - gates, with a depth of 20, for example, acting on a 2D network n = 50-60 qubits). After that, the experimenter sends C to the quantum computer, and asks him to apply C to the initial state of 0, measure the result in the basis {0,1}, send back the n-bit observable sequence (string), and repeat several thousand or millions of times. Finally, using his knowledge of C, the experimenter performs a statistical check on the compliance of the result with the expected output from a quantum computer.

This task is not from the category of tasks with the only correct answer, unlike factorization of numbers. The result of the operation of scheme C turns out to be a statistical distribution (let's call it D C ) over n-bit strings, from which we need to select samples. In fact, usually D C can correspond to 2 n lines - so many that if the QC works as expected, it will never produce the same line in the output twice. It is important that the distribution of D C is not uniform. Some lines arose as a result of constructive interference of amplitudes and therefore have a higher probability, and others as a result of destructive, and have a lower probability. And although we get only a small fraction of all possible 2 n samples, we can check the distribution of these samples statistically for compliance with the expected uneven distribution, and make sure that we get something that is difficult to reproduce classically.

TL; DR, a quantum computer is asked to apply a random (but known) sequence of quantum operations - not because we are interested in the result, but because we are trying to prove that QC can perform this clearly stated task better than a classical computer.

AT 4. But if a quantum computer just executes some junk code, whose only purpose is to be difficult for classical simulation, who cares? Isn't that a big over-pie with ... nothing?

No. This, of course, is not a pie with everything tasty, but at least a pie with something.

Have at least a bit of respect for the immensity of what we are talking about here, and what kind of engineering genius it took to translate this into reality. Before quantum superiority (KP), KP skeptics simply chuckled that even after billions of dollars and 20+ years of work, a quantum computer still cannot do something faster than your laptop. At least not using quantumness. In the world after achieving quantum supremacy, they no longer laugh. Superposition of 2 50 or 2 60 complex numbers was calculated using a significantly smaller resource of time and data volume than 2 50 or 2 60

I’m talking about the Wright plane only because the gap between what we are talking about and the denial, something I see in different parts of the Internet, is completely incomprehensible. It is as if you believed that it was impossible to fly in principle, and then you saw a flimsy wooden airplane - it may not shake your faith, but it certainly should not strengthen it.

Was I right, worrying many years ago that the constant hype about the much lesser achievements of the KK will tire people and they will give a damn when something really worthwhile finally happens?

AT 5. Many years ago, you reproached the masses for their enthusiasm for D-Wave and their claims of a stunning quantum advantage in optimization problems using quantum annealing. Now you are reproaching them for their lack of enthusiasm for quantum superiority. Why are you so fickle?

Because my goal is not to shift the level of enthusiasm in a clearly chosen direction, but to be right. In hindsight, wasn’t I mostly right about D-Wave, even when my statements made me very unpopular in some circles? Well, now I'm trying to be right about quantum superiority too.

AT 6. If quantum superiority calculations are simply based on samples from a probability distribution, how can one verify that they are made correctly?

Thanks for asking! This is precisely the topic on which I and others have developed many theoretical foundations in the last ten years. I already told the short version in my answer B3: you test this by running the statistical tests on the samples that the quantum computer gives out to verify that they are concentrated around the peaks of the chaotic probability distribution D C. One convenient way to do this, which Google calls the “linear cross-entropy test”, is simply to sum all Pr [C yields s i ] for all samples s 1 , ..., s k that KK returned, and then declare the test successful if and only if the sum exceeds a certain level - say, bk / 2 n , where b is a constant between 1 and 2.

Honestly, in order to apply this test, you need to calculate all the probabilities Pr [C gives s i ] on a classic computer - and the only known way to do this is iterate over a time of ~ 2 n . But does it bother anyone? No, if n = 50, and you google and you can count numbers like 2 50 (although you won’t get 2 1000 , which is superior to google , khe-khe). Having driven out a large cluster of classical cores for, say, a month, you will end up with the result that the CC produced in a couple of seconds - at the same time, make sure that the CC is a couple of orders faster. Nevertheless, this means that experiments based on sampling are almost specially designed for devices with ~ 50 qubits. For even with 100 qubits we have no idea how to assure the result even using all the computing power available on Earth.

(I note separately that this problem is specific only for sampling experiments, similar to what is being carried out now. If you used the Shor algorithm to factor a 2000-digit number, you can easily check the result by simply multiplying all the factors and checking their simplicity. Exactly so if KK is used to simulate a complex biomolecule, you can check the result by experimenting with the molecule.)

AT 7. Wait a minute. If classical computers can only verify the results of an experiment on quantum superiority only in a mode where classical computers can still simulate an experiment (albeit very slowly), how can one speak of “quantum superiority”?

Well then you. With a 53-qubit chip, you see acceleration several million times, and you can still verify the result, and also verify that the acceleration grows exponentially with the number of qubits, just as the asymptotic analysis predicts. This is not insignificant.

B8 Is there any mathematical evidence that no fast classic computer can beat the results of a sampling experiment?

Not at the moment. But this is not the fault of researchers of quantum superiority! As long as theorists cannot even prove basic hypotheses, such as P ≠ NP or P ≠ PSPACE, and without this we cannot unconditionally exclude the possibility of a quick classical simulation. The best we can hope for is conditional complexity. And we have some results about this, for example, an article on boson sampling or an article by Bouldand et al. about the average # P-complexity of calculating the amplitudes of random circuits, or my article with Lijie Chen ("Complexity-Theoretic Foundations of Quantum Supremacy Experiments"). The most important open question in this area, in my opinion, is the proof of the best conditional difficulties.

AT 9. Is there any use for sampling quantum superiority?

Until recently, the answer was obviously no! (And I know, because I myself was one of such people). Recently, however, the situation has changed - for example, thanks to my certified randomness protocol, which shows how KP based on sampling can be independently used to generate bits that can be proved random even for a skeptical third party (under assumptions about calculations). This in turn has an application in cryptocurrency and other cryptographic protocols. I hope more such applications come soon.

AT 10. If experiments on quantum superiority do random bits generate everything, why is this interesting at all? Is it not possible to convert qubits into random bits trivially just by measuring them?

The bottom line is that QC generates non-uniformly distributed random bits. Instead, it creates a selection from some complex, correlated probability distribution over 50-60 bit strings. In my protocol, deviations from uniformity play a central role in how the QC convinces the skeptic that he actually selected random bits, rather than using some kind of pseudo-random generator in secret.

AT 11. Have decades of quantum mechanical experiments, for example, with violation of Bell's inequality, not demonstrated quantum superiority?

This is a purely lexical question. Those experiments demonstrated other types of quantum superiority: for example, in the case of Bell's inequality, they can be called "quantum correlation superiority." They showed no quantum computational superiority, i.e. the ability to find something inaccessible to a classical computer (where classical simulation has no restrictions on spatial locally, etc.). Nowadays, when people use the phrase "quantum superiority", they mean quantum computational superiority.

AT 12. Even so, do not there exist countless examples of materials and chemical reactions that are difficult to simulate classically, and specially constructed quantum simulators (such as those in the Lukin group at Harvard). Why aren't they considered quantum computing superiority?

In the definitions of some people, they are considered! The main difference with a Google computer is that they have a fully programmable computer - you can enter any arbitrary sequence of 2-qubit gates (for neighboring qubits) by simply entering commands from a classic computer.

In other words, KP skeptics can no longer mockingly notice that, of course, quantum systems are difficult to simulate classically, but this is simply because nature is generally difficult to simulate, and you cannot reprogram the random molecule found in the field into a computer to simulate itself yourself. By any reasonable definition, superconducting devices created by Google, IBM and others are computers in the full sense of the word.

B13 Did you (Scott Aaronson) invent the concept of quantum excellence?

No. I played a role in its development, and therefore, for example, Sabina Hosenfelder, among others, mistakenly attributed the authorship of the whole idea to me . The term "quantum superiority" was proposed by John Preskill in 2012, although in a sense, the basic idea appeared at the dawn of quantum contributions in the early 80s. In 1994, using the Shore algorithm to factorize a large number became the main experiment to test quantum superiority, although at the moment this is still too difficult to produce.

The key idea that sampling can be used instead was, as far as I know, first proposed by Barbara Terhal and David DiVincenzo in a 2002 article . The current effort on a sampled CP began around 2011, when Alex Arkhipov and I published our article on bosonic sampling , and Bremner, Jozsa, and Shepherd independently published an article on models of commuting Hamiltonians . These articles showed not only “simple”, not universal quantum systems that can solve obviously complex sampling problems, but also that the existence of an effective classical algorithm would mean a fall in the polynomial hierarchy . Arkhipov and I also laid the foundation for the argument that even approximate versions of quantum sampling can be classically complex.

As far as I know, the idea of “randomly sampling circuits” —that is, generating a complex sampling problem by randomly selecting a sequence of 2-qubit gates in, say, a superconducting architecture –– their correspondence arose, which I started in December 2015, which included John Martinis, Hartmut Neven , Sergio Boixo, Ashley Montanaro, Michael Bremner, Richard Jozsa, Aram Harrow, Greg Kuperberg, and others. The thread was entitled “Challenging Sampling Issues with 40 Qubits,” and the letter began with “Sorry for spam.” In it, I discussed the various advantages and disadvantages of three options for demonstrating a quantum advantage using sampling: (1) random circuits, (2) commuting Hamiltonians, (3) boson sampling.After Greg Cooperberg stood up for option (1), a consensus quickly formed that (1) would really be the best option from an engineering point of view, and that if we do not yet have a satisfactory theoretical justification for (1), we can somehow get around this.

After that, the Google team did a great job analyzing random sampling schemes, both theoretically and numerically, while Lijie Chen and I and Bouland et al. provided different evidence of the classical complexity of this problem in terms of complexity theory.

B14. If quantum superiority is achieved, what does this mean for skeptics?

Wow, I would not want to be in their place now! They may fall back on the position that, well, of course, quantum superiority is possible (and who said otherwise? Well, of course they are not!), And the whole difficulty has always been in quantum error correction. And in fact, some have said so from the very beginning. And others, my good friend Gil Kalai, among them, by writing, right on this blog, predicted that quantum superiority would never be achieved for fundamental reasons. Now they will not be screwed.

B15. So, what is next?

If this is really achieved quantum superiority, then, I think, the Google group has all the hardware to demonstrate my protocol for certified random bit generation. And this is actually one of the following things in their plan.

And besides this, the next obvious step is to use a programmable QC, with 50-100 qubits for some useful quantum simulation (for example, a system with a condensed state of matter) much faster than any classical method. And the second obvious step is to demonstrate the use of error correction to keep the logical qubit alive longer than the lifetime of the physical qubits on which it is based. There is no doubt that IBM, Google and the rest of the players will chase each other for primacy in these steps.

From the translator. I am not at all a specialist in algorithmic complexity and quantum algorithms in particular. But the post seemed to me too interesting not to discuss it on Habré, and for any errors in terms or their unusual use, please kick me in PM.

Scott's Supreme Quantum Supremacy FAQ!

You've already seen stories - in the Financial Times , Technology Review , CNET , Facebook, Reddit, Twitter, or elsewhere - that talk about a group from Google that achieved quantum superiority with a 53-qubit superconducting computer. These stories are easy to find, I will not attach them here, since not one of them should have existed yet.

As the whole world knows now, Google is actually preparing a big statement on quantum superiority, which is planned in conjunction with a scientific article in a cool magazine (which choice is small - one of two). I hope this happens within a month.

Meanwhile, NASA, where some of the authors of the article works, accidentally published an old version of the article on a public site. She was there for a short time, but long enough to be in the Financial Times, my mail and a million other places. Speculation without facts about its significance is expected to multiply.

It seems that the world was deprived of the pure moment of the "moon landing", where the extended Church-Thuring thesis is destroyed by experimental evidence during a press conference. It will be more like the flight of the Wright brothers - about which rumors and half-truths seeped into public space between 1903 and 1908, when Will and Orville finally decided to demonstrate their flight. (This time, fortunately, everything will be clarified much faster!)

This post is not an official statement or confirmation of anything. Although the lightning bolts are already visible, the thunder belongs to a group from Google, in time and place of their choice.

Rather, I want to stop the flow of misinformation and offer here, as a blogger and “public intellectual,” my Scott's Supreme Quantum Supremacy FAQ. You know, in case you suddenly happen to be curious about quantum superiority, or you always wanted to know what would happen if suddenly some search company from Mountain View or from somewhere else hypothetically announced that quantum superiority was achieved.

Without further ado, here it is:

IN 1. What is quantum computational excellence?

Often shortened to simply “quantum superiority”, the term refers to the use of a quantum computer to solve a special set of problems, the solution of which on a classical computer using any known algorithm would take orders of magnitude longer - and not for some random reasons, but from due to asymptotic quantum complexity. The emphasis here is on being as sure as possible that the problem was really solved in a quantum way and really classically unattainable, and ideally it will allow to achieve computation acceleration in a short time (with noisy, non-universal quantum computers that we already have there or will be soon). If the task is also useful for something, so much the better, but it is not necessary. The Wright plane and the Fermi woodpile were not useful on their own.

IN 2. If Google really achieved quantum superiority, does this mean that now there is no cracking code , as the recent Democratic candidate for the United States Democratic candidate Andrew Young?

No, it doesn’t (although I still like Young's candidacy).

There are two problems. Firstly, the devices created by Google, IBM and others have 50-100 qubits, and no error correction. Hacking RSA using the Shore algorithm will require several thousand logical qubits. With well-known error correction methods, millions of physical qubits, and better quality than we have now, can easily be needed for this. It does not seem to me that anyone is close to this, and we have no idea how soon they will be able to get close to this.

And the second problem is that even in the hypothetical future of QC with error correction, we will be able to break only some codes, but not all, at least as far as we know now. Coincidentally, the public keys that can be cracked include most of the algorithms that we use to secure the Internet: RSA, Diffie-Hellman, elliptical cryptography, etc. But cryptography based on private keys should not be affected. And there are also cryptosystems for public keys (for example, based on gratings) that no one knows how to crack using QC even after 20+ years of trying, and there are attempts to switch to these systems. For example, see my letter to Rebecca Goldstein .

IN 3. What kind of calculations does Google plan to do (or have already done), which are considered classically complex.

Of course, I can tell you, but I feel silly at the same time. The calculation is this: the experimenter generates a random quantum circuit C (i.e., a random sequence of 1-qubit and 2-qubit - between the nearest neighbors - gates, with a depth of 20, for example, acting on a 2D network n = 50-60 qubits). After that, the experimenter sends C to the quantum computer, and asks him to apply C to the initial state of 0, measure the result in the basis {0,1}, send back the n-bit observable sequence (string), and repeat several thousand or millions of times. Finally, using his knowledge of C, the experimenter performs a statistical check on the compliance of the result with the expected output from a quantum computer.

This task is not from the category of tasks with the only correct answer, unlike factorization of numbers. The result of the operation of scheme C turns out to be a statistical distribution (let's call it D C ) over n-bit strings, from which we need to select samples. In fact, usually D C can correspond to 2 n lines - so many that if the QC works as expected, it will never produce the same line in the output twice. It is important that the distribution of D C is not uniform. Some lines arose as a result of constructive interference of amplitudes and therefore have a higher probability, and others as a result of destructive, and have a lower probability. And although we get only a small fraction of all possible 2 n samples, we can check the distribution of these samples statistically for compliance with the expected uneven distribution, and make sure that we get something that is difficult to reproduce classically.

TL; DR, a quantum computer is asked to apply a random (but known) sequence of quantum operations - not because we are interested in the result, but because we are trying to prove that QC can perform this clearly stated task better than a classical computer.

AT 4. But if a quantum computer just executes some junk code, whose only purpose is to be difficult for classical simulation, who cares? Isn't that a big over-pie with ... nothing?

No. This, of course, is not a pie with everything tasty, but at least a pie with something.

Have at least a bit of respect for the immensity of what we are talking about here, and what kind of engineering genius it took to translate this into reality. Before quantum superiority (KP), KP skeptics simply chuckled that even after billions of dollars and 20+ years of work, a quantum computer still cannot do something faster than your laptop. At least not using quantumness. In the world after achieving quantum supremacy, they no longer laugh. Superposition of 2 50 or 2 60 complex numbers was calculated using a significantly smaller resource of time and data volume than 2 50 or 2 60

I’m talking about the Wright plane only because the gap between what we are talking about and the denial, something I see in different parts of the Internet, is completely incomprehensible. It is as if you believed that it was impossible to fly in principle, and then you saw a flimsy wooden airplane - it may not shake your faith, but it certainly should not strengthen it.

Was I right, worrying many years ago that the constant hype about the much lesser achievements of the KK will tire people and they will give a damn when something really worthwhile finally happens?

AT 5. Many years ago, you reproached the masses for their enthusiasm for D-Wave and their claims of a stunning quantum advantage in optimization problems using quantum annealing. Now you are reproaching them for their lack of enthusiasm for quantum superiority. Why are you so fickle?

Because my goal is not to shift the level of enthusiasm in a clearly chosen direction, but to be right. In hindsight, wasn’t I mostly right about D-Wave, even when my statements made me very unpopular in some circles? Well, now I'm trying to be right about quantum superiority too.

AT 6. If quantum superiority calculations are simply based on samples from a probability distribution, how can one verify that they are made correctly?

Thanks for asking! This is precisely the topic on which I and others have developed many theoretical foundations in the last ten years. I already told the short version in my answer B3: you test this by running the statistical tests on the samples that the quantum computer gives out to verify that they are concentrated around the peaks of the chaotic probability distribution D C. One convenient way to do this, which Google calls the “linear cross-entropy test”, is simply to sum all Pr [C yields s i ] for all samples s 1 , ..., s k that KK returned, and then declare the test successful if and only if the sum exceeds a certain level - say, bk / 2 n , where b is a constant between 1 and 2.

Honestly, in order to apply this test, you need to calculate all the probabilities Pr [C gives s i ] on a classic computer - and the only known way to do this is iterate over a time of ~ 2 n . But does it bother anyone? No, if n = 50, and you google and you can count numbers like 2 50 (although you won’t get 2 1000 , which is superior to google , khe-khe). Having driven out a large cluster of classical cores for, say, a month, you will end up with the result that the CC produced in a couple of seconds - at the same time, make sure that the CC is a couple of orders faster. Nevertheless, this means that experiments based on sampling are almost specially designed for devices with ~ 50 qubits. For even with 100 qubits we have no idea how to assure the result even using all the computing power available on Earth.

(I note separately that this problem is specific only for sampling experiments, similar to what is being carried out now. If you used the Shor algorithm to factor a 2000-digit number, you can easily check the result by simply multiplying all the factors and checking their simplicity. Exactly so if KK is used to simulate a complex biomolecule, you can check the result by experimenting with the molecule.)

AT 7. Wait a minute. If classical computers can only verify the results of an experiment on quantum superiority only in a mode where classical computers can still simulate an experiment (albeit very slowly), how can one speak of “quantum superiority”?

Well then you. With a 53-qubit chip, you see acceleration several million times, and you can still verify the result, and also verify that the acceleration grows exponentially with the number of qubits, just as the asymptotic analysis predicts. This is not insignificant.

B8 Is there any mathematical evidence that no fast classic computer can beat the results of a sampling experiment?

Not at the moment. But this is not the fault of researchers of quantum superiority! As long as theorists cannot even prove basic hypotheses, such as P ≠ NP or P ≠ PSPACE, and without this we cannot unconditionally exclude the possibility of a quick classical simulation. The best we can hope for is conditional complexity. And we have some results about this, for example, an article on boson sampling or an article by Bouldand et al. about the average # P-complexity of calculating the amplitudes of random circuits, or my article with Lijie Chen ("Complexity-Theoretic Foundations of Quantum Supremacy Experiments"). The most important open question in this area, in my opinion, is the proof of the best conditional difficulties.

AT 9. Is there any use for sampling quantum superiority?

Until recently, the answer was obviously no! (And I know, because I myself was one of such people). Recently, however, the situation has changed - for example, thanks to my certified randomness protocol, which shows how KP based on sampling can be independently used to generate bits that can be proved random even for a skeptical third party (under assumptions about calculations). This in turn has an application in cryptocurrency and other cryptographic protocols. I hope more such applications come soon.

AT 10. If experiments on quantum superiority do random bits generate everything, why is this interesting at all? Is it not possible to convert qubits into random bits trivially just by measuring them?

The bottom line is that QC generates non-uniformly distributed random bits. Instead, it creates a selection from some complex, correlated probability distribution over 50-60 bit strings. In my protocol, deviations from uniformity play a central role in how the QC convinces the skeptic that he actually selected random bits, rather than using some kind of pseudo-random generator in secret.

AT 11. Have decades of quantum mechanical experiments, for example, with violation of Bell's inequality, not demonstrated quantum superiority?

This is a purely lexical question. Those experiments demonstrated other types of quantum superiority: for example, in the case of Bell's inequality, they can be called "quantum correlation superiority." They showed no quantum computational superiority, i.e. the ability to find something inaccessible to a classical computer (where classical simulation has no restrictions on spatial locally, etc.). Nowadays, when people use the phrase "quantum superiority", they mean quantum computational superiority.

AT 12. Even so, do not there exist countless examples of materials and chemical reactions that are difficult to simulate classically, and specially constructed quantum simulators (such as those in the Lukin group at Harvard). Why aren't they considered quantum computing superiority?

In the definitions of some people, they are considered! The main difference with a Google computer is that they have a fully programmable computer - you can enter any arbitrary sequence of 2-qubit gates (for neighboring qubits) by simply entering commands from a classic computer.

In other words, KP skeptics can no longer mockingly notice that, of course, quantum systems are difficult to simulate classically, but this is simply because nature is generally difficult to simulate, and you cannot reprogram the random molecule found in the field into a computer to simulate itself yourself. By any reasonable definition, superconducting devices created by Google, IBM and others are computers in the full sense of the word.

B13 Did you (Scott Aaronson) invent the concept of quantum excellence?

No. I played a role in its development, and therefore, for example, Sabina Hosenfelder, among others, mistakenly attributed the authorship of the whole idea to me . The term "quantum superiority" was proposed by John Preskill in 2012, although in a sense, the basic idea appeared at the dawn of quantum contributions in the early 80s. In 1994, using the Shore algorithm to factorize a large number became the main experiment to test quantum superiority, although at the moment this is still too difficult to produce.

The key idea that sampling can be used instead was, as far as I know, first proposed by Barbara Terhal and David DiVincenzo in a 2002 article . The current effort on a sampled CP began around 2011, when Alex Arkhipov and I published our article on bosonic sampling , and Bremner, Jozsa, and Shepherd independently published an article on models of commuting Hamiltonians . These articles showed not only “simple”, not universal quantum systems that can solve obviously complex sampling problems, but also that the existence of an effective classical algorithm would mean a fall in the polynomial hierarchy . Arkhipov and I also laid the foundation for the argument that even approximate versions of quantum sampling can be classically complex.

As far as I know, the idea of “randomly sampling circuits” —that is, generating a complex sampling problem by randomly selecting a sequence of 2-qubit gates in, say, a superconducting architecture –– their correspondence arose, which I started in December 2015, which included John Martinis, Hartmut Neven , Sergio Boixo, Ashley Montanaro, Michael Bremner, Richard Jozsa, Aram Harrow, Greg Kuperberg, and others. The thread was entitled “Challenging Sampling Issues with 40 Qubits,” and the letter began with “Sorry for spam.” In it, I discussed the various advantages and disadvantages of three options for demonstrating a quantum advantage using sampling: (1) random circuits, (2) commuting Hamiltonians, (3) boson sampling.After Greg Cooperberg stood up for option (1), a consensus quickly formed that (1) would really be the best option from an engineering point of view, and that if we do not yet have a satisfactory theoretical justification for (1), we can somehow get around this.

After that, the Google team did a great job analyzing random sampling schemes, both theoretically and numerically, while Lijie Chen and I and Bouland et al. provided different evidence of the classical complexity of this problem in terms of complexity theory.

B14. If quantum superiority is achieved, what does this mean for skeptics?

Wow, I would not want to be in their place now! They may fall back on the position that, well, of course, quantum superiority is possible (and who said otherwise? Well, of course they are not!), And the whole difficulty has always been in quantum error correction. And in fact, some have said so from the very beginning. And others, my good friend Gil Kalai, among them, by writing, right on this blog, predicted that quantum superiority would never be achieved for fundamental reasons. Now they will not be screwed.

B15. So, what is next?

If this is really achieved quantum superiority, then, I think, the Google group has all the hardware to demonstrate my protocol for certified random bit generation. And this is actually one of the following things in their plan.

And besides this, the next obvious step is to use a programmable QC, with 50-100 qubits for some useful quantum simulation (for example, a system with a condensed state of matter) much faster than any classical method. And the second obvious step is to demonstrate the use of error correction to keep the logical qubit alive longer than the lifetime of the physical qubits on which it is based. There is no doubt that IBM, Google and the rest of the players will chase each other for primacy in these steps.

All Articles