GPU Bound. How to transfer everything to the video card and a little more. Animations

Once upon a time, it was a huge event when a multitexturing unit or hardware transformation & lighting (T&L) appeared on the GPU. Setting Fixed Function Pipeline was a magical shamanism. And those who knew how to enable and use the advanced features of specific chips through the D3D9 API hacks, considered themselves to have learned Zen. But time passed, shaders appeared. At first, strongly limited both in functionality and in length. Further, more and more features, more instructions, more speed. Compute (CUDA, OpenCL, DirectCompute) appeared, and the scope of video card capacities began to expand rapidly.

In this series of (hopefully) articles, I will try to explain and show how “unusually” you can apply the capabilities of the modern GPU when developing games, in addition to graphic effects. The first part will be devoted to the animation system. Everything that is described is based on practical experience, implemented and works in real game projects.

Oooo, again the animation. About it a hundred times already written and described. What's so complicated? We pack the matrix of bones in the buffer / texture, and use it for skinning in the vertex shader. This has been described in the GPU Gems 3 (Chapter 2. Animated Crowd Rendering) . And implemented in the recent Unite Tech Presentation . Is it possible in a different way?

A lot of hype, but is it really cool? There is an article on the hub that describes in detail how skeletal animation is made and works in this techno-demo. Parallel job is all good, we do not consider them. But we need to find out what and how there, in terms of rendering.

In a large-scale battle, two armies fight, each consisting ... of one type of unit. Skeletons on the left, knights on the right. Variety is so-so. Each unit consists of 3 LODs (~ 300, ~ 1000, ~ 4000 vertices each), and only 2 bones affect the vertex. The animation system consists of only 7 animations for each type of unit (I recall that there are already 2 of them). Animations do not blend in, but switch discretely from simple code that is executed in job'ax, which is emphasized in the presentation. There is no state machine. When we have two types of mesh, you can draw the whole crowd in two instanced draw call. Skeletal animation, as I already wrote, is based on the technology described back in 2009.

Innovative? Hmm ... a breakthrough? Um ... Suitable for modern games? Well, perhaps, the ratio of FPS to the number of units boast.

The main disadvantages of this approach (pre-matrix in textures):

GPU Powered Animation System. The name just came up.

The new animation system had several requirements:

Let’s rethink the preparation and baking of animations. We will not use matrices. Modern video cards work well with loops, natively support int in addition to float, so we will work with keyframes like on a CPU.

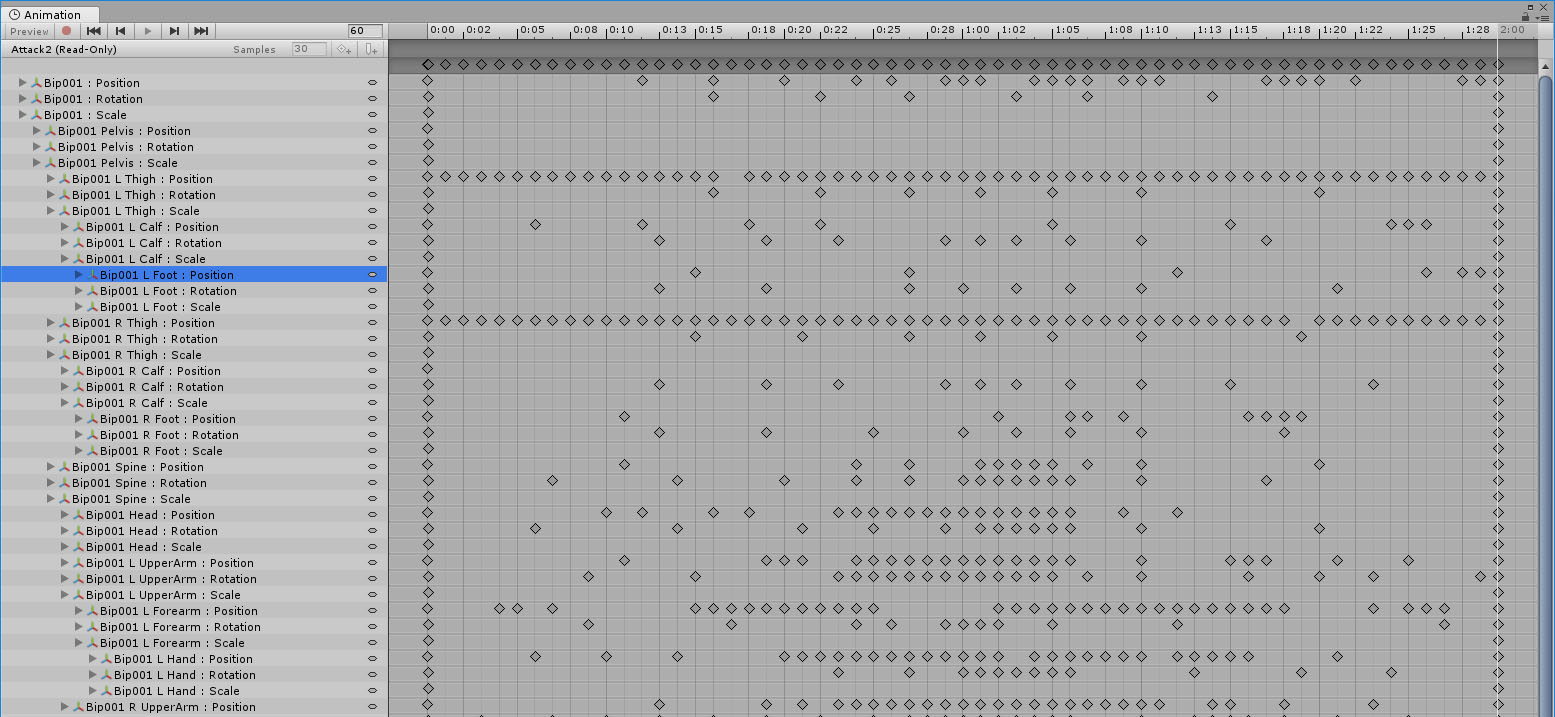

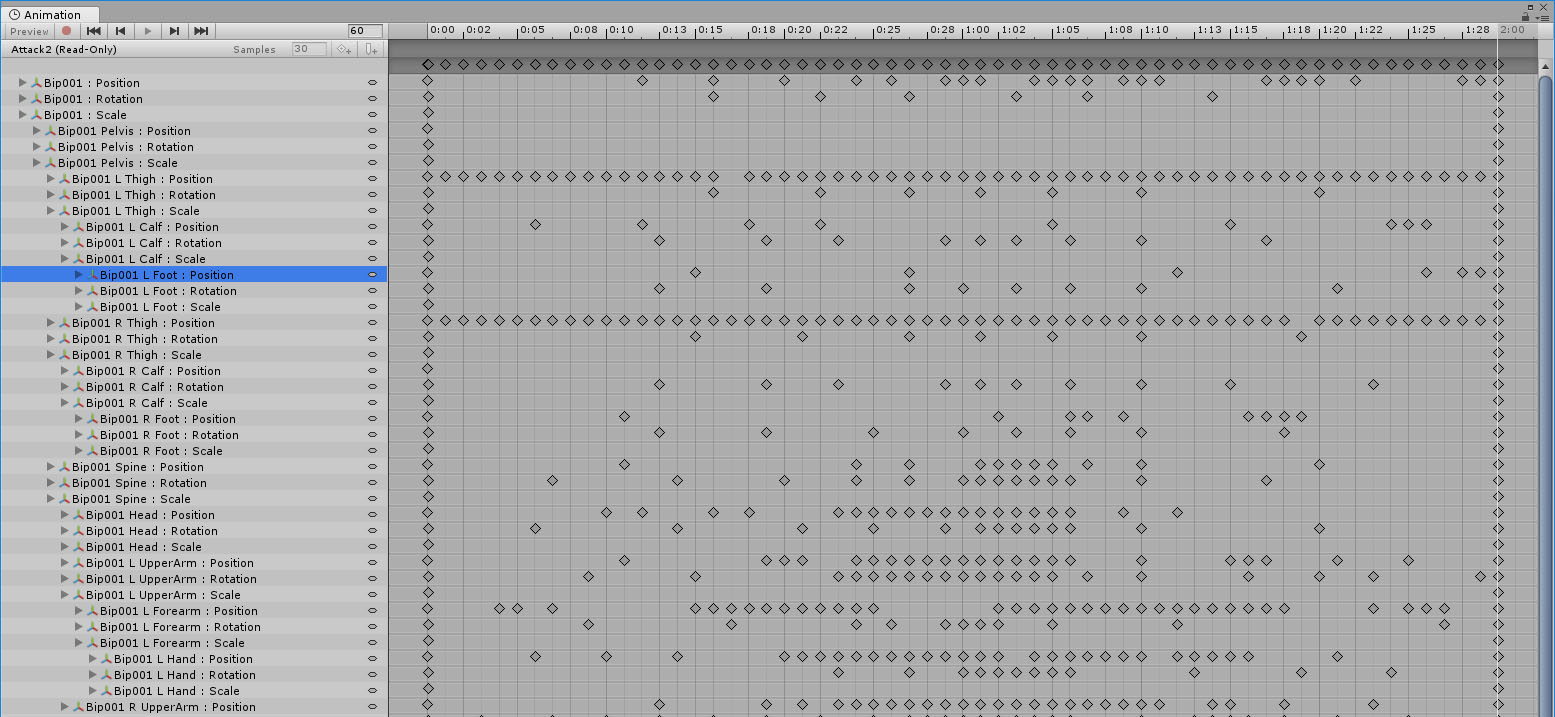

Consider an example of animation in the Animation Viewer:

It can be seen that the keyframes are set separately for position, scale and rotation. For some bones, you need a lot of them, for some only a few, and for those bones that are not animated separately, just the initial and final keyframes are set.

Position - Vector3, quaternion - Vector4, scale - Vector3. The keyframe structure will have one thing in common (for simplification), so we need 4 float to fit any of the above types. We also need InTangent and OutTangent for proper interpolation between keyframes according to curvature. Oh yes, and the normalized time is not to forget:

To get all the keyframes, use AnimationUtility.GetEditorCurve ().

Also, we must remember the names of the bones, since it will be necessary to remap the bones of the animation in the bones of the skeleton (and they may not coincide) at the stage of preparing the hcp data.

Filling linear buffers with arrays of keyframes, we will remember the offsets in them in order to find those that relate to the animation we need.

Now interesting. GPU skeleton animation.

We prepare a large (“number of animated skeletons” X “number of bones in the skeleton” X “empirical coefficient of the maximum number of animation blends”) buffer. In it we will store the position, rotation and scale of the bone at the moment of the animation. And for all the planned animated bones in this frame, run compute shader. Each thread animates its bone.

Each keyframe, regardless of what size it belongs to (Translate, Rotation, Scale), is interpolated in exactly the same way (search by linear search, forgive me Knuth):

The curve is a cubic Bezier curve, so the interpolation function will be as follows:

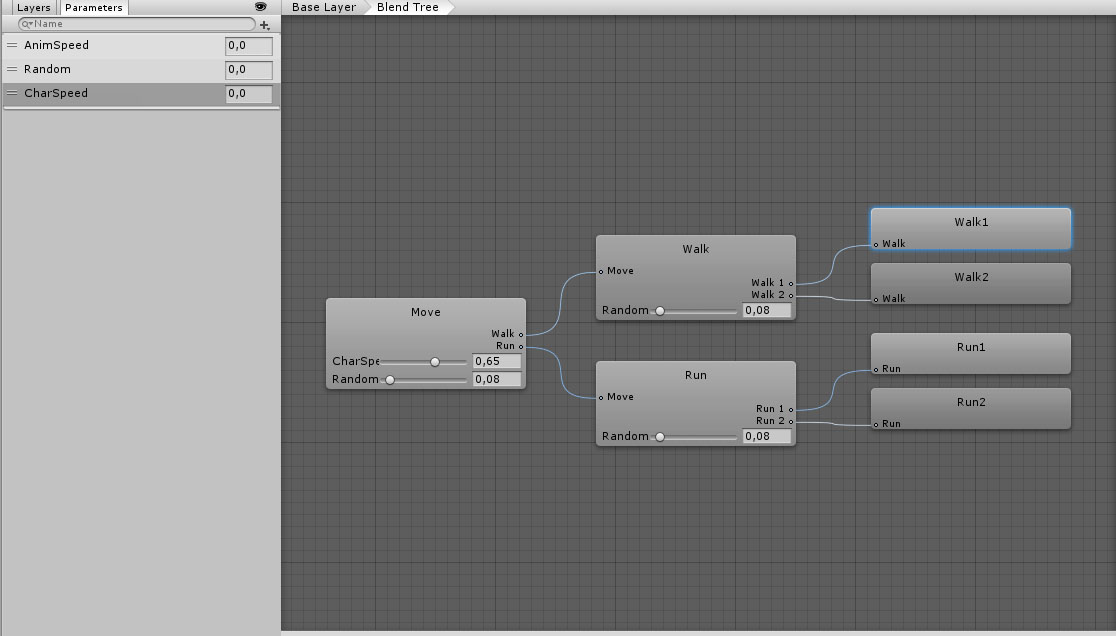

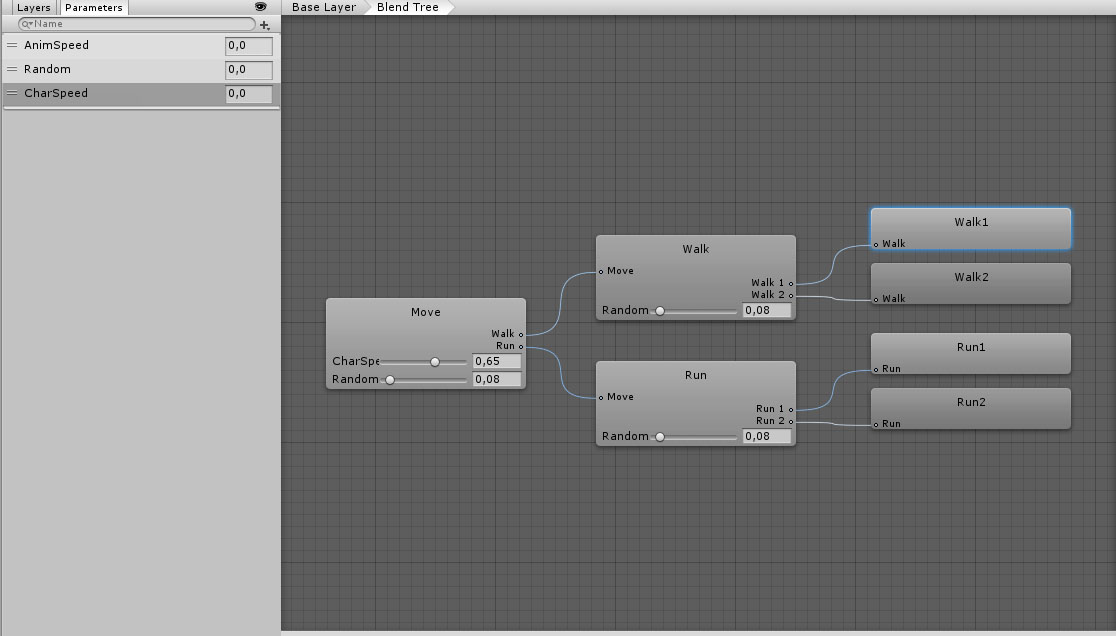

The local bone position (TRS) was calculated. Next, with a separate compute shader, we blend all the necessary animations for this bone. To do this, we have a buffer with animation indices and weights of each animation in the final blend. We get this information from the state machine. The situation of BlendTree inside BlendTree is solved as follows. For example, there is a tree:

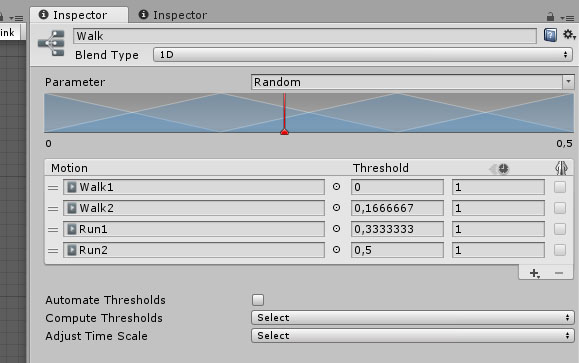

BlendTree Walk will have a weight of 0.35, Run - 0.65. Accordingly, the final position of the bones should be determined by 4 animations: Walk1, Walk2, Run1 and Run2. Their weights will have values (0.35 * 0.92, 0.35 * 0.08, 0.65 * 0.92, 0.65 * 0.08) = (0.322, 0.028, 0.598, 0.052), respectively. It should be noted that the sum of the weights should always be equal to one, or magic bugs are provided.

The "heart" of the blending function:

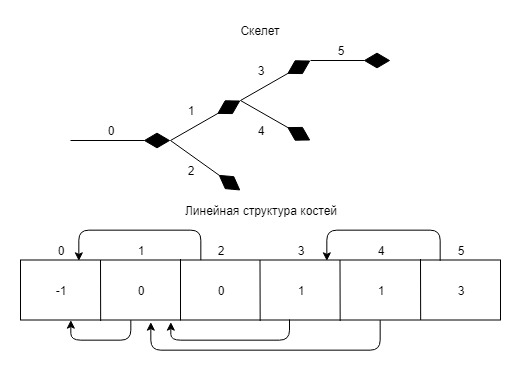

Now you can translate into a transformation matrix. Stop. About the hierarchy of bones completely forgotten.

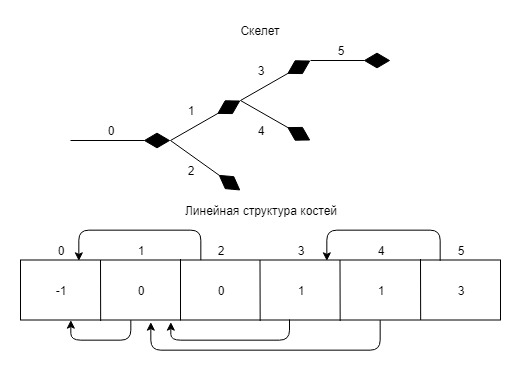

Based on the data from the skeleton, we construct an array of indices, where the cell with the bone index contains the index of its parent. In root, write -1.

Example:

Here, in principle, all the main points of rendering and blending animations.

GPU Powered State Machine (you guessed it right). The animation system described above would work just fine with the Unity Animation State Machine, but then all efforts would be worthless. With the possibility of calculating tens (if not hundreds) of thousands of animations per frame, UnityAnimator will not pull out thousands of simultaneously working state machines. Hm ...

What is a state machine in Unity? This is a closed system of states and transitions, which is controlled by simple numerical properties. Each state machine operates independently of each other, and on the same set of input data. Wait a minute. This is an ideal task for GPUs and compute shaders!

Baking phase

First, we need to collect and place all state machine data in a GPU friendly structure. And this: states (states), transitions (transitions) and parameters (parameters).

All this data is placed in linear buffers, and addressed by indexes.

Each compute thread considers its state machine. AnimatorController provides an interface to all the necessary internal state machine structures.

The main structures of the state machine:

Runtime phase

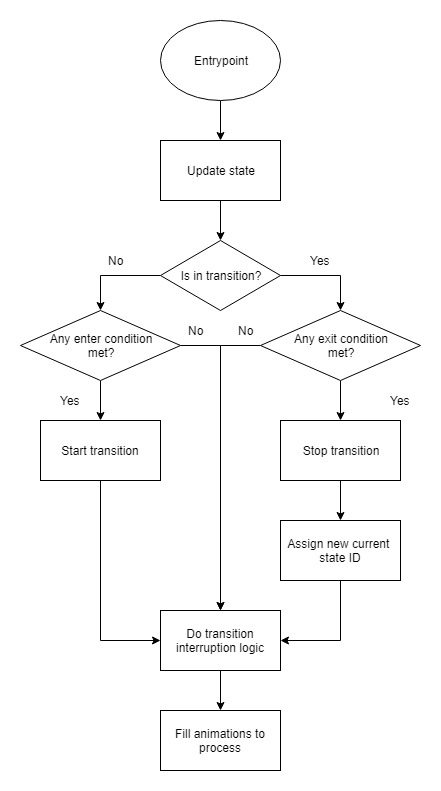

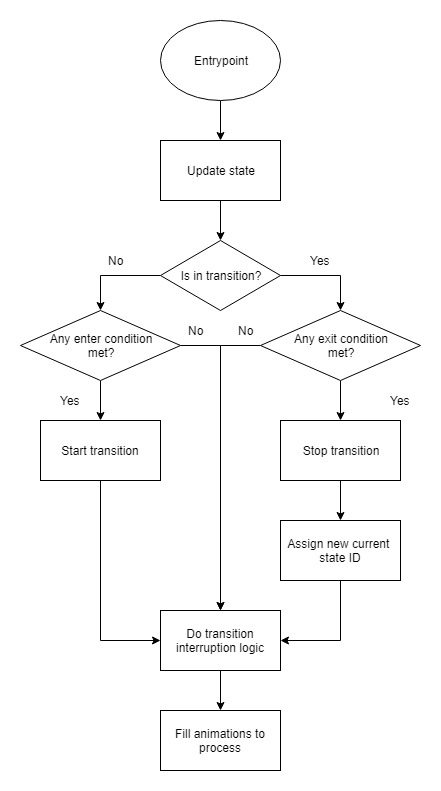

The main cycle of each state machine can be displayed using the following algorithm:

There are 4 types of parameters in Unity animator: float, int, bool and trigger (which is bool). We will present all of them as float. When setting up the conditions, it is possible to choose one of six types of comparison. If == Equals. IfNot == NotEqual. So we will use only 4. The operator index is passed to the checkMode field of the Condition structure.

To start the transition, all conditions must be true. The weird case labels are just (int) AnimatorConditionMode. Interruption logic is a tricky logic to interrupt and roll back transitions.

After we updated the state of the state machine and scrolled the time stamps on the delta t frame, it’s time to prepare data about what animations should be read in this frame and the corresponding weights. This step is skipped if the unit model is not in the frame (Frustum culled). Why should we consider animations of what is not visible? We go over the blend tree source state, the blend tree destination state, add all the animations from them, and calculate the weights by the normalized time from the source to destination (time spent in transition). With prepared data, GPAS enters the case, and counts animations for each animated entity in the game.

The unit control parameters come from the unit control logic. For example, you need to enable running, set the CharSpeed parameter, and a correctly configured state machine smoothly blends transition animations from “walking” to “running”.

Naturally, the complete analogy with Unity Animator did not work. Internal principles of work, if they are not described in the documentation, had to be reversed and an analogue made. Some functionality has not yet been completed (it may not be). For example, BlendType in BlendTree only supports 1D. To make other types, in principle, is not difficult, just now it is not necessary. There are no animation events, since it is necessary to do readback with the GPU, and the “correct” readback will be several frames behind, which is not always acceptable. But it is also possible.

Unit rendering is done through instancing. According to SV_InstanceID, in the vertex shader, we get the matrix of all the bones that affect the vertex, and transform it. Absolutely nothing out of the ordinary:

Does this farm work fast? Obviously slower than sampling the texture with matrices, but, nevertheless, I can show some numbers (GTX 970).

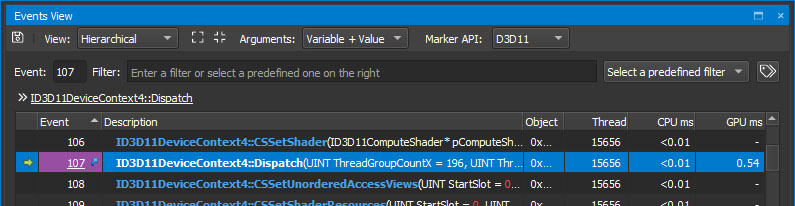

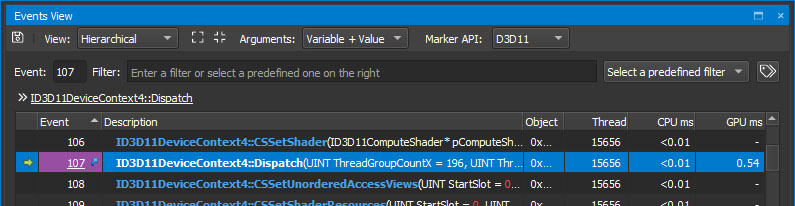

Here are 50,000 state machines:

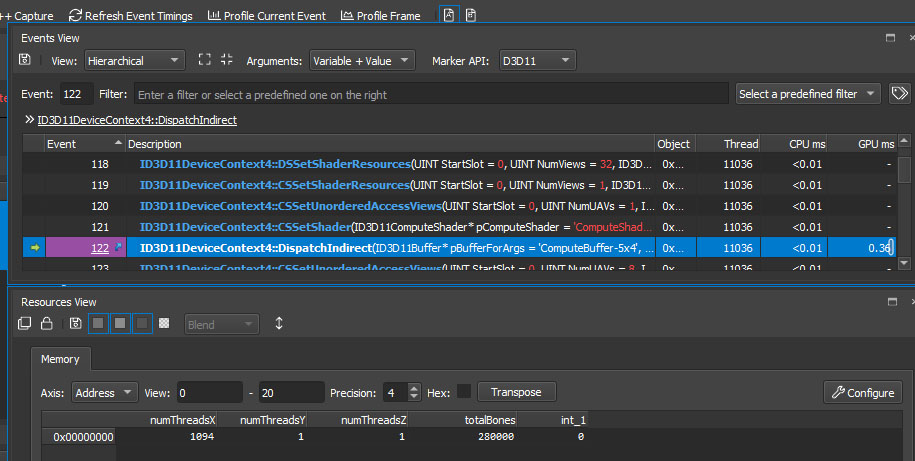

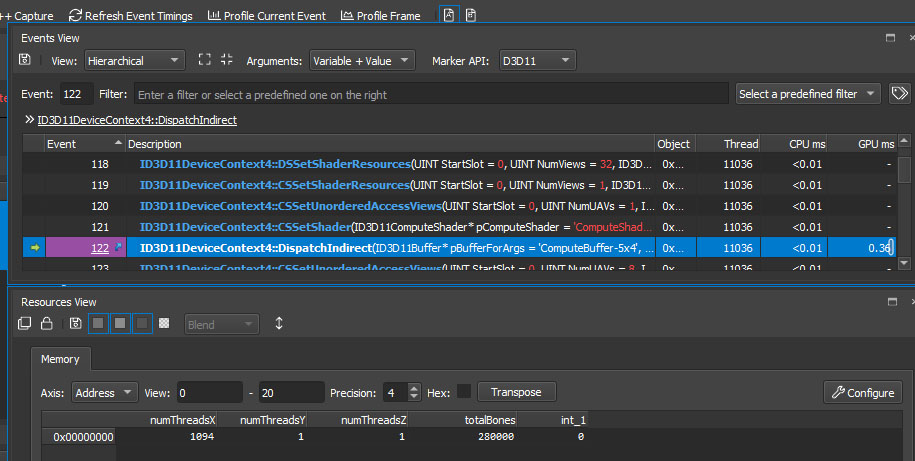

Here are 280,000 animated bones:

Designing and debugging all of this is a real pain. A bunch of buffers and offsets. A bunch of components and their interactions. There were times when hands fell when you beat your head about a problem for several days, but you cannot find what the problem is. It’s especially “pleasant” when everything works as it should on the test data, but in a real “combat” situation there isn’t any kind of animation glitch. Discrepancies between the operation of Unity state machines and their own are also not immediately visible. In general, if you decide to make an analog for yourself, then I do not envy you. Actually, the whole development under the GPU is such, why complain.

PS I want to throw a stone in the garden of Unite TechDemo developers. They have on stage a large number of identical models of ruins and bridges, and they did not optimize their rendering in any way. Rather, they tried by ticking “static”. Only now, in 16 bit indexes you can’t cram a lot of geometry (three times haha, 2017) and nothing has come together, since the models are highly polygonal. I put “Enable Instancing” for all shaders, and unchecked “Static”. There was no tangible boost, but, damn it, you’re doing a techno-demo, fighting for every FPS. You can’t crap like that.

PPS At all times, games were mainly CPU bound, i.e. The CPU did not keep up with the GPU. Too much logic and physics. Transferring part of the game logic from the CPU to the GPU, we unload the first and load the second, i.e. make the situation of GPU bound more likely. Hence the title of the article.

In this series of (hopefully) articles, I will try to explain and show how “unusually” you can apply the capabilities of the modern GPU when developing games, in addition to graphic effects. The first part will be devoted to the animation system. Everything that is described is based on practical experience, implemented and works in real game projects.

Oooo, again the animation. About it a hundred times already written and described. What's so complicated? We pack the matrix of bones in the buffer / texture, and use it for skinning in the vertex shader. This has been described in the GPU Gems 3 (Chapter 2. Animated Crowd Rendering) . And implemented in the recent Unite Tech Presentation . Is it possible in a different way?

Technodemka from Unity

A lot of hype, but is it really cool? There is an article on the hub that describes in detail how skeletal animation is made and works in this techno-demo. Parallel job is all good, we do not consider them. But we need to find out what and how there, in terms of rendering.

In a large-scale battle, two armies fight, each consisting ... of one type of unit. Skeletons on the left, knights on the right. Variety is so-so. Each unit consists of 3 LODs (~ 300, ~ 1000, ~ 4000 vertices each), and only 2 bones affect the vertex. The animation system consists of only 7 animations for each type of unit (I recall that there are already 2 of them). Animations do not blend in, but switch discretely from simple code that is executed in job'ax, which is emphasized in the presentation. There is no state machine. When we have two types of mesh, you can draw the whole crowd in two instanced draw call. Skeletal animation, as I already wrote, is based on the technology described back in 2009.

Innovative? Hmm ... a breakthrough? Um ... Suitable for modern games? Well, perhaps, the ratio of FPS to the number of units boast.

The main disadvantages of this approach (pre-matrix in textures):

- Frame rate dependent. Wanted twice as many frames of animation - give twice as much memory.

- Lack of blending animations. Of course, they can be made, but in the skin shader a complex mess will form from the blending logic.

- Lack of binding to the Unity Animator state machine. A convenient tool for customizing the character’s behavior, which can be connected to any skinning system, but in our case, due to point 2, everything becomes very complicated (imagine how to blend nested BlendTree).

GPAS

GPU Powered Animation System. The name just came up.

The new animation system had several requirements:

- Work fast (well, understandable). You need to animate tens of thousands of different units.

- Be a complete (or nearly) analogue of the Unity animation system. If there the animation looks like this, then in the new system it should look exactly the same. Ability to switch between built-in CPU and GPU systems. This is often necessary for debugging. When the animations are “buggy”, then by switching to the classic animator you can understand: these are the glitches of the new system, or the state machine / animation itself.

- All animations are customizable in Unity Animator. A convenient, tested, and most importantly ready-to-use tool. We will build bikes elsewhere.

Let’s rethink the preparation and baking of animations. We will not use matrices. Modern video cards work well with loops, natively support int in addition to float, so we will work with keyframes like on a CPU.

Consider an example of animation in the Animation Viewer:

It can be seen that the keyframes are set separately for position, scale and rotation. For some bones, you need a lot of them, for some only a few, and for those bones that are not animated separately, just the initial and final keyframes are set.

Position - Vector3, quaternion - Vector4, scale - Vector3. The keyframe structure will have one thing in common (for simplification), so we need 4 float to fit any of the above types. We also need InTangent and OutTangent for proper interpolation between keyframes according to curvature. Oh yes, and the normalized time is not to forget:

struct KeyFrame { float4 v; float4 inTan, outTan; float time; };

To get all the keyframes, use AnimationUtility.GetEditorCurve ().

Also, we must remember the names of the bones, since it will be necessary to remap the bones of the animation in the bones of the skeleton (and they may not coincide) at the stage of preparing the hcp data.

Filling linear buffers with arrays of keyframes, we will remember the offsets in them in order to find those that relate to the animation we need.

Now interesting. GPU skeleton animation.

We prepare a large (“number of animated skeletons” X “number of bones in the skeleton” X “empirical coefficient of the maximum number of animation blends”) buffer. In it we will store the position, rotation and scale of the bone at the moment of the animation. And for all the planned animated bones in this frame, run compute shader. Each thread animates its bone.

Each keyframe, regardless of what size it belongs to (Translate, Rotation, Scale), is interpolated in exactly the same way (search by linear search, forgive me Knuth):

void InterpolateKeyFrame(inout float4 rv, int startIdx, int endIdx, float t) { for (int i = startIdx; i < endIdx; ++i) { KeyFrame k0 = keyFrames[i + 0]; KeyFrame k1 = keyFrames[i + 1]; float lerpFactor = (t - k0.time) / (k1.time - k0.time); if (lerpFactor < 0 || lerpFactor > 1) continue; rv = CurveInterpoate(k0, k1, lerpFactor); break; } }

The curve is a cubic Bezier curve, so the interpolation function will be as follows:

float4 CurveInterpoate(KeyFrame v0, KeyFrame v1, float t) { float dt = v1.time - v0.time; float4 m0 = v0.outTan * dt; float4 m1 = v1.inTan * dt; float t2 = t * t; float t3 = t2 * t; float a = 2 * t3 - 3 * t2 + 1; float b = t3 - 2 * t2 + t; float c = t3 - t2; float d = -2 * t3 + 3 * t2; float4 rv = a * v0.v + b * m0 + c * m1 + d * v1.v; return rv; }

The local bone position (TRS) was calculated. Next, with a separate compute shader, we blend all the necessary animations for this bone. To do this, we have a buffer with animation indices and weights of each animation in the final blend. We get this information from the state machine. The situation of BlendTree inside BlendTree is solved as follows. For example, there is a tree:

BlendTree Walk will have a weight of 0.35, Run - 0.65. Accordingly, the final position of the bones should be determined by 4 animations: Walk1, Walk2, Run1 and Run2. Their weights will have values (0.35 * 0.92, 0.35 * 0.08, 0.65 * 0.92, 0.65 * 0.08) = (0.322, 0.028, 0.598, 0.052), respectively. It should be noted that the sum of the weights should always be equal to one, or magic bugs are provided.

The "heart" of the blending function:

float bw = animDef.blendWeight; BoneXForm boneToBlend = animatedBones[srcBoneIndex]; float4 q = boneToBlend.quat; float3 t = boneToBlend.translate; float3 s = boneToBlend.scale; if (dot(resultBone.quat, q) < 0) q = -q; resultBone.translate += t * bw; resultBone.quat += q * bw; resultBone.scale += s * bw;

Now you can translate into a transformation matrix. Stop. About the hierarchy of bones completely forgotten.

Based on the data from the skeleton, we construct an array of indices, where the cell with the bone index contains the index of its parent. In root, write -1.

Example:

float4x4 animMat = IdentityMatrix(); float4x4 mat = initialPoses[boneId]; while (boneId >= 0) { BoneXForm b = blendedBones[boneId]; float4x4 xform = MakeTransformMatrix(b.translate, b.quat, b.scale); animMat = mul(animMat, xform); boneId = bonesHierarchyIndices[boneId]; } mat = mul(mat, animMat); resultSkeletons[id] = mat;

Here, in principle, all the main points of rendering and blending animations.

GPSM

GPU Powered State Machine (you guessed it right). The animation system described above would work just fine with the Unity Animation State Machine, but then all efforts would be worthless. With the possibility of calculating tens (if not hundreds) of thousands of animations per frame, UnityAnimator will not pull out thousands of simultaneously working state machines. Hm ...

What is a state machine in Unity? This is a closed system of states and transitions, which is controlled by simple numerical properties. Each state machine operates independently of each other, and on the same set of input data. Wait a minute. This is an ideal task for GPUs and compute shaders!

Baking phase

First, we need to collect and place all state machine data in a GPU friendly structure. And this: states (states), transitions (transitions) and parameters (parameters).

All this data is placed in linear buffers, and addressed by indexes.

Each compute thread considers its state machine. AnimatorController provides an interface to all the necessary internal state machine structures.

The main structures of the state machine:

struct State { float speed; int firstTransition; int numTransitions; int animDefId; }; struct Transition { float exitTime; float duration; int sourceStateId; int targetStateId; int firstCondition; int endCondition; uint properties; }; struct StateData { int id; float timeInState; float animationLoop; }; struct TransitionData { int id; float timeInTransition; }; struct CurrentState { StateData srcState, dstState; TransitionData transition; }; struct AnimationDef { uint animId; int nextAnimInTree; int parameterIdx; float lengthInSec; uint numBones; uint loop; }; struct ParameterDef { float2 line0ab, line1ab; int runtimeParamId; int nextParameterId; }; struct Condition { int checkMode; int runtimeParamIndex; float referenceValue; };

- State contains the speed at which the state is played, and the indices of the conditions of transition to others according to the state machine.

- Transition contains state indices “from” and “to”. Transition time, exit time, and a link to an array of conditions for entering this state.

- CurrentState is a runtime data block with data on the current state of the state machine.

- AnimationDef contains a description of the animation with links to others related to it by BlendTree.

- ParameterDef is a description of the parameter that controls the behavior of the state machines. Line0ab and Line1ab are the coefficients of the equation of the lines, to determine the weight of the animation by the value of the parameter. From here:

- Condition - the specification of the condition for comparing the runtime value of the parameter and the reference value.

Runtime phase

The main cycle of each state machine can be displayed using the following algorithm:

There are 4 types of parameters in Unity animator: float, int, bool and trigger (which is bool). We will present all of them as float. When setting up the conditions, it is possible to choose one of six types of comparison. If == Equals. IfNot == NotEqual. So we will use only 4. The operator index is passed to the checkMode field of the Condition structure.

for (int i = t.firstCondition; i < t.endCondition; ++i) { Condition c = allConditions[i]; float paramValue = runtimeParameters[c.runtimeParamIndex]; switch (c.checkMode) { case 3: if (paramValue < c.referenceValue) return false; case 4: if (paramValue > c.referenceValue) return false; case 6: if (abs(paramValue - c.referenceValue) > 0.001f) return false; case 7: if (abs(paramValue - c.referenceValue) < 0.001f) return false; } } return true;

To start the transition, all conditions must be true. The weird case labels are just (int) AnimatorConditionMode. Interruption logic is a tricky logic to interrupt and roll back transitions.

After we updated the state of the state machine and scrolled the time stamps on the delta t frame, it’s time to prepare data about what animations should be read in this frame and the corresponding weights. This step is skipped if the unit model is not in the frame (Frustum culled). Why should we consider animations of what is not visible? We go over the blend tree source state, the blend tree destination state, add all the animations from them, and calculate the weights by the normalized time from the source to destination (time spent in transition). With prepared data, GPAS enters the case, and counts animations for each animated entity in the game.

The unit control parameters come from the unit control logic. For example, you need to enable running, set the CharSpeed parameter, and a correctly configured state machine smoothly blends transition animations from “walking” to “running”.

Naturally, the complete analogy with Unity Animator did not work. Internal principles of work, if they are not described in the documentation, had to be reversed and an analogue made. Some functionality has not yet been completed (it may not be). For example, BlendType in BlendTree only supports 1D. To make other types, in principle, is not difficult, just now it is not necessary. There are no animation events, since it is necessary to do readback with the GPU, and the “correct” readback will be several frames behind, which is not always acceptable. But it is also possible.

Render

Unit rendering is done through instancing. According to SV_InstanceID, in the vertex shader, we get the matrix of all the bones that affect the vertex, and transform it. Absolutely nothing out of the ordinary:

float4 ApplySkin(float3 v, uint vertexID, uint instanceID) { BoneInfoPacked bip = boneInfos[vertexID]; BoneInfo bi = UnpackBoneInfo(bip); SkeletonInstance skelInst = skeletonInstances[instanceID]; int bonesOffset = skelInst.boneOffset; float4x4 animMat = 0; for (int i = 0; i < 4; ++i) { float bw = bi.boneWeights[i]; if (bw > 0) { uint boneId = bi.boneIDs[i]; float4x4 boneMat = boneMatrices[boneId + bonesOffset]; animMat += boneMat * bw; } } float4 rv = float4(v, 1); rv = mul(rv, animMat); return rv; }

Summary

Does this farm work fast? Obviously slower than sampling the texture with matrices, but, nevertheless, I can show some numbers (GTX 970).

Here are 50,000 state machines:

Here are 280,000 animated bones:

Designing and debugging all of this is a real pain. A bunch of buffers and offsets. A bunch of components and their interactions. There were times when hands fell when you beat your head about a problem for several days, but you cannot find what the problem is. It’s especially “pleasant” when everything works as it should on the test data, but in a real “combat” situation there isn’t any kind of animation glitch. Discrepancies between the operation of Unity state machines and their own are also not immediately visible. In general, if you decide to make an analog for yourself, then I do not envy you. Actually, the whole development under the GPU is such, why complain.

PS I want to throw a stone in the garden of Unite TechDemo developers. They have on stage a large number of identical models of ruins and bridges, and they did not optimize their rendering in any way. Rather, they tried by ticking “static”. Only now, in 16 bit indexes you can’t cram a lot of geometry (three times haha, 2017) and nothing has come together, since the models are highly polygonal. I put “Enable Instancing” for all shaders, and unchecked “Static”. There was no tangible boost, but, damn it, you’re doing a techno-demo, fighting for every FPS. You can’t crap like that.

It was

*** Summary *** Draw calls: 2553 Dispatch calls: 0 API calls: 8378 Index/vertex bind calls: 2992 Constant bind calls: 648 Sampler bind calls: 395 Resource bind calls: 805 Shader set calls: 682 Blend set calls: 230 Depth/stencil set calls: 92 Rasterization set calls: 238 Resource update calls: 1017 Output set calls: 74 API:Draw/Dispatch call ratio: 3.28163 298 Textures - 1041.01 MB (1039.95 MB over 32x32), 42 RTs - 306.94 MB. Avg. tex dimension: 1811.77x1810.21 (2016.63x2038.98 over 32x32) 216 Buffers - 180.11 MB total 17.54 MB IBs 159.81 MB VBs. 1528.06 MB - Grand total GPU buffer + texture load. *** Draw Statistics *** Total calls: 2553, instanced: 2, indirect: 2 Instance counts: 1: 2: 3: 4: 5: 6: 7: 8: 9: 10: 11: 12: 13: 14: >=15: ******************************************************************************************************************************** (2)

Has become

*** Summary *** Draw calls: 1474 Dispatch calls: 0 API calls: 11106 Index/vertex bind calls: 3647 Constant bind calls: 1039 Sampler bind calls: 348 Resource bind calls: 718 Shader set calls: 686 Blend set calls: 230 Depth/stencil set calls: 110 Rasterization set calls: 258 Resource update calls: 1904 Output set calls: 74 API:Draw/Dispatch call ratio: 7.5346 298 Textures - 1041.01 MB (1039.95 MB over 32x32), 42 RTs - 306.94 MB. Avg. tex dimension: 1811.77x1810.21 (2016.63x2038.98 over 32x32) 427 Buffers - 93.30 MB total 9.81 MB IBs 80.51 MB VBs. 1441.25 MB - Grand total GPU buffer + texture load. *** Draw Statistics *** Total calls: 1474, instanced: 391, indirect: 2 Instance counts: 1: 2: ******************************************************************************************************************************** (104) 3: ************************************************* (40) 4: ********************** (18) 5: ****************************** (25) 6: ********************************************************************************************* (76) 7: *********************************** (29) 8: ************************************************** (41) 9: ********* (8) 10: ************** (12) 11: 12: ****** (5) 13: ******* (6) 14: ** (2) >=15: ****************************** (25)

PPS At all times, games were mainly CPU bound, i.e. The CPU did not keep up with the GPU. Too much logic and physics. Transferring part of the game logic from the CPU to the GPU, we unload the first and load the second, i.e. make the situation of GPU bound more likely. Hence the title of the article.

All Articles