“Alice, let's go to the front end!”

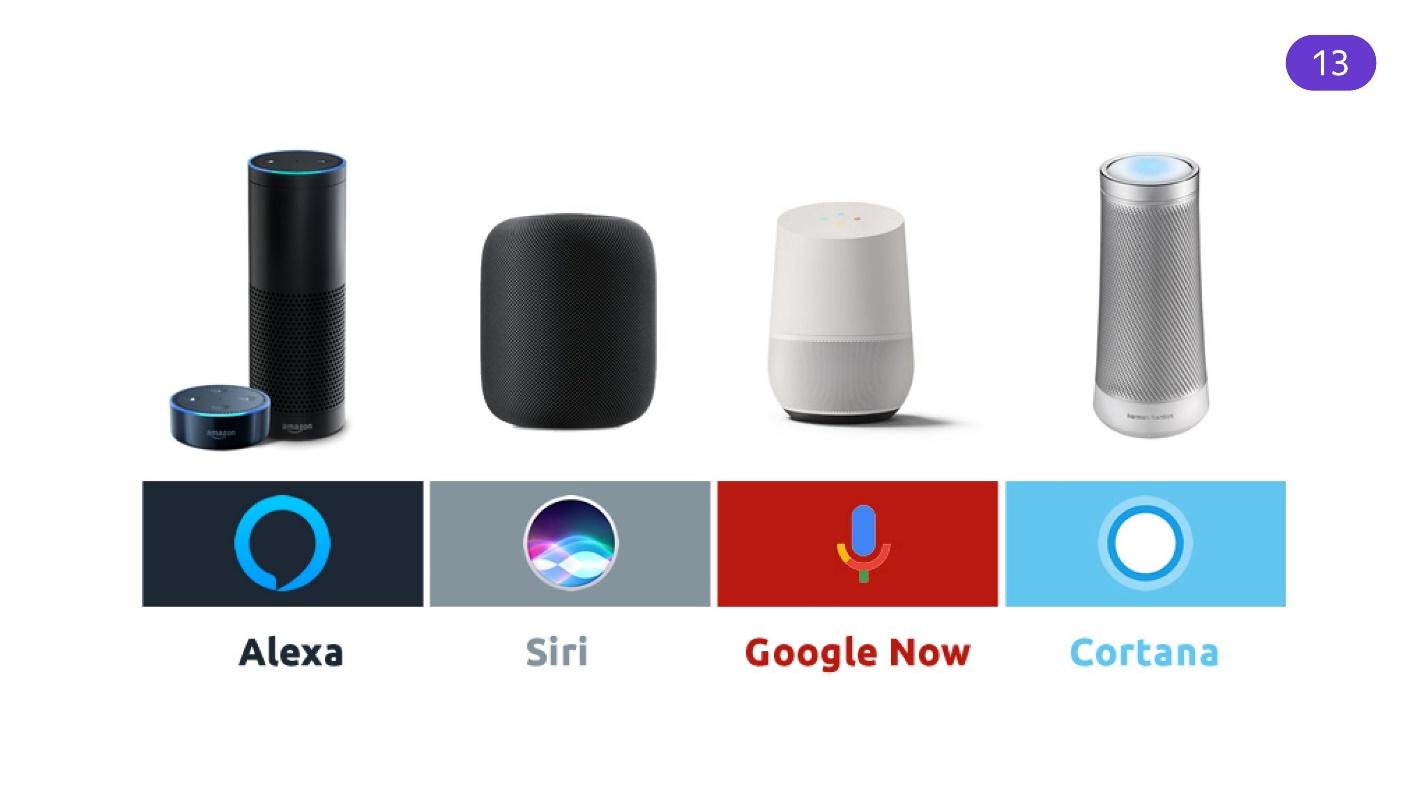

Voice assistants are not a distant future but a reality. Alexa, Siri, Google Now and Alice are built into smart speakers, watches and phones, and they are gradually changing the way we interact with applications and devices. Through an assistant you can check the weather forecast, buy plane tickets, order a taxi, listen to music, or turn on the kettle in the kitchen while lying on the couch in another room.

Siri and Alexa speak mostly English, so in Russia they are not as popular as Yandex's Alice. Alice is also more convenient for developers: her creators keep a blog, publish handy tools on GitHub, and help build the assistant into new devices.

Nikita Dubko (@dark_mefody on Twitter) is an interface developer at Yandex, an organizer of the MinskCSS and MinskJS meetups, and a news editor at Web Standards. Nikita does not work on Yandex.Dialogs and has no connection to the Alice team. He was curious about how Alice works, though, so he tried applying her skills to the Web and gave a talk about it at FrontendConf RIT++. In this transcript of Nikita's talk, we will look at what voice assistants can usefully offer and build a skill as you read.

Bots

Let's start with the history of bots. In 1966 the ELIZA bot appeared, posing as a psychotherapist. People could chat with it, and some even believed a living person was answering. In 1995 the ALICE bot came out (not to be confused with Alice). It could pass itself off as a real person, and it is still open source and still being improved. ALICE does not pass the Turing test, but that does not stop it from misleading people.

In 2006, IBM packed a huge knowledge base and sophisticated intelligence into a bot, and that is how IBM Watson came about. It is a huge computing cluster that can process English and answer with facts.

In 2016, Microsoft ran an experiment. It created the Tay bot and launched it on Twitter, where the bot learned to tweet from the way real followers interacted with it. As a result, Tay became racist and misogynistic, and the account is now closed. The moral: don't let children on Twitter, it can teach them bad things.

But you can't talk to any of these bots for your own benefit. In 2015, useful bots appeared on Telegram. Other platforms had bots before, but Telegram made a splash. You could build a useful bot that provides information, generates content or administers channels. The possibilities were great and the API was simple. Bots gained pictures, buttons and hints, and an interaction interface appeared.

Gradually the idea spread to almost all messengers: Facebook, Viber, VKontakte, WhatsApp and other apps. Bots are now a trend and they are everywhere. There are services that let you build a bot for all platforms at once.

Voice assistants

Voice assistants developed in parallel with bots, but let's assume that their era came later.

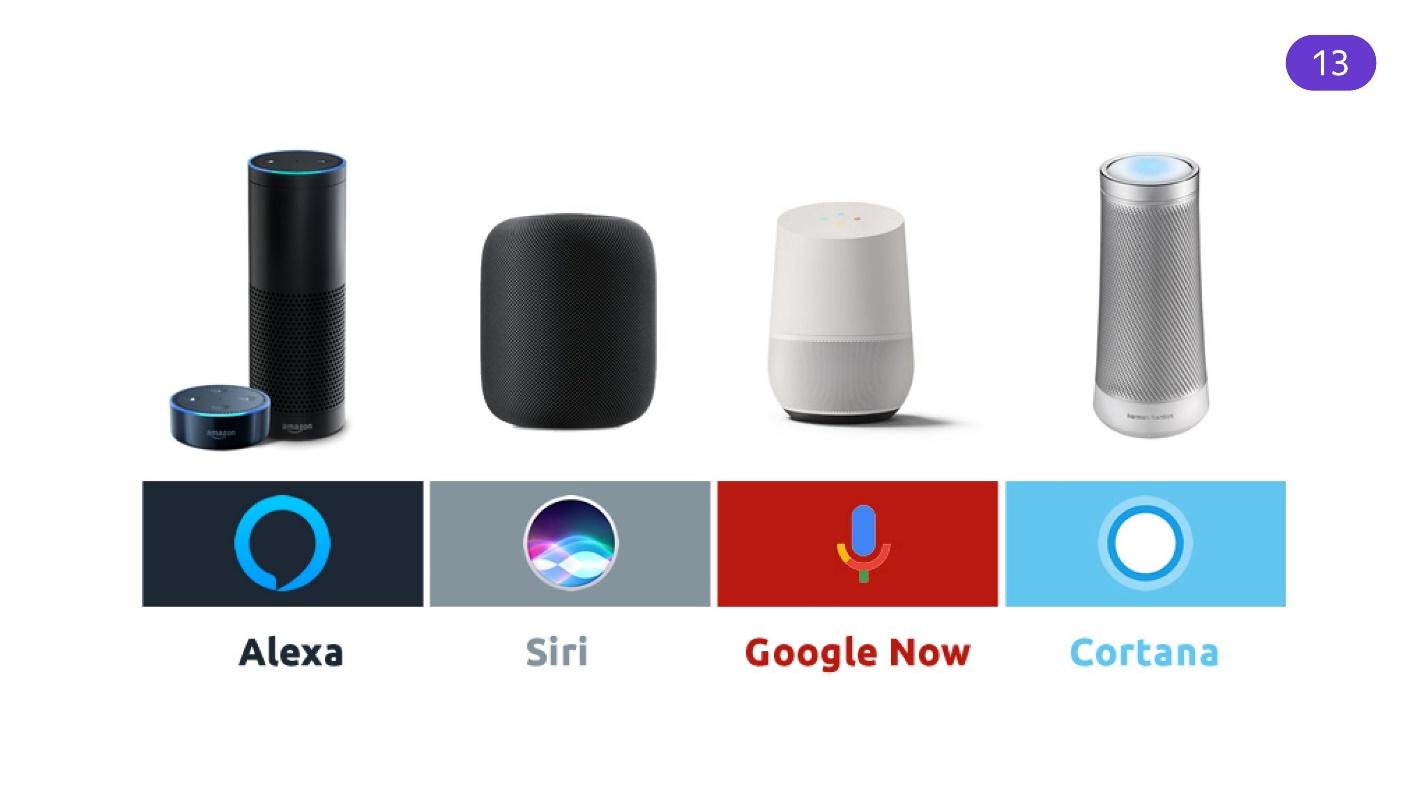

On August 9, 2011, Siri appeared. It started as an independent project; Apple saw something interesting in it and bought it. It is the oldest popular voice assistant built into an operating system. A year later, Google caught up with Apple by integrating the Google Now voice assistant into its OS.

Two years later, Microsoft released Cortana. It is not clear why: Microsoft seems to have already missed the mobile voice assistant market. The company tried to bring a voice assistant to desktop systems when the battle for other devices was already under way. Amazon Alexa came out a little later that same year.

Assistants evolved. Beyond software that could work with voice, smart speakers with built-in assistants appeared. According to statistics, at the beginning of 2019 one in three US households had a smart speaker. This is a huge market worth investing in.

But there is a problem: foreign assistants handle Russian poorly. They are built for English and understand it well, but in Russian they run into difficulties. Languages differ and need different approaches to natural language processing.

Alice

Alice was released in open beta on October 10, 2017. She is built for Russian, and that is her huge advantage. Alice also understands English, but not as well.

Alice's mission is to help Russian-speaking users.

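Yandex is a large company and can afford to embed Alice in every one of its applications that can talk:

- Yandex.Browser.

- Yandex.Navigator.

- Yandex.Station.

- Yandex.Phone.

- Yandex.Auto.

- Yandex.Drive.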

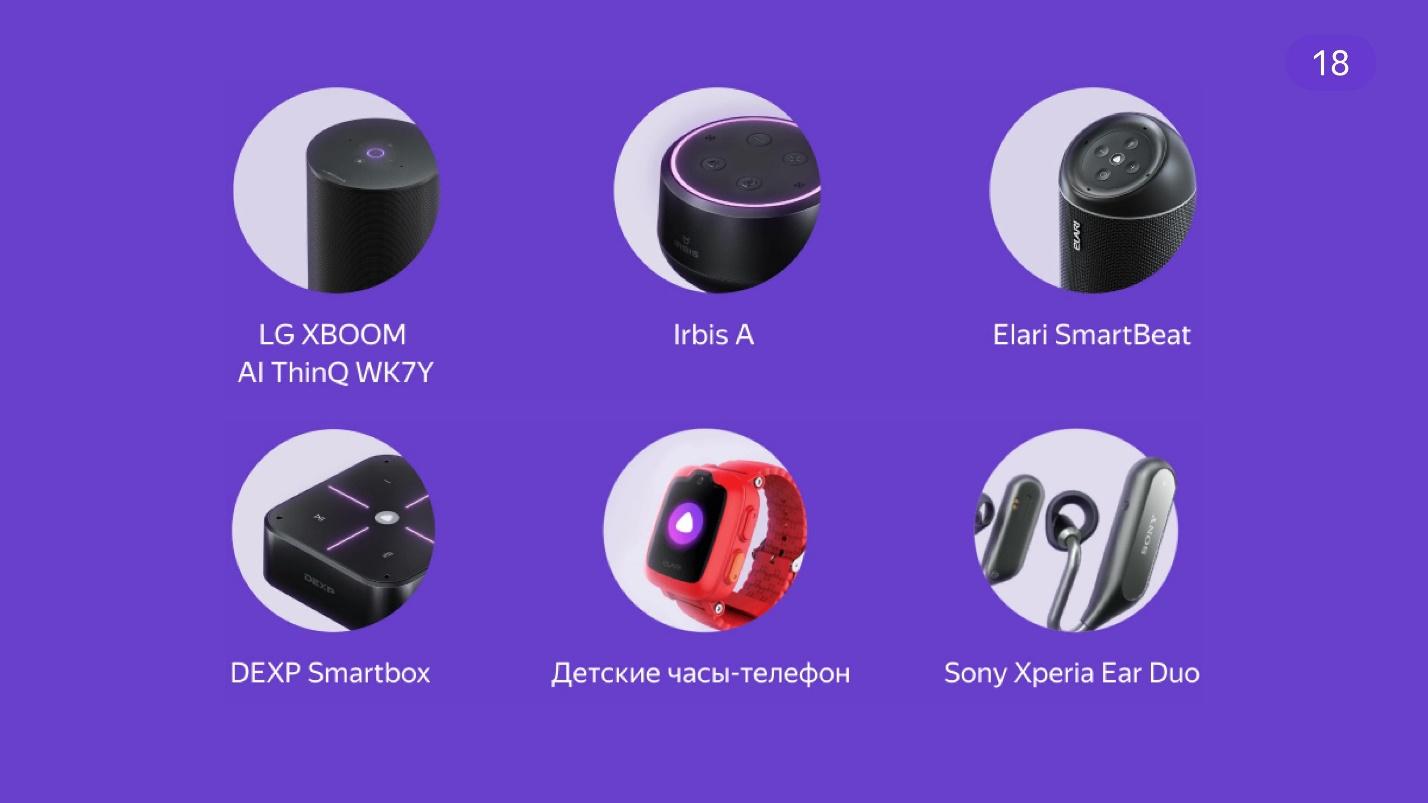

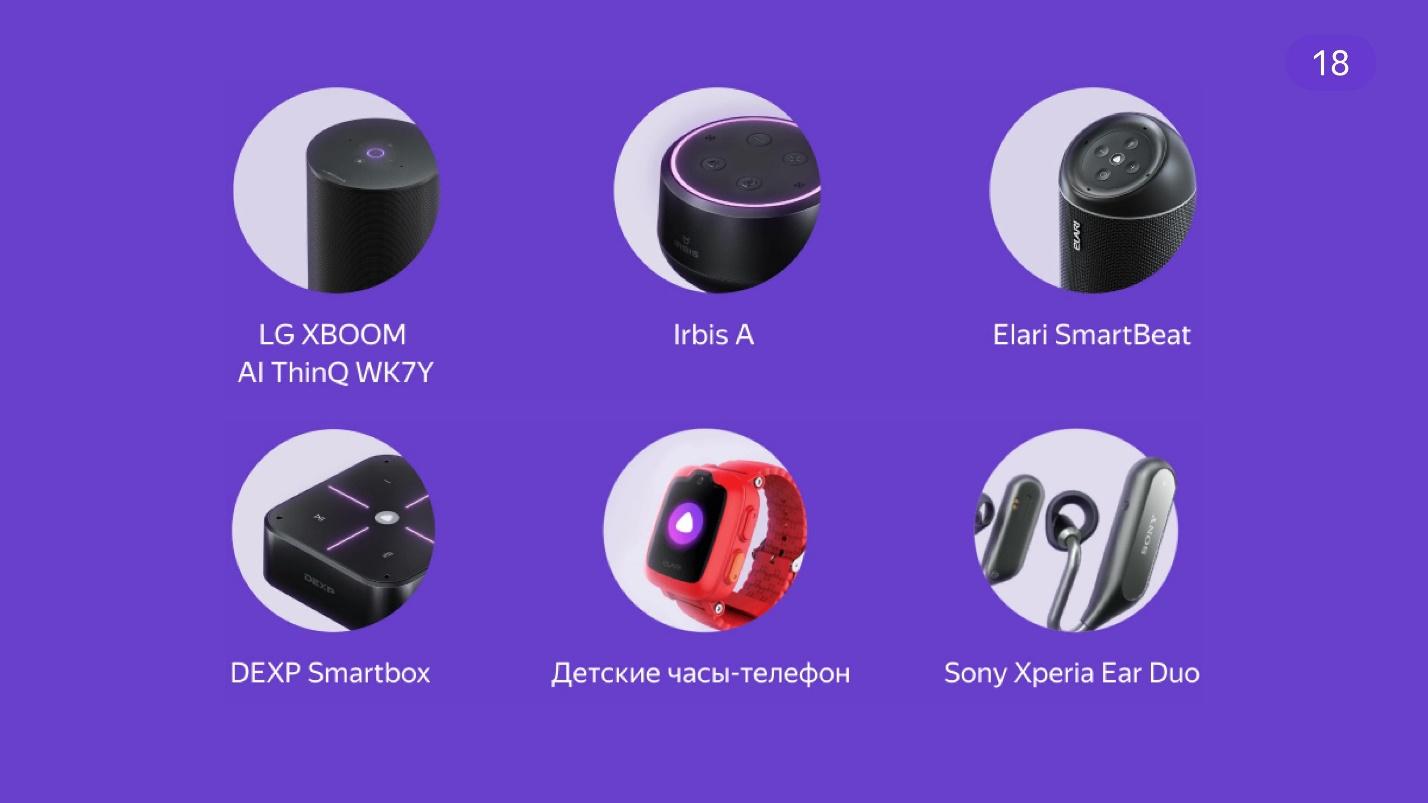

The integration went so well that third-party manufacturers also decided to build Alice into their devices.

In the two years since launch, Alice has been integrated into many services and has gained new skills. She can play music, recognize pictures, search Yandex and control a smart home.

Why so popular?

It's convenient when your hands are busy. I'm cooking dinner and want to turn on some music. Walking to the tap, washing and drying my hands, opening the app and finding the track takes a long time. A voice command is faster and easier.

Laziness. I'm lying on the sofa under a blanket and don't want to get up to turn on the speakers. If you're going to be lazy, be lazy all the way.

Apps for kids are a big market. Young children can't read, write or type yet, but they can talk and understand speech. That's why children adore Alice and love talking to her. Parents are happy too, because they don't have to figure out how to keep the child busy. Interestingly, Alice understands children thanks to a well-trained neural network.

Accessibility. Voice assistants are convenient for visually impaired people: when you can't see the interface, you can hear it and give it commands.

Voice is faster. An average person (not a developer) types about 30 words per minute but speaks about 120. That is four times as much information per minute by voice.

The future. Science fiction films and futuristic forecasts suggest that the future belongs to voice interfaces. Screenwriters assume voice will probably become the main way to interact with interfaces where visuals matter less.

According to statistics, 35 million people use Alice every month. For comparison, the population of Belarus is 9,475,600, so roughly 3.7 Belaruses use Alice every month.

Voice assistants are conquering the market. Forecasts say it will roughly double by 2021. Today's popularity won't stall; it will keep growing. More and more developers realize they need to invest in this area.

Developer Skills

It's great when companies invest in voice assistants and understand how to integrate them with their services. But developers also want to take part, and that benefits the company too.

Alexa has Alexa Skills: through documented interaction methods, she understands what developers have written for her. Google launched Actions, a way to integrate your own functionality into its voice assistant.

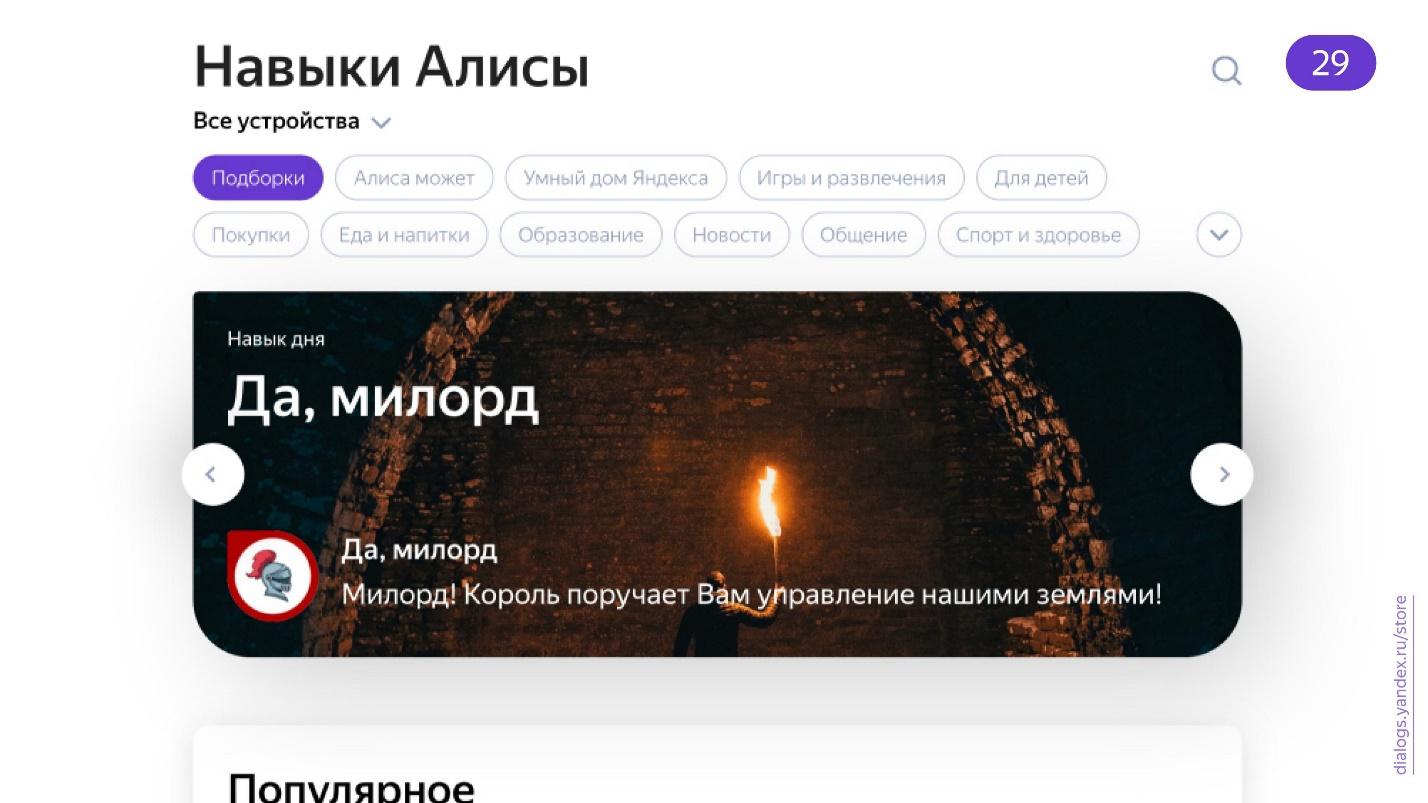

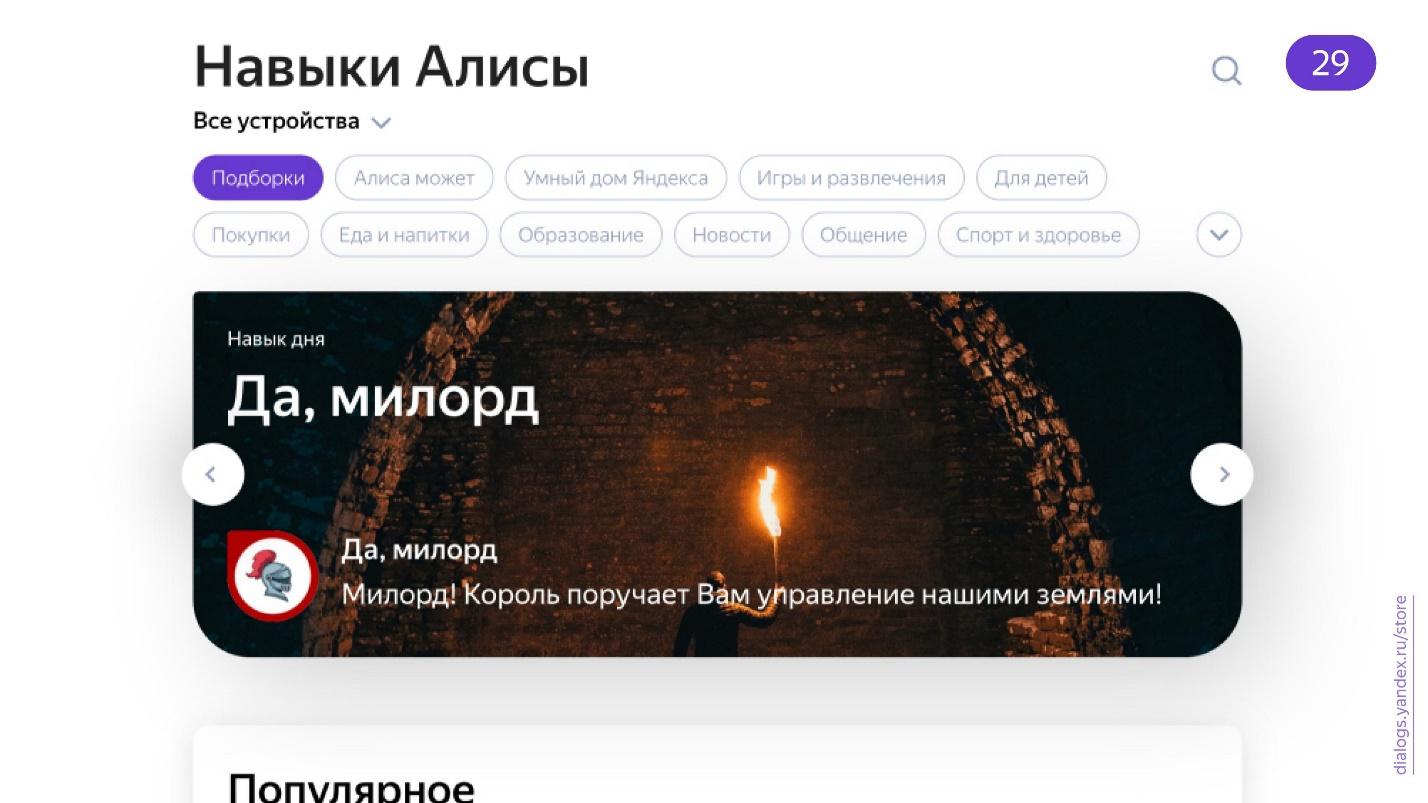

Alice also has skills: a way for developers to add third-party functionality.

There is also an alternative skill catalog that is not run by Yandex and is maintained by the community.

There are good talks on building voice applications. For example, Pavel Guy spoke at AppsConf 2018 on "Creating a voice application using the example of Google Assistant". Enthusiasts are actively building voice applications; one example is a visual voice-controlled game written by Ivan Golubev.

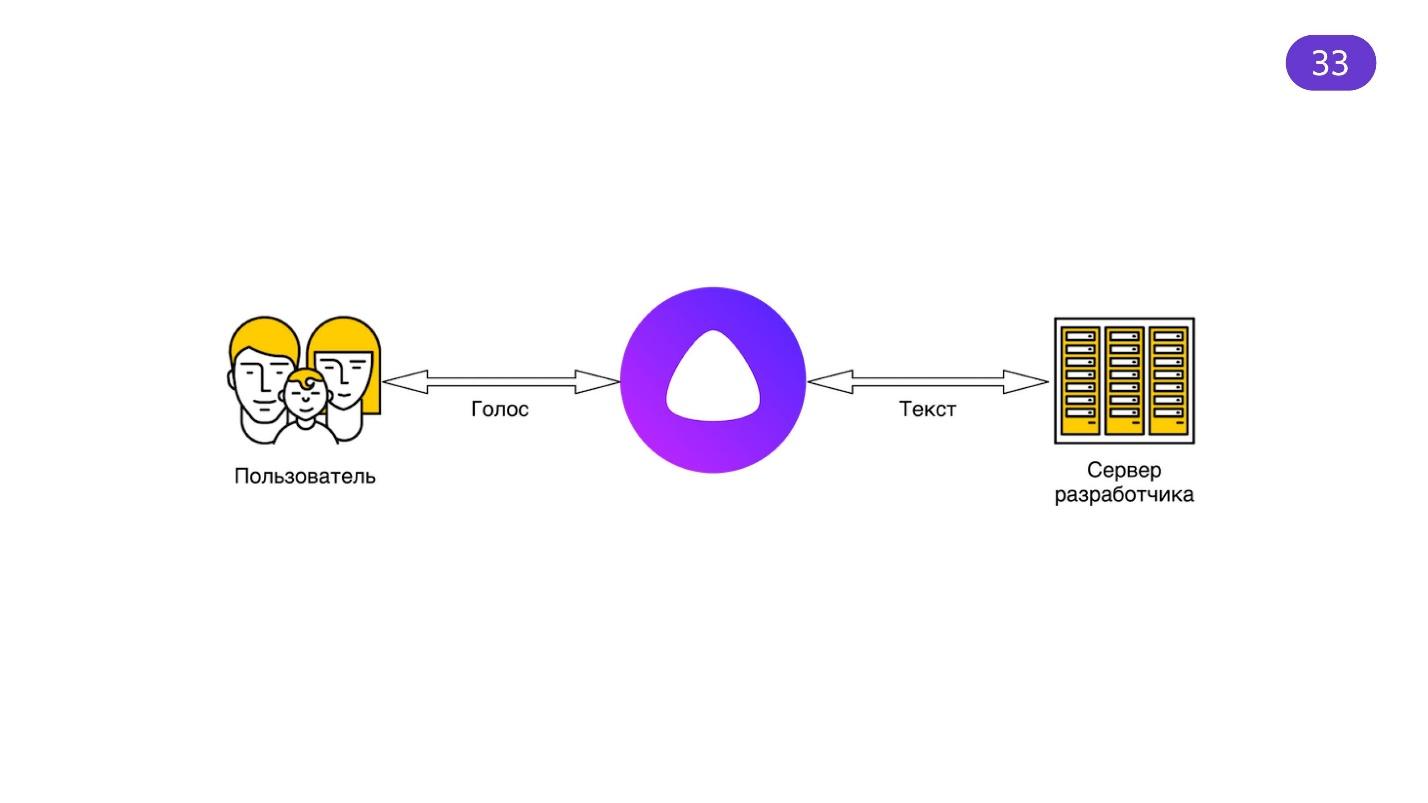

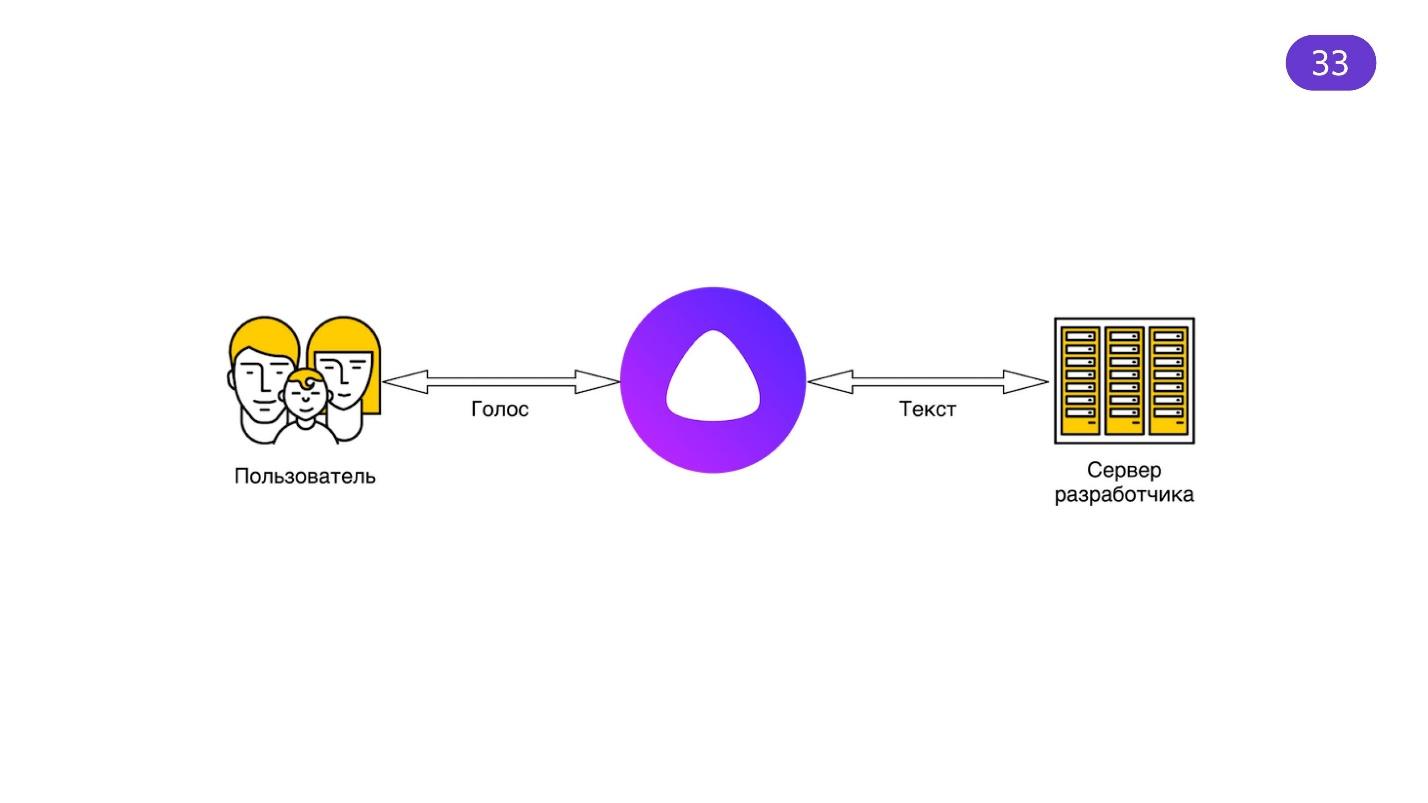

Alice is popular, although essentially everything she does sits halfway between voice and text.

Alice can listen to a voice, turn it into text with her algorithms, compose an answer and speak it. That may not sound like much, but it is an extremely difficult task. Many people work to make Alice sound natural, recognize speech correctly and understand accents and children's speech. Yandex provides something like a proxy that passes everything through itself. Brilliant minds work so that you can use the results of their work.

Alice's skills (Yandex.Dialogs) have one limitation: your API must respond within 1.5 seconds. That makes sense. If the answer hangs, why wait?

Does it really matter what you ask if you never get an answer?
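One way to respect the 1.5-second limit is to race the real work against a timer and fall back to a short default if the timer wins. Below is a minimal sketch. `withDeadline` is a hypothetical helper, not part of any Yandex SDK, and the 1200 ms budget is an arbitrary choice that leaves headroom for network overhead.

```javascript
// Hypothetical helper: resolve with the work's result, or with `fallback`
// if the work takes longer than `ms` milliseconds (whichever comes first).
function withDeadline(work, ms, fallback) {
  let timer;
  const timeout = new Promise(resolve => {
    timer = setTimeout(() => resolve(fallback), ms);
  });
  // Clear the timer either way so it does not keep the process alive
  return Promise.race([work, timeout]).finally(() => clearTimeout(timer));
}

// Assumed budget: answer in 1.2 s to stay safely under the 1.5 s limit
const BUDGET_MS = 1200;
```

For example, `withDeadline(loadEvents(), BUDGET_MS, [])` (with `loadEvents` standing in for whatever fetches the calendar) would give the skill an empty list to apologize about instead of letting the platform time out.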

When we take in information by ear, the brain perceives pauses as longer than the same pauses in a visual interface. In visual interfaces, loaders, spinners and everything else we like to add distract the user from the wait. Voice has no such tricks, so make sure everything works fast.

Time for a demo

Everything is described in detail in the Yandex.Dialogs documentation, which is always up to date, so I won't repeat it. Instead I'll share what I found interesting and show how to quickly build a demo, which took me just one evening.

Let's start with the idea. There are many skills and several catalogs, but I couldn't find what matters to me: a calendar of front-end events. Imagine waking up in the morning: "I'm going to a meetup today. Alice, is there anything interesting on?" And Alice answers you correctly, taking your location into account.

If you organize conferences, join us on GitHub. You can add your events and meetups there, and learn about front-end events all over the world from a single calendar.

I used familiar technologies I had at hand: Node.js and Express, plus Heroku because it's free. The application itself is simple: a Node.js server running an Express app. Just start the server on some port and listen for requests.

I took advantage of the fact that the Web Standards calendar already does the work: its many small files are compiled into a single ICS file that can be downloaded. Why build my own?

Make sure everything works fast.

Use GET methods for testing. Skills work over POST, so GET endpoints can be kept purely for debugging. I implemented one: all it does is download the same ICS file, parse it and return it as JSON.

I built the demo quickly, so I used the ready-made node-ical library.

It can parse the ICS format and outputs a list of events.

To give the skill's user the information they need, it is enough to know each event's start and end time, its name, its link and, importantly, its city. I want the skill to search for events by city.
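In the skill, node-ical does the real parsing. To show which fields matter, here is a deliberately naive sketch that pulls start, end, name, link and location out of raw VEVENT blocks. It assumes unfolded lines, UTC timestamps such as `20190527T100000Z`, and that the city is the first comma-separated part of LOCATION. Those are guesses about the calendar's layout, and the helper names are hypothetical. Real calendars need a proper parser.

```javascript
// Hypothetical helper: convert an ICS UTC timestamp (20190527T100000Z) to a Date.
function parseIcsDate(value) {
  const m = /^(\d{4})(\d{2})(\d{2})T(\d{2})(\d{2})(\d{2})Z$/.exec(value);
  if (!m) return null; // all-day and TZID dates are out of scope for this sketch
  return new Date(Date.UTC(+m[1], +m[2] - 1, +m[3], +m[4], +m[5], +m[6]));
}

// Hypothetical helper: extract the fields the skill needs from every VEVENT.
function extractEvents(ics) {
  const events = [];
  let current = null;
  for (const rawLine of ics.split(/\r?\n/)) {
    const line = rawLine.trim(); // assumes long lines are not folded
    if (line === "BEGIN:VEVENT") { current = {}; continue; }
    if (line === "END:VEVENT") { if (current) events.push(current); current = null; continue; }
    if (!current) continue;
    const colon = line.indexOf(":");
    if (colon < 0) continue;
    // Drop parameters such as ";VALUE=DATE" from the property name
    const name = line.slice(0, colon).split(";")[0];
    const value = line.slice(colon + 1);
    if (name === "DTSTART") current.start = parseIcsDate(value);
    else if (name === "DTEND") current.end = parseIcsDate(value);
    else if (name === "SUMMARY") current.summary = value;
    else if (name === "URL") current.url = value;
    else if (name === "LOCATION") {
      current.location = value;
      // Assumption: the city is the first (possibly "\,"-escaped) comma-separated part
      current.city = value.split(/\\?,/)[0].trim();
    }
  }
  return events;
}
```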

How does Yandex.Dialogs deliver information? A smart speaker, or the voice assistant built into the mobile app, listens to you. Yandex servers process what they hear and send your skill an object.

The object contains meta information, the request itself, the current session and the API version. The version is there so that skills don't break if the API suddenly changes.

The meta information holds a lot of useful data.

"locale" tells you the user's region.

"timezone" helps you handle time correctly and pin down the user's location more precisely.

"interfaces" describes the screen. If there is no screen, think about how the user will see any pictures you include in the answer. If there is a screen, you can show information on it.

The request format is simple.

It contains what the user said, the request type and the NLU (natural-language understanding) result. This is exactly the magic the Yandex.Dialogs platform takes on: it splits the recognized sentence into tokens, that is, words. There are also entities, which we'll get to a bit later. Tokens are enough to start with.
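Here is a minimal sketch of reading these fields from the incoming JSON body. The field names (`meta.locale`, `meta.timezone`, `meta.interfaces.screen`, `request.command`, `request.type`, `request.payload`, `request.nlu.tokens`, `request.nlu.entities`) follow the Yandex.Dialogs request format as I understand it, so check them against the current documentation. `readRequest` is a hypothetical helper name.

```javascript
// Hypothetical helper: pull out only what the skill needs, with safe defaults.
// Field names are assumed from the Yandex.Dialogs request format.
function readRequest(body) {
  const meta = body.meta || {};
  const request = body.request || {};
  const nlu = request.nlu || {};
  return {
    locale: meta.locale,
    timezone: meta.timezone,
    // A screen object in meta.interfaces means we can show buttons and pictures
    hasScreen: Boolean(meta.interfaces && meta.interfaces.screen),
    command: request.command || "",
    isButton: request.type === "ButtonPressed",
    payload: request.payload,
    tokens: nlu.tokens || [],
    entities: nlu.entities || [],
  };
}
```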

We have the words, but what do we do with them? The user can say them in a different order, use the particle "not", which changes everything dramatically, or use a different grammatical form of the same word (Russian inflects "morning" depending on the phrase). If the user also speaks Belarusian, the word for "morning" may be Belarusian altogether. A large project will need linguists to build a skill that understands all of this. My task was simple, so I managed without outside help.
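Without linguists, a rough approximation goes a long way. Compare word prefixes so that different endings of one word still match, and treat the particle "не" ("not") right before a keyword as negation. This is a sketch under those assumptions only. The 4-letter prefix and the `stem`/`mentions` helpers are hypothetical illustrations, not how Yandex.Dialogs works.

```javascript
// Hypothetical crude "stem": keep the first few letters, so "утро" and
// "утром" (two forms of "morning") compare equal.
function stem(word, length = 4) {
  return word.toLowerCase().slice(0, length);
}

// True if a keyword appears among the tokens and is not preceded by "не" (not).
function mentions(tokens, keywords) {
  const stems = keywords.map(k => stem(k));
  return tokens.some((token, i) => {
    const negated = i > 0 && tokens[i - 1].toLowerCase() === "не";
    return !negated && stems.includes(stem(token));
  });
}
```

Prefix matching produces false positives (any two words sharing four letters match), which is exactly where a linguist or a real morphological analyzer would help.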

Whether a program can truly understand a person is a philosophical question, and the Turing test tries to answer it. The test estimates, with some probability, whether an artificial intelligence can pass for a human. Programs compete for the Loebner Prize by trying to pass the Turing test, and a panel of judges decides. To win, you need to fool 33% of the judges or more. Only in 2014 did the bot Eugene Goostman, made in St. Petersburg, finally fool the panel.

As of 2019, not much has changed: it's still hard to fool a person. But we are gradually getting there.

A good skill needs an interesting use case. I recommend a book worth reading: "Designing Voice User Interfaces: Principles of Conversational Experiences". It is excellent on writing scripts for voice interfaces and holding the user's attention. I haven't seen it in Russian, but the English reads quite easily.

The first thing to work on when developing a skill is the greeting.

When the skill starts, you need to hook the user from the very first second, and to do that you need to explain how to use the skill. Imagine the user launches the skill and hears silence. How would they know it works at all? Give the user instructions, for example buttons on the screen.

Signs of an easy dialogue. Ivan Golubev came up with this list, and I really like the wording.

Personal. The bot must have a character. Talk to Alice and you'll see she has one; her developers take care of that. So for your bot to feel natural, it needs a "personality" too. Speak in one consistent voice and reuse the same turns of phrase. This helps retain the user.

Natural. If the user's request is simple, the answer should be simple too. Throughout the conversation, the user must understand what to do next.

Flexible. Be ready for anything. Russian has many synonyms. The user may turn away from the speaker to talk to someone else and then come back. All of this is hard to handle, but if you want to build a good bot, you have to. Some percentage of phrases will still go unrecognized, so be prepared for this and offer options.

Contextual. Ideally, the bot should remember what happened earlier. That makes the conversation feel alive.

- Alice, what is the weather like today?

- Today in the District from +11 to +20, cloudy, with clearings.

- And tomorrow?

- Tomorrow in the District from +14 to +27, cloudy, with clearings.

Imagine your bot can't store context. What would the query "And tomorrow?" mean to it? If you can store context the way Alice does, you can use earlier results to improve your skill's answers.
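Handling "And tomorrow?" comes down to keeping the previous request's parameters and overriding only what the new phrase specifies. Below is a minimal sketch. The slot names (`intent`, `city`, `date`) and the `resolveFollowUp` helper are hypothetical.

```javascript
// Hypothetical helper: merge a partial follow-up query into the previous one.
// Slots the user did not mention this time are inherited from the last turn.
function resolveFollowUp(previous, current) {
  if (!previous) return current;
  const merged = { ...previous };
  for (const [key, value] of Object.entries(current)) {
    if (value !== undefined && value !== null) merged[key] = value;
  }
  return merged;
}
```

In the weather dialogue above, `{ intent: "weather", city: ..., date: "today" }` followed by `{ date: "tomorrow" }` would become a complete weather query for tomorrow.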

Proactive. If the user gets stuck, the bot should nudge them: "Press this button!", "Look, I have a picture for you!", "Follow the link." The bot should tell the user how to work with it.

Short. When a person talks for a long time, it's hard for them to hold the audience's attention. It's even harder for a bot: nobody feels sorry for it, it's inanimate. To keep attention, make the conversation interesting, or keep it brief and to the point. The book "Write, Cut" helps with this; read it when you start developing bots.

When developing a complex bot, you can't do without a database. My demo is simple and doesn't use one. But if you plug in a database, you can use session information, at the very least to store context.

There is a nuance: Yandex.Dialogs does not pass on the user's private information, such as their name or location. But you can ask the user for it, save it and link it to the session ID that Yandex.Dialogs sends in the request.
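Here is a sketch of linking what the user told you to the session ID. It uses an in-memory `Map` as a stand-in for a real database and assumes the ID arrives as `session.session_id`. The `SessionStore` class and its 30-minute expiry are illustrative choices, not part of Yandex.Dialogs.

```javascript
// Hypothetical in-memory stand-in for a database: data keyed by session ID,
// forgotten after `ttlMs` of inactivity.
class SessionStore {
  constructor(ttlMs = 30 * 60 * 1000) {
    this.ttlMs = ttlMs;
    this.entries = new Map();
  }

  get(sessionId, now = Date.now()) {
    const entry = this.entries.get(sessionId);
    if (!entry || now - entry.updatedAt > this.ttlMs) {
      this.entries.delete(sessionId); // drop expired sessions
      return {};
    }
    return entry.data;
  }

  // Shallow-merge new data (e.g. the city the user named) into the session.
  update(sessionId, patch, now = Date.now()) {
    const data = { ...this.get(sessionId, now), ...patch };
    this.entries.set(sessionId, { data, updatedAt: now });
    return data;
  }
}
```

Usage would look like `store.update(body.session.session_id, { city: "Minsk" })` after the user answers "Which city are you in?".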

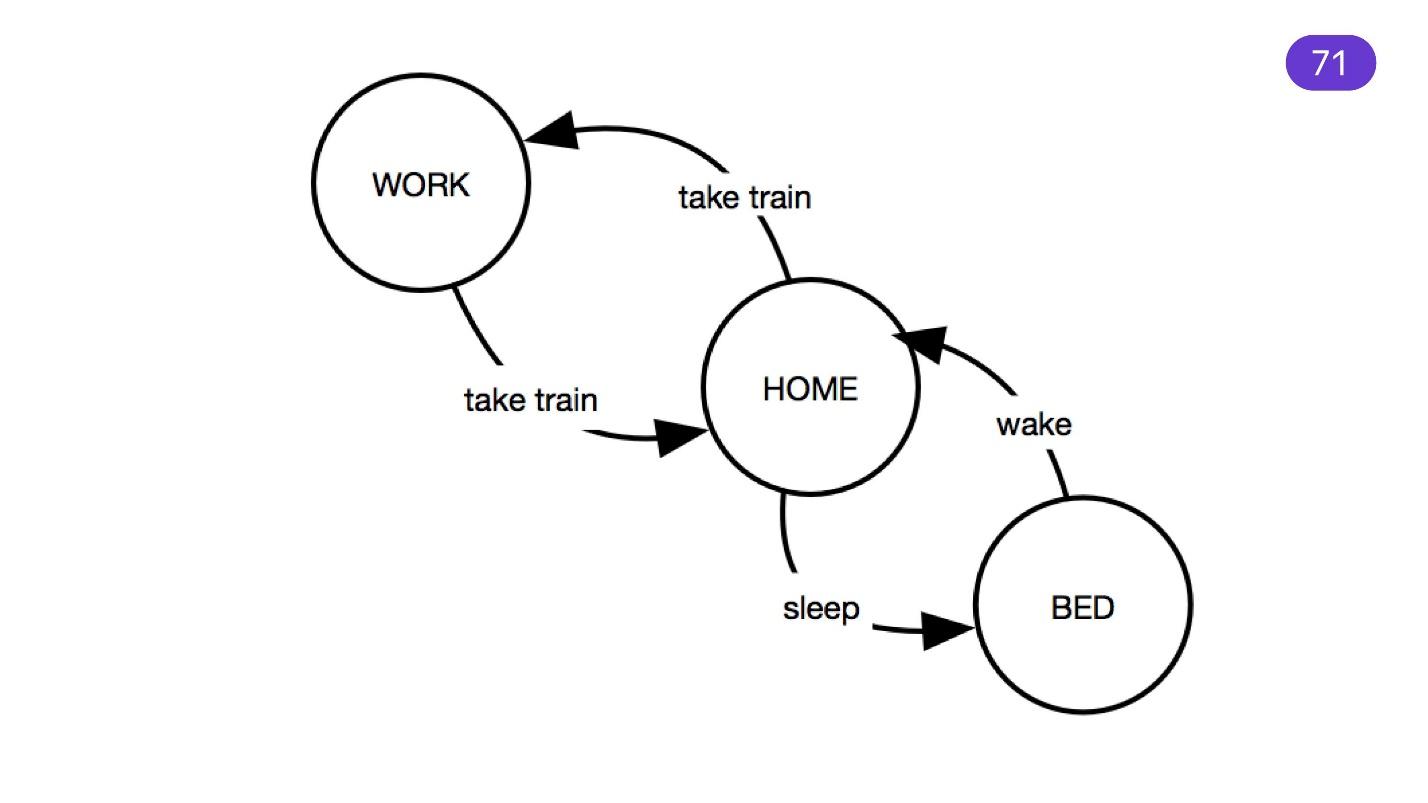

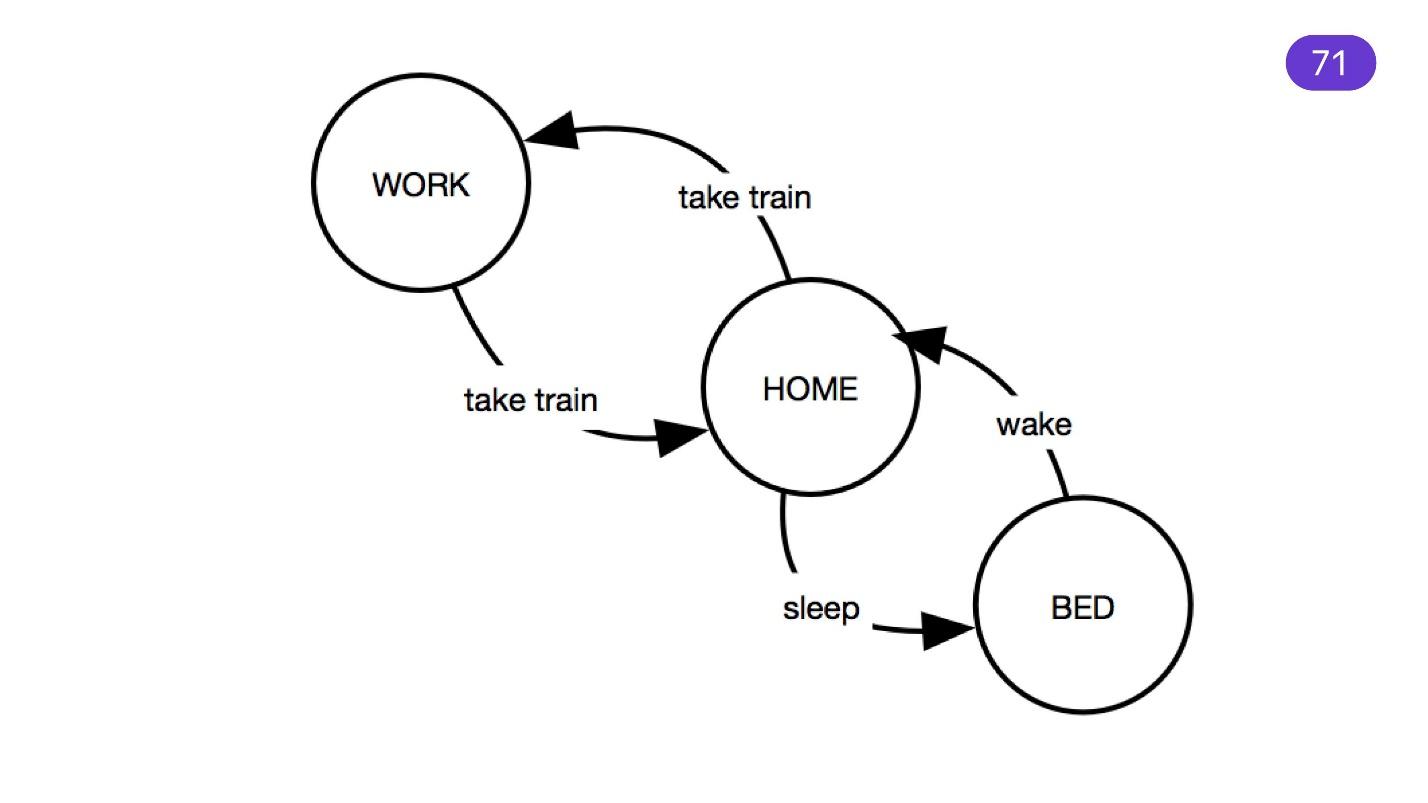

Speaking of complex scenarios, I have to mention the state machine. It has long been used with great success in programming microcontrollers, and sometimes on the front end. A state machine suits scripted dialogues well: there are states, and certain phrases move us from one state to another.

Don't overdo it. It's easy to get carried away and build a huge state machine that is hard to understand and hard to maintain. It's easier to write one script made up of small sub-scripts.
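Here is a small table-driven state machine in the spirit described above. The states, intents and transitions are hypothetical and only illustrate the shape. Each state stays small, and an unexpected intent keeps the current state instead of failing.

```javascript
// Hypothetical dialogue states: each maps an intent to the next state.
const transitions = {
  start: { list: "listing", help: "help" },
  help: { list: "listing", back: "start" },
  listing: { details: "details", back: "start" },
  details: { back: "listing" },
};

// Stay in the current state when the intent is not expected there.
function nextState(state, intent) {
  const options = transitions[state] || {};
  return Object.prototype.hasOwnProperty.call(options, intent) ? options[intent] : state;
}
```

Keeping each state's table to a handful of intents is one way to follow the "small sub-scripts" advice: a new branch becomes a new small table, not a deeper tangle of conditions.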

Never say "Please repeat that." What does a person do when asked to repeat? They speak louder. If the user shouts at your skill, recognition won't get any better. Ask a clarifying question instead: if part of the user's phrase was recognized and something is missing, ask specifically for the missing piece.

Speech recognition is the hardest part of building a bot, so sometimes clarifying doesn't help either. Whenever you don't know what's going on, the best approach is to collect everything in one place: log it, then analyze it and use it later. For example, when the user says something frankly strange and incomprehensible:

"'Twas brillig, and the slithy toves

Did gyre and gimble in the wabe:

All mimsy were the borogoves,

And the mome raths outgrabe."

Users may unexpectedly use a neologism that means something and has to be handled somehow, and the recognition rate drops as a result. Don't worry: log it, study it and improve your bot.

There must be a way to stop the skill when the user wants out. Alice stops on phrases like "Alice, stop it!" or "Alice, stop!" But users usually don't read instructions, so at the very least respond to the word "Stop" and hand control back to Alice.
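Below is a sketch of that fallback. Every unrecognized phrase is logged for later analysis. If one slot was recognized but another is missing, the skill asks about the missing one instead of saying "Please repeat". The slot names and phrasings are hypothetical, and `console.warn` stands in for a real log store.

```javascript
// Hypothetical fallback: log the phrase, then ask for whatever is missing.
function fallbackReply(utterance, slots, log = console.warn) {
  // Keep the raw phrase so it can be analysed and used to improve the skill
  log(JSON.stringify({ unrecognized: utterance, slots, at: new Date().toISOString() }));
  if (slots.city && !slots.date) {
    return `Which dates are you interested in for ${slots.city}?`;
  }
  if (slots.date && !slots.city) {
    return "Which city should I look in?";
  }
  // Nothing usable recognized: offer options instead of asking to repeat
  return "I can list upcoming front-end events. Try asking about a city, for example Minsk.";
}
```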

Now let's see the code.

I wanted the skill to understand a few kinds of phrases.

For "upcoming events", any phrase works. I built a lazy bot: when it doesn't understand what was said, it returns the next three events.

Yandex keeps improving the Yandex.Dialogs platform, and it returns the entities it manages to recognize. For example, it can extract an address from text and split it into parts: city, country, street, house. It can also recognize numbers and dates, both absolute and relative. It understands that "tomorrow" means today's date plus one day.

You need to answer your user somehow. The whole skill is 209 lines, counting the trailing empty line. Nothing complicated, just an evening's work.
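Here is a sketch of turning a date entity into a concrete day. It assumes the YANDEX.DATETIME value shape described in the Yandex.Dialogs documentation at the time: fields such as `day` with a `day_is_relative` flag, and the same pattern for `month` and `year`. Verify the exact format before relying on it. This sketch uses the server's local time zone and ignores time of day. A real skill would probably use `meta.timezone` instead.

```javascript
// Hypothetical helper: resolve a YANDEX.DATETIME-style value
// ({ day, day_is_relative, month, month_is_relative, year, year_is_relative })
// against a reference date. Relative parts are offsets; absolute parts replace.
function resolveDate(value, now = new Date()) {
  let year = now.getFullYear();
  let month = now.getMonth(); // 0-based in JavaScript
  let day = now.getDate();
  if (value.year !== undefined) year = value.year_is_relative ? year + value.year : value.year;
  if (value.month !== undefined) month = value.month_is_relative ? month + value.month : value.month - 1;
  if (value.day !== undefined) day = value.day_is_relative ? day + value.day : value.day;
  // The Date constructor normalises overflow, e.g. January 32 becomes February 1
  return new Date(year, month, day);
}
```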

All you do is handle the POST request and take the "request" from it.

Beyond that, I didn't build an elaborate state machine; I simply went by priority. If the user wants to learn how to use the bot, it's either the first launch or a request for help, so we just prepare what I call an "EmptyResponse".
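Going by priority instead of building a full state machine can be sketched as an ordered list of handlers where the first match wins. Below is a minimal sketch. The `dispatch` helper and the handler shape `{ when, reply }` are hypothetical stand-ins for the skill's own checks (such as `needHelp`) and its "EmptyResponse".

```javascript
// Hypothetical dispatcher: try handlers in priority order; the first
// handler whose `when` matches the request produces the reply.
function dispatch(req, handlers, fallback) {
  for (const handler of handlers) {
    if (handler.when(req)) return handler.reply(req);
  }
  // Lazy default, as in the talk: answer with the upcoming events
  return fallback(req);
}
```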

The needHelp function is simple.

If there are zero tokens, we are at the very beginning: the user has just launched the skill or said nothing. You need to check both that there are zero tokens and that this isn't a button press, because a user who presses a button doesn't say anything either. When the user asks for help, we go through the tokens and look for the word "help". The logic is simple.
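The check described above might look like this. It assumes tokens arrive lowercased in `request.nlu.tokens` and that button presses have `request.type === "ButtonPressed"`. The original word list isn't shown, so `HELP_WORDS` is an illustrative guess.

```javascript
// Illustrative list of words that signal a request for help
// (assumed; the original list is not shown).
const HELP_WORDS = ["помощь", "помоги", "справка", "help"];

// True on a silent start (no tokens and not a button) or when a help word appears.
function needHelp(request) {
  const tokens = (request.nlu && request.nlu.tokens) || [];
  if (tokens.length === 0 && request.type !== "ButtonPressed") return true;
  return tokens.some(token => HELP_WORDS.includes(token.toLowerCase()));
}
```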

Checking whether the user wants to stop works the same way: we look for a stop word among the tokens.
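Here is a sketch of that check under the same assumption that tokens arrive in `request.nlu.tokens`. The `STOP_WORDS` list is illustrative.

```javascript
// Illustrative words that should end the skill and hand control back to Alice.
const STOP_WORDS = ["стоп", "хватит", "выход", "stop"];

// True if any token is a stop word; missing NLU data counts as "no".
function needStop(request) {
  const tokens = (request.nlu && request.nlu.tokens) || [];
  return tokens.some(token => STOP_WORDS.includes(token.toLowerCase()));
}
```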

Every answer must return the session information that Yandex.Dialogs sent. The platform needs it to match your answer to the user's request.

So return whatever you received in "session" and "version", and everything will work. In the response you can also provide text for Alice to speak and pass "end_session: true". That ends the skill session and hands control back to Alice.

When a skill is invoked, Alice steps aside. She listens only for her stop words, and you are fully in control of the conversation. That's why you need to hand control back.

The empty request is more interesting.
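Below is a sketch of a response that echoes the session and version back. It assumes the response shape `{ response: { text, tts, end_session }, session, version }`, with `session_id`, `message_id` and `user_id` copied from the request. Check these names against the current protocol documentation. `buildResponse` is a hypothetical helper.

```javascript
// Hypothetical helper: echo back what the platform needs to match the
// answer to the request (field names assumed from the Yandex.Dialogs protocol).
function buildResponse(body, text, { tts, endSession = false } = {}) {
  const { session_id, message_id, user_id } = body.session;
  return {
    response: {
      text,
      tts: tts || text, // speak the display text if no separate TTS is given
      end_session: endSession, // true hands control back to Alice
    },
    session: { session_id, message_id, user_id },
    version: body.version,
  };
}
```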

There is a TTS (text-to-speech) field for controlling the voice. Its format is simple and lets you have text read in different ways. For example, the Russian word for "multidisciplinary" has two stresses, a primary and a secondary one, and the task is to make Alice pronounce it correctly. You can split it with a space.

She will then treat it as two words. The stress is marked with a plus sign.

To add a pause, put a punctuation mark surrounded by spaces. That way you can create dramatic pauses.
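Here is a sketch of assembling a TTS string under the conventions described above: a `+` before the stressed vowel, and a dash surrounded by spaces for a pause. The helper names and example words are hypothetical. Check the Yandex.Dialogs documentation for the full TTS markup.

```javascript
// Hypothetical helper: put "+" before the vowel at `vowelIndex` (0-based).
function stress(word, vowelIndex) {
  return word.slice(0, vowelIndex) + "+" + word.slice(vowelIndex);
}

// Hypothetical helper: join phrases with " - " so Alice pauses between them.
function withPauses(...phrases) {
  return phrases.join(" - ");
}
```

For example, `stress("замок", 1)` gives `"з+амок"` (the stress that means "castle"), and `withPauses("The next event", "FrontendConf")` inserts a pause before the event name.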

I've already mentioned buttons. They matter when you're talking not to a speaker but to, say, the Yandex mobile app.

Buttons also hint at the phrases your skill understands. Skills run inside Yandex apps, so you are dealing with an interface. If you want to provide some information, give a link and let the user tap it; you can add buttons for that too.

There is a "payload" field where you can put data. It comes back with the "request", so you'll know, for example, which button was pressed.
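Here is a sketch of attaching buttons with a payload and reading it back when one is pressed. It assumes button objects of the form `{ title, url, payload }` and that the payload returns in `request.payload` with `request.type` set to "ButtonPressed". The event fields (`id`, `summary`, `url`) and both helper names are hypothetical.

```javascript
// Hypothetical helper: one button per event, with a link and a payload
// that identifies the event when the button comes back.
function eventButtons(events) {
  return events.map(event => ({
    title: event.summary,
    url: event.url,
    payload: { eventId: event.id },
  }));
}

// A pressed button carries no words, so identify it by its payload.
function pressedEventId(request) {
  if (request.type !== "ButtonPressed" || !request.payload) return null;
  return request.payload.eventId ?? null;
}
```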

You can also choose the voice your skill speaks with.

To end the skill, just return "end_session: true".

First, I filter by date.

The logic is simple: from all the events parsed from the calendar, I keep those that are in the future or happening right now. It would be strange to ask about past events; that's not what the skill is for.
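The date filter fits in a few lines. Keep events that haven't ended yet (future or ongoing), sort them by start, and as the lazy default take the next three. This sketch assumes each event has `start` and `end` as `Date` objects, which is what a parser like node-ical typically produces. `upcoming` is a hypothetical name.

```javascript
// Hypothetical helper: events happening now or later, soonest first.
function upcoming(events, now = new Date(), limit = 3) {
  return events
    .filter(event => event.end >= now) // still running or not started yet
    .sort((a, b) => a.start - b.start) // filter() returned a copy, so the input is untouched
    .slice(0, limit);
}
```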

Next comes filtering by place, which is what it was all about.

In "entities" you may find a YANDEX.GEO entity that pins down a location. If the entity has a city, we add it to our set. From there the logic is simple too: we look for that city in the tokens, and if it's there, we look for what the user wants. If not, we search all the "locations" and "events" we have.

Suppose Yandex didn't recognize a YANDEX.GEO entity, but the user did name a city, sure that something is happening there. We go through all the cities in "events" and look for the same words in the tokens. It amounts to cross-comparing arrays. Not the most efficient approach, of course, but it works. That's the whole skill!
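Here is a sketch of the two-step city lookup: cities come from YANDEX.GEO entities first, and if there are none, every known event city is cross-compared against the tokens. It assumes entities look like `{ type: "YANDEX.GEO", value: { city } }` and that each event carries a `city` string. The helper names are hypothetical. Matching is exact, so an inflected form like "минске" will not match "минск" in the fallback step without extra normalization.

```javascript
// Hypothetical helper: cities named in YANDEX.GEO entities (lowercased).
function citiesFromEntities(entities) {
  return new Set(
    entities
      .filter(e => e.type === "YANDEX.GEO" && e.value && e.value.city)
      .map(e => e.value.city.toLowerCase())
  );
}

function filterByCity(events, tokens, entities) {
  let cities = citiesFromEntities(entities);
  if (cities.size === 0) {
    // Fallback: cross-compare every known event city with the tokens
    const words = new Set(tokens.map(t => t.toLowerCase()));
    cities = new Set(
      events.map(e => (e.city || "").toLowerCase()).filter(city => city && words.has(city))
    );
  }
  // No city named at all: keep every event
  if (cities.size === 0) return events;
  return events.filter(e => cities.has((e.city || "").toLowerCase()));
}
```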

Please don't judge the code too harshly; I wrote it quickly. Everything in it is primitive, but feel free to use it or just play around with it.

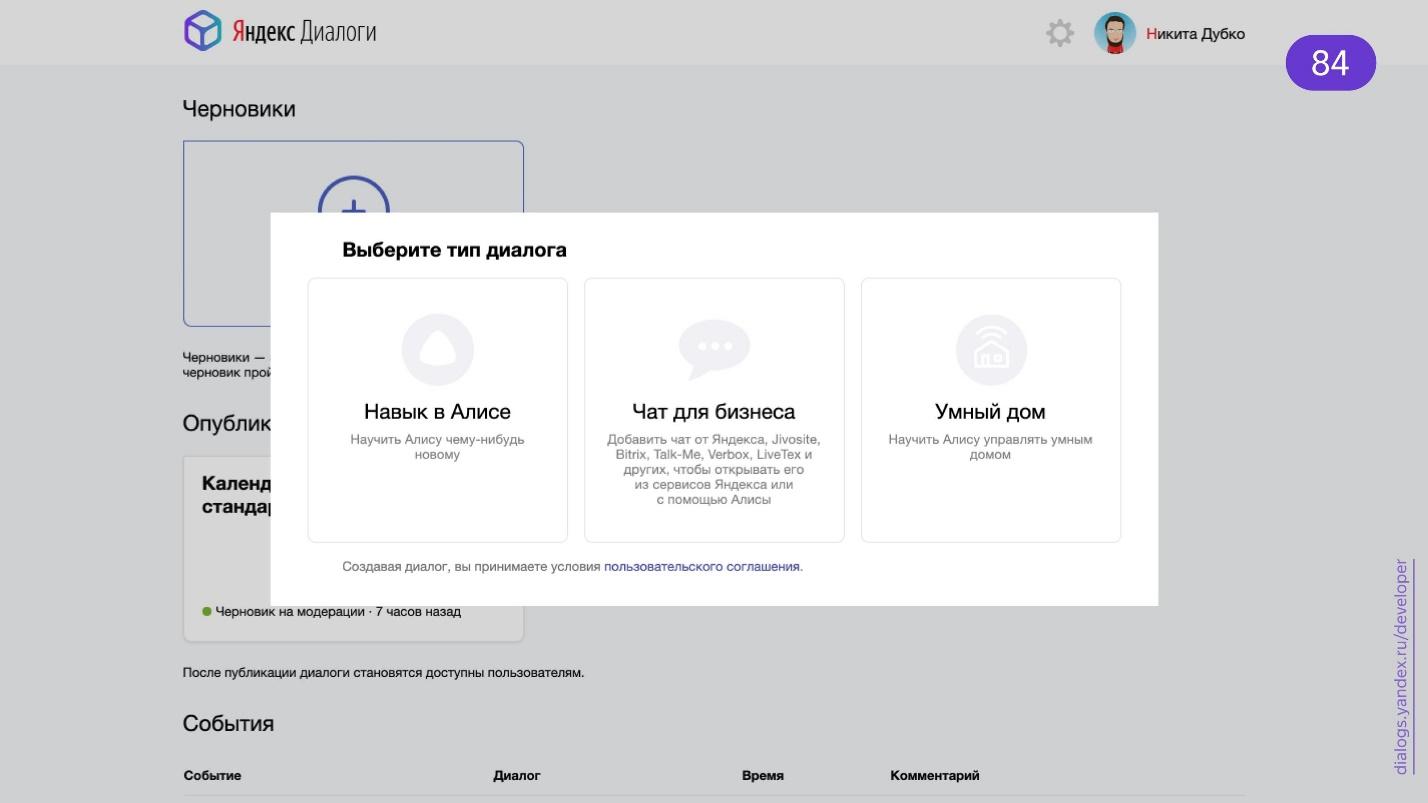

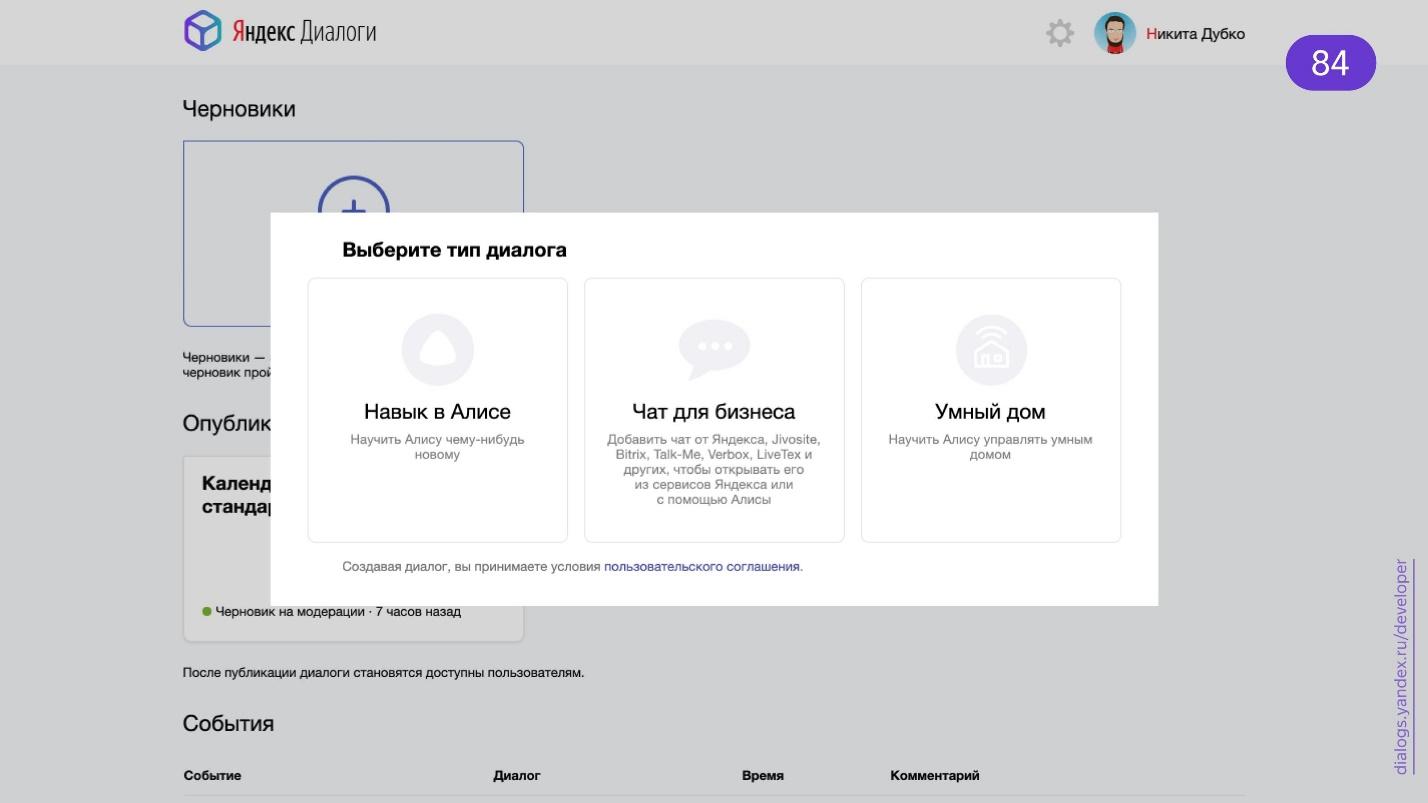

Go to the Yandex.Dialogs page.

Choose "Skill in Alice" and press "Create dialog". You'll land on a form to fill in with your data.

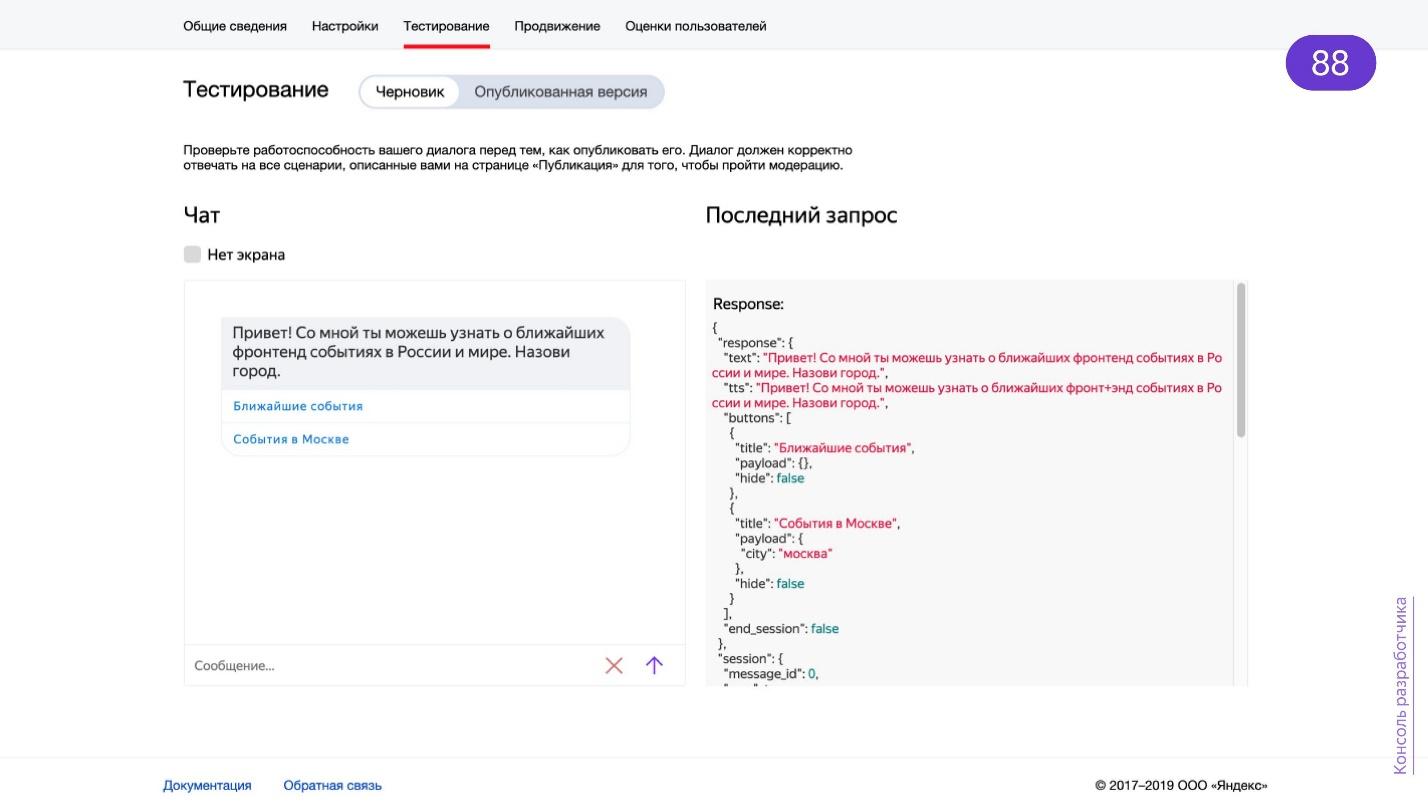

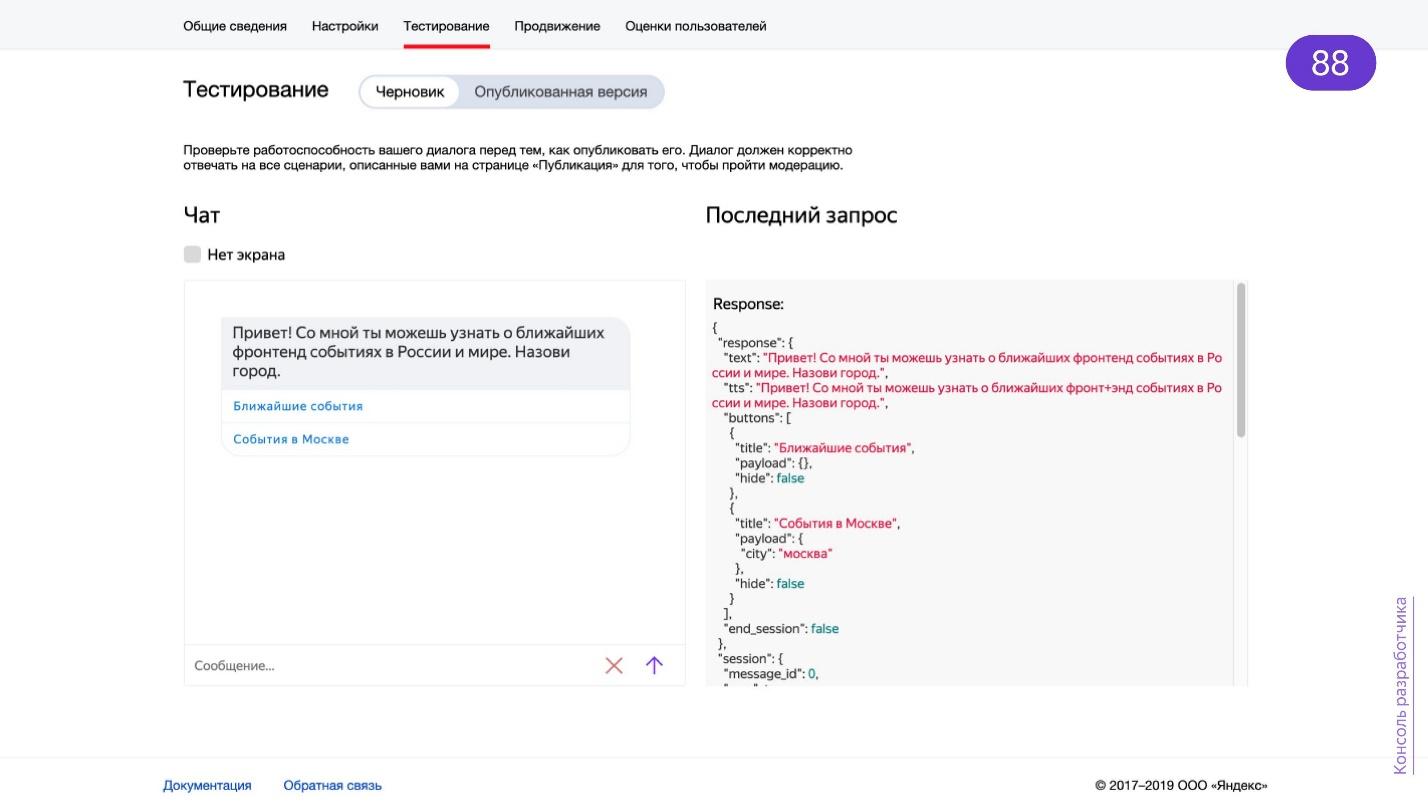

That's it: send the skill for moderation, and you can start testing.

I wrote a plain Express server. It's a simple API covered by regular tests. There are also specialized utilities, for example alice-tester, which understands the exact request format Alice uses.


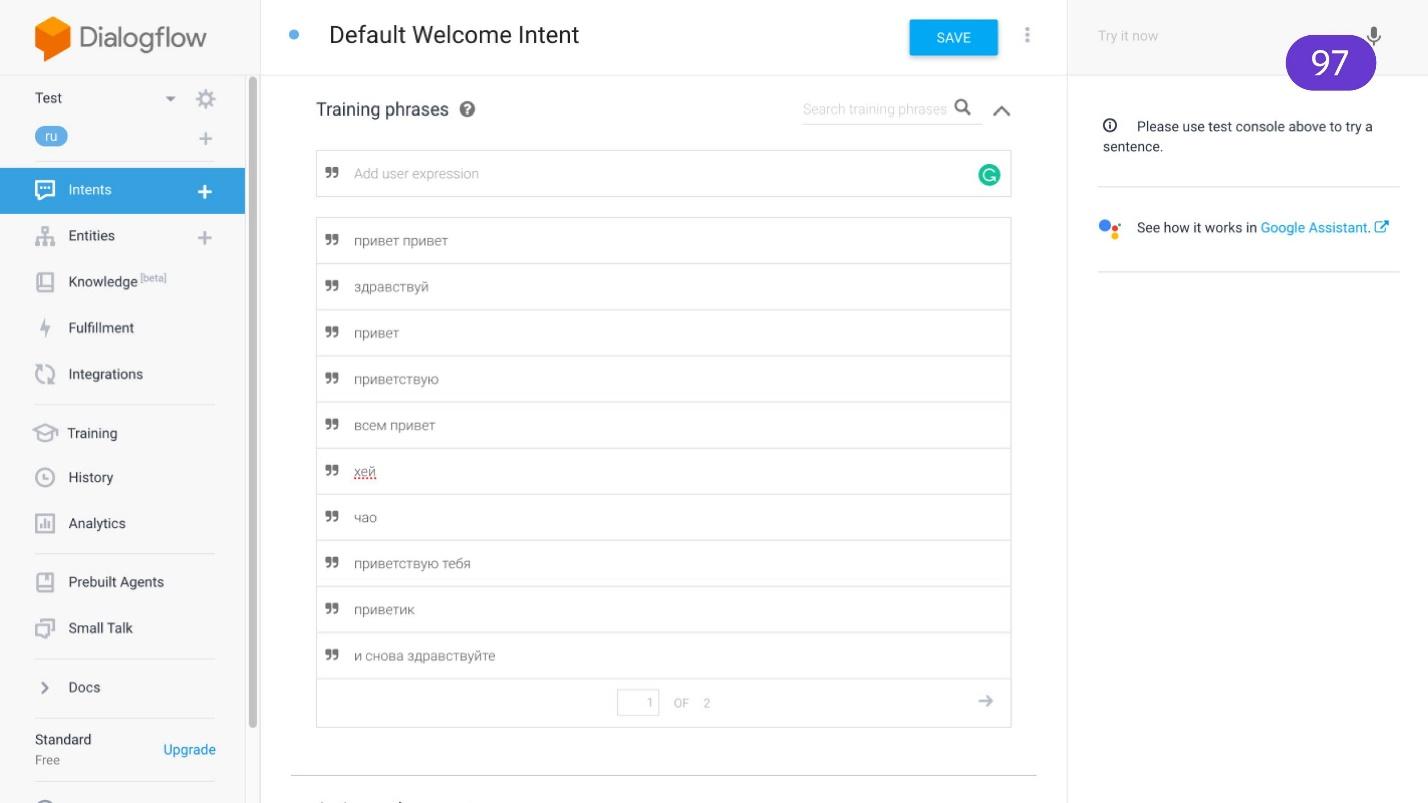

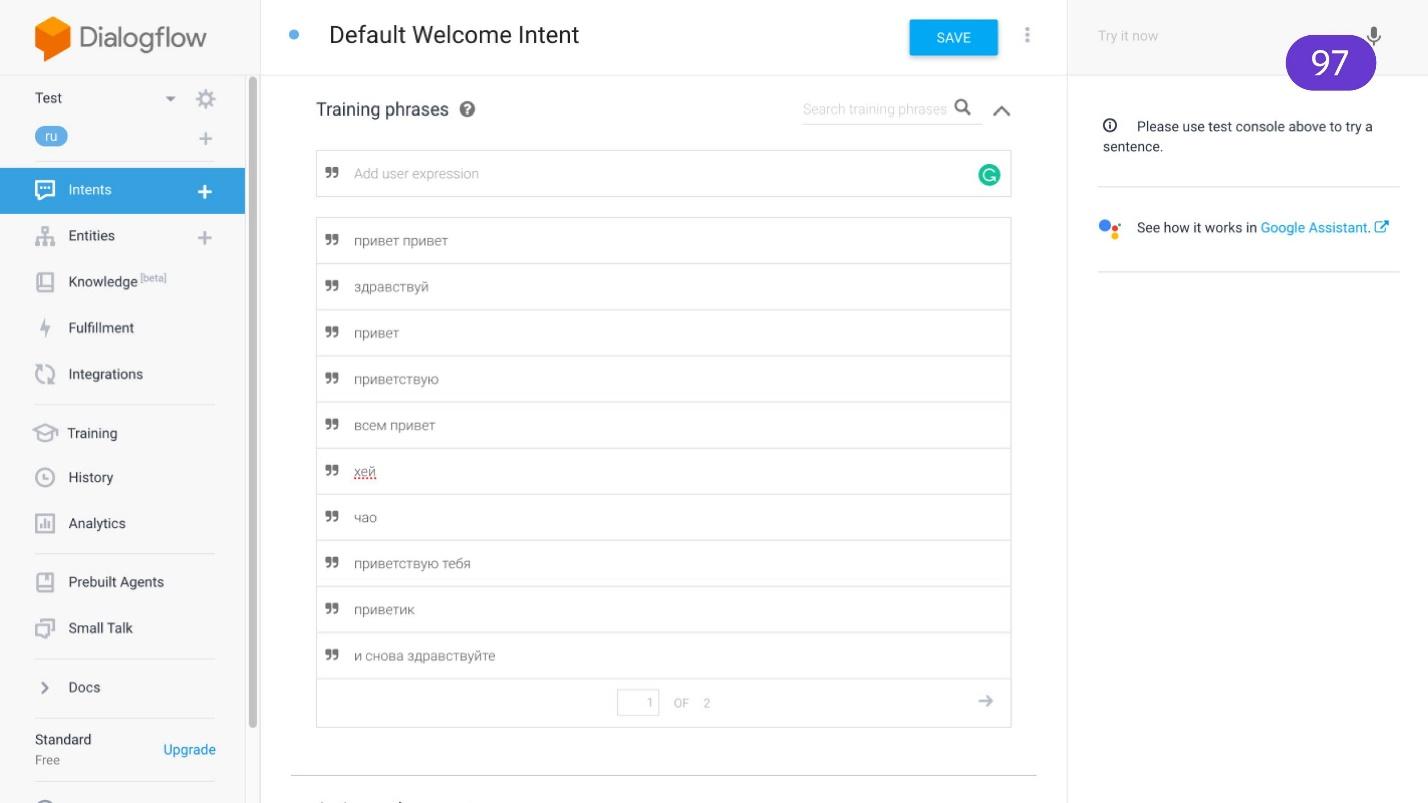


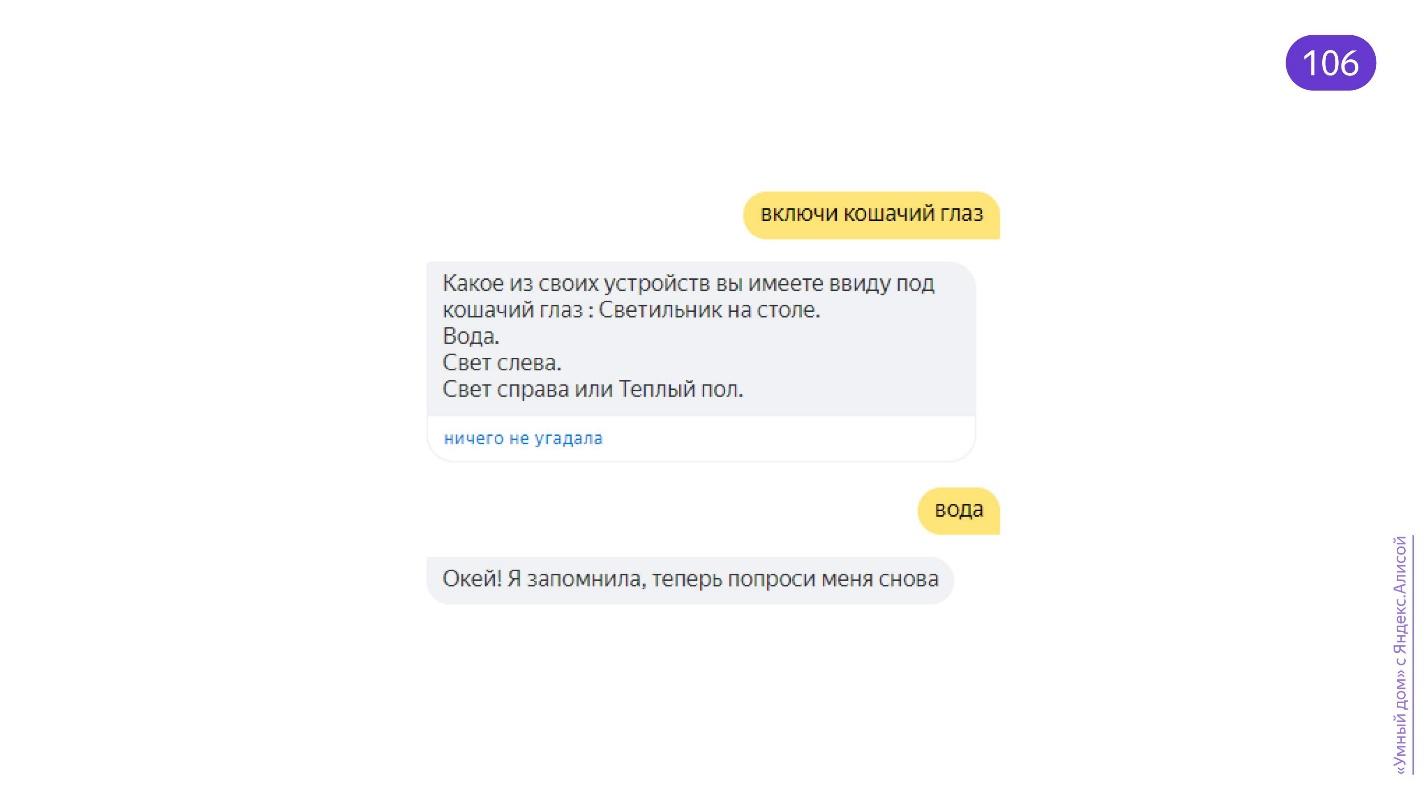


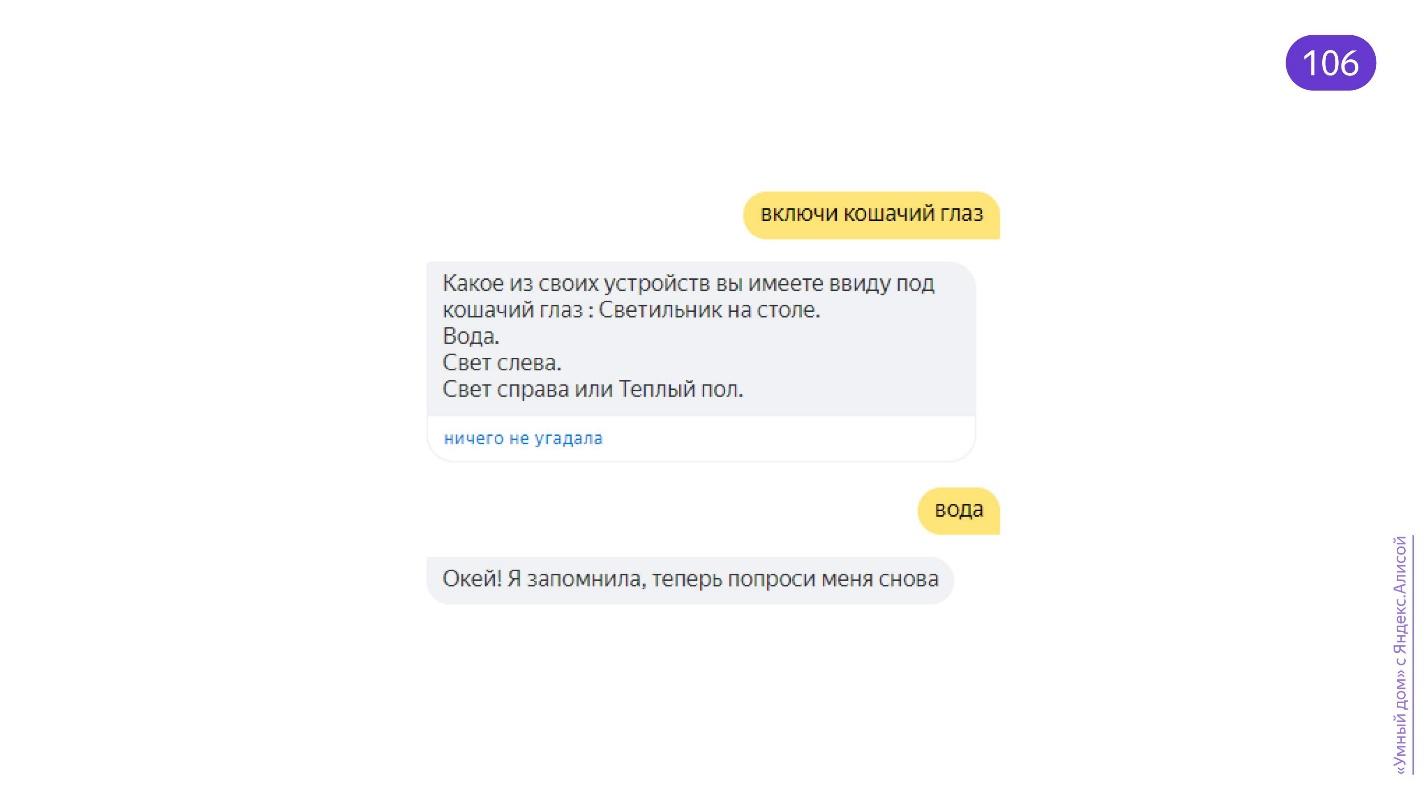


Siri or Alexa speak mostly English to users, so in Russia they are not as popular as Yandex's Alice. For developers, Alice is also more convenient: her creators have a blog, post convenient tools on GitHub and help build the assistant in new devices.

Nikita Dubko (@dark_mefody on Twitter) is a Yandex interface developer, organizer of MinskCSS and MinskJS meetings and a news editor in Web standards. Nikita does not work in Yandex.Dialogs and is not affiliated with Yandex.Alisa in any way. But he was interested in understanding how Alice works, so he tried to apply her skills for the Web and prepared a report on this at FrontendConf RIT ++. In deciphering Nikita's report, we will consider what voice assistants can bring useful and build a skill right in the process of reading this material.

Bots

Let's start with the history of bots. In 1966, the Eliza bot appeared, pretending to be a psychotherapist. One could communicate with him, and some even believed that a living person answers them. In 1995, the ALICE bot came out. - not to be confused with Alice. The bot was able to impersonate a real person. To this day lies in Open Source and is being finalized. Unfortunately, ALICE does not pass the Turing Test, but it does not stop him from misleading people.

In 2006, IBM placed a huge knowledge base and sophisticated intelligence in the bot - that is how IBM Watson came about. This is a huge computing cluster that can process English speech and give out some facts.

In 2016, Microsoft conducted an experiment. She created the Tay bot, which she launched on Twitter. There, the bot learned to microblog based on how live subscribers interacted with it. As a result, Tay became a racist and misogynist. Now this is a closed account. Moral: do not let children on Twitter, he can teach the bad.

But these are all bots that you can’t communicate with for your own benefit. In 2015, “useful” appeared on Telegram. Bots existed in other programs, but Telegram made a splash. It was possible to create a useful bot that would provide information, generate content, administer publics - the possibilities are great, and the API is simple. Bots added pictures, buttons, tooltips - an interaction interface appeared.

Gradually, the idea spread to almost all instant messengers: Facebook, Viber, VKontakte, WhatsApp and other applications. Now bots are a trend, they are everywhere. There are services that allow you to write APIs immediately for all platforms.

Voice assistants

The development went in parallel with the bots, but we will assume that the era of assistants came later.

On August 9, 2011, Siri appeared. Initially, it was an independent project in which Apple saw something interesting, so I bought it. This is the oldest popular voice assistant built into the OS. A year later, Google quickly caught up with Apple by integrating Google Now’s voice assistant into its operating system.

After 2 years, Microsoft released Microsoft Cortana . But it’s not clear why - the mobile market for voice assistants, it seems, they have already missed. The company tried to integrate a voice assistant into desktop systems, when there was already a struggle for the market of different devices. Amazon Alexa came out a little later that year.

Assistants have evolved. In addition to software systems that knew how to work with voice, speakers appeared with assistants. According to statistics, at the beginning of 2019, every third family in the United States has a smart column. This is a huge market in which you can invest.

But there is a problem - foreign assistants have a bad language with Russian. The assistants are imprisoned in English and understand it well, but when communicating in Russian, translation difficulties arise. Languages are different and require a different approach to natural language processing.

Alice

Alice released in open beta on October 10, 2017. It is imprisoned for the Russian language and this is its huge advantage. Alice understands English, but worse.

Alice's mission is to help Russian-speaking users.

Yandex is a large company and can afford to embed Alice in all of its applications that can somehow talk.

- Yandex browser.

- Yandex.Navigator.

- Yandex Station.

- Yandex.Phone.

- Yandex.Auto.

- Yandex.Drive.

The integration went so well that third-party manufacturers also decided to build Alice.

Over the 2 years of development of the assistant, she was integrated into many services and added new skills. She knows how to play music, recognize pictures, search for information in Yandex and work with a smart home.

Why so popular?

It is convenient when hands are busy . I am preparing dinner and want to turn on the music. Go to the tap, wash your hands, dry, open the application, find the desired track - for a long time. Faster and easier to give a voice command.

Laziness . I am lying on a sofa under a plaid blanket and I do not want to get up to go somewhere to turn on the speakers. If you are lazy, then in full.

The big market is apps for kids . Young children do not yet know how to read, write and print, but they talk and understand speech. Therefore, children adore Alice and love to communicate with her. Parents are also happy - there is no need to look for what to do with the child. Interestingly, Alice understands children thanks to a well-trained neural network.

Availability Visually impaired people are comfortable working with voice assistants - when the interface is not visible, you can hear it and give it commands.

Voice faster . An average person, not a developer, prints an average of 30 words per minute, and says 120. Per minute, 4 times more information is transmitted by voice.

The future . Fantastic movies and futuristic predictions suggest that the future is with voice interfaces. Scriptwriters think that voice control will probably be the main way to interact with interfaces where the picture is not so important.

According to statistics, 35 million people use Alisa per month. By the way, the population of Belarus is 9,475,600 people. That is, approximately 3.5 Belarus uses Alice every month.

Voice assistants conquer the market. According to forecasts, by 2021 it will grow by about 2 times. Today's popularity will not stop, but will continue to grow. More and more developers realize that they need to invest in this area.

Developer Skills

It’s great when companies invest in voice assistants. They understand how they can be integrated with their services. But developers also want to somehow participate in this, and the company itself is profitable.

Alexa has Alexa Skills. According to the documented methods of interaction, she understands what the developers wrote for her. Google launched Actions - the ability to integrate something of its own into the voice assistant.

Alice also has skills - the ability for developers to implement something third-party.

At the same time, there is an alternative catalog of skills, not from Yandex, which is supported by the community.

There are good reports on how to make voice applications. For example, Pavel Guy spoke at AppsConf 2018 with the theme “Creating a voice application using the example of Google Assistance” . Enthusiasts are actively involved in the development of voice applications. One example is a visual voice-activated game written by Ivan Golubev.

Alice is popular, although essentially everything she does is halfway between voice and text.

Alice knows how to listen to a voice and turn it according to her algorithms into text, create an answer and voice it. It seems that this is not enough, but it is an extremely difficult task. A lot of people are working to ensure that Alice sounds natural, correctly recognizes, understands accents and children's speech. Yandex provides something like a proxy that passes everything through itself. Stunning minds work so that you can use the results of their work.

Alice's skills - Yandex.Dialogs - have one limitation. The time taken for your API to respond must not exceed 1.5 seconds. And this is logical, because if the answer hangs - why wait?

Does it really matter what to ask, if you still do not get an answer?

When we receive information with our ears, pauses are perceived by the brain longer than similar pauses in the visual interface. For example, loaders, spinners - everything that we like to add to interfaces distract the user from waiting. Consider everything working fast.

Time for a demo

Everything is described in detail in the Yandex.Dialogs documentation, which is always up to date, so I will not repeat it. Instead, I'll tell you what I found interesting and show how to quickly build a demo; it took me just one evening.

Let's start with the idea. There are many skills and several catalogs, but I didn't find the one that matters to me: a calendar of front-end events. Imagine waking up in the morning and thinking: "I'm going to a meetup today. Alice, is there anything interesting there?" And Alice answers you correctly, taking your location into account.

If you organize conferences, join us on GitHub: you can add events and meetups there and learn about many front-end events around the world from a single calendar.

I took familiar technologies that were at hand: Node.js and Express, plus Heroku, because it's free. The application itself is simple: an Express server on Node.js. Just start the server on some port and listen for requests.

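```javascript
// server.js: a minimal Express app
import express from 'express';
import { router } from './routes'; // the router defined in routes.js

const app = express();
app.use(express.json()); // parse the JSON bodies of POST requests from Yandex.Dialogs
app.use('/', router);

// Heroku passes the port in the PORT environment variable
const port = process.env.PORT || 8000;
app.listen(port, () => {
  console.log(`Server started on :${port}`);
});
```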

I took advantage of the fact that the Web Standards calendar is already set up: its many small event files are compiled into a single ICS file that you can download. Why build my own?

```javascript
// services/vendors/web-standards.js
import axios from 'axios';

const axiosInstance = axios.create({
  baseURL: 'https://web-standards.ru/',
});

// Download the single ICS file with all calendar events
export function getRemoteCal() {
  return axiosInstance.get('calendar.ics');
}
```

Let's quickly check that everything works:

```javascript
// routes.js: a GET route for debugging
import { Router } from 'express';
import * as wst from './services/vendors/web-standards';

export const router = Router();

router.get('/', function(req, res, next) {
  wst
    .getRemoteCal()
    .then(vendorResponse => parseCalendar(vendorResponse.data)) // parse the ICS text
    .then(events => {
      res.json({ events });
    })
    .catch(next); // pass errors on to Express
});
```

Use GET methods for tests. Skills work over POST, so GET routes can be reserved purely for debugging. I implemented one such route: all it does is download the same ICS file, parse it and return it as JSON.

I built the demo quickly, so I took the ready-made node-ical library:

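```javascript
import ical from 'node-ical';

// Wrap node-ical's callback-style parser in a Promise
function parseCalendar(str) {
  return new Promise((resolve, reject) => {
    ical.parseICS(str, (err, data) => {
      if (err) {
        reject(err);
        return; // do not resolve after a failure
      }
      resolve(data); // an object of calendar entries keyed by UID
    });
  });
}
```

It parses the ICS format and outputs an object like this: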

```json
{
  "2018-10-04-frontendconf@https://web-standards.ru/": {
    "type": "VEVENT",
    "params": [],
    "uid": "2018-10-04-frontendconf@https://web-standards.ru/",
    "sequence": "0",
    "dtstamp": "2019-05-25T21:23:50.000Z",
    "start": "2018-10-04T00:00:00.000Z",
    "datetype": "date",
    "end": "2018-10-06T00:00:00.000Z",
    "MICROSOFT-CDO-ALLDAYEVENT": "TRUE",
    "MICROSOFT-MSNCALENDAR-ALLDAYEVENT": "TRUE",
    "summary": "FrontendConf",
    "location": "",
    "description": "http://frontendconf.ru/moscow/2018"
  }
}
```

To give the skill's users the information they need, it is enough to know the event's start and end time, its name, its link and, importantly, its city. I want the skill to search for events by city.
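Here is a sketch of reducing the parsed object to exactly those fields. It assumes node-ical's output shape shown above. It also assumes the event link sits in "description" and the city, when present, in "location" (the sample's location is empty). `toEvents` is a hypothetical name.

```javascript
// Hypothetical helper: flatten node-ical's output (an object keyed by UID)
// into a list with only the fields the skill needs.
function toEvents(parsed) {
  return Object.values(parsed)
    .filter((item) => item.type === 'VEVENT') // skip time zones and other entries
    .map((item) => ({
      summary: item.summary,
      start: new Date(item.start),
      end: item.end ? new Date(item.end) : null,
      url: item.description || '', // assumption: the link is in the description
      city: (item.location || '').toLowerCase(), // assumption: the city is in the location
    }));
}
```

Lowercasing the city up front makes later comparisons with the user's words case-insensitive.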

Input format

How does Yandex.Dialogs pass information to your skill? A smart speaker, or the voice assistant built into a mobile app, listens to you. Yandex servers process what they hear and send your skill an object like this:

```json
{
  "meta": { … },
  "request": { … },
  "session": { … },
  "version": "1.0"
}
```

The object contains meta-information, information about the request, the current session and the API version, so that skills don't break if the API is suddenly updated.

The "meta" field holds a lot of useful information:

```json
{
  "meta": {
    "locale": "ru-RU",
    "timezone": "Europe/Moscow",
    "client_id": "ru.yandex.searchplugin/5.80…",
    "interfaces": {
      "screen": {}
    }
  }
}
```

"locale" helps you understand the user's region.

"timezone" helps you work with time correctly and determine the user's location more accurately.

"interfaces" describes the screen. If there is no screen, think about how the user will see any pictures you return in the answer. If there is a screen, you can show information on it.

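For example, checking for a screen could look like this. It is a sketch that assumes the "meta.interfaces" layout shown above; `hasScreen` is a hypothetical name.

```javascript
// Hypothetical helper: true if the client reported a "screen" interface.
function hasScreen(request) {
  const interfaces = (request.meta && request.meta.interfaces) || {};
  return Object.prototype.hasOwnProperty.call(interfaces, 'screen');
}
```

When it returns false, the skill can skip pictures and answer by voice only.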
The request format is simple:

```json
{
  "request": {
    "command": "… 16",
    "original_utterance": "…, 16",
    "type": "SimpleUtterance",
    "nlu": {
      "tokens": ["…", "…", "…", "…", "…", "16"],
      "entities": [...]
    }
  }
}
```

It contains what the user said, the request type and the NLU (natural language understanding) result. This is exactly the magic that the Yandex.Dialogs platform takes care of: it splits the recognized sentence into tokens, that is, words. There are also entities, which we will talk about a little later. Tokens are enough to start with.

We have the words; now what do we do with them? The user may say the words in a different order, or add the particle "not", which changes the meaning completely. They may also use a different form of a word, such as "in the morning" instead of "morning". If the user also speaks Belarusian, "morning" becomes "раніца". A large project needs linguists to build a skill that understands all of this, but my task was simple, so I managed without outside help.
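Without linguists, a rough first step is to match word stems instead of exact words, so that different word forms and word orders still match. A match that comes right after a negation is then rejected. This is a hypothetical sketch: `matchesIntent` is not from the demo. The stems and negation words are placeholders; by default the negation is the Russian particle "не" ("not").

```javascript
// Hypothetical matcher: true if some token starts with one of the stems
// and is not directly preceded by a negation word. Word order does not matter,
// because every token is checked on its own.
function matchesIntent(tokens, stems, negations = ['не']) {
  return tokens.some((token, i) => {
    const word = token.toLowerCase();
    const hit = stems.some((stem) => word.startsWith(stem));
    const negated = i > 0 && negations.includes(tokens[i - 1].toLowerCase());
    return hit && !negated;
  });
}

// "мероприятия" means "events"; the stem covers its other forms too.
console.log(matchesIntent(['покажи', 'мероприятия'], ['мероприят'])); // true
console.log(matchesIntent(['не', 'мероприятия'], ['мероприят']));     // false
```

This only looks one word back, so it will miss negations placed elsewhere in the phrase. That is the point where a linguist becomes useful.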

Can a computer talk like a person?

This is a philosophical question that the Turing test tries to answer. The test estimates, with some probability, whether an artificial intelligence can pass for a human. There is the Loebner Prize, for which programs compete in passing the Turing test; a panel of judges makes the decision. To win, a program has to fool 33% of the judges or more. Only in 2014 did the bot Eugene Goostman, created in St. Petersburg, finally fool the panel.

[15:46:05] Judge: My favourite music is contemporary Jazz, what do you prefer?

[15:46:14] Eugene: To be short I'll only say that I HATE Britnie [sic] Spears. All other music is OK compared to her.

[15:47:06] Judge: do you like to play any musical instruments

[15:47:23] Eugene: I'm tone deaf, but my guinea pig likes to squeal Beethoven's Ode to Joy every morning. I suspect our neighbors want to cut his throat... Could you tell me about your job, by the way?

[15:48:02] Judge: Guinea pig? Are you an animal lover

[15:48:08] Eugene: Yeah. A nice little guinea pig. Not some annoying chatter bot.

In 2019, nothing has really changed: it is still hard to fool a person. But we are gradually moving in that direction.

Working on the script

A good skill needs an interesting scenario. I recommend one book: "Designing Voice User Interfaces: Principles of Conversational Experiences". It is great on writing scripts for voice interfaces and keeping the user's attention. I haven't seen it in Russian, but the English original is quite easy to read.

Skill development starts with the greeting.

"Curtsey while you're thinking what to say. It saves time."

When the skill starts, you need to hook the user from the very first second, and for that you need to explain how to use the skill. Imagine the user launched the skill and got silence. How would they know whether it works at all? Give the user instructions, for example buttons on the screen.
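A minimal greeting sketch follows. It assumes the "session.new" flag that Yandex.Dialogs sets on the first request of a session. The phrases and button titles are English placeholders (a real skill would answer in Russian). `isFirstMessage` and `prepareGreeting` are hypothetical names.

```javascript
// Hypothetical check: the first request of a session has session.new === true.
function isFirstMessage(req) {
  return Boolean(req.session && req.session.new);
}

// Hypothetical greeting: say what the skill does and offer buttons to press.
function prepareGreeting(req) {
  const { session, version } = req;
  return {
    response: {
      text: 'Hi! I know about upcoming front-end events. Name a city or ask what is coming up.',
      buttons: [
        { title: 'Upcoming events', hide: true },
        { title: 'Help', hide: true },
      ],
      end_session: false, // keep the conversation going
    },
    session, // echo back what Yandex.Dialogs sent
    version,
  };
}
```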

Easy dialogue

Here are the signs of an easy dialogue. The list was put together by Ivan Golubev, and I really like the wording.

- Personal.

- Natural.

- Flexible.

- Contextual.

- Proactive.

- Brief.

Personal means the bot must have a character. If you talk to Alice, you will notice that she has one; her developers take care of that. So for your bot to feel organic, it needs a "personality" too: speak in one voice and reuse the same verbal constructions. This helps retain the user.

Natural. If the user's request is simple, the answer should be simple too. While talking to the bot, the user must always understand what to do next.

Flexible. Be ready for anything. Russian has many synonyms. The user may turn away from the speaker to talk to someone else and then come back to it. All this is hard to handle, but if you want to build a good bot, you have to. Note that some share of phrases will still go unrecognized; be prepared for this and offer options.

Contextual: ideally, the bot remembers what happened before. That makes the conversation feel alive.

- Alice, what is the weather like today?

- Today in the District from +11 to +20, cloudy, with clearings.

- And tomorrow?

- Tomorrow in the District from +14 to +27, cloudy, with clearings.

Imagine your bot can't store context. What would the question "And tomorrow?" mean to it? If you can store context the way Alice does, you can use previous results to improve your skill's answers.
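Below is a minimal sketch of keeping context between turns. It assumes an in-memory Map keyed by the session ID that Yandex.Dialogs sends in "session". `withContext`, `dropEmpty` and the `city`/`day` fields are hypothetical. A skill running on several servers would keep this in a database instead.

```javascript
// Hypothetical in-memory store: session ID -> the last known query.
const contexts = new Map();

// Drop fields the new utterance did not mention (undefined or null).
function dropEmpty(query) {
  return Object.fromEntries(
    Object.entries(query).filter(([, value]) => value !== undefined && value !== null)
  );
}

// Merge the new query over the previous one, so "And tomorrow?" keeps the city.
function withContext(sessionId, query) {
  const previous = contexts.get(sessionId) || {};
  const merged = { ...previous, ...dropEmpty(query) };
  contexts.set(sessionId, merged);
  return merged;
}

// Usage: the first turn names a city, the follow-up only changes the day.
withContext('session-1', { city: 'minsk', day: 0 });
console.log(withContext('session-1', { day: 1 })); // { city: 'minsk', day: 1 }
```

Entries are never evicted here. A real skill would delete them when it returns "end_session": true.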

Proactive. If the user gets stuck, the bot should prompt them: "Press this button!", "Look, I have a picture for you!", "Follow the link." The bot should tell the user how to work with it.

Brief. When a person talks for a long time, it is hard for them to keep the audience's attention. It's even harder for a bot: nobody feels sorry for it, it's inanimate. To hold attention, make the conversation interesting, or keep it short and to the point. The book "Write, Cut" ("Пиши, сокращай") will help with that; read it when you start developing bots.

Database

When developing a complex bot, you can't do without a database. My demo is simple and doesn't use one. But if you plug in a database, you can use information about the user's session, at least to store context.

There is a nuance: Yandex.Dialogs does not give away the user's private information, such as name or location. But you can ask the user for it, save it and link it to the session ID that Yandex.Dialogs sends in the request.

State machine

Speaking of complex scenarios, one can't help recalling the state machine. It has long been used, very successfully, for programming microcontrollers and sometimes on the front end. A state machine suits scenarios well: there are states, and certain phrases move the conversation from one state to another.

Don't overdo it. It's easy to get carried away and build a huge state machine that is hard to figure out and hard to maintain. It's easier to write one script made of small sub-scripts.
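Here is a sketch of a deliberately small state machine: a table of states in which certain trigger words move the conversation to another state. The state names and English trigger words are hypothetical placeholders; real triggers would come from Alice's (Russian) tokens.

```javascript
// Hypothetical transition table: state -> { trigger word -> next state }.
const transitions = {
  start: { events: 'listing', help: 'help' },
  listing: { more: 'listing', city: 'askCity', stop: 'done' },
  askCity: { stop: 'done' },
  help: { events: 'listing', stop: 'done' },
};

// Return the next state for the first token that triggers a transition;
// stay in the current state if nothing matches.
function nextState(state, tokens) {
  const table = transitions[state] || {};
  const trigger = tokens.find((t) => Object.prototype.hasOwnProperty.call(table, t));
  return trigger ? table[trigger] : state;
}
```

Keeping the table this small is the point: each sub-scenario can get its own small table instead of one huge machine.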

Unclear? Clarify

Never say "Please repeat." What does a person do when asked to repeat? They speak louder. If the user yells at your skill, recognition won't improve. Ask a clarifying question instead: if part of the user's phrase was recognized and something is missing, ask only about the missing part.
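For example, the user may clearly ask about events while no city is recognized. In that case, the skill can ask only about the city. This is a hypothetical sketch: `clarifyOrAnswer`, the `wantsEvents` and `city` fields and the wording are placeholders.

```javascript
// Hypothetical slot check: ask about the missing part
// instead of asking the user to repeat everything.
function clarifyOrAnswer(query) {
  if (query.wantsEvents && !query.city) {
    return { text: 'Which city are you interested in?', end_session: false };
  }
  return null; // nothing is missing: the caller builds the real answer
}
```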

Recognition is the hardest part of developing a bot, so sometimes even clarification doesn't help. In any confusing situation, the best approach is to collect everything in one place and log it, then analyze it and use it later. This applies, for example, when the user says something frankly strange and incomprehensible:

"'Twas brillig, and the slithy toves

Did gyre and gimble in the wabe:

All mimsy were the borogoves,

And the mome raths outgrabe."

Users may unexpectedly use a neologism that does mean something and has to be handled somehow, and the recognition rate drops. Don't worry: log it, study it and improve your bot.

Stop word

There must be a way to stop the skill when the user wants to leave it. Alice stops after the phrases "Alice, stop it!" or "Alice, stop!", but users usually don't read instructions. So at least respond to the word "Stop" and return control to Alice.

Now let's see the code.

Time for a demo

I want to support the following phrases:

- Upcoming events in a city.

- Name of the city: “events in Moscow”, “events in Minsk”, “St. Petersburg” to show the events that were found there.

- Stop words: "Stop", "Stop it", and "Thank you" if the user ends the conversation with it. Ideally, though, a linguist is needed here.

Any phrase works for "upcoming events". I made a lazy bot: when it doesn't understand what was said, it returns the next three events.
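The lazy fallback could be as simple as sorting upcoming events by start date and taking the first three. This sketch assumes each event has a "start" field, as in the parsed calendar; `nextEvents` is a hypothetical name.

```javascript
// Hypothetical fallback for the "lazy" bot: the next `count` events by start date.
function nextEvents(events, count = 3, now = new Date()) {
  return events
    .filter((event) => new Date(event.start) > now) // only events that haven't started
    .sort((a, b) => new Date(a.start) - new Date(b.start)) // filter() returned a copy, so the input is untouched
    .slice(0, count);
}
```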

```json
{
  "request": {
    "nlu": {
      "entities": [
        {
          "tokens": { "start": 2, "end": 6 },
          "type": "YANDEX.GEO",
          "value": {
            "house_number": "16",
            "street": "…",
            "city": "…"
          }
        }
      ]
    }
  }
}
```

Yandex keeps improving the Yandex.Dialogs platform and returns the entities it has managed to recognize. For example, it can extract addresses from text and split them into parts: city, country, street, house. It also recognizes numbers and dates, both absolute and relative: it understands that "tomorrow" means today's date plus one day.
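Below is a sketch of turning a date entity into a concrete date. It assumes the YANDEX.DATETIME value layout described in the Yandex.Dialogs documentation: a relative day comes as a number with a "day_is_relative" flag, so "tomorrow" becomes "day": 1. It also assumes absolute months are 1-based. Treat these field names as assumptions to check against the current docs. `resolveDay` is hypothetical and handles only year, month and day.

```javascript
// Hypothetical resolver for a YANDEX.DATETIME entity value (day precision only).
function resolveDay(value, now = new Date()) {
  let year = now.getFullYear();
  let month = now.getMonth(); // 0-based, as in JavaScript Date
  let day = now.getDate();
  if (value.year !== undefined) year = value.year_is_relative ? year + value.year : value.year;
  if (value.month !== undefined) month = value.month_is_relative ? month + value.month : value.month - 1;
  if (value.day !== undefined) day = value.day_is_relative ? day + value.day : value.day;
  // The Date constructor normalizes overflow, e.g. May 32 becomes June 1.
  return new Date(year, month, day);
}
```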

Reply to user

You need to respond to the user somehow. The whole skill is 209 lines, counting the final empty line. Nothing complicated, an evening's work.

All you do is handle the POST request and take the request object from its body:

```javascript
router.post('/', (req, res, next) => {
  const request = req.body; // the object sent by Yandex.Dialogs
  // ...the handler continues below
```

Beyond that, I didn't build an elaborate state machine; I went by priorities instead. If the user wants to learn how to use the bot, this is either the first launch or a request for help. So we just prepare an "EmptyResponse" for them, as I call it.

```javascript
  // First launch or a request for help: explain how to use the skill
  if (needHelp(request.request)) {
    res.json(prepareEmptyResponse(request));
    return;
  }
```

The needHelp function is simple.

```javascript
function needHelp(req) {
  // A short phrase containing the word "help" (in Russian, "помощь")
  if (req.nlu.tokens.length <= 2 && req.nlu.tokens.includes('помощь')) {
    return true;
  }
  // No words at all and not a button press: the skill has just been launched
  if (req.nlu.tokens.length === 0 && req.type !== 'ButtonPressed') {
    return true;
  }
  return false;
}
```

When there are zero tokens, we are at the start: the user has just launched the skill or said nothing. We need to check both that there are no tokens and that this isn't a button press, because pressing a button doesn't produce any words either. When the user asks for help, we look through the tokens for the word "help". The logic is simple.

Next, check whether the user wants to stop:

```javascript
  if (needToStop(request.request)) {
    res.json(prepareStopResponse(request));
    return;
  }
```

To find out, we look for a stop word in the request:

```javascript
function needToStop(req) {
  // "stop", "enough" ("stop it") and "thank you" in Russian
  const stopWords = ['стоп', 'хватит', 'спасибо'];
  return req.nlu.tokens.length <= 2 && stopWords.some(w => req.nlu.tokens.includes(w));
}
```

In every answer, you must return the session information that Yandex.Dialogs sent. The platform needs it to match your answer to the user's request.

```javascript
function prepareStopResponse(req) {
  const { session, version } = req;
  return {
    response: {
      text: '…', // a short goodbye phrase
      end_session: true, // hand control back to Alice
    },
    session, // echo back what Yandex.Dialogs sent
    version,
  };
}
```

So return whatever you received in "session" and "version", and everything will be fine. In the answer itself, you can give Alice some text to say and pass "end_session: true". This ends the skill session and hands control back to Alice.

While a skill is running, Alice steps aside: she listens only for her own stop words, and you fully control the conversation inside the skill. That's why you need to hand control back.

The response to an empty request is more interesting:

```javascript
function prepareEmptyResponse(req) {
  const { session, version } = req;
  return {
    response: {
      text: '…', // greeting and short instructions
      tts: '…', // the same text, marked up for speech
      buttons: [
        { title: '…', payload: {}, hide: false },
        // a city button passes its city in the payload
        { title: '…', payload: { city: '…' }, hide: false },
      ],
      end_session: false,
    },
    session,
    version,
  };
}
```

There is a "tts" (text-to-speech) field that controls how the answer is voiced. Its format is simple and lets Alice read the text in different ways. For example, the Russian word for "multidisciplinary" has two stresses, a primary and a secondary one, and the task is to make Alice pronounce it correctly. You can split the word into two parts with a space, and she will read them as two words; a plus sign before a vowel marks the stress.

Speech also has pauses: put a punctuation mark surrounded by spaces, and you can create dramatic pauses.
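A small helper can keep the on-screen text plain while adding pauses only to the spoken version. It assumes, per the Yandex.Dialogs "tts" markup, that a hyphen surrounded by spaces (" - ") is read as a pause. `withPauses` is a hypothetical name.

```javascript
// Hypothetical helper: plain text for the screen, " - " pauses for speech.
function withPauses(phrases) {
  return {
    text: phrases.join(' '),
    tts: phrases.join(' - '),
  };
}
```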

I've already mentioned buttons. They matter when the user talks not to a smart speaker but, for example, to the Yandex mobile app.

```json
{
  "response": {
    "buttons": [
      {
        "title": "Frontend Conf",
        "payload": {},
        "url": "https://frontendconf.ru/moscow-rit/2019",
        "hide": false
      }
    ]
  }
}
```

Buttons also hint at the phrases your skill understands. Skills run inside Yandex apps, where the user interacts with an interface. If you want to share some information, give a link the user can tap; buttons work for this too.

There is a "payload" field where you can put data. When the button is pressed, that data comes back in the request, so you know, for example, which button was pressed.
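Here is a sketch of that round trip. It assumes that a pressed button arrives with request type "ButtonPressed", as checked in needHelp, and carries the button's "payload" object. `cityButton` and `cityFromRequest` are hypothetical names, and `req` here is the inner "request" part of the body.

```javascript
// Hypothetical: a button that carries the city in its payload.
function cityButton(title, city) {
  return { title, payload: { city }, hide: false };
}

// When the button is pressed, the payload comes back in the request.
function cityFromRequest(req) {
  if (req.type === 'ButtonPressed' && req.payload && req.payload.city) {
    return req.payload.city;
  }
  return null;
}
```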

You can choose the voices that your skill will speak.

- Alice - Alice's standard voice. Optimized for short interactions.

- Oksana - the voice of Yandex.Navigator.

- Jane.

- Zahar.

- Ermil.

- Erkan Yavas - for long texts. Originally created to read the news.

To end the skill session, just return "end_session: true".

```json
{
  "response": {
    "end_session": true
  }
}
```

How the demo works

First, I filter by date.

```javascript
function filterByDate(events) {
  const current = new Date().getTime();
  return events.filter(event => {
    const start = new Date(event.start).getTime();
    // Keep future events and events that are in progress right now
    return (start > current) ||
      (event.end && new Date(event.end).getTime() > current && start <= current);
  });
}
```

The logic is simple: from all the events parsed from the calendar, I keep those that will happen in the future or are happening now. Asking about past events would probably be strange; that's not what the skill is for.

Next comes filtering by place, which is what it was all about.

```javascript
function filterByPlace(events, req) {
  const cities = new Set();
  const geoEntities = req.nlu.entities.filter(e => e.type === 'YANDEX.GEO');

  // A city from a pressed button's payload
  if (req.payload && req.payload.city) cities.add(req.payload.city);

  // Cities that Yandex recognized in the utterance
  geoEntities.forEach(e => {
    const city = e.value.city && e.value.city.toLowerCase();
    if (city && !cities.has(city)) {
      cities.add(city);
    }
  });

  // ...matching these cities against the events follows, as described below
}
```

In "entities", you may find a YANDEX.GEO entity that pins down the location. If it contains a city, we add it to our set. The rest of the logic is simple too: we look for this city in the tokens, and if it is there, we look for what the user wants. If not, we go through all the "locations" and "events" we have.

Suppose Yandex didn't recognize a YANDEX.GEO entity, but the user did name a city, sure that something was happening there. We go through all the cities in the events and look for each of them among the tokens. That's a cross-comparison of two arrays: not the most efficient approach, of course, but it works. And that's the whole skill!
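The rest of filterByPlace is not shown, so here is one possible completion of the logic just described. It is a sketch, not the author's actual code. It assumes each event has a lowercase "city" field (for instance, taken from the ICS location) and that `req` is the "request" part of the Yandex.Dialogs body. `matchPlaces` is a hypothetical name.

```javascript
// One possible completion: collect cities, then keep events in those cities.
function matchPlaces(events, req) {
  const cities = new Set();
  const tokens = req.nlu.tokens.map((t) => t.toLowerCase());

  // 1. Cities from a button payload and from YANDEX.GEO entities.
  if (req.payload && req.payload.city) cities.add(req.payload.city.toLowerCase());
  req.nlu.entities
    .filter((e) => e.type === 'YANDEX.GEO' && e.value && e.value.city)
    .forEach((e) => cities.add(e.value.city.toLowerCase()));

  // 2. Fallback: cross-compare every known event city with the tokens.
  if (cities.size === 0) {
    events.forEach((event) => {
      if (event.city && tokens.includes(event.city)) cities.add(event.city);
    });
  }

  // No city named at all: leave the list unfiltered.
  if (cities.size === 0) return events;
  return events.filter((event) => cities.has(event.city));
}
```

Multi-word city names may be split across several tokens, so the token fallback only catches single-word cities.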

Please don't scold me for the code; I wrote it quickly. Everything in it is primitive, but try using it or just play around with it.

Publishing the skill

Go to the Yandex.Dialogs page.

Choose "Skill in Alice" and press the "Create dialog" button. You'll get a form to fill in with your data:

- Name: what will be shown in the dialog.

- Activation name. If you write the activation name with a hyphen, like "Web-Standards Calendar", Alice won't recognize it: she doesn't hear hyphens. We say the words without a hyphen, so activation won't work. To make it work, set the name to "Web Standards Calendar".

- Activation phrases that launch the skill. For a game, these could be "Let's play..." or "Ask...". The set is limited because these phrases are what tell Alice it's time to switch to a skill.

- Webhook URL: the address to which Alice will send POST requests.

- Voice. The default is Oksana, which is why many skills in the catalog speak in her voice rather than Alice's.

- Device with a screen required. If your skill relies on pictures and you require a screen, that limits where the skill can be used: users won't be able to launch it on a smart speaker.

- Private skill: an important option for developers. If you're not ready to publish the skill, if only because it's still raw, make it private so it doesn't appear in the catalog. Private skills are moderated quickly, in a couple of hours. They don't need thorough testing; it's enough that the activation name matches. Since users won't find them in the catalog, moderators are more lenient with them.

- Notes for the moderator. I asked the moderator for help: "I really need this skill for a demo at a conference!", and I got through moderation quickly.

- Copyright notice. Suppose you don't work at, say, a bank but decide to build a skill for it. Then you need to prove that you have the right to do so. Otherwise the bank may come after you, and it certainly will, through the distributor, that is, Yandex, which doesn't need extra trouble.

Done: submit the skill for moderation, and you can start testing.

Testing

I wrote an ordinary Express server. It's a simple API that can be covered by regular tests. There are also specialized utilities, for example alice-tester, which works with the exact format Alice uses.

```javascript
const assert = require('assert');
const User = require('alice-tester');

it('should show help', async () => {
  const user = new User('http://localhost:3000'); // the skill's local URL
  await user.enter(); // enter the skill, starting a new session
  await user.say('…'); // the help question
  assert.equal(user.response.text, '…'); // the expected answer
  assert.equal(user.response.tts, '…'); // the same answer marked up for speech
  assert.deepEqual(user.response.buttons, [{title: '…', hide: true}]);
});
```

You can also send test requests by hand with Postman. Just AI offers tools for voice skills as well.

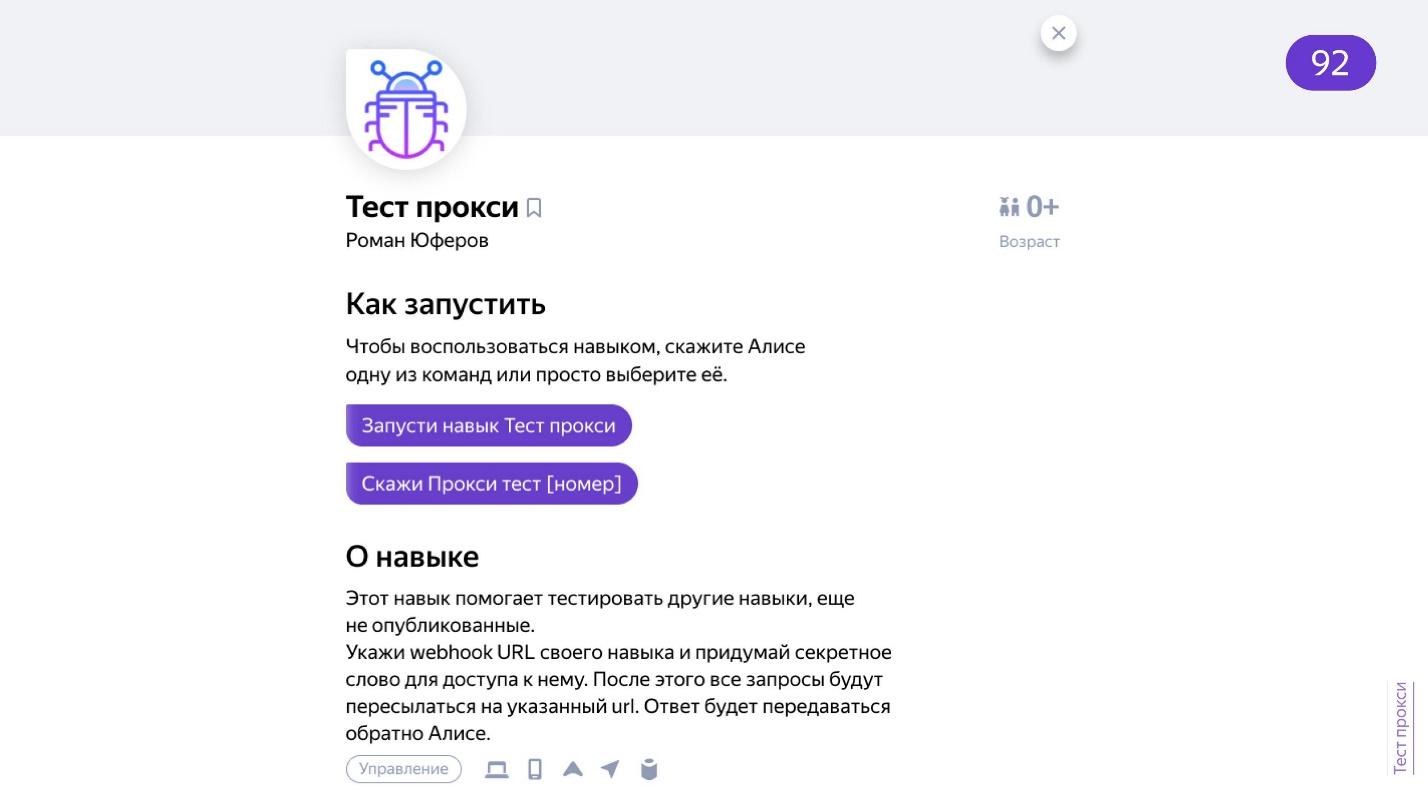

Another option is dialogs.home.popstas.ru: you give it your skill's URL, and it works even with localhost. It also has a debug mode.


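Google has Dialogflow, a platform for building conversational interfaces, including for its assistant (Google Now). Alongside Google Dialogflow there is Dialogflower. Alexa and Google Now also offer their own APIs. There are also tools for designing and building bots: Aimylogic, Zenbot, Tortu and Alfa.Bot. I recommend them!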

IFTTT connects different services through their APIs, for example Trello.

Alice also works with smart home devices, for example from Xiaomi, and with Arduino you can build a device of your own.

FrontendConf 2019 will include a talk about CSS.
