New Product Trends

About product analytics on Habré is written not so often, but publications, and good ones, appear with enviable regularity. Most articles on product analytics have appeared over the past couple of years, and this is logical - because product development is becoming increasingly important for both IT and business, only indirectly related to information technology.

Here, on Habré, an article was published in which the company’s expectations from a product analyst are well described. Such a specialist should, firstly, seek and find promising points of product growth, and secondly, identify and confirm the relevance of the problem by formulating and scaling it. You can’t say more precisely. But product analytics is developing, new tools for work and trends appear that help product analysts work. Just about trends, in relation to the work of mobile applications and services, we will talk in this article.

Custom data collection

Now the data that enables the company to improve its work by implementing a personalized approach to customer service is collected by everyone - from Internet companies like Google to retailers like Walmart.

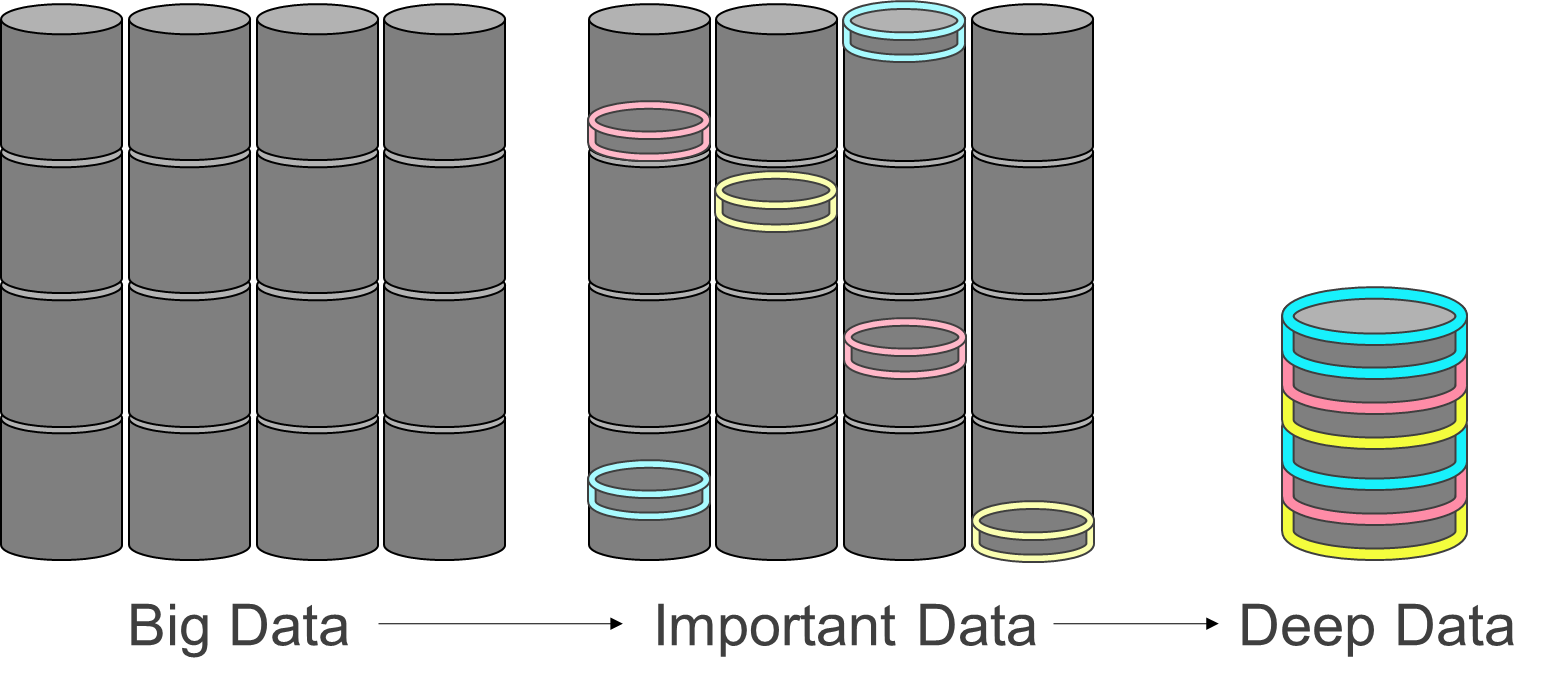

This information is not only about customers, but also about weather conditions that can affect the company’s work, average check size, customer preferences, dynamics of purchases of certain goods, congestion of points of sale, etc. But the problem is that there is more and more data, and it is very difficult for business to separate essential information from non-essential.

You can collect petabytes of data, and then it turns out that a company needs only a small fraction of the information collected to improve its performance. Everything else is “white noise”, which does not help in any way to move forward. Finding the right data is increasingly looking like finding a needle in a haystack. Only a stack the size of an iceberg, and the needle is thin and very small.

Any type of business needs a tool that allows you to clarify the key requirements for the data collected. Data collection should be tuned where problems are expected, because "where it is thin, it breaks." Accordingly, such a tool should identify the most relevant and important criteria and conduct a search precisely with their help.

Limiting the data collected makes it possible to reduce the cost of collecting, storing and processing information. Current working methods often lead to the fact that most of the data simply "gathers dust" on hard drives for years.

As an important trend, the introduction of “smart” data collection systems - trackers, to which the feedback from the results of “crude” analytics is cast is presented. Such a coarse grained approach, which in its logic is similar to hybrid QM / MM systems in molecular modeling of large proteins or fractal image compression algorithms: a large, rough picture of the user path is analyzed by a fast pipeline and there are edges (transitions between events) with the greatest analysis potential, such edges are broken by the tracker into smaller events, and the data collection as a result is constantly adapted to the required analysis accuracy and the ultimate analytic task.

The same approach with thrown feedback on the collection and storage of data can be used for “self-cleaning data”, when we basically do not store too much, we use fast compact databases for rough data (Greenplum DB, Clickhouse) and large slow ones for detailed (Apache Kafka) , in addition, we stop storing data common to all, bringing together user behavioral segments and separately preserving their preference models.

Feedback Acceleration and Predictive Analytics

It's time to talk about feedback of a more fundamental kind - analytics itself is feedback that regulates how the company works with its customers.

For any company that has a mobile application or service to work properly, feedback is needed that allows you to identify problems and solve them by searching for hypotheses about possible solutions and running tests.

Feedback time delays should be reduced to a minimum. There are two ways to do this.

Use predictive metrics instead of historical ones. In this case, speeding up the feedback means not waiting until the client, user, comes or comes to a certain goal in order to begin to correct the situation. The method allows predicting, based on models built on historical data, with what probability a particular client will reach which screens and buttons of the application, or external goals - product purchases, calls to the sales department, etc. Why is this company? To be able to influence the fate of a particular client or similar new clients as quickly as possible. The second is especially important for quickly redistributing the budgets of advertising channels - if a channel suddenly changed the type of customers delivered, you can change its budget without waiting for the final actions - orders, or vice versa, unsubscribes, failures.

Acceleration can often be achieved simply by replacing real metrics with what can be predicted. Another positive point is that the model is calibrated on all data, so if you use the current information just received, there is an opportunity to improve the prediction. Such a model will be constantly updated, and data deposits for the formation of historical metrics will simply not be needed.

An example is the situation when we create a dynamic interface for a service or application. And various interface elements, say buttons, appear depending on what is known about the user.

Another example is the work of a voice assistant and the purchase of plane tickets. Existing digital assistants need to be improved a lot, first of all - personalization. So, if you try to book a ticket using Siri, it will show an extensive selection of available options. But here personalization is needed, so that in the end the assistant shows 2-3 suitable options, no more. And predictive analytics is one way to achieve what you want, because you can continue the client’s intentions without forcing him to read out (in this case, it’s important not to confuse this method with ML for speech recognition, the predictive analytics discussed will work on top of events from client words already recognized in the text )

Acceleration of testing processes on segments. The results of a company's product analysis are usually tested on the entire audience of a company or service. But it is much more effective to conduct tests on individual segments, precisely those where the problem was observed.

By the way, there is an interesting method, which can be called "one-armed bandit against A / B tests." Why a “one-armed bandit”? In any casino there are these slot machines, and all these machines are configured differently in the same institution. Not always, but more often. Imagine that we want to identify a "bandit" who gives out the win more often than others. To do this, we begin to test all the machines. But where the gain is a little more - we will allocate more coins for the game. The advantage of this scheme is that individual test segments can be run in parallel, and successful results are extrapolated to all other segments, and continuous optimization is obtained instead of testing with control.

The “one-armed bandit” method can be used in practice when testing a mobile application. So, different interfaces / screens are shown to different user segments, and the control segment is also left, which makes it possible for reinforcement learning to the robot and the analyst who watches it, to evaluate the interaction of users from different segments with different screens. As soon as the situation clarifies, a successful find is formulated as a refinement to the entire application, or personalization is carried out, sharing the application’s functionality for different segments. User models and models of user interaction with applications can be different. Using abstract embeddings (screen2vec by analogy with word2vec), the model can be built on one application, and applied, albeit with limitations, on the second. This makes it possible to transfer analytical insights between different versions, platforms, releases, and even affiliate applications. Of course, it is necessary to control the applicability of other people's models so as not to shoot yourself in the foot.

Feedback Automation

In order to marginally reduce the feedback loop time, you can try to develop automatic and autonomous application elements or real-time analytical microservices. This is especially captivating - the buttons and interface elements themselves could evaluate user behavior and the influence of various factors on the entire user path and its business metrics - conversion, average check, engagement and retention. This opens up the possibility, without human intervention, to determine the value of individual elements in terms of increasing orders or customer loyalty, and the individual stages of analytics are simply not used, because the process is automated. The buttons adjust themselves, having forwarded signals from other buttons in the user path and from the central controller, they constantly optimize their behavior.

At a certain level, this moment can be compared with self-regulation of the vital activity of a living organism. It has independent agents - individual cells that allow the whole body to self-regulate. As for applications, one can imagine a situation where the ecosystem of interface components regulates each other, reading user paths and exchanging important information, such as segments and types of users and their experience in interacting with users in the past. We call such a set of smart components Business Driven Intellectual Agents and now, based on our research, we are collecting an experimental prototype of this approach. Probably the first time he will play a purely research function and inspire us and other teams to develop a full-fledged framework that is compatible with common interface building platforms - React JS, Java, Kotlin and Swift.

So far, there is no such technology, but its appearance can be expected not only from us literally any day. Most likely it will look like a framework or SDK for the predictive UI interface. We demonstrated a similar technology on Yandex Data Driven 2019 using the example of a Kickstarter application modification, when on a client the serialized model considered the probability of user loss and conditionally rendered interface elements depending on it.

What will product analytics look like in 20 years? In fact, now the industry itself, where everything or almost everything is done manually, is outdated. Yes, there are new tools that can increase work efficiency. But all the same, all this is too slow and slow, in modern conditions you need to work faster. Detection and correction of problems in the future should occur autonomously.

It is likely that applications will "learn from each other." For example, an application that is used once a month will be able to adopt user models relevant to it and their preferences for CJM embeddings from another application that is used daily. In this case, the speed of development of the first application can significantly increase.

Inside analytics itself, there are very few well-defined tasks for the automation of analytical pipelines, almost everywhere analysts are fighting with poor data markup or unclear business goals. But gradually, as the development penetrates into analytics, the application of ML inside analytics is purely for solving analytical problems, as well as as the digitalization of HR and the more correct transfer of goals and tasks between departments, the landscape of product analytics will begin to change dramatically and characteristic tasks will be automated. And the exchange of insights and methods will turn into a code exchange and setting up autonomous agents acting as a flexible interface for the user and optimizing the business robot for the company. Of course, all this will not come soon, but there is already a chance for the future, so the very future of product analytics is somewhere nearby.

All Articles