Pinterest Sharding: How We Scaled Our MySQL Park

Salute, Khabrovites! Congratulations to all on the day of the programmer and share the translation of the article, which was specially prepared for students of the course "High Load Architect" .

"Sharding. Or do not shard. Without trying. "

- Yoda

Today we will dive into the separation of data between several MySQL servers. We finished sharding at the beginning of 2012, and this system is still used to store our basic data.

Before we discuss how to share data, let's get to know them better. We’ll set up a pleasant light, get strawberries in chocolate, recall quotes from Star Trek ...

Pinterest is a search engine for everything that interests you. In terms of data, Pinterest is the largest graph of human interests worldwide. It contains over 50 billion pins that have been saved by users on more than a billion boards. People keep some pins for themselves and like other pins, subscribe to other pins, boards and interests, view the home feed of all pins, boards and interests to which they are subscribed. Fine! Now let's make it scalable!

In 2011, we began to gain momentum. By some estimates , we grew faster than any startup known at that time. Around September 2011, every component of our infrastructure was overloaded. We had several NoSQL technologies at our disposal, and all of them disastrously could not cope. We also had many MySQL slaves, which we used to read, which caused a lot of extraordinary errors, especially when caching. We rebuilt our entire data storage model. To work efficiently, we carefully approached the development of requirements.

Since we wanted this data to span multiple databases, we could not use just a join, foreign keys, and indexes to collect all the data, although they can be used for subqueries that do not span the database.

We also needed to maintain load balancing on the data. We decided that moving data, element by element, would make the system unnecessarily complex and cause a lot of errors. If we needed to move data, it was better to move the entire virtual node to another physical node.

In order for our implementation to quickly go into circulation, we needed the simplest and most convenient solution and very stable nodes in our distributed data platform.

All data had to be replicated to the slave machine to create a backup, with high availability and dumping to S3 for MapReduce. We interact with master only on production. On production, you will not want to write or read in slave. Slave lag, and it causes strange bugs. If sharding is done, there is no point interacting with a slave on production.

Finally, we need a good way to generate universal unique identifiers (UUIDs) for all of our objects.

What we were going to create was supposed to meet the requirements, work stably, in general, be workable and maintainable. That is why we have chosen the already quite mature MySQL technology as the underlying technology. We intentionally wary of new technologies for automatic scaling MongoDB, Cassandra and Membase, because they were far enough from maturity (and in our case they broke in impressive ways!).

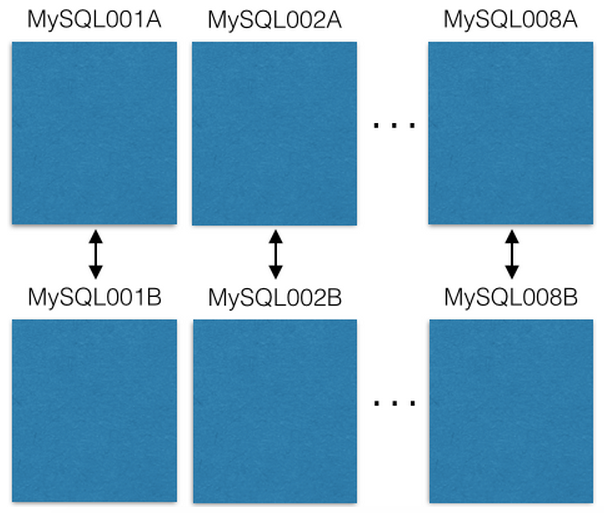

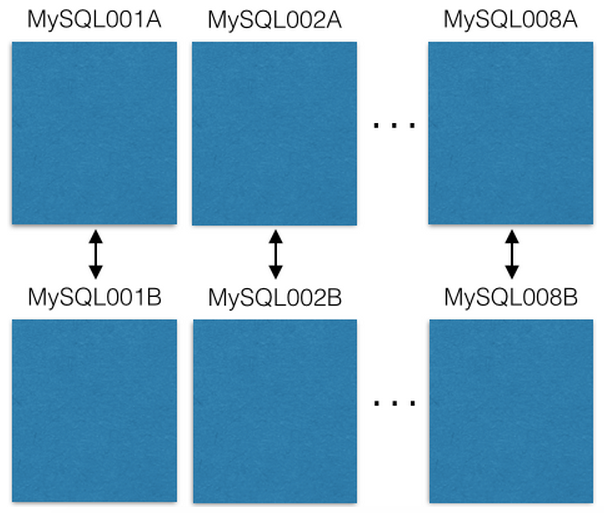

We started with eight EC2 servers, one instance of MySQL on each:

Each MySQL master-master server is replicated to the backup host in the event of a primary failure. Our production servers only read or write to master. I recommend that you do as well. This greatly simplifies and avoids errors with replication delays.

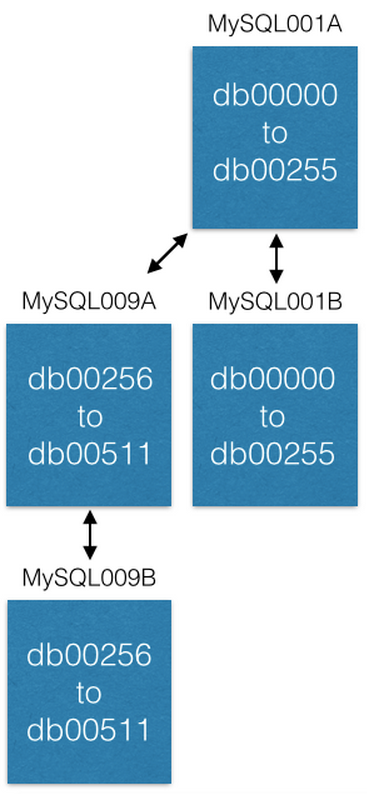

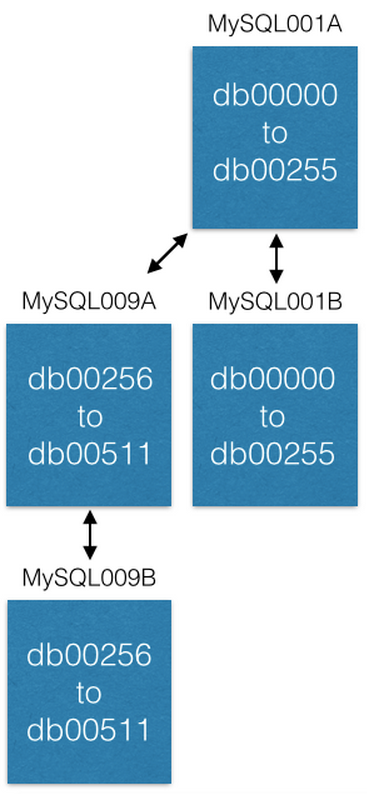

Each MySQL entity has many databases:

Note that each database is uniquely named: db00000, db00001 to dbNNNNN. Each database is a shard of our data. We made an architectural decision, on the basis of which only part of the data falls into the shard, and it never goes beyond this shard. However, you can get more capacity by moving shards to other machines (we'll talk about this later).

We work with a configuration table that indicates which machines have shards:

This configuration only changes when we need to move shards or replace the host. If

dies, we can use the existing

, and then pick up a new one. The configuration is located in ZooKeeper and, when updated, is sent to services that serve MySQL shard.

Each shard has the same set of tables:

,

,

,

,

, etc. I will talk about this a bit later.

How do we distribute data for these shards?

We create a 64-bit ID that contains the shard ID, the type of data contained in it, and the place where this data is in the table (local ID). The shard ID consists of 16 bits, the type ID is 10 bits, and the local ID is 36 bits. Advanced mathematicians will notice that there are only 62 bits. My past experience as a compiler and circuit board developer has taught me that backup bits are worth their weight in gold. So, we have two such bits (set to zero).

Let's take this pin: https://www.pinterest.com/pin/241294492511762325/ , let's analyze its ID 241294492511762325:

Thus, the pin object lives in 3429 shard. Its type is “1” (that is, “Pin”), and it is on line 7075733 in the pin table. For example, let's imagine this shard is in MySQL012A. We can get to it as follows:

There are two types of data: objects and mappings. Objects contain parts, such as pin data.

Object tables such as Pins, users, boards, and comments have an ID (local ID, with an automatically increasing primary key) and a blob that contains JSON with all the object data.

For example, pin objects look like this:

To create a new pin, we collect all the data and create a JSON blob. Then we select the shard ID (we prefer to choose the same shard ID as the board on which it is placed, but this is not necessary). For pin type 1. We connect to this database and insert JSON into the pin table. MySQL will return an automatically increased local ID. Now we have a shard, a type and a new local ID, so we can make up a full 64-bit identifier!

To edit the pin, we read-modify-write JSON using MySQL transaction :

To remove a pin, you can delete its row in MySQL. However, it is better to add the “active” field in JSON and set it to “false” , as well as filter the results on the client side.

The mapping table links one object to another, for example, a board with pins on it. The MySQL table for mappings contains three columns: 64-bits for the ID "from", 64-bits for the ID "where" and the sequence ID. In this triple (from where, where, sequence) there are index keys, and they are on the shard of the identifier "from".

Mapping tables are unidirectional, for example, like the

table. If you need the opposite direction, you will need a separate

table. The sequence ID defines the sequence (our IDs cannot be compared between shards, because the new local IDs are different). Usually we insert new pins on a new board with a sequence ID equal to time in unix (unix timestamp). Any number can be in the sequence, but unix time is a good way to store new materials sequentially, since this indicator increases monotonously. You can take a look at the data in the mapping table:

This will give you more than 50 pin_id, which can then be used to search for pin objects.

What we just did is an application layer join (board_id -> pin_id -> pin objects). One of the amazing properties of connections at the application level is that you can cache the image separately from the object. We store pin_id in the cache of the pin object in the memcache cluster, however we save board_id in pin_id in the redis cluster. This allows us to choose the right technology that best suits the cached object.

There are three main ways to increase capacity in our system. The easiest way to update the machine (to increase space, put faster hard drives, more RAM).

The next way to increase capacity is to open up new ranges. Initially, we created a total of 4096 shards, despite the fact that the shard ID consisted of 16 bits (a total of 64k shards). New objects can only be created in these first 4k shards. At some point, we decided to create new MySQL servers with shards from 4096 to 8191 and began to fill them.

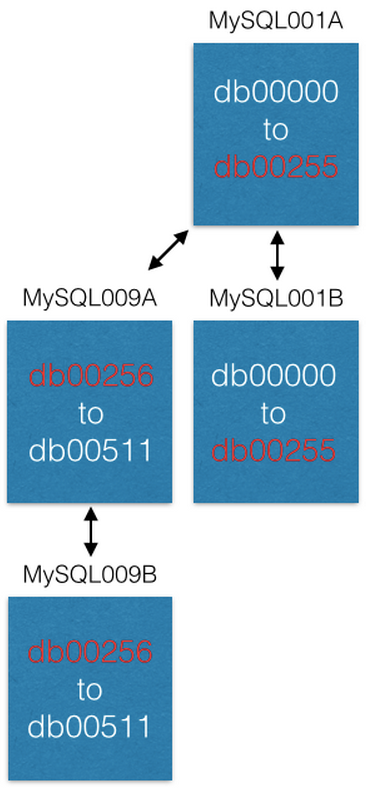

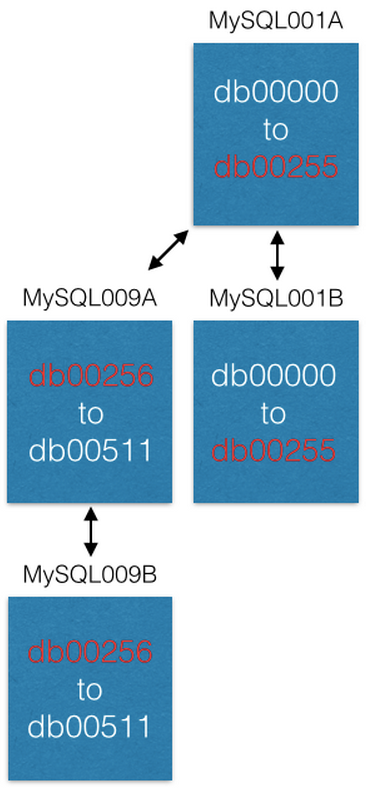

The last way we increased capacity is to move some shards to new machines. If we want to increase the capacity of MySQL001A (with shards from 0 to 511), we create a new master-master pair with the following possible names (say MySQL009A and B) and start replication from MySQL001A.

Once replication is complete, we change our configuration so that in MySQL001A there are only shards from 0 to 255, and in MySQL009A from 256 to 511. Now each server should process only half of those shards that it processed before.

Those who already had systems for generating new UUIDs will understand that in this system we get them at no cost! When you create a new object and insert it into the table of objects, it returns a new local identifier. This local ID, combined with the shard ID and type ID, gives you a UUID.

Those of you who have performed ALTERs to add more columns to MySQL tables know that they can work extremely slowly and become a big problem. Our approach does not require any MySQL level changes. On Pinterest, we probably only made one ALTER in the last three years. To add new fields to objects, just tell your services that there are several new fields in the JSON schema. You can change the default value so that when deserializing JSON from an object without a new field, you get the default value. If you need a mapping table, create a new mapping table and start populating it whenever you want. And when done, you can send!

It's almost like a mod squad , only completely different.

Some objects need to be found without an ID. For example, if a user logs in with a Facebook account, we need mapping from the Facebook ID to Pinterest ID. For us, Facebook IDs are just bits, so we store them in a separate shard system called mod shard.

Other examples include IP addresses, username and email address.

Mod Shard is very similar to the sharding system described in the previous section, with the only difference being that you can search for data using arbitrary input data. This input is hashed and modified according to the total number of shards in the system. As a result, a shard will be obtained on which the data will be or is already located. For example:

In this case, the shard will be equal to 1524. We process the configuration file corresponding to the shard ID:

Thus, in order to find data on the IP address 1.2.3.4, we will need to do the following:

You are losing some good shard ID properties, such as spatial locality. You will have to start with all the shards created at the very beginning and create the key yourself (it will not be generated automatically). It is always better to represent objects on your system with immutable IDs. This way, you don’t need to update many links when, for example, the user changes his “username”.

This system has been running production on Pinterest for 3.5 years and is likely to stay there forever. Its implementation was relatively simple, but putting it into operation and moving all the data from old machines was hard. If you encounter a problem when you first created a new shard, consider creating a cluster of background processing machines (hint: use pyres ) to move your data with scripts from old databases to your new shard. I guarantee that part of the data will be lost, no matter how hard you try (it's all gremlins, I swear), so repeat the data transfer again and again until the amount of new information in the shard becomes very small or not at all.

Every effort has been made to this system. But it does not ensure atomicity, isolation or coherence in any way. Wow! That sounds bad! But don’t worry. Surely, you will feel excellent without them. You can always build up these layers with other processes / systems, if necessary, but by default and at no cost you already get quite a lot: uptime. Reliability achieved through simplicity, and even works fast!

But what about fault tolerance? We created a service for servicing MySQL shards, saved the shard configuration table in ZooKeeper. When the master server crashes, we raise the slave machine and then raise the machine that will replace it (always up to date). We do not use automatic failure processing to this day.

"Sharding. Or do not shard. Without trying. "

- Yoda

Today we will dive into the separation of data between several MySQL servers. We finished sharding at the beginning of 2012, and this system is still used to store our basic data.

Before we discuss how to share data, let's get to know them better. We’ll set up a pleasant light, get strawberries in chocolate, recall quotes from Star Trek ...

Pinterest is a search engine for everything that interests you. In terms of data, Pinterest is the largest graph of human interests worldwide. It contains over 50 billion pins that have been saved by users on more than a billion boards. People keep some pins for themselves and like other pins, subscribe to other pins, boards and interests, view the home feed of all pins, boards and interests to which they are subscribed. Fine! Now let's make it scalable!

Painful growth

In 2011, we began to gain momentum. By some estimates , we grew faster than any startup known at that time. Around September 2011, every component of our infrastructure was overloaded. We had several NoSQL technologies at our disposal, and all of them disastrously could not cope. We also had many MySQL slaves, which we used to read, which caused a lot of extraordinary errors, especially when caching. We rebuilt our entire data storage model. To work efficiently, we carefully approached the development of requirements.

Requirements

- The whole system should be very stable, easy to use and scale from the size of a small box to the size of the moon as the site grows.

- All content generated by the pinner should be available on the site at any time.

- The system should support the request for N pins on the board in a deterministic order (for example, in the reverse order of creation time or in the order specified by the user). The same is true for like pinners, their pins, etc.

- For simplicity, you should strive for updates in every possible way. To get the necessary consistency, you will need additional toys, such as a distributed transaction journal. It's fun and (not too) easy!

Philosophy of Architecture and Notes

Since we wanted this data to span multiple databases, we could not use just a join, foreign keys, and indexes to collect all the data, although they can be used for subqueries that do not span the database.

We also needed to maintain load balancing on the data. We decided that moving data, element by element, would make the system unnecessarily complex and cause a lot of errors. If we needed to move data, it was better to move the entire virtual node to another physical node.

In order for our implementation to quickly go into circulation, we needed the simplest and most convenient solution and very stable nodes in our distributed data platform.

All data had to be replicated to the slave machine to create a backup, with high availability and dumping to S3 for MapReduce. We interact with master only on production. On production, you will not want to write or read in slave. Slave lag, and it causes strange bugs. If sharding is done, there is no point interacting with a slave on production.

Finally, we need a good way to generate universal unique identifiers (UUIDs) for all of our objects.

How we did sharding

What we were going to create was supposed to meet the requirements, work stably, in general, be workable and maintainable. That is why we have chosen the already quite mature MySQL technology as the underlying technology. We intentionally wary of new technologies for automatic scaling MongoDB, Cassandra and Membase, because they were far enough from maturity (and in our case they broke in impressive ways!).

In addition: I still recommend startups to avoid new bizarre things - just try to use MySQL. Trust me. I can prove it with scars.MySQL - the technology is proven, stable, and simple - it works. Not only do we use it, it is popular in other companies with scales even more impressive. MySQL fully meets our need for streamlining data queries, selecting specific data ranges and row-level transactions. In fact, in his arsenal there are much more opportunities, but we all do not need them. But MySQL is a “boxed” solution, so the data had to be sharded. Here is our solution:

We started with eight EC2 servers, one instance of MySQL on each:

Each MySQL master-master server is replicated to the backup host in the event of a primary failure. Our production servers only read or write to master. I recommend that you do as well. This greatly simplifies and avoids errors with replication delays.

Each MySQL entity has many databases:

Note that each database is uniquely named: db00000, db00001 to dbNNNNN. Each database is a shard of our data. We made an architectural decision, on the basis of which only part of the data falls into the shard, and it never goes beyond this shard. However, you can get more capacity by moving shards to other machines (we'll talk about this later).

We work with a configuration table that indicates which machines have shards:

[{“range”: (0,511), “master”: “MySQL001A”, “slave”: “MySQL001B”}, {“range”: (512, 1023), “master”: “MySQL002A”, “slave”: “MySQL002B”}, ... {“range”: (3584, 4095), “master”: “MySQL008A”, “slave”: “MySQL008B”}]

This configuration only changes when we need to move shards or replace the host. If

master

dies, we can use the existing

slave

, and then pick up a new one. The configuration is located in ZooKeeper and, when updated, is sent to services that serve MySQL shard.

Each shard has the same set of tables:

pins

,

boards

,

users_has_pins

,

users_likes_pins

,

pin_liked_by_user

, etc. I will talk about this a bit later.

How do we distribute data for these shards?

We create a 64-bit ID that contains the shard ID, the type of data contained in it, and the place where this data is in the table (local ID). The shard ID consists of 16 bits, the type ID is 10 bits, and the local ID is 36 bits. Advanced mathematicians will notice that there are only 62 bits. My past experience as a compiler and circuit board developer has taught me that backup bits are worth their weight in gold. So, we have two such bits (set to zero).

ID = (shard ID << 46) | (type ID << 36) | (local ID<<0)

Let's take this pin: https://www.pinterest.com/pin/241294492511762325/ , let's analyze its ID 241294492511762325:

Shard ID = (241294492511762325 >> 46) & 0xFFFF = 3429 Type ID = (241294492511762325 >> 36) & 0x3FF = 1 Local ID = (241294492511762325 >> 0) & 0xFFFFFFFFF = 7075733

Thus, the pin object lives in 3429 shard. Its type is “1” (that is, “Pin”), and it is on line 7075733 in the pin table. For example, let's imagine this shard is in MySQL012A. We can get to it as follows:

conn = MySQLdb.connect(host=”MySQL012A”) conn.execute(“SELECT data FROM db03429.pins where local_id=7075733”)

There are two types of data: objects and mappings. Objects contain parts, such as pin data.

Object Tables

Object tables such as Pins, users, boards, and comments have an ID (local ID, with an automatically increasing primary key) and a blob that contains JSON with all the object data.

CREATE TABLE pins ( local_id INT PRIMARY KEY AUTO_INCREMENT, data TEXT, ts TIMESTAMP DEFAULT CURRENT_TIMESTAMP ) ENGINE=InnoDB;

For example, pin objects look like this:

{“details”: “New Star Wars character”, “link”: “http://webpage.com/asdf”, “user_id”: 241294629943640797, “board_id”: 241294561224164665, …}

To create a new pin, we collect all the data and create a JSON blob. Then we select the shard ID (we prefer to choose the same shard ID as the board on which it is placed, but this is not necessary). For pin type 1. We connect to this database and insert JSON into the pin table. MySQL will return an automatically increased local ID. Now we have a shard, a type and a new local ID, so we can make up a full 64-bit identifier!

To edit the pin, we read-modify-write JSON using MySQL transaction :

> BEGIN > SELECT blob FROM db03429.pins WHERE local_id=7075733 FOR UPDATE [Modify the json blob] > UPDATE db03429.pins SET blob='<modified blob>' WHERE local_id=7075733 > COMMIT

To remove a pin, you can delete its row in MySQL. However, it is better to add the “active” field in JSON and set it to “false” , as well as filter the results on the client side.

Mapping Tables

The mapping table links one object to another, for example, a board with pins on it. The MySQL table for mappings contains three columns: 64-bits for the ID "from", 64-bits for the ID "where" and the sequence ID. In this triple (from where, where, sequence) there are index keys, and they are on the shard of the identifier "from".

CREATE TABLE board_has_pins ( board_id INT, pin_id INT, sequence INT, INDEX(board_id, pin_id, sequence) ) ENGINE=InnoDB;

Mapping tables are unidirectional, for example, like the

board_has_pins

table. If you need the opposite direction, you will need a separate

pin_owned_by_board

table. The sequence ID defines the sequence (our IDs cannot be compared between shards, because the new local IDs are different). Usually we insert new pins on a new board with a sequence ID equal to time in unix (unix timestamp). Any number can be in the sequence, but unix time is a good way to store new materials sequentially, since this indicator increases monotonously. You can take a look at the data in the mapping table:

SELECT pin_id FROM board_has_pins WHERE board_id=241294561224164665 ORDER BY sequence LIMIT 50 OFFSET 150

This will give you more than 50 pin_id, which can then be used to search for pin objects.

What we just did is an application layer join (board_id -> pin_id -> pin objects). One of the amazing properties of connections at the application level is that you can cache the image separately from the object. We store pin_id in the cache of the pin object in the memcache cluster, however we save board_id in pin_id in the redis cluster. This allows us to choose the right technology that best suits the cached object.

Increase capacity

There are three main ways to increase capacity in our system. The easiest way to update the machine (to increase space, put faster hard drives, more RAM).

The next way to increase capacity is to open up new ranges. Initially, we created a total of 4096 shards, despite the fact that the shard ID consisted of 16 bits (a total of 64k shards). New objects can only be created in these first 4k shards. At some point, we decided to create new MySQL servers with shards from 4096 to 8191 and began to fill them.

The last way we increased capacity is to move some shards to new machines. If we want to increase the capacity of MySQL001A (with shards from 0 to 511), we create a new master-master pair with the following possible names (say MySQL009A and B) and start replication from MySQL001A.

Once replication is complete, we change our configuration so that in MySQL001A there are only shards from 0 to 255, and in MySQL009A from 256 to 511. Now each server should process only half of those shards that it processed before.

Some cool features

Those who already had systems for generating new UUIDs will understand that in this system we get them at no cost! When you create a new object and insert it into the table of objects, it returns a new local identifier. This local ID, combined with the shard ID and type ID, gives you a UUID.

Those of you who have performed ALTERs to add more columns to MySQL tables know that they can work extremely slowly and become a big problem. Our approach does not require any MySQL level changes. On Pinterest, we probably only made one ALTER in the last three years. To add new fields to objects, just tell your services that there are several new fields in the JSON schema. You can change the default value so that when deserializing JSON from an object without a new field, you get the default value. If you need a mapping table, create a new mapping table and start populating it whenever you want. And when done, you can send!

Mod shard

It's almost like a mod squad , only completely different.

Some objects need to be found without an ID. For example, if a user logs in with a Facebook account, we need mapping from the Facebook ID to Pinterest ID. For us, Facebook IDs are just bits, so we store them in a separate shard system called mod shard.

Other examples include IP addresses, username and email address.

Mod Shard is very similar to the sharding system described in the previous section, with the only difference being that you can search for data using arbitrary input data. This input is hashed and modified according to the total number of shards in the system. As a result, a shard will be obtained on which the data will be or is already located. For example:

shard = md5(“1.2.3.4") % 4096

In this case, the shard will be equal to 1524. We process the configuration file corresponding to the shard ID:

[{“range”: (0, 511), “master”: “msdb001a”, “slave”: “msdb001b”}, {“range”: (512, 1023), “master”: “msdb002a”, “slave”: “msdb002b”}, {“range”: (1024, 1535), “master”: “msdb003a”, “slave”: “msdb003b”}, …]

Thus, in order to find data on the IP address 1.2.3.4, we will need to do the following:

conn = MySQLdb.connect(host=”msdb003a”) conn.execute(“SELECT data FROM msdb001a.ip_data WHERE ip='1.2.3.4'”)

You are losing some good shard ID properties, such as spatial locality. You will have to start with all the shards created at the very beginning and create the key yourself (it will not be generated automatically). It is always better to represent objects on your system with immutable IDs. This way, you don’t need to update many links when, for example, the user changes his “username”.

Last thoughts

This system has been running production on Pinterest for 3.5 years and is likely to stay there forever. Its implementation was relatively simple, but putting it into operation and moving all the data from old machines was hard. If you encounter a problem when you first created a new shard, consider creating a cluster of background processing machines (hint: use pyres ) to move your data with scripts from old databases to your new shard. I guarantee that part of the data will be lost, no matter how hard you try (it's all gremlins, I swear), so repeat the data transfer again and again until the amount of new information in the shard becomes very small or not at all.

Every effort has been made to this system. But it does not ensure atomicity, isolation or coherence in any way. Wow! That sounds bad! But don’t worry. Surely, you will feel excellent without them. You can always build up these layers with other processes / systems, if necessary, but by default and at no cost you already get quite a lot: uptime. Reliability achieved through simplicity, and even works fast!

But what about fault tolerance? We created a service for servicing MySQL shards, saved the shard configuration table in ZooKeeper. When the master server crashes, we raise the slave machine and then raise the machine that will replace it (always up to date). We do not use automatic failure processing to this day.

All Articles