How we went about energy efficiency

This post is dedicated to the people who lied in their certificates, thanks to whom we almost installed sparklers in our halls.

These stories are more than four years old, but I am only publishing them now because the NDA has expired. Back then we realized that we had filled the data center (which we lease out) almost completely, yet its energy efficiency had barely improved. Our earlier hypothesis was that the fuller it gets, the better, because the engineering overhead is spread across everyone. It turned out we were fooling ourselves, and even at a good load there were losses somewhere. We worked in many directions, but our brave team took on cooling.

Real life in a data center differs a bit from the design. The operations team keeps tweaking things to raise efficiency and tune settings for new tasks. Take the mythical average rack. In practice it does not exist: the load is distributed unevenly, dense in some places and empty in others. So we had to reconfigure a few things for better energy efficiency.

Our data center, Compressor, serves a variety of customers. So in the middle of the usual two-to-four-kilowatt racks there may well be a 23-kilowatt rack, or an even heavier one. The air conditioners were set up to cool those, and at the less powerful racks the air simply flew past.

The second hypothesis was that the hot and cold aisles do not mix. After the measurements I can say that this is an illusion, and the real aerodynamics differs from the model in just about everything.

Survey

First we started looking at the air currents in the halls. Why did we go there? Because we understood that the data center was designed for five to six kW per rack, but we knew the racks actually ran anywhere from 0 to 25 kW. It is almost impossible to regulate all this with floor tiles: the first measurements showed that they all pass almost the same amount of air. And tiles for 25 kW do not exist at all; they would have to be not just empty, but filled with liquid vacuum.

We bought an anemometer and began measuring the flows between racks and above racks. Strictly speaking, it has to be used in accordance with GOST and a pile of other standards that are hard to follow without shutting down the hall. We were interested not in accuracy but in the overall picture. That is, we measured approximately.

According to the measurements, of 100 percent of the air that comes out of the tiles, 60 percent gets into the racks and the rest flies past. This is because there are heavy 15–25 kW racks, and the cooling is built around them.
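
The split above is simple arithmetic on anemometer readings. Here is a minimal sketch of it, assuming the readings have already been converted to volumetric flow; the function name and the sample numbers are made up for illustration and are not our real measurements.

```python
def rack_air_fraction(supplied_m3h, rack_intake_m3h):
    """Return the share of supplied air that actually enters the racks."""
    total_supplied = sum(supplied_m3h)
    if total_supplied <= 0:
        raise ValueError("total supplied airflow must be positive")
    return sum(rack_intake_m3h) / total_supplied


# Hypothetical readings in m^3/h, chosen only to reproduce a 60/40 split.
tile_flows = [500.0, 500.0, 500.0, 500.0]   # air leaving the floor tiles
rack_flows = [200.0, 250.0, 300.0, 450.0]   # air entering the rack fronts

captured = rack_air_fraction(tile_flows, rack_flows)
bypass = 1.0 - captured
print(f"into racks: {captured:.0%}, bypass: {bypass:.0%}")
```

With rough measurements like ours, the point is the order of magnitude of the bypass, not the second decimal place.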

We cannot simply turn the air conditioners down, because the hot racks would get very warm around the top servers. At this point we understood that we needed to isolate something from something, so that the air does not jump from row to row and heat exchange still happens within the block.

In parallel, we asked ourselves whether this made financial sense.

We were surprised to find that we had the power consumption of the data center as a whole, but simply could not work out the fan coil consumption for a particular hall. That is, analytically we could, but in practice we could not. So we were unable to estimate the savings. The task got more and more interesting. If we save 10% of the air conditioners' power, how much money can be set aside for insulation? How do we calculate it?
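
The question can be framed as a back-of-the-envelope budget: yearly savings multiplied by the payback period we are willing to accept. The sketch below assumes constant load and a flat tariff; every number in it (cooling power, tariff, payback period) is a placeholder, not a figure from our data center.

```python
HOURS_PER_YEAR = 24 * 365  # 8760, assuming the hall runs all year


def insulation_budget(cooling_kw, saved_share, price_per_kwh, payback_years):
    """Money that can be spent on insulation and still pay back in time."""
    saved_kw = cooling_kw * saved_share
    yearly_saving = saved_kw * HOURS_PER_YEAR * price_per_kwh
    return yearly_saving * payback_years


# Placeholder inputs: 1000 kW of cooling power, 10% saved,
# 0.05 currency units per kWh, two-year payback target.
budget = insulation_budget(
    cooling_kw=1000.0,
    saved_share=0.10,
    price_per_kwh=0.05,
    payback_years=2.0,
)
print(f"insulation budget: {budget:,.0f}")
```

The hard part in practice was the first argument: without separate metering there was no trustworthy value to plug in for the cooling power of one hall.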

We went to the automation engineers who were extending the monitoring system. Thanks to the guys: they already had all the sensors, and all that was needed was some extra code. They began to display chillers, UPS and lighting separately. With the new add-on, it became possible to watch how the situation changed across the elements of the system.

Experiments with curtains

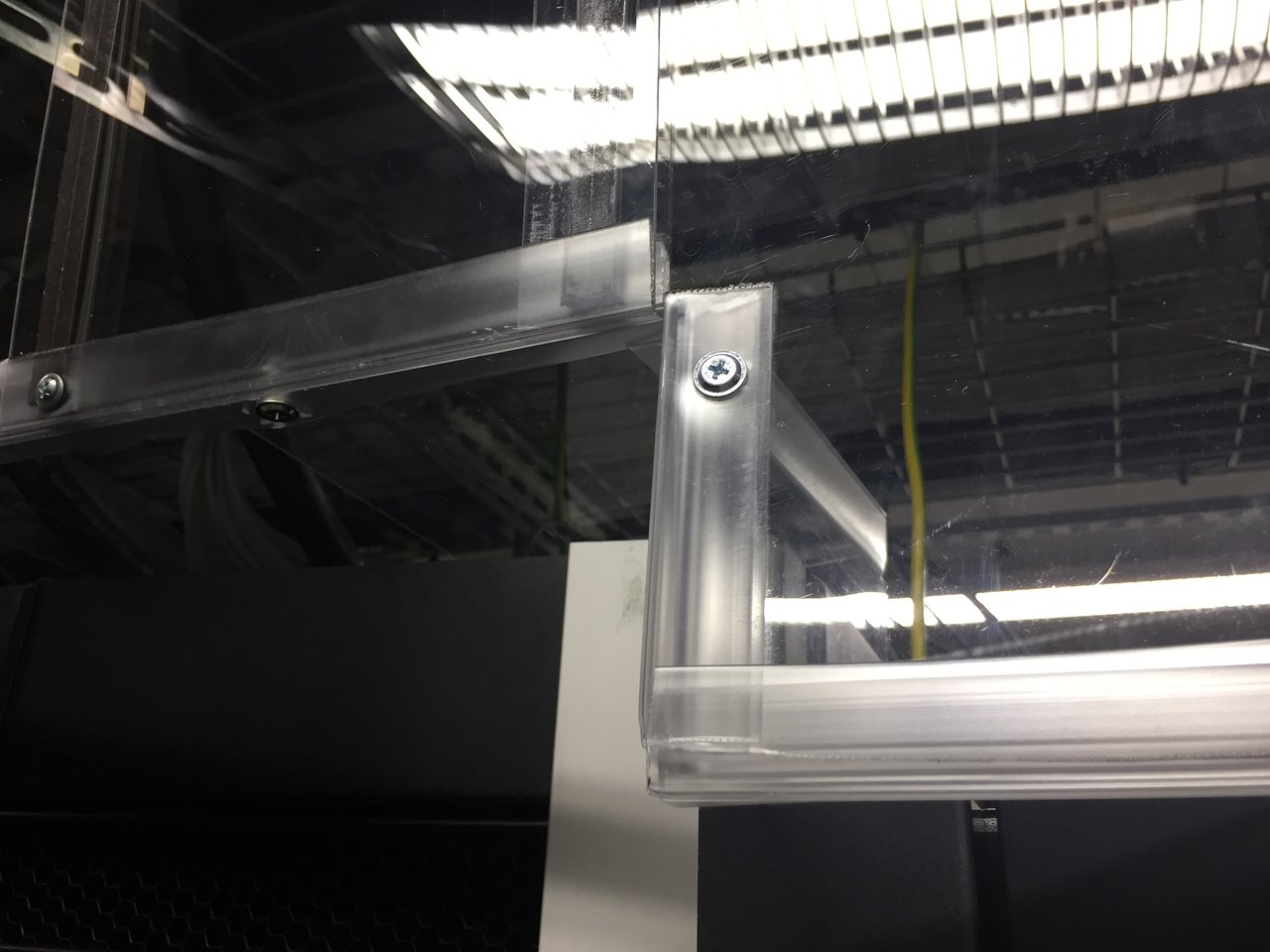

In parallel, we began experiments with curtains (barriers). We decided to hang them from the studs of the cable trays (there is nothing else to attach to anyway), so they had to be lightweight. The choice between canopies and combs was made quickly.

The catch is that we had already worked with a bunch of vendors. All of them have solutions for companies' own data centers, but for a commercial data center there are essentially no ready-made ones. Our customers come and go constantly. We are one of the few "heavy" data centers with no limit on rack width and the ability to host number-crunching servers at up to 25 kW per rack. There is no way to plan the infrastructure in advance. So if we took the vendors' modular containment systems, there would always be holes for a couple of months at a time. In other words, the machine hall would never be energy efficient, in principle.

We decided to do it ourselves, since we have our own engineers.

The first thing we tried was strip curtains from industrial refrigerators. These are flexible polyethylene "snot" strips that you can push through. You have probably seen them at the entrance to the meat department of a big grocery store. We looked for non-toxic, non-combustible materials. We found some, bought enough for two rows, hung them up and watched what happened.

We expected that it would not be great. It turned out to be really, thoroughly not great. In the airflow the strips start to flutter like pasta. We found magnetic tape, the same kind used for fridge magnets. We glued it to the strips so that they stuck to each other, and the wall came out reasonably monolithic.

We started to work out what this would look like for the whole hall.

We went to the builders and showed them our design. They looked and said: your curtains are rather heavy, 700 kilograms across the hall. Go to hell, good people, they said. Or rather, to the SCS (structured cabling) team. Let them calculate how much "noodle" they have in the trays, because 120 kg per square meter is the maximum.

The SCS team said: remember that one large customer who moved in? They have tens of thousands of ports in one hall. At the edges of the machine hall it is still fine, but closer to the cross-connect it will not work: the trays will fall off.

The builders also asked for a certificate for the material. I should note that until then we had taken the vendor's word for it, since it was only a test run. We went to the supplier and said: OK, we are ready to go to beta, send us all the paperwork. They sent something that did not follow any established form.

We said: listen, where did you get this piece of paper? They said: our Chinese manufacturer sent it in response to our requests. According to the paper, this stuff does not burn at all.

At this point we realized it was time to stop and check the facts. We went to the women from the data center's fire safety team, and they pointed us to a laboratory that tests combustibility. The price and turnaround were quite down-to-earth (although we cursed everything while putting together the required amount of paperwork). The scientists there said: bring the material, we will run the tests.

The report said that about 50 grams of ash remain from a kilogram of the material. The rest burns brightly, drips, and keeps burning very well in the puddle.

We realized it was a good thing we had not bought it. We started looking for another material.

We found polycarbonate. It turned out to be stiffer. The transparent sheet is two millimeters thick, the doors are four millimeters and up. To look at, it is much like plexiglass. With the manufacturer we started the conversation with fire safety: send us the certificate. They sent it. It was signed by the same institute. We called there and asked: well, guys, did you really test this?

They said: yes, we did. The manufacturer had first burned it on their own and only then brought it in for tests. About 930 grams of ash remain from a kilogram of the material (when burned with a torch). It melts and drips, but the puddle does not burn.

We had our magnets tested at the same time (they sit on a polymer backing). Surprisingly, they burn poorly.

Assembly

This is what we started building from. Polycarbonate is good in that it is lighter than polyethylene and bends far less. True, the sheets arrive at 2.5 by 3 meters, and the supplier does not care what we do with them. We need pieces 2.8 meters long and 20–25 centimeters wide. The doors were sent to shops that cut the sheet properly, and the lamellas we cut ourselves. The cutting itself costs twice as much as the sheet.

Here's what happened:

Bottom line: the containment system pays for itself in less than a year. It saves us a constant 200–250 kW of fan coil power. There are further savings on the chillers, but exactly how much we do not know. The servers draw air at a constant rate and the fan coils blow. The chiller, however, switches on and off in a comb-like pattern, so it is hard to extract data from it. And it is impossible to stop the machine hall for tests.
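
To put the 200–250 kW figure in perspective, here is a small sketch that converts a constant power saving into energy per year. It assumes the saving really is continuous, 24 hours a day and 365 days a year; the function name is made up for the example.

```python
HOURS_PER_YEAR = 24 * 365  # 8760, assuming a continuous saving


def annual_kwh(continuous_kw):
    """Energy saved per year for a constant power saving in kW."""
    return continuous_kw * HOURS_PER_YEAR


low, high = annual_kwh(200), annual_kwh(250)
print(f"{low:,} to {high:,} kWh per year")
```

That is roughly 1.75 to 2.19 GWh per year on the fan coils alone, before any chiller savings.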

We are glad that at some point there was a rule to arrange racks in 5x5 modules so that their average consumption was six kW at most. That is, the heat is not concentrated in an island but spread across the hall. Still, there are situations where ten 15-kilowatt racks stand side by side, while opposite them is a rack that stays cold. So it balances out.
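
The module rule is easy to check mechanically. The sketch below assumes a 5x5 module means 25 rack positions and that empty positions count as 0 kW; the function name and the sample layouts are hypothetical.

```python
MODULE_RACKS = 25   # assumption: a 5x5 module holds 25 rack positions
MAX_AVG_KW = 6.0    # the rule from the text: six kW average at most


def module_ok(rack_kw):
    """True if the module's average rack load is within the limit."""
    if len(rack_kw) != MODULE_RACKS:
        raise ValueError(f"expected {MODULE_RACKS} rack positions")
    return sum(rack_kw) / len(rack_kw) <= MAX_AVG_KW


# Ten 15 kW racks plus fifteen empty positions average exactly 6 kW.
balanced = [15.0] * 10 + [0.0] * 15
# One more heavy rack pushes the average to 6.6 kW.
overloaded = [15.0] * 11 + [0.0] * 14

print(module_ok(balanced), module_ok(overloaded))
```

Note that the average says nothing about where in the module the heavy racks stand, which is exactly why the barriers were still needed.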

Where there is no rack, the barrier has to go all the way down to the floor.

Some of our customers are also fenced off with mesh cages. Those came with a few quirks of their own.

We cut the sheet into lamellas because the rack width is not fixed, while the mounting pitch of the comb is: there will always be three or four centimeters of offset to the right or to the left. If you have a 600 mm block made for a rack, there is an 85 percent chance it will not fit. Short and long lamellas, on the other hand, coexist and stick together. Sometimes we cut a lamella into an L shape to follow the contour of the racks.

Sensors

Before reducing the power of the fan coils, we had to set up very accurate temperature monitoring at different points in the hall so as not to run into surprises. That is how wireless sensors appeared. With wired ones, every row needs its own box for connecting the sensors, and sometimes extension cables on top of that. It turns into a garland. Very bad. And when those wires enter the customers' cages, the security staff immediately get agitated and ask for a certificate explaining what is being transmitted over those wires. Security's nerves must be protected. Wireless sensors, for some reason, do not bother them.

Besides, racks come and go. A sensor on a magnet is easier to remount, since each time it has to be hung higher or lower. If the servers are in the lower third of the rack, the sensor has to hang low, not at the standard one and a half meters from the floor on the rack door in the cold aisle. Measuring there is useless; you need to measure what actually reaches the hardware.

One sensor per three racks is enough; there is no point in hanging them more densely. The temperature does not differ. We were afraid that air would be pulled through the racks themselves, but that did not happen. Still, we supply slightly more cold air than the calculated values. We made windows in lamellas 3, 7 and 12, and a hole above the rack. On inspection rounds we put an anemometer into it and check that the flow goes where it should.
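
The placement rules above can be written down as a small helper. This is a sketch under stated assumptions: the midpoint-of-occupied-units rule is my simplification of "hang it where the servers are", heights are measured from the bottom of unit 1 rather than from the floor, and the function names are made up.

```python
import math

RACKS_PER_SENSOR = 3    # from the text: one sensor per three racks
U_HEIGHT_M = 0.04445    # one rack unit is 44.45 mm


def sensors_needed(rack_count):
    """Number of sensors for a row, at one sensor per three racks."""
    return math.ceil(rack_count / RACKS_PER_SENSOR)


def sensor_height_m(lowest_u, highest_u):
    """Midpoint of the occupied units, measured from the bottom of unit 1."""
    if not 1 <= lowest_u <= highest_u:
        raise ValueError("expected 1 <= lowest_u <= highest_u")
    return (lowest_u - 1 + highest_u) / 2 * U_HEIGHT_M


print(sensors_needed(10))                 # a row of ten racks
print(round(sensor_height_m(1, 14), 3))   # servers in units 1..14 only
```

For a rack filled only in its lower third, the suggested height comes out far below the standard one and a half meters, which matches what we saw in practice.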

Then we hung bright strings: an old sniper practice. It looks strange, but it lets you spot a possible problem faster.

Funny

While we were quietly doing all this, a vendor that makes engineering infrastructure for data centers showed up. They said: let us come and tell you about energy efficiency. They arrived and started talking about suboptimal halls and air currents. We nodded knowingly, because ours had already been in place for three years.

They hang three sensors on every rack. The monitoring pictures are stunning, beautiful. More than half the price of the solution is software. It is at the level of "an alert in Zabbix", but proprietary and very expensive. The catch is that they have the sensors and the software, but then they look for a contractor on site: the vendor itself does not do the containment.

It turns out that doing it their way costs five to seven times more than what we did.
