Build a kubernetes platform on Pinterest

Over the years, Pinterest has 300 million users of the service created more than 200 billion pins on more than 4 billion boards. To serve this army of users and an extensive content base, the portal has developed thousands of services, ranging from microservices that several CPUs can handle, and ending with giant monoliths that spin on a whole fleet of virtual machines. And then the moment came when the company’s eyes fell on k8s. What did the "cube" look to "Interest"? You’ll learn about this from our translation of the latest Pinterest engeneering blog post .

So, hundreds of millions of users and hundreds of billions of pins. To serve this army of users and an extensive content base, we have developed thousands of services, ranging from microservices that can be handled by several CPUs to giant monoliths that spin on a whole fleet of virtual machines. In addition, we have a variety of frameworks that may also require CPU resources, memory, or access to I / O.

In supporting this zoo of tools, the development team faces a number of challenges:

Container orchestration systems are a way to unify workload management. They open the way for you to increase the development speed and simplify infrastructure management, since all the resources involved in the project are managed by one centralized system.

Figure 1: Infrastructure Priorities (reliability, developer productivity, and efficiency).

The Cloud Management Platform team on Pinterest met K8s in 2017. By the first half of 2017, we documented most of our production facilities, including the API and all our web servers. After that, we carefully assessed the various systems of container solutions orchestration, building clusters and working with them. By the end of 2017, we decided to use Kubernetes. It was flexible enough and widely supported in the community of developers.

So far, we have created our own Kops-based cluster bootstrap tools and transferred to Kubernetes existing infrastructure components such as network, security, metrics, logging, identity management and traffic. We also implemented a workload modeling system for our resource, the complexity of which is hidden from the developers. Now we are focused on ensuring the stability of the cluster, its scaling and connecting new clients.

Getting started with Kubernetes on a Pinterest scale as a platform that our engineers will love is overwhelming.

As a large company, we have invested heavily in infrastructure tools. Examples include security tools that process certificates and distribute keys, traffic control components, service discovery systems, visibility, and sending logs and metrics. All this was collected for a reason: we went the normal way of trial and error, and therefore we wanted to integrate all this economy into the new infrastructure on Kubernetes, instead of reinventing the old bicycle on a new platform. This approach generally simplified migration, since all application support already exists, it does not need to be created from scratch.

On the other hand, the load forecast models in Kubernetes itself (for example, deployment, jobs and Daemon kits) are not enough for our project. These usability issues are huge barriers to moving to Kubernetes. For example, we heard service developers complain about the absence or incorrect setting of an input. We also encountered improper use of template engines when hundreds of copies were created with the same specification and task, which resulted in nightmare problems with debugging.

It was also very difficult to support different versions in the same cluster. Imagine the complexity of customer support if you need to work immediately in multiple versions of the same runtime, with all their problems, bugs and updates.

To make Kubernetes deployment easier for our engineers, as well as to simplify and speed up the infrastructure, we have developed our own custom resource definitions (CRD).

CRDs provide the following features:

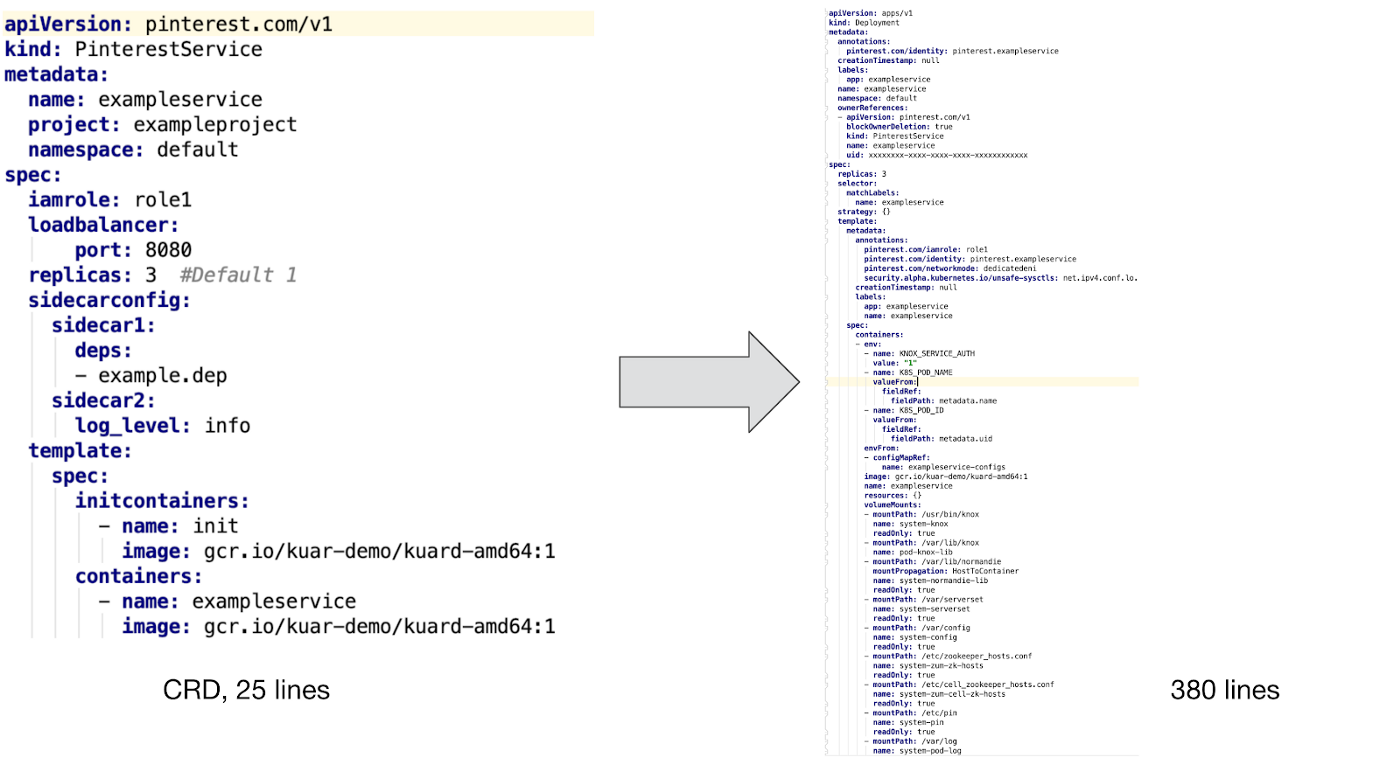

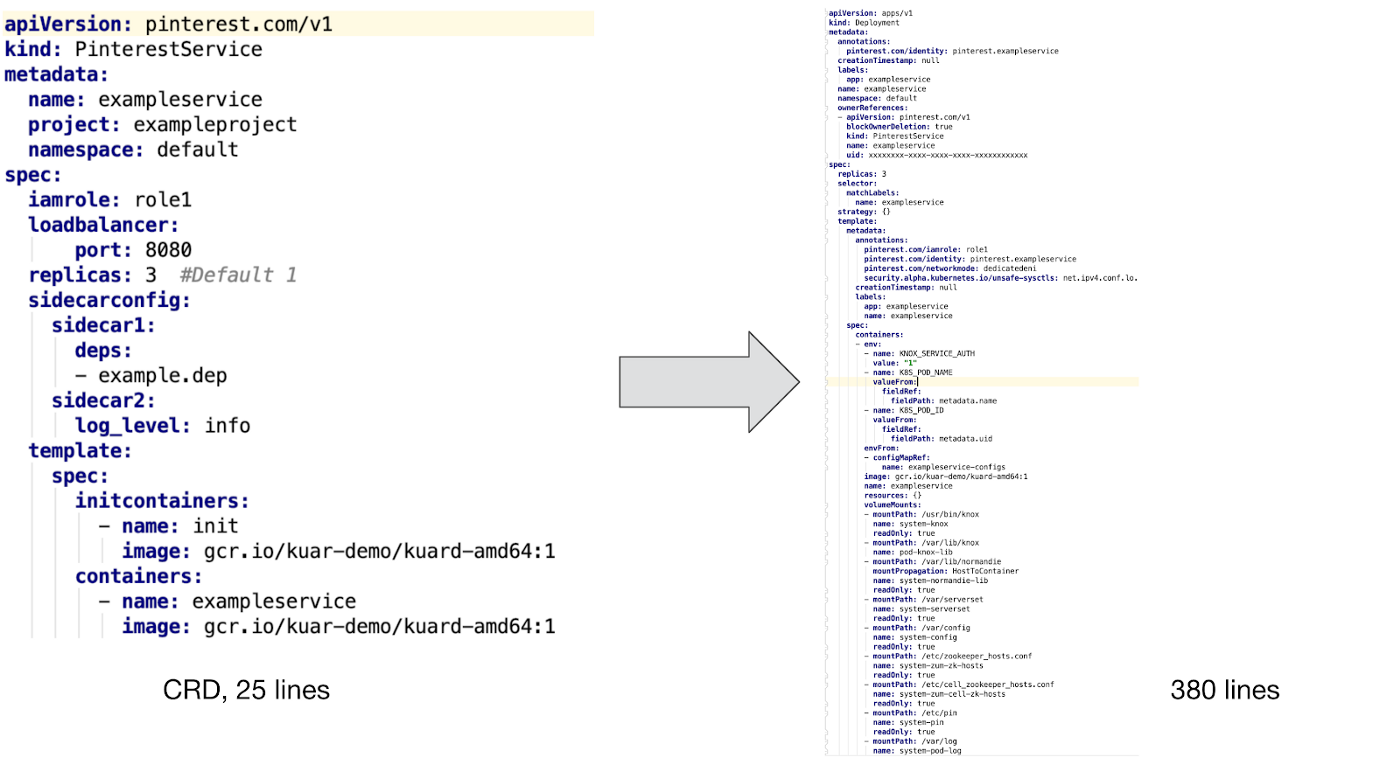

Here is an example of PinterestService and the internal resource that is controlled by our controller:

As you can see above, to support a custom container, we need to integrate an initialization container and several add-ons into it to ensure security, visibility and work with network traffic. In addition, we created configuration map templates and implemented support for PVC templates for batch jobs, as well as tracking a variety of environment variables to track identification, resource consumption, and garbage collection.

It is hard to imagine that developers would want to write these configuration files manually without CRD support, not to mention further support and debugging of configurations.

The figure above shows how to deploy a Pinterest custom resource in a Kubernetes cluster:

Note : this pre-release workflow deployment was created for the first users of the new k8s platform. We are now in the process of finalizing this process in order to fully integrate with our new CI / CD. This means that we cannot tell everything related to Kubernetes. We look forward to sharing our experience and telling the team about this progress in our next blog post “Building a CI / CD platform for Pinterest”.

Based on the specific needs of Pinterest, we have developed the following CRDs that are suitable for a variety of workflows:

We are also working on PinterestStatefulSet, which will soon be adapted for data warehouses and other stateful systems.

When the application module runs in Kubernetes, it automatically receives a certificate to identify itself. This certificate is used to access the secret store or to communicate with other services through mTLS. Meanwhile, the container initialization configurator and Daemon will download all the necessary dependencies before launching the container application. When everything is ready, the sidecar traffic and Daemon will register the module IP address in our Zookeeper so that customers can find it. All this will work, since the network module was configured before the application was launched.

The following are typical examples of run-time workload support. For other types of workloads, a slightly different support may be required, but they are all presented as sidecar pod level, nodal or daemon level virtual machines. We make sure that all this is deployed within the framework of the management infrastructure and coordinated between applications, which ultimately significantly reduces the load in terms of technical work and customer support.

We have put together an end-to-end test pipeline on top of the existing Kubernetes test infrastructure. These tests apply to all of our clusters. Our pipeline went through many changes before becoming part of the product cluster.

In addition to testing systems, we have monitoring and warning systems that constantly monitor the status of system components, resource consumption and other important indicators, notifying us only when necessary human intervention.

We looked at some alternatives to custom resources, such as mutational access controllers and template systems. However, all of them are fraught with serious difficulties in work, so we chose the path of CRD.

A mutation tolerance controller was used to enter sidecars, an environment variable, and other runtime support. Nevertheless, he faced various problems, for example, with resource binding and management of their life cycle, when such problems do not arise in CRD.

Note: Template systems such as Helm diagrams are also widely used to run applications with similar configurations. However, our production applications are too diverse to manage them with templates. Also, during continuous deployment, using templates will generate too many errors.

Now we are dealing with a mixed load on all of our clusters. To support similar processes of different types and sizes, we work in the following areas:

So, hundreds of millions of users and hundreds of billions of pins. To serve this army of users and an extensive content base, we have developed thousands of services, ranging from microservices that can be handled by several CPUs to giant monoliths that spin on a whole fleet of virtual machines. In addition, we have a variety of frameworks that may also require CPU resources, memory, or access to I / O.

In supporting this zoo of tools, the development team faces a number of challenges:

- Engineers do not have a unified way to run a work environment. Stateless services, Stateful services, and projects under active development are based on completely different technology stacks. This led to the creation of a whole training course for engineers, and also seriously complicates the work of our infrastructure team.

- Developers with their own fleet of virtual machines create a huge load on internal administrators. As a result, such simple operations as updating the OS or AMI last for weeks and months. This leads to an increase in workload in seemingly absolutely everyday situations.

- Difficulties with creating global infrastructure management tools on top of existing solutions. The situation is complicated by the fact that finding the owners of virtual machines is not easy. That is, we do not know whether it is safe to extract these capacities to work in other parts of our infrastructure.

Container orchestration systems are a way to unify workload management. They open the way for you to increase the development speed and simplify infrastructure management, since all the resources involved in the project are managed by one centralized system.

Figure 1: Infrastructure Priorities (reliability, developer productivity, and efficiency).

The Cloud Management Platform team on Pinterest met K8s in 2017. By the first half of 2017, we documented most of our production facilities, including the API and all our web servers. After that, we carefully assessed the various systems of container solutions orchestration, building clusters and working with them. By the end of 2017, we decided to use Kubernetes. It was flexible enough and widely supported in the community of developers.

So far, we have created our own Kops-based cluster bootstrap tools and transferred to Kubernetes existing infrastructure components such as network, security, metrics, logging, identity management and traffic. We also implemented a workload modeling system for our resource, the complexity of which is hidden from the developers. Now we are focused on ensuring the stability of the cluster, its scaling and connecting new clients.

Kubernetes: Pinterest's Way

Getting started with Kubernetes on a Pinterest scale as a platform that our engineers will love is overwhelming.

As a large company, we have invested heavily in infrastructure tools. Examples include security tools that process certificates and distribute keys, traffic control components, service discovery systems, visibility, and sending logs and metrics. All this was collected for a reason: we went the normal way of trial and error, and therefore we wanted to integrate all this economy into the new infrastructure on Kubernetes, instead of reinventing the old bicycle on a new platform. This approach generally simplified migration, since all application support already exists, it does not need to be created from scratch.

On the other hand, the load forecast models in Kubernetes itself (for example, deployment, jobs and Daemon kits) are not enough for our project. These usability issues are huge barriers to moving to Kubernetes. For example, we heard service developers complain about the absence or incorrect setting of an input. We also encountered improper use of template engines when hundreds of copies were created with the same specification and task, which resulted in nightmare problems with debugging.

It was also very difficult to support different versions in the same cluster. Imagine the complexity of customer support if you need to work immediately in multiple versions of the same runtime, with all their problems, bugs and updates.

Pinterest custom resources and controllers

To make Kubernetes deployment easier for our engineers, as well as to simplify and speed up the infrastructure, we have developed our own custom resource definitions (CRD).

CRDs provide the following features:

- Combining various native Kubernetes resources so that they work as a single load. For example, the PinterestService resource includes a deployment, login service, and configuration map. This allows developers to not worry about setting up DNS.

- Implement the necessary application support. The user should focus only on the container specification according to their business logic, while the CRD controller implements all the necessary init containers, environment variables, and pod specifications. This provides a fundamentally different level of comfort for developers.

- CRD controllers also manage the life cycle of their own resources and increase debugging availability. This includes agreeing on the desired and actual specifications, updating the status of the CRD and maintaining event logs and more. Without CRD, developers would have to manage a large set of resources, which would only increase the likelihood of an error.

Here is an example of PinterestService and the internal resource that is controlled by our controller:

As you can see above, to support a custom container, we need to integrate an initialization container and several add-ons into it to ensure security, visibility and work with network traffic. In addition, we created configuration map templates and implemented support for PVC templates for batch jobs, as well as tracking a variety of environment variables to track identification, resource consumption, and garbage collection.

It is hard to imagine that developers would want to write these configuration files manually without CRD support, not to mention further support and debugging of configurations.

Workflow application deployment

The figure above shows how to deploy a Pinterest custom resource in a Kubernetes cluster:

- Developers interact with our Kubernetes cluster through the CLI and user interface.

- CLI / UI tools extract the workflow configuration YAML files and other assembly properties (same version identifier) from Artifactory, and then send them to the Job Submission Service. This step ensures that only production versions are delivered to the cluster.

- JSS is the gateway to various platforms, including Kubernetes. This is where user authentication, quota issuance and partial verification of our CRD configuration takes place.

- After checking the CRD on the JSS side, the information is sent to the k8s platform API.

- Our CRD controller monitors events on all user resources. It converts CR into k8s native resources, adds the necessary modules, sets the appropriate environment variables and performs other auxiliary work, which guarantees container user applications sufficient infrastructure support.

- Then the CRD controller transfers the received data to the Kubernetes API so that it is processed by the scheduler and put into operation.

Note : this pre-release workflow deployment was created for the first users of the new k8s platform. We are now in the process of finalizing this process in order to fully integrate with our new CI / CD. This means that we cannot tell everything related to Kubernetes. We look forward to sharing our experience and telling the team about this progress in our next blog post “Building a CI / CD platform for Pinterest”.

Types of Special Resources

Based on the specific needs of Pinterest, we have developed the following CRDs that are suitable for a variety of workflows:

- PinterestService is a long-running stateless-services. Many of our main systems are based on a set of such services.

- PinterestJobSet models full-cycle batch jobs. Pinterest has a common scenario, according to which several tasks run the same containers in parallel, and regardless of other similar processes.

- PinterestCronJob is widely used in conjunction with small periodic loads. This is a cron native shell with Pinterest support mechanisms that are responsible for security, traffic, logs and metrics.

- PinterestDaemon includes Daemon's infrastructure. This family continues to grow as we add more support for our clusters.

- PinterestTrainingJob extends to Tensorflow and Pytorch processes, providing the same level of on-line support as all other CRDs. Since Pinterest is actively using Tensorflow and other machine learning systems, we had a reason to build a separate CRD around them.

We are also working on PinterestStatefulSet, which will soon be adapted for data warehouses and other stateful systems.

Runtime support

When the application module runs in Kubernetes, it automatically receives a certificate to identify itself. This certificate is used to access the secret store or to communicate with other services through mTLS. Meanwhile, the container initialization configurator and Daemon will download all the necessary dependencies before launching the container application. When everything is ready, the sidecar traffic and Daemon will register the module IP address in our Zookeeper so that customers can find it. All this will work, since the network module was configured before the application was launched.

The following are typical examples of run-time workload support. For other types of workloads, a slightly different support may be required, but they are all presented as sidecar pod level, nodal or daemon level virtual machines. We make sure that all this is deployed within the framework of the management infrastructure and coordinated between applications, which ultimately significantly reduces the load in terms of technical work and customer support.

Testing and QA

We have put together an end-to-end test pipeline on top of the existing Kubernetes test infrastructure. These tests apply to all of our clusters. Our pipeline went through many changes before becoming part of the product cluster.

In addition to testing systems, we have monitoring and warning systems that constantly monitor the status of system components, resource consumption and other important indicators, notifying us only when necessary human intervention.

Alternatives

We looked at some alternatives to custom resources, such as mutational access controllers and template systems. However, all of them are fraught with serious difficulties in work, so we chose the path of CRD.

A mutation tolerance controller was used to enter sidecars, an environment variable, and other runtime support. Nevertheless, he faced various problems, for example, with resource binding and management of their life cycle, when such problems do not arise in CRD.

Note: Template systems such as Helm diagrams are also widely used to run applications with similar configurations. However, our production applications are too diverse to manage them with templates. Also, during continuous deployment, using templates will generate too many errors.

Future work

Now we are dealing with a mixed load on all of our clusters. To support similar processes of different types and sizes, we work in the following areas:

- A cluster of clusters distributes large applications across clusters to provide scalability and stability.

- Ensuring stability, scalability and visibility of the cluster to create a connection between the application and its SLA.

- Resource and quota management so that applications do not conflict with each other, and the cluster scale is controlled by us.

- New CI / CD platform for supporting and deploying applications in Kubernetes.

All Articles