New in Hadoop: Get to Know the Various File Formats in Hadoop

Hello! We are publishing a translation of the article prepared for students of the new group of the Data Engineer course. If you are interested in learning how to build an efficient and scalable data processing system at minimal cost, see the recording of the master class by Yegor Mateshuk!

A few weeks ago I wrote an article about Hadoop that covered its various parts and the role it plays in data engineering. In this article I will give a brief description of the various file formats in Hadoop. It is a quick and easy topic. If you are trying to understand how Hadoop works and what place it takes in a data engineer's work, check out my article on Hadoop.

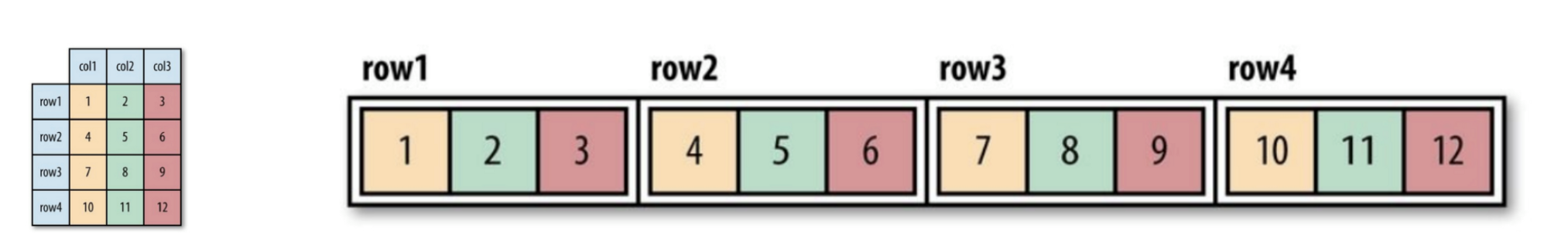

Hadoop file formats are divided into two categories: row-oriented and column-oriented.

Row-oriented:

Rows of data are stored together, one after another, forming contiguous storage: SequenceFile, MapFile, Avro Datafile. As a result, even if only a small part of a row is needed, the whole row is still read into memory. Lazy (deferred) deserialization can alleviate the problem to some degree, but the overhead of reading the entire row from disk cannot be avoided. Row-oriented storage is suitable when the entire row needs to be processed at once.

Column-oriented:

The file is split into columns, and the values of each column are stored together: Parquet, RCFile, ORCFile. A column-oriented format lets you skip unneeded columns when reading data, which suits situations where only a few fields of each row are needed. However, reading and writing this format requires more memory, because a whole group of rows, not just a single row, must be buffered in memory (to assemble a column across several rows). It is also unsuitable for streaming writes: if a write fails, the current file cannot be recovered, whereas row-oriented data can be read back up to the last sync point after a write error. That is why, for example, Flume uses a row-oriented storage format.
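To make the difference concrete, here is a minimal sketch in Python. Plain lists and dicts stand in for the on-disk layouts; the table contents and the helper names `ages_from_rows` and `ages_from_columns` are made up for illustration and are not part of any Hadoop API.

```python
# Illustrative only: in-memory structures stand in for on-disk layouts.
table = [
    {"id": 1, "name": "alice", "age": 30},
    {"id": 2, "name": "bob", "age": 25},
    {"id": 3, "name": "carol", "age": 41},
]

# Row-oriented: all fields of one record sit next to each other.
row_layout = [tuple(row.values()) for row in table]

# Column-oriented: all values of one column sit next to each other.
column_layout = {name: [row[name] for row in table] for name in table[0]}


def ages_from_rows(rows):
    # Every full row is touched even though only one field is needed.
    return [row[2] for row in rows]


def ages_from_columns(columns):
    # Only the "age" column is touched; the other columns are skipped.
    return columns["age"]


print(ages_from_rows(row_layout))
print(ages_from_columns(column_layout))
```

Both calls return the same ages, but the row-oriented version has to walk through every complete record to get them.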

Figure 1 (left). Logical table

Figure 2 (right). Row-oriented layout (SequenceFile)

Figure 3. Column-oriented layout

If you haven't fully understood what column or row orientation is, do not worry. You can follow this link to understand the difference between the two.

Here are a few file formats that are widely used in the Hadoop system:

Sequence File

The storage format depends on whether the file is compressed and, if so, whether it uses record compression or block compression:

Figure 4. The internal structure of the sequence file without compression and with compression of records.

Without compression:

Each record is stored in the following order: record length, key length, key, and value (the value length follows from the first two). The lengths are measured in bytes. Serialization is performed using the specified serializer.
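The record layout can be sketched with Python's `struct` module. This is a simplified illustration, not the real SequenceFile writer: it ignores the file header, sync markers and Writable serialization, and it assumes 4-byte big-endian lengths with the record length covering key plus value. The function names `pack_record` and `unpack_record` are hypothetical.

```python
import struct


def pack_record(key: bytes, value: bytes) -> bytes:
    # Assumption: record length = key bytes + value bytes.
    record_length = len(key) + len(value)
    return struct.pack(">ii", record_length, len(key)) + key + value


def unpack_record(buf: bytes, offset: int = 0):
    # Read the two length fields, then slice out key and value.
    record_length, key_length = struct.unpack_from(">ii", buf, offset)
    start = offset + 8  # skip the two 4-byte length fields
    key = buf[start:start + key_length]
    value = buf[start + key_length:start + record_length]
    return key, value, start + record_length


data = pack_record(b"k1", b"hello") + pack_record(b"k2", b"world!")
key, value, next_offset = unpack_record(data)
print(key, value, next_offset)
```

The returned offset points at the next record, so a reader can walk the file record by record without knowing the value length in advance.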

Record Compression:

Only the value is compressed, and the compression codec is stored in the header.

Block Compression:

Multiple records are compressed together, so that similarities between records can be exploited to save space. Sync markers are added at the beginning and end of each block. The minimum block size is set by the io.seqfile.compress.blocksize property.

Figure 4. The internal structure of the sequence file with block compression.
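Why block compression saves more space than record compression can be shown with a small sketch. It uses `zlib` from the Python standard library as a stand-in codec and made-up records; it does not reproduce the actual SequenceFile block format.

```python
import zlib

# Made-up records that share a lot of text, as log-like data often does.
records = [
    f"user={i % 3};status=active;region=eu-west".encode() for i in range(200)
]

# Record compression: each value is compressed on its own.
record_compressed = sum(len(zlib.compress(r)) for r in records)

# Block compression: many records are compressed together,
# so repeated patterns across records are stored only once.
block = b"".join(records)
block_compressed = len(zlib.compress(block))

print(record_compressed, block_compressed)
```

With such short, similar records the per-record overhead of the codec dominates, while the block variant compresses the repetition away.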

Map file

A map file is a kind of sequence file: sorting a sequence file and adding an index to it yields a map file. The index is stored as a separate file that usually contains an entry for every 128th record. The index can be loaded into memory for fast lookups, because the data file is sorted by key.

Map file entries must be written in key order; otherwise an IOException is thrown.
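The idea of a sparse index over sorted data can be sketched as follows. This is only an in-memory illustration: a sorted list plays the role of the data file, and the names `INDEX_INTERVAL`, `index_keys`, `index_pos` and `get` are hypothetical rather than the MapFile API.

```python
import bisect

INDEX_INTERVAL = 128  # one index entry per 128 records, as described above

# Sorted (key, value) pairs stand in for the data file.
data = [(f"key{i:05d}", i * i) for i in range(1000)]

# The index keeps every 128th key together with its position.
index_pos = list(range(0, len(data), INDEX_INTERVAL))
index_keys = [data[pos][0] for pos in index_pos]


def get(key):
    # Binary search in the small in-memory index...
    slot = bisect.bisect_right(index_keys, key) - 1
    if slot < 0:
        return None  # key sorts before the first record
    start = index_pos[slot]
    # ...then a short sequential scan of at most 128 records.
    for k, v in data[start:start + INDEX_INTERVAL]:
        if k == key:
            return v
        if k > key:
            break  # data is sorted, so the key cannot appear later
    return None


print(get("key00300"))
```

The lookup only works because the data is sorted by key, which is why out-of-order writes have to be rejected.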

Derived map file types:

- SetFile: a special map file for storing a sequence of keys of type Writable. Keys are written in sorted order.
- ArrayFile: the key is an integer indicating the position in the array; the value is of type Writable.
- BloomMapFile: optimized for the map file's get() method using dynamic Bloom filters. The filter is kept in memory, and the regular get() method is called to perform a read only if the filter indicates that the key may exist.
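The Bloom filter check in front of get() can be sketched like this. It is a simplified, fixed-size filter written for illustration (BloomMapFile uses dynamic Bloom filters); the class `BloomFilter`, the dict `store` and the counter `reads` are all hypothetical.

```python
import hashlib


class BloomFilter:
    def __init__(self, size_bits=1024, num_hashes=3):
        self.size = size_bits
        self.num_hashes = num_hashes
        self.bits = 0  # a Python int used as a bit array

    def _positions(self, key):
        # Derive several bit positions from seeded SHA-256 digests.
        for seed in range(self.num_hashes):
            digest = hashlib.sha256(f"{seed}:{key}".encode()).digest()
            yield int.from_bytes(digest[:8], "big") % self.size

    def add(self, key):
        for pos in self._positions(key):
            self.bits |= 1 << pos

    def might_contain(self, key):
        # False means "definitely absent"; True means "possibly present".
        return all((self.bits >> pos) & 1 for pos in self._positions(key))


store = {"apple": 1, "banana": 2}  # stands in for the map file on disk
bloom = BloomFilter()
for k in store:
    bloom.add(k)

reads = []  # records which keys actually reached the "disk" lookup


def get(key):
    if not bloom.might_contain(key):
        return None  # skip the expensive read entirely
    reads.append(key)
    return store.get(key)


print(get("apple"), get("cherry"))
```

A Bloom filter never reports a stored key as absent, so skipping the read on a negative answer is always safe; a positive answer may occasionally be a false alarm, which is why the regular lookup still runs afterwards.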

The column-oriented file formats in the Hadoop ecosystem, described below, include RCFile, ORCFile, and Parquet. The column-oriented counterpart of Avro is Trevni.

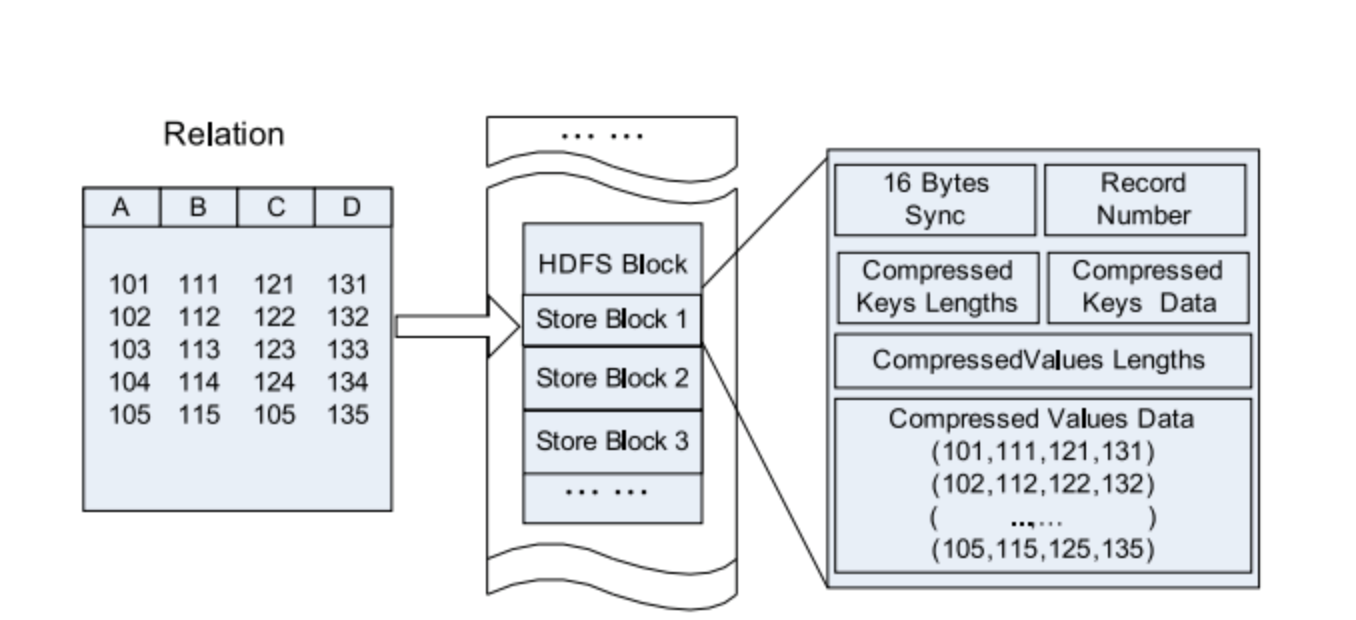

RC file

Hive's Record Columnar File first divides the data into row groups, and within each row group the data is stored column by column. Its structure is as follows:

Figure 5. Location of the RC file data in the HDFS block.

Compare this with purely row-oriented and purely column-oriented layouts:

Figure 6. Storage row by row in the HDFS block.

Figure 7. Grouping by columns in an HDFS block.
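The hybrid RCFile layout (row groups first, columns inside each group) can be sketched in a few lines. The sample rows, the group size of 2 and the function name `to_row_groups` are assumptions for illustration; real row groups are sized in bytes, not rows.

```python
rows = [
    (1, "a", 10.0),
    (2, "b", 20.0),
    (3, "c", 30.0),
    (4, "d", 40.0),
    (5, "e", 50.0),
]
ROW_GROUP_SIZE = 2  # hypothetical; chosen small to keep the output readable


def to_row_groups(rows, group_size):
    groups = []
    for start in range(0, len(rows), group_size):
        chunk = rows[start:start + group_size]
        # Inside a row group, transpose rows into columns.
        groups.append([list(col) for col in zip(*chunk)])
    return groups


row_groups = to_row_groups(rows, ROW_GROUP_SIZE)

# Reading one column touches only that column's slice in each row group.
names = [value for group in row_groups for value in group[1]]
print(row_groups[0], names)
```

All columns of a given row stay within one row group, while a single-column read can still skip the other columns inside each group.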

ORC file

ORCFile (Optimized Row Columnar File) is a more efficient file format than RCFile. Internally it divides the data into stripes of 250 MB each. Each stripe has an index, data, and a footer. The index stores the minimum and maximum values of each column, as well as the row positions within each column.

Figure 8. Location of data in the ORC file
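How the per-stripe minimum and maximum help a reader can be sketched as follows. The stripes here hold a single integer column and are cut every 250 rows purely for illustration; `STRIPE_ROWS` and `scan_equal` are hypothetical names, not part of the ORC API.

```python
values = list(range(1000))  # one integer column, already sorted
STRIPE_ROWS = 250  # hypothetical rows per stripe

stripes = []
for start in range(0, len(values), STRIPE_ROWS):
    data = values[start:start + STRIPE_ROWS]
    # The "index" part of the stripe: min and max of the column.
    stripes.append({"min": min(data), "max": max(data), "data": data})


def scan_equal(target):
    hits, stripes_read = [], 0
    for stripe in stripes:
        # Skip the stripe if the target cannot be inside it.
        if target < stripe["min"] or target > stripe["max"]:
            continue
        stripes_read += 1
        hits.extend(v for v in stripe["data"] if v == target)
    return hits, stripes_read


print(scan_equal(620))
```

For a value that falls into a single stripe's range, only that stripe's data is read; the other stripes are ruled out from the index alone.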

In Hive, the following commands are used to work with ORC files:

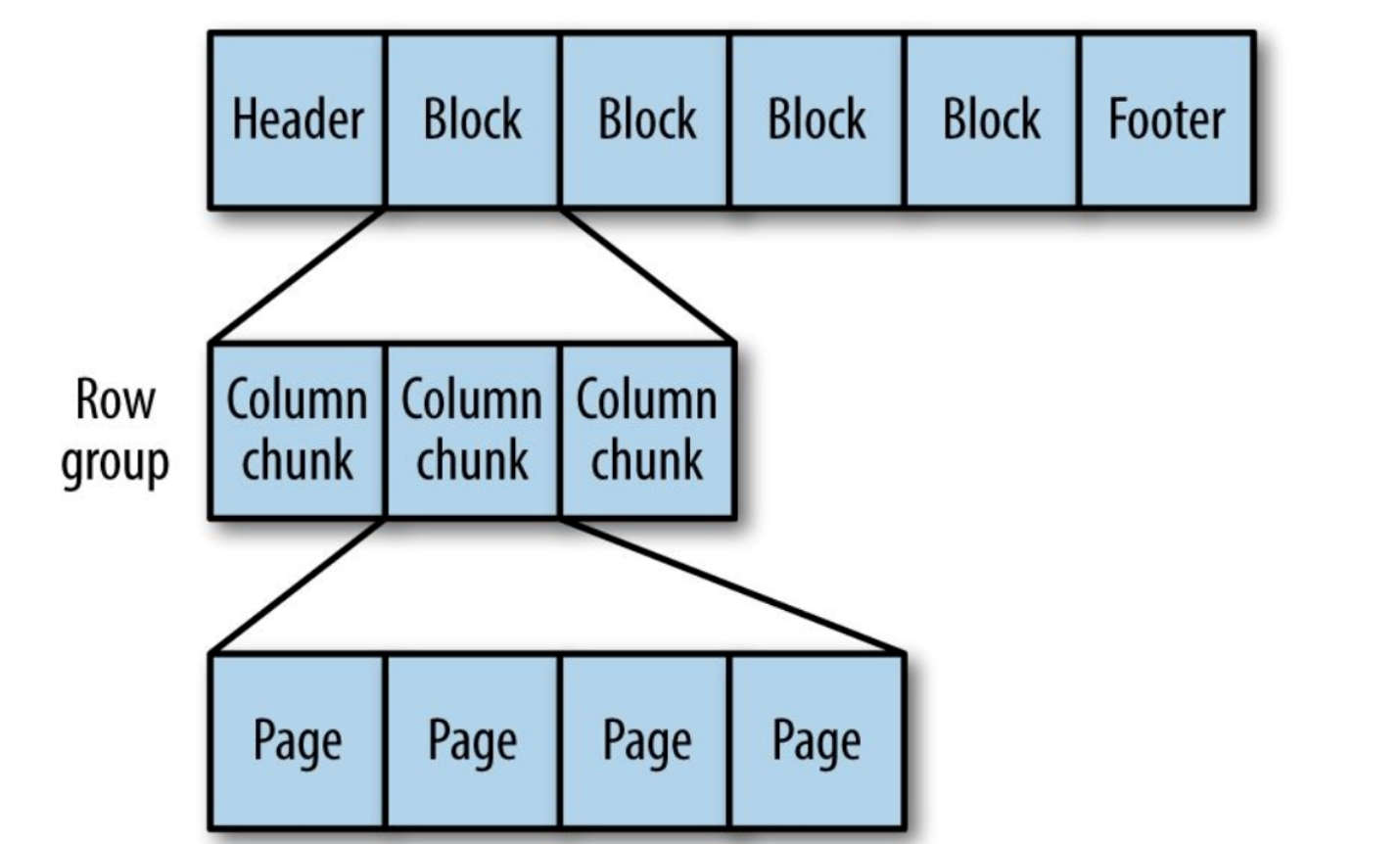

Parquet

Parquet is a general-purpose column-oriented storage format based on Google Dremel. It is especially good at handling deeply nested data.

Figure 9. The internal structure of the Parquet file.

Parquet converts nested structures into flat columnar storage, described by a repetition level and a definition level (R and D), and uses this metadata to reassemble the records when the data is read back. Here is an example for R and D:

    AddressBook {
      contacts: {
        phoneNumber: "555 987 6543"
      }
      contacts: {
      }
    }
    AddressBook {
    }
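For the two records above, the R and D values of the column contacts.phoneNumber can be worked out with a short sketch. It assumes a schema in which contacts is a repeated field and phoneNumber is optional, so the maximum definition level is 2; the article does not spell this schema out, and the function `phone_number_levels` is hypothetical.

```python
def phone_number_levels(address_books):
    """Return (value, R, D) triples for the column contacts.phoneNumber.

    Assumption: contacts is repeated and phoneNumber is optional.
    """
    out = []
    for book in address_books:
        contacts = book.get("contacts", [])
        if not contacts:
            # Nothing on the path is defined: new record, D = 0.
            out.append((None, 0, 0))
            continue
        for i, contact in enumerate(contacts):
            # R = 0 starts a new record; R = 1 repeats at the contacts level.
            r = 0 if i == 0 else 1
            phone = contact.get("phoneNumber")
            if phone is None:
                out.append((None, r, 1))  # contacts defined, phoneNumber not
            else:
                out.append((phone, r, 2))  # the whole path is defined
    return out


books = [
    {"contacts": [{"phoneNumber": "555 987 6543"}, {}]},
    {},
]
levels = phone_number_levels(books)
for triple in levels:
    print(triple)
```

The three triples are enough to rebuild both records: R says where a new record or a new contacts entry starts, and D says how much of the path to phoneNumber actually exists.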

That's all. Now you know the differences between the file formats in Hadoop. If you find any errors or inaccuracies, please feel free to contact me. You can reach me on LinkedIn.
