/etc/resolv.conf in Kubernetes pods and the ndots:5 option: why it may adversely affect application performance

We recently launched Kubernetes 1.9 on AWS using Kops. Yesterday, while gradually rolling out new traffic to the largest of our Kubernetes clusters, I started noticing unusual DNS resolution errors in our application logs.

This issue has been discussed on GitHub for quite a while, so I decided to figure it out too. In the end, I realized that in our case it was caused by increased load on kube-dns and dnsmasq. The most interesting part, and the newest to me, was the actual reason for the significant increase in DNS query traffic. That reason, and what to do about it, is what this post is about.

DNS resolution inside a container, as on any Linux system, is determined by the /etc/resolv.conf configuration file. The default Kubernetes dnsPolicy is ClusterFirst, which means that every DNS query is sent to dnsmasq running in the kube-dns pod inside the cluster, which in turn forwards the query to the kube-dns application if the name ends with the cluster suffix, or otherwise to an upstream DNS server.
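The forwarding rule just described can be modeled as a simple suffix check. This is a minimal sketch, assuming the cluster domain is cluster.local; the return labels "kube-dns" and "upstream" and the function name route_query are hypothetical and not real dnsmasq settings.

```python
# Simplified model of the ClusterFirst forwarding decision made by dnsmasq.
# Assumption: the cluster domain is "cluster.local"; the labels returned here
# are illustrative names only, not dnsmasq configuration values.

CLUSTER_DOMAIN = "cluster.local"


def route_query(name: str, cluster_domain: str = CLUSTER_DOMAIN) -> str:
    """Return where a query for `name` would be forwarded in this model."""
    # Ignore the trailing dot of a fully qualified name and compare case-insensitively.
    bare = name.rstrip(".").lower()
    if bare == cluster_domain or bare.endswith("." + cluster_domain):
        return "kube-dns"   # names under the cluster suffix go to kube-dns
    return "upstream"       # everything else goes to the upstream DNS server


if __name__ == "__main__":
    for q in ["my-svc.default.svc.cluster.local",
              "google.com.",
              "google.com.default.svc.cluster.local"]:
        print(f"{q:40} -> {route_query(q)}")
```

Note the last name: an external name with a cluster search domain appended still ends with the cluster suffix, so in this model it lands on kube-dns rather than going straight upstream.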

By default, the /etc/resolv.conf file inside each container looks like this:

    nameserver 100.64.0.10
    search namespace.svc.cluster.local svc.cluster.local cluster.local eu-west-1.compute.internal
    options ndots:5

As you can see, there are three directives:

- The name server is the IP address of the kube-dns service
- Four local search domains are specified
- The ndots:5 option is set
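To make the three directives concrete, here is a minimal parser sketch for a pod's resolv.conf. It assumes only the nameserver, search and options directives shown above and ignores others such as domain or sortlist; the ResolvConf class and parse_resolv_conf function are hypothetical helpers, not part of any library.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class ResolvConf:
    nameservers: List[str] = field(default_factory=list)
    search: List[str] = field(default_factory=list)
    ndots: int = 1  # resolver default when no ndots option is present


def parse_resolv_conf(text: str) -> ResolvConf:
    """Parse nameserver/search/options lines; other directives are ignored."""
    conf = ResolvConf()
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line[0] in "#;":   # blank lines and comment lines
            continue
        key, *values = line.split()
        if key == "nameserver" and values:
            conf.nameservers.append(values[0])
        elif key == "search":
            conf.search = values          # the last "search" line wins
        elif key == "options":
            for opt in values:
                if opt.startswith("ndots:"):
                    # resolv.conf(5) says the value is silently capped at 15
                    conf.ndots = min(int(opt.split(":", 1)[1]), 15)
    return conf


POD_RESOLV_CONF = """\
nameserver 100.64.0.10
search namespace.svc.cluster.local svc.cluster.local cluster.local eu-west-1.compute.internal
options ndots:5
"""

if __name__ == "__main__":
    conf = parse_resolv_conf(POD_RESOLV_CONF)
    print(conf.nameservers, len(conf.search), conf.ndots)
```

For the default pod file above this prints one name server, four search domains and ndots 5.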

An interesting part of this configuration is how the local search domains and the ndots:5 setting interact. To understand this, you need to understand how DNS resolution works for names that are not fully qualified.

What is a fully qualified name?

A fully qualified name is one for which no local search is performed: the name is treated as absolute during resolution. By convention, DNS software considers a name fully qualified if it ends with a period (.), and not fully qualified otherwise. That is, google.com. is fully qualified, but google.com is not.

How is a name that is not fully qualified handled?

When an application connects to a remote host specified by name, DNS resolution is usually performed through a library call such as getaddrinfo(). But if the name is not fully qualified (does not end with a .), does the call first try to resolve the name as absolute, or does it go through the local search domains first? That depends on the ndots option.

From the resolv.conf man page:

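ndots:n sets a threshold for the number of dots which must appear in a name given to res_query(3) (see resolver(3)) before an initial absolute query will be made. The default for n is 1, meaning that if there are any dots in a name, the name will be tried first as an absolute name before any search list elements are appended to it.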

This means that if ndots is set to 5 and the name contains fewer than 5 dots, the resolver first tries the local search domains one after another and, only if none of them succeeds, finally resolves the name as absolute.
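The ordering rule can be written down as a short function. This is a simplified model of glibc-style behavior, not the resolver's actual code: it returns the order in which names are tried (the resolver stops at the first success) and ignores details such as separate A and AAAA queries. The function name candidate_names is hypothetical.

```python
from typing import List


def candidate_names(name: str, search: List[str], ndots: int) -> List[str]:
    """Order in which a stub resolver tries names (simplified sketch)."""
    if name.endswith("."):
        return [name]                    # fully qualified: no search at all
    expanded = [f"{name}.{domain}." for domain in search]
    absolute = name + "."
    if name.count(".") >= ndots:
        return [absolute] + expanded     # enough dots: absolute name first
    return expanded + [absolute]         # too few dots: search domains first


SEARCH = ["namespace.svc.cluster.local", "svc.cluster.local",
          "cluster.local", "eu-west-1.compute.internal"]

if __name__ == "__main__":
    for candidate in candidate_names("google.com", SEARCH, ndots=5):
        print(candidate)
```

With the default pod settings, google.com (one dot, fewer than 5) is tried as google.com.namespace.svc.cluster.local., google.com.svc.cluster.local., google.com.cluster.local., google.com.eu-west-1.compute.internal. and only then as google.com.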

Why can ndots:5 adversely affect application performance?

As you can imagine, if your application generates a lot of external traffic, then for each TCP connection it establishes (or, more precisely, for each name it resolves) it will issue 5 DNS queries before the name is resolved correctly: it first goes through the 4 local search domains and only then issues a query for the absolute name.
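A back-of-the-envelope sketch of what this means for DNS load. It assumes that every search-domain expansion of an existing external name fails, that the absolute name succeeds, that nothing is cached, and that one query is sent per name tried (in practice getaddrinfo() often sends both A and AAAA queries, which can double these numbers). The host api.example.com and the rate of 1000 lookups per second are hypothetical.

```python
def queries_per_lookup(name: str, search_domains: int = 4, ndots: int = 5) -> int:
    """Queries needed to resolve an existing external name (simplified model)."""
    if name.endswith(".") or name.count(".") >= ndots:
        return 1                   # absolute name is tried first and succeeds
    return search_domains + 1      # every search domain fails, then absolute


def dns_qps(lookups_per_second: int, name: str,
            search_domains: int = 4, ndots: int = 5) -> int:
    """DNS queries per second generated by resolving `name` at a given rate."""
    return lookups_per_second * queries_per_lookup(name, search_domains, ndots)


if __name__ == "__main__":
    rate = 1000  # hypothetical lookups per second of one external host
    print(dns_qps(rate, "api.example.com"))   # 5000
    print(dns_qps(rate, "api.example.com."))  # 1000
```

Under these assumptions, the same application traffic produces five times as many DNS queries simply because the host name lacks a trailing dot.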

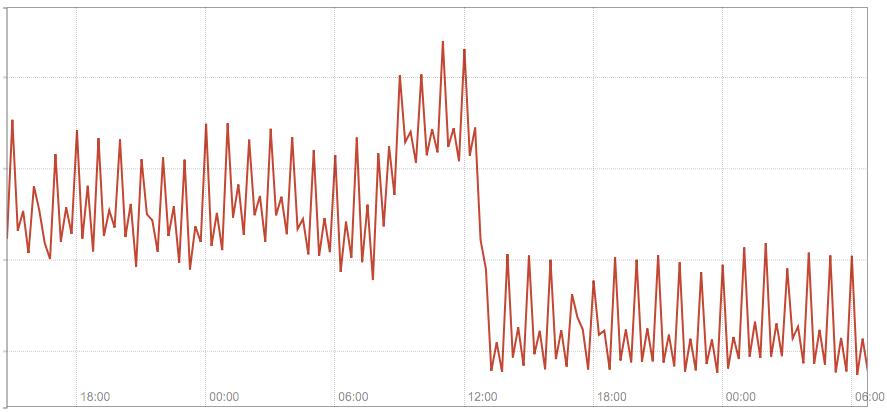

The following diagram shows the total traffic on our 3 kube-dns pods before and after we switched several host names configured in our application to fully qualified names.

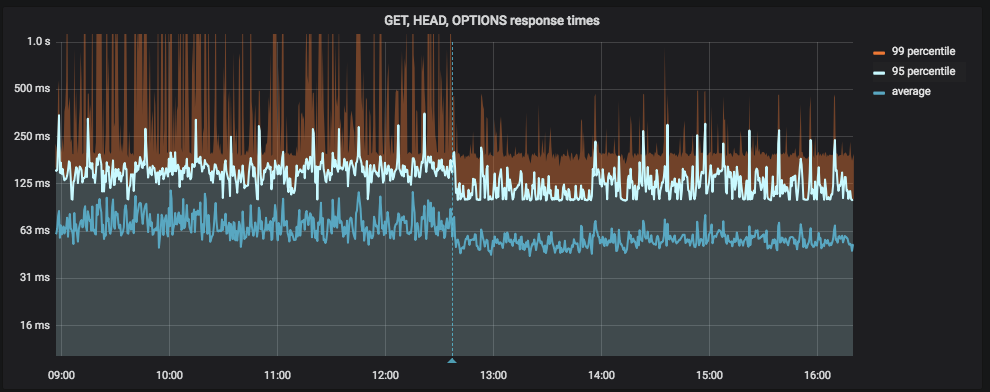

The following diagram shows our application's latency before and after we switched several host names configured in our application to fully qualified names (the vertical blue line marks the deployment):

Solution #1 - use fully qualified names

If you have a few static external names (that is, names defined in the application configuration) to which you open a large number of connections, perhaps the simplest solution is to make them fully qualified by simply appending a . to the end.
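A minimal sketch of this fix applied to a configuration map. The EXTERNAL_HOSTS dictionary and its keys are hypothetical; the helper leaves IP literals and names that already end with a dot untouched. One thing worth checking before adopting it: some HTTP clients pass the trailing dot through to the Host header or TLS SNI, and some servers treat example.com. and example.com differently.

```python
import ipaddress
from typing import Dict


def to_fqdn(host: str) -> str:
    """Append the root '.' so the resolver skips the search list."""
    if not host or host.endswith("."):
        return host                # empty or already fully qualified
    try:
        ipaddress.ip_address(host)
        return host                # IP literal: no DNS lookup, nothing to change
    except ValueError:
        return host + "."


# Hypothetical application config: external hosts the app connects to often.
EXTERNAL_HOSTS: Dict[str, str] = {
    "payments_api": "api.payments.example.com",
    "metrics": "metrics.example.net.",
    "cache": "10.0.0.12",
}

if __name__ == "__main__":
    fixed = {key: to_fqdn(value) for key, value in EXTERNAL_HOSTS.items()}
    print(fixed)
```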

This is not a definitive solution, but it quickly, if not cleanly, improves the situation. We applied this fix to solve our problem; its results are shown in the screenshots above.

Solution #2 - customizing ndots in dnsConfig

Kubernetes 1.9 introduced an alpha feature (beta in v1.10) that gives finer control over DNS parameters through the dnsConfig pod property. Among other things, it lets you set the ndots value for a specific pod, for example:

    apiVersion: v1
    kind: Pod
    metadata:
      namespace: default
      name: dns-example
    spec:
      containers:
        - name: test
          image: nginx
      dnsConfig:
        options:
          - name: ndots
            value: "1"
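To see what lowering ndots changes, here is a toy simulation that counts the queries sent before the first successful answer. It reuses the same simplified ordering model as the ndots rule above; the search list (assumed to match the default namespace of the example pod), the EXISTING set of resolvable names, and the service name my-svc.other-ns are all hypothetical.

```python
from typing import List, Set

# Assumed search list for a pod in namespace "default".
SEARCH = ["default.svc.cluster.local", "svc.cluster.local",
          "cluster.local", "eu-west-1.compute.internal"]


def tried_names(name: str, search: List[str], ndots: int) -> List[str]:
    """Order of names a glibc-style resolver tries (simplified model)."""
    if name.endswith("."):
        return [name]
    expanded = [f"{name}.{domain}." for domain in search]
    if name.count(".") >= ndots:
        return [name + "."] + expanded
    return expanded + [name + "."]


def queries_until_answer(name: str, ndots: int, existing: Set[str]) -> int:
    """Count queries sent until the first candidate found in `existing`."""
    candidates = tried_names(name, SEARCH, ndots)
    for count, candidate in enumerate(candidates, start=1):
        if candidate in existing:
            return count
    return len(candidates)  # nothing resolved: every candidate was queried


# Hypothetical names that resolve in this toy model.
EXISTING = {"google.com.", "my-svc.other-ns.svc.cluster.local."}

if __name__ == "__main__":
    for host in ["google.com", "my-svc.other-ns"]:
        print(f"{host}: ndots:5 -> {queries_until_answer(host, 5, EXISTING)}, "
              f"ndots:1 -> {queries_until_answer(host, 1, EXISTING)}")
```

In this model, ndots:1 cuts the external lookup from 5 queries to 1, while a cross-namespace service name with a dot in it goes from 2 queries to 3, because it is now tried as an absolute name first. That trade-off is worth weighing for your own mix of names.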
