Docker images can also be built in werf using the usual Dockerfile

Better late than never. Or how we almost made a serious mistake by not supporting ordinary Dockerfiles for building application images.


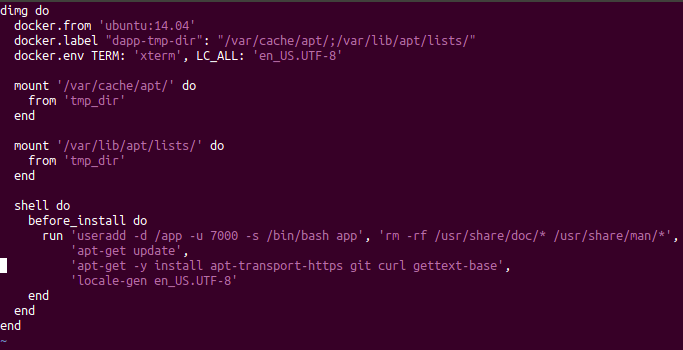

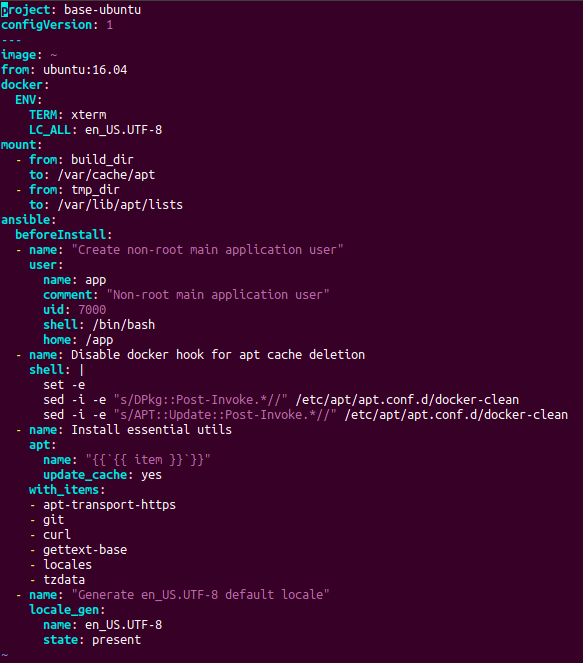

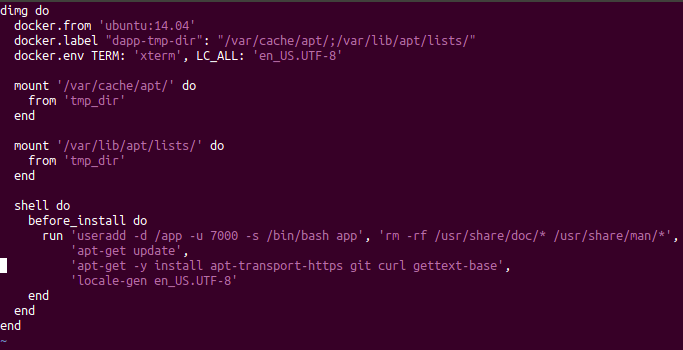


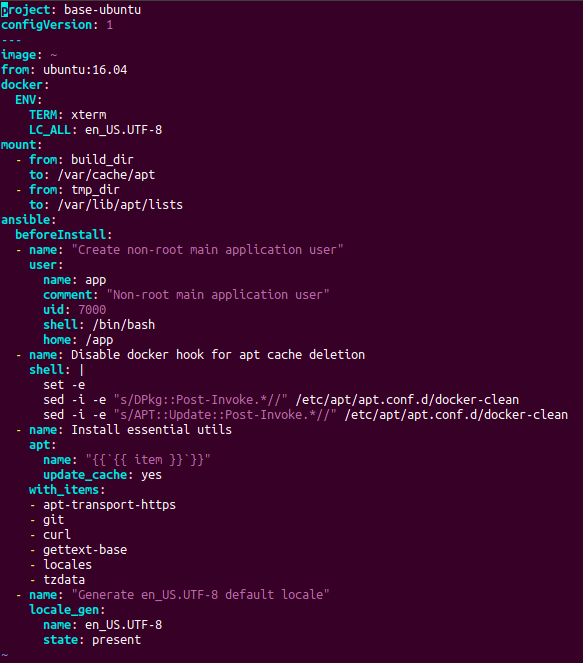


We will talk about werf, a GitOps utility that integrates with any CI/CD system and provides control over the entire application life cycle, allowing you to:

- build and publish images;

- deploy applications to Kubernetes;

- delete unused images according to special policies.

The project's philosophy is to combine low-level tools into a single unified system that gives DevOps engineers control over their applications. Where possible, existing utilities (such as Helm and Docker) are reused. If no existing solution covers a problem, we create and maintain whatever is needed.

Background: Our Own Image Builder

This is exactly what happened with the image builder in werf: the usual Dockerfile was not enough for us. A quick look at the project's history shows that this problem surfaced in the very first versions of werf (then still known as dapp).

While creating a tool for building applications into Docker images, we quickly realized that Dockerfile did not suit some very specific tasks:

- The need to build typical small web applications according to the following standard scheme:

  - install system-wide application dependencies,

  - install the bundle of application dependency libraries,

  - build assets,

  - and, most importantly, update the code in the image quickly and efficiently.

- When project files change, the builder must quickly create a new layer by applying a patch to the modified files.

- If certain files have changed, the corresponding dependent stage must be rebuilt.

Today our builder offers many other capabilities, but these were the original motivations.

So, without thinking twice, we armed ourselves with the programming language we were using (see below) and set out to implement our own DSL! In line with these tasks, it was meant to describe the build process in stages and to define which files each stage depends on. It was complemented by our own builder, which turned the DSL into the end result: a built image. At first the DSL was in Ruby; when we switched to Golang, the builder's configuration moved to a YAML file.
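The idea of stages that depend on files can be sketched in Python. This is not werf's actual signature algorithm; the `Stage` structure and the stage names are hypothetical. Each stage's signature hashes the previous stage's signature, the stage's own instructions, and the contents of the files it depends on, so changing one dependency file invalidates that stage and every stage after it, while earlier stages stay cached.

```python
import hashlib
from dataclasses import dataclass, field


@dataclass
class Stage:
    # Hypothetical stage: a name, its build instructions and the
    # project files whose contents it depends on.
    name: str
    instructions: list[str]
    depends_on: list[str] = field(default_factory=list)


def stage_signatures(stages: list[Stage], files: dict[str, bytes]) -> dict[str, str]:
    """Return {stage name: signature}; `files` maps project paths to contents."""
    signatures = {}
    previous = ""
    for stage in stages:
        h = hashlib.sha256()
        # Chain to the previous stage: rebuilding it invalidates this one too.
        h.update(previous.encode())
        for instruction in stage.instructions:
            h.update(instruction.encode() + b"\n")
        for path in sorted(stage.depends_on):
            # Hash each dependency's path and a digest of its contents.
            h.update(path.encode() + b"\0")
            h.update(hashlib.sha256(files[path]).digest())
        previous = h.hexdigest()
        signatures[stage.name] = previous
    return signatures
```

For example, with a `dependencies` stage that depends on `package.json` and an `assets` stage that depends on `src/app.js`, editing only `src/app.js` changes the `assets` signature and leaves `dependencies` untouched.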

Old dapp config in Ruby

Current werf config in YAML

The builder's mechanism also changed over time. At first, we simply generated a temporary Dockerfile from our configuration on the fly; later we began running build instructions in temporary containers and committing the results.
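The first, Dockerfile-generating approach can be illustrated with a short Python sketch. The `(stage name, commands)` input format is a hypothetical stand-in for the real dapp/werf configuration. Emitting one `RUN` per stage is also an assumption, chosen so that each stage maps to one image layer.

```python
def render_dockerfile(base_image: str, stages: list[tuple[str, list[str]]]) -> str:
    """Render a temporary Dockerfile from a hypothetical stage config.

    `stages` is a list of (stage name, shell commands) pairs.
    """
    lines = [f"FROM {base_image}"]
    for name, commands in stages:
        if not commands:
            continue  # an empty stage contributes no layer
        lines.append(f"# stage: {name}")
        # One RUN per stage, so each stage becomes exactly one layer.
        lines.append("RUN " + " && ".join(commands))
    return "\n".join(lines) + "\n"
```

The later approach drops the generated file altogether: conceptually, each stage's commands run in a temporary container (`docker run`) whose result is then saved as a new image (`docker commit`).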

NB: Our builder, which works with its own YAML config and is called the Stapel builder, has by now grown into a fairly powerful tool. A detailed description deserves separate articles; the main details can be found in the documentation.

Realizing the Problem

But we realized, and not right away, that we had made one mistake: we did not add the ability to build images from a standard Dockerfile and integrate them into the same infrastructure for end-to-end application management (i.e., building, deploying, and cleaning up images). How could we make a tool for deploying to Kubernetes without supporting Dockerfile, the standard way most projects describe their images?

Instead of answering such a question, we offer a solution. What if you already have a Dockerfile (or a set of Dockerfiles) and want to use werf?

NB: By the way, why would you want to use werf at all? Its main features are:

- a full application management cycle, including image cleanup;

- the ability to manage building several images from a single config;

- an improved deployment process for Helm-compatible charts.

A more complete list can be found on the project page.

So, while previously we would have suggested rewriting your Dockerfile in our config format, now we're happy to say: “Let werf build your Dockerfiles!”

How to Use It?

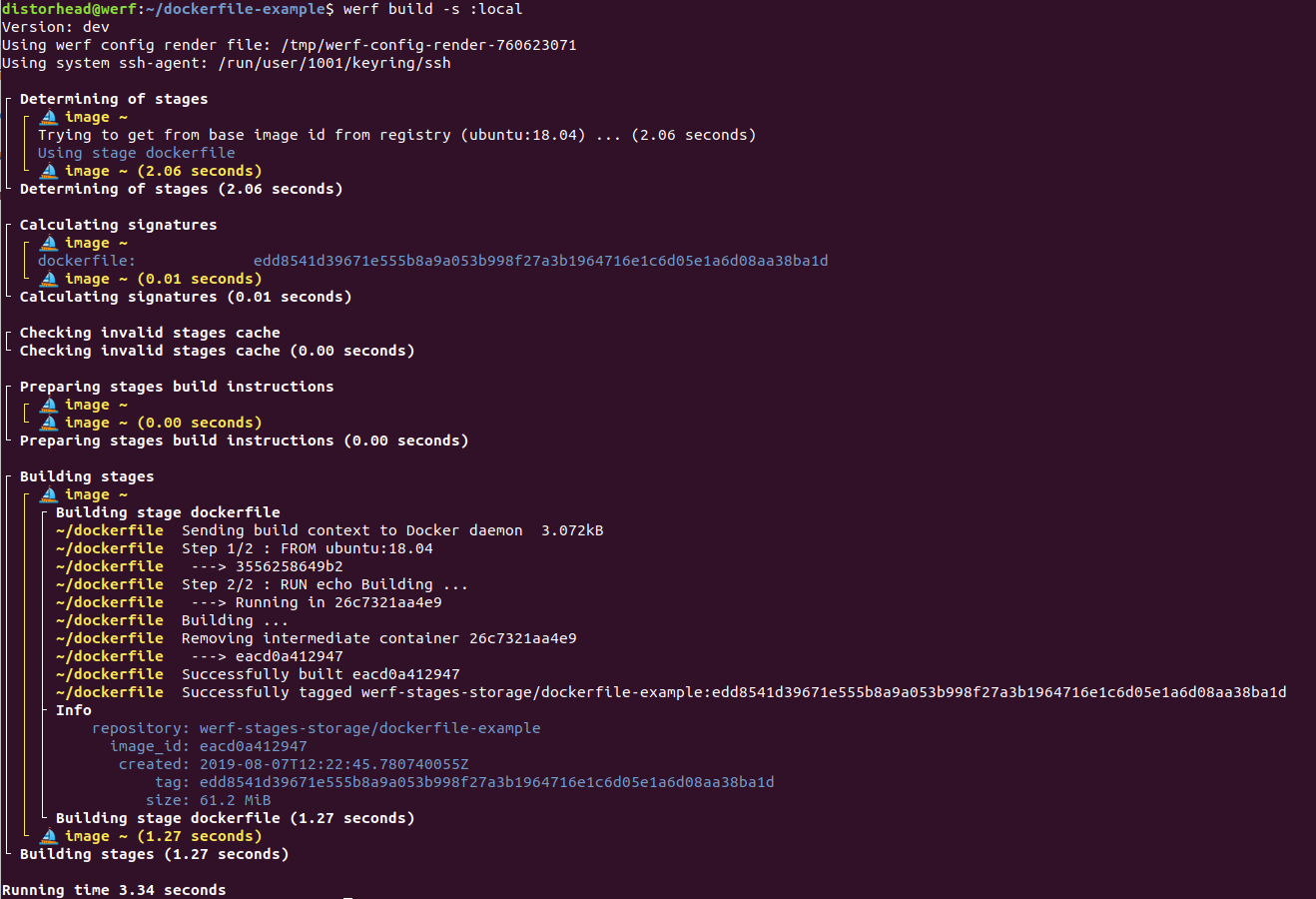

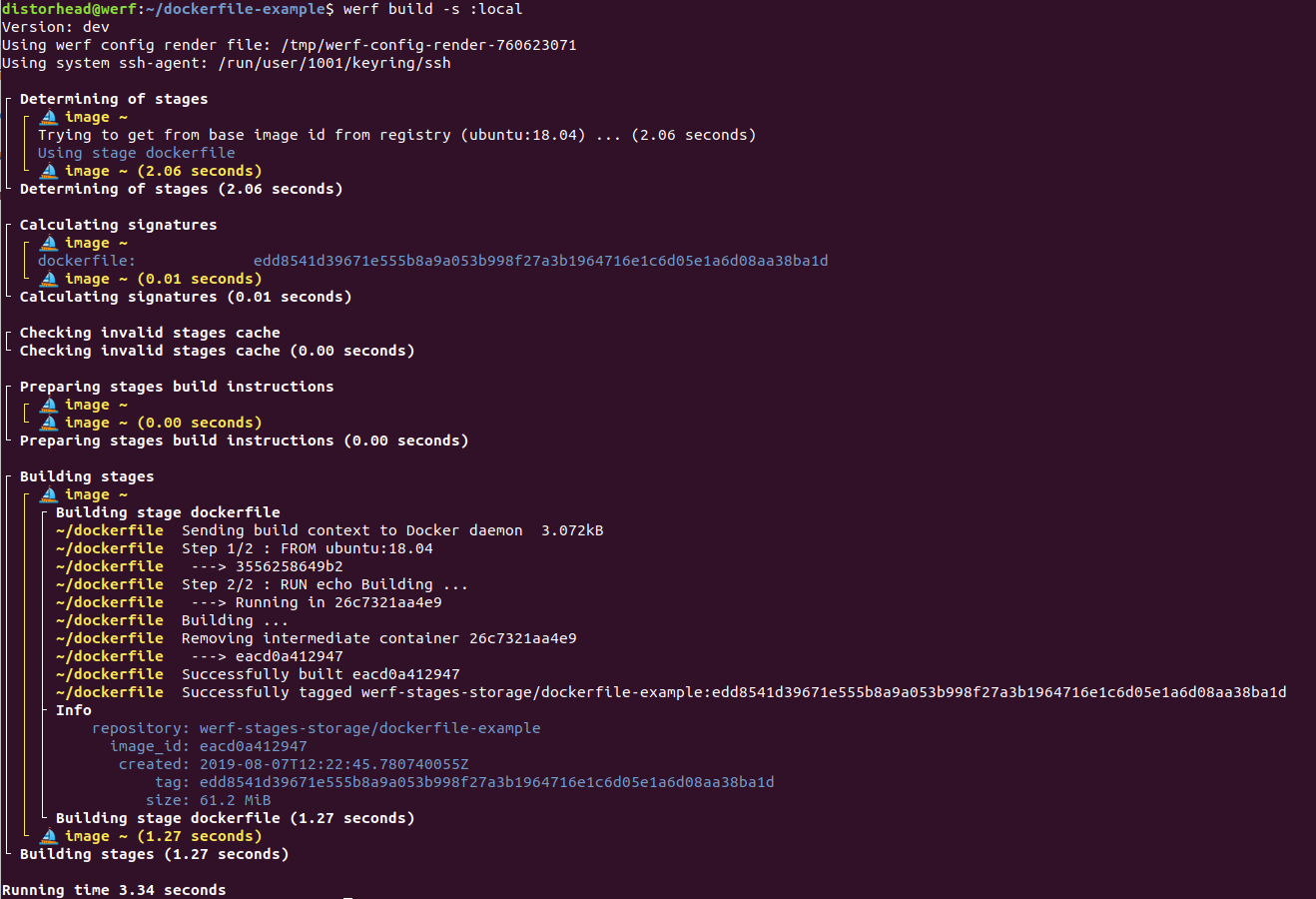

The full implementation of this feature appeared in the werf v1.0.3-beta.1 release. The general principle is simple: the user specifies the path to an existing Dockerfile in the werf config and then runs the `werf build` command, and that's it: werf builds the image. Let's look at an abstract example.

Declare the following `Dockerfile` in the root of the project:

```dockerfile
FROM ubuntu:18.04
RUN echo Building ...
```

And declare a `werf.yaml` that uses this `Dockerfile`:

```yaml
configVersion: 1
project: dockerfile-example
---
image: ~
dockerfile: ./Dockerfile
```

That's it! All that remains is to run `werf build`.

In addition, you can declare the following `werf.yaml` to build several images from different Dockerfiles at once:

```yaml
configVersion: 1
project: dockerfile-example
---
image: backend
dockerfile: ./dockerfiles/Dockerfile-backend
---
image: frontend
dockerfile: ./dockerfiles/Dockerfile-frontend
```

Finally, additional build parameters, such as `--build-arg` and `--add-host`, can also be passed through the werf config. A complete description of the Dockerfile image configuration is available on the documentation page.
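To show how such parameters typically end up on the `docker build` command line, here is a minimal Python sketch. The function and its `args`/`add_hosts` parameters are hypothetical and do not reflect werf's config keys; only the `--build-arg KEY=VALUE` and `--add-host host:ip` flag formats come from `docker build`.

```python
from typing import Optional


def docker_build_flags(
    args: Optional[dict[str, str]] = None,
    add_hosts: Optional[list[str]] = None,
) -> list[str]:
    """Translate hypothetical config values into `docker build` flags."""
    flags: list[str] = []
    # Sorted keys give a stable flag order for identical configs.
    for key in sorted(args or {}):
        flags += ["--build-arg", f"{key}={args[key]}"]
    for host in add_hosts or []:
        # Each entry is expected in Docker's "hostname:ip" format.
        flags += ["--add-host", host]
    return flags
```

The resulting list could be spliced into a command such as `["docker", "build", *flags, "."]`. Sorting the build args keeps the output deterministic, which matters if the same values also feed a cache signature.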

How Does It Work?

During the build, Docker's standard local layer cache works as usual. More importantly, werf also integrates the Dockerfile configuration into its infrastructure. What does this mean?

- Each image built from a Dockerfile consists of a single stage called `dockerfile` (you can read more about what stages in werf are here).

- For the `dockerfile` stage, werf calculates a signature that depends on the contents of the Dockerfile configuration. When the Dockerfile configuration changes, the `dockerfile` stage signature changes too, and werf initiates a rebuild of this stage with the new Dockerfile config. If the signature does not change, werf takes the image from the cache (the use of signatures in werf was described in more detail in this report).

- The built images can then be published with the `werf publish` command (or `werf build-and-publish`) and used for deployment to Kubernetes. Images published to the Docker Registry are cleaned up by the standard werf cleaners, i.e. old images (older than N days), images associated with Git branches that no longer exist, and images matching other policies are removed automatically.
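The signature-and-cache logic described above can be sketched in a few lines of Python. This is an illustration under stated assumptions, not werf's actual implementation: `dockerfile_stage_signature` hashes the Dockerfile text together with hypothetical `args` and `target` parameters, and `build_or_reuse` builds only when the signature is missing from a dict-based cache. The sketch ignores the files in the build context; a complete signature would likely need to cover them as well.

```python
import hashlib
import json
from typing import Callable, Optional


def dockerfile_stage_signature(
    dockerfile_text: str,
    args: Optional[dict[str, str]] = None,
    target: Optional[str] = None,
) -> str:
    """Hash the Dockerfile text together with its (hypothetical) build parameters."""
    payload = json.dumps(
        {"dockerfile": dockerfile_text, "args": args or {}, "target": target},
        sort_keys=True,  # identical configs always serialize identically
    )
    return hashlib.sha256(payload.encode()).hexdigest()


def build_or_reuse(signature: str, cache: dict[str, str], build: Callable[[], str]) -> str:
    """Return the cached image for `signature`; call `build` only on a cache miss."""
    if signature not in cache:
        cache[signature] = build()
    return cache[signature]
```

Calling `build_or_reuse` twice with an unchanged Dockerfile runs `build` once; editing the Dockerfile or its args produces a new signature and therefore a new build.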

You can learn more about the points described here in the documentation.

Notes and Precautions

1. External URLs in ADD are not supported

Using an external URL in the `ADD` directive is not supported yet: werf will not initiate a rebuild when the resource at the specified URL changes. We plan to add this feature soon.
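Because werf does not track changes to such remote resources, a simple pre-build lint can at least flag these instructions. The Python sketch below is a hypothetical helper, not part of werf: it returns the `http://` or `https://` sources of `ADD` instructions, handles only the shell form with one instruction per line, and skips flags such as `--chown`.

```python
import re

# Matches http(s) sources, which ADD downloads instead of reading from the build context.
URL_RE = re.compile(r"^https?://", re.IGNORECASE)


def external_add_sources(dockerfile_text: str) -> list[str]:
    """Return external URLs used as sources of ADD instructions.

    Simplified: shell form only, one instruction per line (no line
    continuations, no JSON exec form).
    """
    urls = []
    for raw in dockerfile_text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue
        parts = line.split()
        if parts[0].upper() != "ADD":
            continue
        # Drop flags such as --chown=...; the last argument is the destination.
        args = [p for p in parts[1:] if not p.startswith("--")]
        urls.extend(src for src in args[:-1] if URL_RE.match(src))
    return urls
```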

2. You cannot add .git to an image

Generally speaking, adding a `.git` directory to an image is a bad practice, and here's why:

- If `.git` remains in the final image, this violates the principles of the 12-factor app: since the final image must be associated with a single commit, it should not be possible to `git checkout` an arbitrary commit inside it.

- `.git` increases the size of the image (the repository may be large because large files were once added to it and then deleted). The size of the work tree, which is tied to a specific commit, does not depend on the history of operations in Git. At the same time, adding `.git` and then removing it from the final image will not help: the image will still get an extra layer, because that is how Docker works.

- Docker can initiate unnecessary rebuilds even when the same commit is being built, if it is built from different work trees. For example, GitLab creates separate cloned directories in `/home/gitlab-runner/builds/HASH/[0-N]/yourproject` when parallel builds are enabled. The extra rebuild happens because the `.git` directory differs between clones of the same repository, even when the same commit is built.

The last point has a consequence for werf users. Werf requires the build cache to be present when certain commands are run (for example, `werf deploy`). While such commands run, werf calculates the stage signatures for the images specified in `werf.yaml`, and these stages must be in the build cache; otherwise the command cannot proceed. If the stage signatures depend on the contents of `.git`, the cache becomes unstable to changes in irrelevant files, and werf cannot forgive such an oversight (see the documentation for more details).
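A hypothetical pre-deploy check makes this requirement concrete. In the Python sketch below, the names `ensure_stages_built` and `MissingStagesError` and the set-based stages storage are illustrative assumptions, not werf's API. The signatures computed for each image must already be present in storage. If a signature depends on something unstable such as `.git`, the deploy computes a value that was never stored and fails.

```python
class MissingStagesError(RuntimeError):
    """Raised when a computed stage signature is not in the stages storage."""


def ensure_stages_built(signatures: dict[str, str], stages_storage: set[str]) -> None:
    """Check that every image's stage signature is already in storage.

    `signatures` maps image name -> signature computed from the config;
    `stages_storage` is a hypothetical set of stored signatures.
    """
    missing = sorted(name for name, sig in signatures.items() if sig not in stages_storage)
    if missing:
        raise MissingStagesError(
            "stages not found in cache for images: " + ", ".join(missing)
        )
```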

In general, adding only the specific files you need with the `ADD` instruction makes the `Dockerfile` more efficient and reliable in any case, and also makes the cache built from it more stable against irrelevant changes in Git.
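The effect of keeping `.git` out of what the build depends on can be shown with a small Python sketch. It is an illustration only; Docker and werf compute their caches differently. The hypothetical `context_digest` function hashes every file under a directory by relative path and contents, skipping the directories listed in its (also hypothetical) `exclude_dirs` parameter. Two clones of the same commit that differ only in `.git`, as in the GitLab parallel-build case, get the same digest once `.git` is excluded. With plain Docker, listing `.git` in `.dockerignore` keeps it out of the build context.

```python
import hashlib
import os


def context_digest(root: str, exclude_dirs: tuple[str, ...] = (".git",)) -> str:
    """Hash all files under `root` by relative path and contents.

    Directories named in `exclude_dirs` are skipped entirely.
    """
    h = hashlib.sha256()
    for dirpath, dirnames, filenames in os.walk(root):
        # Prune excluded directories in place; sorting makes the walk order stable.
        dirnames[:] = sorted(d for d in dirnames if d not in exclude_dirs)
        for name in sorted(filenames):
            path = os.path.join(dirpath, name)
            rel = os.path.relpath(path, root).replace(os.sep, "/")
            h.update(rel.encode() + b"\0")
            with open(path, "rb") as f:
                h.update(hashlib.sha256(f.read()).digest())
    return h.hexdigest()
```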

Summary

Our initial path of writing our own builder for specific needs was hard, honest, and straightforward: instead of building workarounds on top of the standard Dockerfile, we wrote our own solution with a custom syntax. This paid off: the Stapel builder handles its job perfectly.

However, while writing our own builder, we overlooked support for existing Dockerfiles. This flaw has now been fixed, and going forward we plan to develop Dockerfile support alongside our custom Stapel builder, for distributed builds and for builds using Kubernetes (i.e. building on runners inside Kubernetes, as kaniko does).

So if you happen to have a couple of Dockerfiles lying around... try werf!

P.S. List of related documentation

- Quick start guides;

- Dockerfile builder configuration;

- How stages work in werf;

- The image publishing process;

- Integration with the deployment process in Kubernetes;

- The cleanup process;

- The Stapel builder as an alternative to Dockerfile.

Also read on our blog: “werf is our CI/CD tool in Kubernetes (overview and video of the talk)”.
