More site statistics in your small repository

By analyzing the statistics of the site, we get an idea of what is happening with it. We compare the results with other knowledge about the product or service and thereby improve our experience.

When the analysis of the first results is completed, the information has been comprehended and conclusions are drawn, the next stage begins. Ideas arise: what will happen if you look at the data from another perspective?

At this point, there are limitations to the analysis tools. This is one of the reasons why the Google Analytics tool was not enough for me, namely, because of the limited ability to see and manipulate my data.

I always wanted to quickly load the basic data (master data), add another level of aggregation, or otherwise interpret the existing values.

This is easy to do in your small repository based on the access.log file, and the SQL language is enough.

So, what questions did I want to find the answer to?

The history of changes in the underlying data (master data) is always of interest.

For example, at some point, search engine optimization was carried out or new content was added to the site, in this regard, an increase in traffic is expected.

The simplest example of a group is a user agent or the name of an operating system.

The user agent dimension has accumulated about a thousand records, and I was interested to see the dynamics of agent distribution within the group.

Most of the various combinations of agents come to the site from the Windows world. Among the uncertain ones were such as WhatsApp, PocketImageCache, PlayStation, SmartTV, etc.

By combining some groups, we can observe the distribution of their activity.

For example, users of a Linux cluster consume more traffic on a site than everyone else.

The table shows the most active user groups and the day of their activity.

The most active ones belong to the Linux cluster.

Using the attributes day and agent ID, you can quickly find and track statistics for the days of individual user groups. If necessary, you can quickly find detailed information in the stage table.

The information from the access.log file can be made even more effective by integrating additional data sources and introducing new levels of aggregation and grouping.

The basic data include information about entities: web pages, pictures, video and audio contents, in the case of a store, products.

Entities themselves play the role of dimensions, and the process of saving attribute changes is called historicization. In a database, this process is often implemented in the form of slowly changing dimensions (SCD).

A variety of systems can be the source of basic data, so they almost always need to be integrated.

The DIM_REQUEST dimension will contain information about queries on the site in historical form.

In addition to it, create one view that always displays all the records in the last state. It is necessary to load the measurement itself.

And a view of where historical information is collected for each record. It is necessary to build a historically correct connection with facts.

Compression (aggregation) allows you to evaluate data at a higher level and detect anomalies and trends that are not visible in detailed reports.

For example, in the dimension with request status codes DIM_HTTP_STATUS, add the group:

It includes the organization of data transfer from the operating system to the reporting one. To do this, create a stage table with a structure similar to the source.

Information about web pages gets to the stage from the CMS backup in the form of insert requests.

Loading the historical DIM_REQUEST table with basic data takes three steps: loading new keys and attributes, updating existing ones and fixing deleted records.

Each data source must be accompanied by a formal description, for example, in the readme.txt file:

The data movement scheme will help in the process of maintenance and updating, for example, in text form:

Thus, the article describes such mechanisms as the integration of basic data and the introduction of new levels of aggregation. They are needed when building data warehouses in order to obtain additional knowledge and improve the quality of information.

When the analysis of the first results is completed, the information has been comprehended and conclusions are drawn, the next stage begins. Ideas arise: what will happen if you look at the data from another perspective?

At this point, there are limitations to the analysis tools. This is one of the reasons why the Google Analytics tool was not enough for me, namely, because of the limited ability to see and manipulate my data.

I always wanted to quickly load the basic data (master data), add another level of aggregation, or otherwise interpret the existing values.

This is easy to do in your small repository based on the access.log file, and the SQL language is enough.

So, what questions did I want to find the answer to?

What and when has changed on the site

The history of changes in the underlying data (master data) is always of interest.

SQL report request

SELECT 1 as 'SideStackedBar: Content Updates by Months', strftime('%m/%Y', datetime(UPDATE_DT, 'unixepoch')) AS 'Day', COUNT(CASE WHEN PAGE_TITLE != 'na' THEN DIM_REQUEST_ID END) AS 'Web page updates', COUNT(CASE WHEN PAGE_DESCR = 'IMAGES' THEN DIM_REQUEST_ID END) AS 'Image uploads', COUNT(CASE WHEN PAGE_DESCR = 'VIDEO' THEN DIM_REQUEST_ID END) AS 'Video uploads', COUNT(CASE WHEN PAGE_DESCR = 'AUDIO' THEN DIM_REQUEST_ID END) AS 'Audio uploads' FROM DIM_REQUEST WHERE PAGE_TITLE != 'na' OR PAGE_DESCR != 'na' GROUP BY strftime('%m/%Y', datetime(UPDATE_DT, 'unixepoch')) ORDER BY UPDATE_DT

For example, at some point, search engine optimization was carried out or new content was added to the site, in this regard, an increase in traffic is expected.

Group of users

The simplest example of a group is a user agent or the name of an operating system.

The user agent dimension has accumulated about a thousand records, and I was interested to see the dynamics of agent distribution within the group.

SQL report request

SELECT 1 AS 'SideStackedBar: User Agents', AGENT_OS AS 'OS', SUM(CASE WHEN AGENT_BOT = 'na' THEN 1 ELSE 0 END ) AS 'User Agent of Users', SUM(CASE WHEN AGENT_BOT != 'na' THEN 1 ELSE 0 END ) AS 'User Agent of Bots' FROM DIM_USER_AGENT WHERE DIM_USER_AGENT_ID != -1 GROUP BY AGENT_OS ORDER BY 3 DESC

Most of the various combinations of agents come to the site from the Windows world. Among the uncertain ones were such as WhatsApp, PocketImageCache, PlayStation, SmartTV, etc.

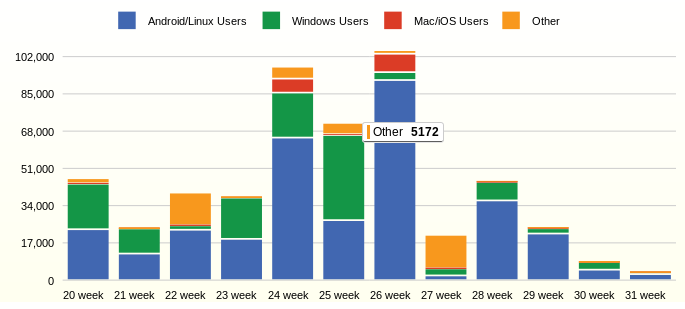

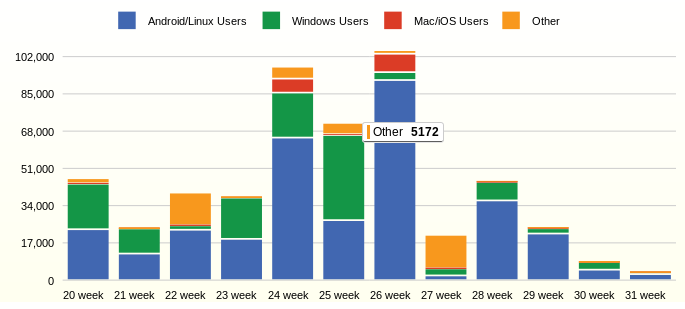

Weekly user group activity

By combining some groups, we can observe the distribution of their activity.

For example, users of a Linux cluster consume more traffic on a site than everyone else.

SQL report request

SELECT 1 as 'StackedBar: Traffic Volume by User OS and by Week', strftime('%W week', datetime(FCT.EVENT_DT, 'unixepoch')) AS 'Week', SUM(CASE WHEN USG.AGENT_OS IN ('Android', 'Linux') THEN FCT.BYTES ELSE 0 END)/1000 AS 'Android/Linux Users', SUM(CASE WHEN USG.AGENT_OS IN ('Windows') THEN FCT.BYTES ELSE 0 END)/1000 AS 'Windows Users', SUM(CASE WHEN USG.AGENT_OS IN ('Macintosh', 'iOS') THEN FCT.BYTES ELSE 0 END)/1000 AS 'Mac/iOS Users', SUM(CASE WHEN USG.AGENT_OS IN ('na', 'BlackBerry') THEN FCT.BYTES ELSE 0 END)/1000 AS 'Other' FROM FCT_ACCESS_USER_AGENT_DD FCT, DIM_USER_AGENT USG, DIM_HTTP_STATUS HST WHERE FCT.DIM_USER_AGENT_ID=USG.DIM_USER_AGENT_ID AND FCT.DIM_HTTP_STATUS_ID = HST.DIM_HTTP_STATUS_ID AND USG.AGENT_BOT = 'na' /* users only */ AND HST.STATUS_GROUP IN ('Successful') /* good pages */ AND datetime(FCT.EVENT_DT, 'unixepoch') > date('now', '-3 month') GROUP BY strftime('%W week', datetime(FCT.EVENT_DT, 'unixepoch')) ORDER BY FCT.EVENT_DT

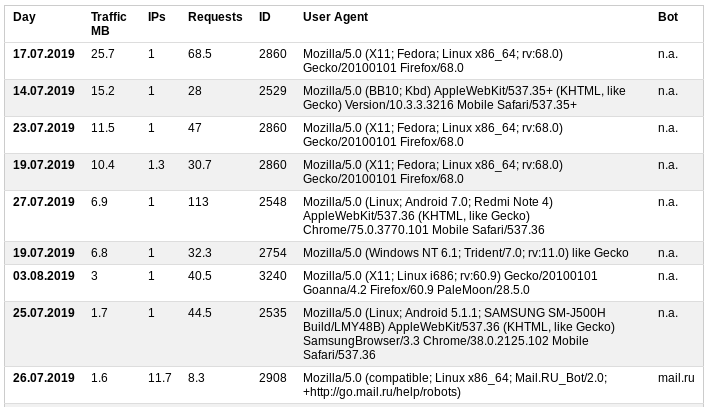

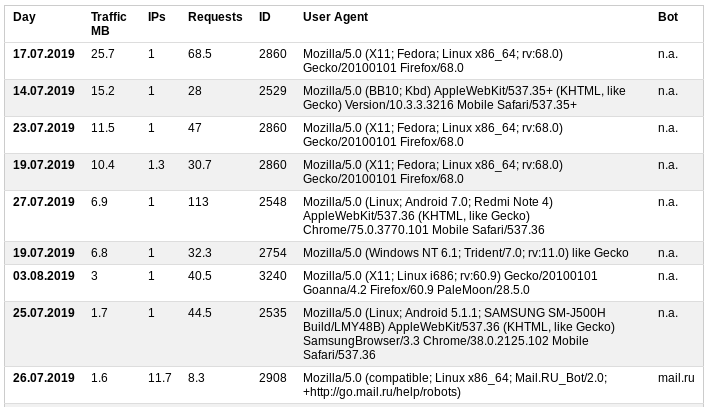

Heavy traffic consumption

The table shows the most active user groups and the day of their activity.

The most active ones belong to the Linux cluster.

SQL report request

SELECT 1 AS 'Table: User Agent with Havy Usage', strftime('%d.%m.%Y', datetime(FCT.EVENT_DT, 'unixepoch')) AS 'Day', ROUND(1.0*SUM(FCT.BYTES)/1000000, 1) AS 'Traffic MB', ROUND(1.0*SUM(FCT.IP_CNT)/SUM(1), 1) AS 'IPs', ROUND(1.0*SUM(FCT.REQUEST_CNT)/SUM(1), 1) AS 'Requests', USA.DIM_USER_AGENT_ID AS 'ID', MAX(USA.USER_AGENT_NK) AS 'User Agent', MAX(USA.AGENT_BOT) AS 'Bot' FROM FCT_ACCESS_USER_AGENT_DD FCT, DIM_USER_AGENT USA WHERE FCT.DIM_USER_AGENT_ID = USA.DIM_USER_AGENT_ID AND datetime(FCT.EVENT_DT, 'unixepoch') >= date('now', '-30 day') GROUP BY USA.DIM_USER_AGENT_ID, strftime('%d.%m.%Y', datetime(FCT.EVENT_DT, 'unixepoch')) ORDER BY SUM(FCT.BYTES) DESC, FCT.EVENT_DT LIMIT 10

Using the attributes day and agent ID, you can quickly find and track statistics for the days of individual user groups. If necessary, you can quickly find detailed information in the stage table.

How to get information?

The information from the access.log file can be made even more effective by integrating additional data sources and introducing new levels of aggregation and grouping.

Basic data and entities

The basic data include information about entities: web pages, pictures, video and audio contents, in the case of a store, products.

Entities themselves play the role of dimensions, and the process of saving attribute changes is called historicization. In a database, this process is often implemented in the form of slowly changing dimensions (SCD).

A variety of systems can be the source of basic data, so they almost always need to be integrated.

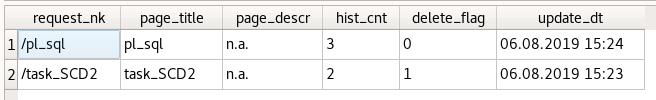

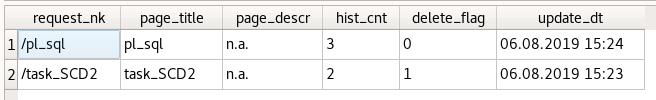

Slowly changing dimension

The DIM_REQUEST dimension will contain information about queries on the site in historical form.

SCD2 table

CREATE TABLE DIM_REQUEST ( /* scd table for user requests */ DIM_REQUEST_ID INTEGER NOT NULL PRIMARY KEY AUTOINCREMENT, DIM_REQUEST_ID_HIST INTEGER NOT NULL DEFAULT -1, REQUEST_NK TEXT NOT NULL DEFAULT 'na', /* request without ?parameters */ PAGE_TITLE TEXT NOT NULL DEFAULT 'na', PAGE_DESCR TEXT NOT NULL DEFAULT 'na', PAGE_KEYWORDS TEXT NOT NULL DEFAULT 'na', DELETE_FLAG INTEGER NOT NULL DEFAULT 0, UPDATE_DT INTEGER NOT NULL DEFAULT 0, UNIQUE (REQUEST_NK, DIM_REQUEST_ID_HIST) ); INSERT INTO DIM_REQUEST (DIM_REQUEST_ID) VALUES (-1);

In addition to it, create one view that always displays all the records in the last state. It is necessary to load the measurement itself.

Current view of SCD2

/* Content: actual view on scd table */ SELECT HI.DIM_REQUEST_ID, HI.DIM_REQUEST_ID_HIST, HI.REQUEST_NK, HI.PAGE_TITLE, HI.PAGE_DESCR, HI.PAGE_KEYWORDS, NK.CNT AS HIST_CNT, HI.DELETE_FLAG, strftime('%d.%m.%Y %H:%M', datetime(HI.UPDATE_DT, 'unixepoch')) AS UPDATE_DT FROM ( SELECT REQUEST_NK, MAX(DIM_REQUEST_ID) AS DIM_REQUEST_ID, SUM(1) AS CNT FROM DIM_REQUEST GROUP BY REQUEST_NK ) NK, DIM_REQUEST HI WHERE 1 = 1 AND NK.REQUEST_NK = HI.REQUEST_NK AND NK.DIM_REQUEST_ID = HI.DIM_REQUEST_ID;

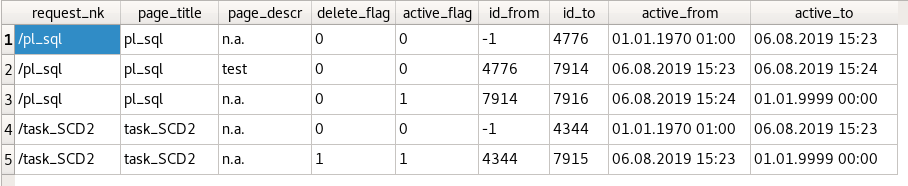

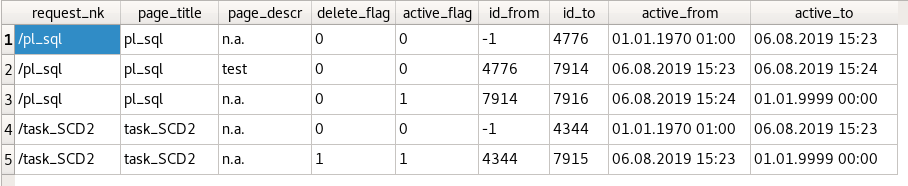

And a view of where historical information is collected for each record. It is necessary to build a historically correct connection with facts.

Historical view of SCD2

/* Content: actual view on scd table */ SELECT SCD.DIM_REQUEST_ID, SCD.DIM_REQUEST_ID_HIST, SCD.REQUEST_NK, SCD.PAGE_TITLE, SCD.PAGE_DESCR, SCD.PAGE_KEYWORDS, SCD.DELETE_FLAG, CASE WHEN HIS.UPDATE_DT IS NULL THEN 1 ELSE 0 END ACTIVE_FLAG, SCD.DIM_REQUEST_ID_HIST AS ID_FROM, SCD.DIM_REQUEST_ID AS ID_TO, CASE WHEN SCD.DIM_REQUEST_ID_HIST=-1 THEN 3600 ELSE IFNULL(SCD.UPDATE_DT,3600) END AS TIME_FROM, CASE WHEN HIS.UPDATE_DT IS NULL THEN 253370764800 ELSE HIS.UPDATE_DT END AS TIME_TO, CASE WHEN SCD.DIM_REQUEST_ID_HIST=-1 THEN STRFTIME('%d.%m.%Y %H:%M', DATETIME(3600, 'unixepoch')) ELSE STRFTIME('%d.%m.%Y %H:%M', DATETIME(IFNULL(SCD.UPDATE_DT,3600), 'unixepoch')) END AS ACTIVE_FROM, CASE WHEN HIS.UPDATE_DT IS NULL THEN STRFTIME('%d.%m.%Y %H:%M', DATETIME(253370764800, 'unixepoch')) ELSE STRFTIME('%d.%m.%Y %H:%M', DATETIME(HIS.UPDATE_DT, 'unixepoch')) END AS ACTIVE_TO FROM DIM_REQUEST SCD LEFT OUTER JOIN DIM_REQUEST HIS ON SCD.REQUEST_NK = HIS.REQUEST_NK AND SCD.DIM_REQUEST_ID = HIS.DIM_REQUEST_ID_HIST;

Data aggregation

Compression (aggregation) allows you to evaluate data at a higher level and detect anomalies and trends that are not visible in detailed reports.

For example, in the dimension with request status codes DIM_HTTP_STATUS, add the group:

STATUS / GROUPThe user agent dimension DIM_USER_AGENT will contain the AGENT_OS and AGENT_BOT attributes for the groups. They can be filled in during the ETL process:

0xx / na

1xx / Informational

2xx / Successful

3xx / redirection

4xx / Client Error

5xx / Server Error

Download DIM_USER_AGENT

/* Propagate the user agent from access log */ INSERT INTO DIM_USER_AGENT (USER_AGENT_NK, AGENT_OS, AGENT_ENGINE, AGENT_DEVICE, AGENT_BOT, UPDATE_DT) WITH CLS AS ( SELECT BROWSER FROM STG_ACCESS_LOG WHERE LENGTH(BROWSER)>1 GROUP BY BROWSER ) SELECT CLS.BROWSER AS USER_AGENT_NK, CASE WHEN INSTR(CLS.BROWSER,'Macintosh')>0 THEN 'Macintosh' WHEN INSTR(CLS.BROWSER,'iPhone')>0 OR INSTR(CLS.BROWSER,'iPad')>0 OR INSTR(CLS.BROWSER,'iPod')>0 OR INSTR(CLS.BROWSER,'Apple TV')>0 OR INSTR(CLS.BROWSER,'Darwin')>0 THEN 'iOS' WHEN INSTR(CLS.BROWSER,'Android')>0 THEN 'Android' WHEN INSTR(CLS.BROWSER,'X11;')>0 OR INSTR(CLS.BROWSER,'Wayland;')>0 OR INSTR(CLS.BROWSER,'linux-gnu')>0 THEN 'Linux' WHEN INSTR(CLS.BROWSER,'BB10;')>0 OR INSTR(CLS.BROWSER,'BlackBerry')>0 THEN 'BlackBerry' WHEN INSTR(CLS.BROWSER,'Windows')>0 THEN 'Windows' ELSE 'na' END AS AGENT_OS, -- OS CASE WHEN INSTR(CLS.BROWSER,'AppleCoreMedia')>0 THEN 'AppleWebKit' WHEN INSTR(CLS.BROWSER,') ')>1 AND LENGTH(CLS.BROWSER)>INSTR(CLS.BROWSER,') ') THEN COALESCE(SUBSTR(CLS.BROWSER, INSTR(CLS.BROWSER,') ')+2, LENGTH(CLS.BROWSER) - INSTR(CLS.BROWSER,') ')-1), 'N/A') ELSE 'na' END AS AGENT_ENGINE, -- Engine CASE WHEN INSTR(CLS.BROWSER,'iPhone')>0 THEN 'iPhone' WHEN INSTR(CLS.BROWSER,'iPad')>0 THEN 'iPad' WHEN INSTR(CLS.BROWSER,'iPod')>0 THEN 'iPod' WHEN INSTR(CLS.BROWSER,'Apple TV')>0 THEN 'Apple TV' WHEN INSTR(CLS.BROWSER,'Android ')>0 AND INSTR(CLS.BROWSER,'Build')>0 THEN COALESCE(SUBSTR(CLS.BROWSER, INSTR(CLS.BROWSER,'Android '), INSTR(CLS.BROWSER,'Build')-INSTR(CLS.BROWSER,'Android ')), 'na') WHEN INSTR(CLS.BROWSER,'Android ')>0 AND INSTR(CLS.BROWSER,'MIUI')>0 THEN COALESCE(SUBSTR(CLS.BROWSER, INSTR(CLS.BROWSER,'Android '), INSTR(CLS.BROWSER,'MIUI')-INSTR(CLS.BROWSER,'Android ')), 'na') ELSE 'na' END AS AGENT_DEVICE, -- Device CASE WHEN INSTR(LOWER(CLS.BROWSER),'yandex.com')>0 THEN 'yandex' WHEN INSTR(LOWER(CLS.BROWSER),'googlebot')>0 THEN 'google' WHEN INSTR(LOWER(CLS.BROWSER),'bingbot')>0 THEN 'microsoft' WHEN INSTR(LOWER(CLS.BROWSER),'ahrefsbot')>0 THEN 'ahrefs' WHEN INSTR(LOWER(CLS.BROWSER),'jobboersebot')>0 OR INSTR(LOWER(CLS.BROWSER),'jobkicks')>0 THEN 'job.de' WHEN INSTR(LOWER(CLS.BROWSER),'mail.ru')>0 THEN 'mail.ru' WHEN INSTR(LOWER(CLS.BROWSER),'baiduspider')>0 THEN 'baidu' WHEN INSTR(LOWER(CLS.BROWSER),'mj12bot')>0 THEN 'majestic-12' WHEN INSTR(LOWER(CLS.BROWSER),'duckduckgo')>0 THEN 'duckduckgo' WHEN INSTR(LOWER(CLS.BROWSER),'bytespider')>0 THEN 'bytespider' WHEN INSTR(LOWER(CLS.BROWSER),'360spider')>0 THEN 'so.360.cn' WHEN INSTR(LOWER(CLS.BROWSER),'compatible')>0 OR INSTR(LOWER(CLS.BROWSER),'http')>0 OR INSTR(LOWER(CLS.BROWSER),'libwww')>0 OR INSTR(LOWER(CLS.BROWSER),'spider')>0 OR INSTR(LOWER(CLS.BROWSER),'java')>0 OR INSTR(LOWER(CLS.BROWSER),'python')>0 OR INSTR(LOWER(CLS.BROWSER),'robot')>0 OR INSTR(LOWER(CLS.BROWSER),'curl')>0 OR INSTR(LOWER(CLS.BROWSER),'wget')>0 THEN 'other' ELSE 'na' END AS AGENT_BOT, -- Bot STRFTIME('%s','now') AS UPDATE_DT FROM CLS LEFT OUTER JOIN DIM_USER_AGENT TRG ON CLS.BROWSER = TRG.USER_AGENT_NK WHERE TRG.DIM_USER_AGENT_ID IS NULL

Data integration

It includes the organization of data transfer from the operating system to the reporting one. To do this, create a stage table with a structure similar to the source.

Information about web pages gets to the stage from the CMS backup in the form of insert requests.

Loading the historical DIM_REQUEST table with basic data takes three steps: loading new keys and attributes, updating existing ones and fixing deleted records.

Download new SCD2 entries

/* Load request table SCD from master data */ INSERT INTO DIM_REQUEST (DIM_REQUEST_ID_HIST, REQUEST_NK, PAGE_TITLE, PAGE_DESCR, PAGE_KEYWORDS, DELETE_FLAG, UPDATE_DT) WITH CLS AS ( -- prepare keys SELECT '/' || NAME AS REQUEST_NK, TITLE AS PAGE_TITLE, CASE WHEN DESCRIPTION = '' OR DESCRIPTION IS NULL THEN 'na' ELSE DESCRIPTION END AS PAGE_DESCR, CASE WHEN KEYWORDS = '' OR KEYWORDS IS NULL THEN 'na' ELSE KEYWORDS END AS PAGE_KEYWORDS FROM STG_CMS_MENU WHERE CONTENT_TYPE != 'folder' -- only web pages AND PAGE_TITLE != 'na' -- master data which make sense ) /* new records from stage: CLS */ SELECT -1 AS DIM_REQUEST_ID_HIST, CLS.REQUEST_NK, CLS.PAGE_TITLE, CLS.PAGE_DESCR, CLS.PAGE_KEYWORDS, 0 AS DELETE_FLAG, STRFTIME('%s','now') AS UPDATE_DT FROM CLS LEFT OUTER JOIN ( SELECT DIM_REQUEST_ID, REQUEST_NK, PAGE_TITLE, PAGE_DESCR, PAGE_KEYWORDS FROM DIM_REQUEST_V_ACT ) TRG ON CLS.REQUEST_NK = TRG.REQUEST_NK WHERE TRG.REQUEST_NK IS NULL -- no such record in data mart

Update SCD2 Attributes

/* Load request table SCD from master data */ INSERT INTO DIM_REQUEST (DIM_REQUEST_ID_HIST, REQUEST_NK, PAGE_TITLE, PAGE_DESCR, PAGE_KEYWORDS, DELETE_FLAG, UPDATE_DT) WITH CLS AS ( -- prepare keys SELECT '/' || NAME AS REQUEST_NK, TITLE AS PAGE_TITLE, CASE WHEN DESCRIPTION = '' OR DESCRIPTION IS NULL THEN 'na' ELSE DESCRIPTION END AS PAGE_DESCR, CASE WHEN KEYWORDS = '' OR KEYWORDS IS NULL THEN 'na' ELSE KEYWORDS END AS PAGE_KEYWORDS FROM STG_CMS_MENU WHERE CONTENT_TYPE != 'folder' -- only web pages AND PAGE_TITLE != 'na' -- master data which make sense ) /* updated records from stage: CLS and build reference to history: HIST */ SELECT HIST.DIM_REQUEST_ID AS DIM_REQUEST_ID_HIST, HIST.REQUEST_NK, CLS.PAGE_TITLE, CLS.PAGE_DESCR, CLS.PAGE_KEYWORDS, 0 AS DELETE_FLAG, STRFTIME('%s','now') AS UPDATE_DT FROM CLS, DIM_REQUEST_V_ACT TRG, DIM_REQUEST HIST WHERE CLS.REQUEST_NK = TRG.REQUEST_NK AND TRG.DIM_REQUEST_ID = HIST.DIM_REQUEST_ID AND ( CLS.PAGE_TITLE != HIST.PAGE_TITLE /* changes only */ OR CLS.PAGE_DESCR != HIST.PAGE_DESCR OR CLS.PAGE_KEYWORDS != HIST.PAGE_KEYWORDS )

Deleted SCD2 Entries

/* Load request table SCD from master data */ INSERT INTO DIM_REQUEST (DIM_REQUEST_ID_HIST, REQUEST_NK, PAGE_TITLE, PAGE_DESCR, PAGE_KEYWORDS, DELETE_FLAG, UPDATE_DT) WITH CLS AS ( -- prepare keys SELECT '/' || NAME AS REQUEST_NK, TITLE AS PAGE_TITLE FROM STG_CMS_MENU WHERE CONTENT_TYPE != 'folder' -- only web pages AND PAGE_TITLE != 'na' -- master data which make sense ) /* deleted records in data mart: TRG */ SELECT TRG.DIM_REQUEST_ID AS DIM_REQUEST_ID_HIST, TRG.REQUEST_NK, TRG.PAGE_TITLE, TRG.PAGE_DESCR, TRG.PAGE_KEYWORDS, 1 AS DELETE_FLAG, STRFTIME('%s','now') AS UPDATE_DT FROM ( SELECT DIM_REQUEST_ID, REQUEST_NK, PAGE_TITLE, PAGE_DESCR, PAGE_KEYWORDS FROM DIM_REQUEST_V_ACT WHERE PAGE_TITLE != 'na' -- track master data only AND DELETE_FLAG = 0 -- not already deleted ) TRG LEFT OUTER JOIN CLS ON TRG.REQUEST_NK = CLS.REQUEST_NK WHERE CLS.REQUEST_NK IS NULL -- no such record in stage

Each data source must be accompanied by a formal description, for example, in the readme.txt file:

Recipient formally / technically: name, email address

Data Provider Formally / Technically: Name, Email

Data source: file path, service names

Data Access Information: Users and Passwords

The data movement scheme will help in the process of maintenance and updating, for example, in text form:

Moving a file. Source: ftp.domain.net: /logs/access.log Purpose: /var/www/access.log

Reading in stage. Target: STG_ACCESS_LOG

Download and transformation. Target: FCT_ACCESS_REQUEST_REF_HH

Download and transformation. Target: FCT_ACCESS_USER_AGENT_DD

Report. Target: /var/www/report.html

Conclusion

Thus, the article describes such mechanisms as the integration of basic data and the introduction of new levels of aggregation. They are needed when building data warehouses in order to obtain additional knowledge and improve the quality of information.

All Articles