How we designed and implemented the new network on Huawei in the Moscow office, part 3: server fabric

In the previous two parts (one, two), we examined the principles behind the new user fabric and described how all workstations were migrated. Now it's time to talk about the server fabric.

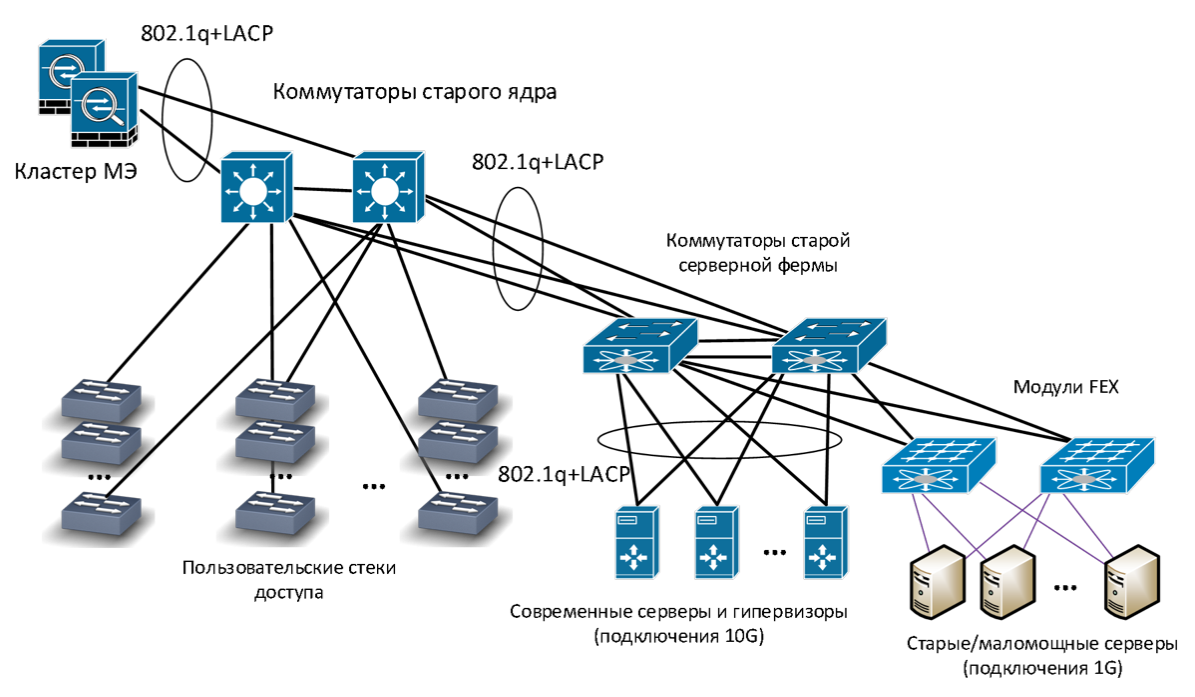

Previously, we did not have a separate server infrastructure: server switches were connected to the same core as the user distribution switches. Access was controlled using virtual networks (VLANs), and inter-VLAN routing was performed at a single point, the core (following the Collapsed Backbone design).

Old network infrastructure

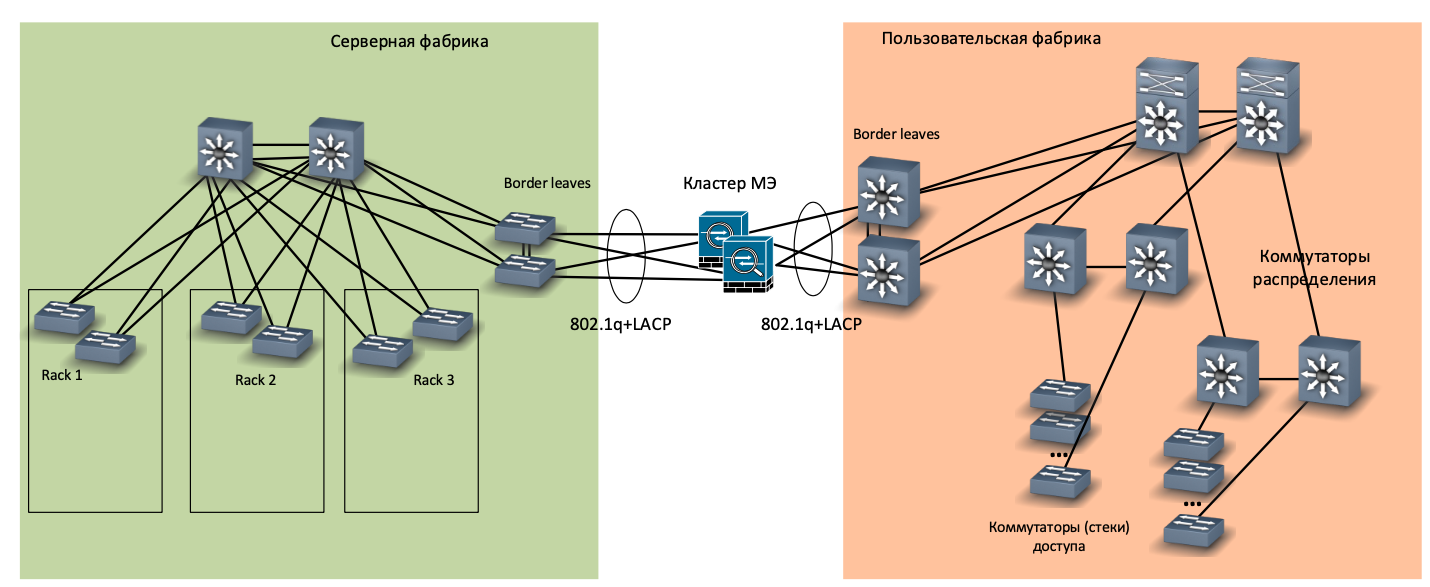

Along with the new office network, we decided to build a new server room and, for it, a separate new fabric. It turned out small (three server cabinets) but built by the book: a separate core on CE8850 switches, a spine-leaf topology in which every leaf connects to every spine, CE6870 top-of-rack (ToR) switches, and a separate pair of switches for connecting to the rest of the network (border leaves). In short, the full package.
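To illustrate the spine-leaf property, here is a minimal Python sketch that builds the link set of such a fabric and lists the paths between two leaves. The device names and counts (two spines, two ToR switches per rack, two border leaves) are hypothetical; the article does not state them. What it shows: because every leaf connects to every spine, any two leaves are exactly two hops apart, with one equal-cost path per spine.

```python
from itertools import product


def build_spine_leaf(spines, leaves):
    """Return the links of a spine-leaf fabric as (leaf, spine) pairs.

    Every leaf is connected to every spine; there are no leaf-to-leaf
    or spine-to-spine links.
    """
    return {(leaf, spine) for leaf, spine in product(leaves, spines)}


def leaf_to_leaf_paths(links, src, dst):
    """List the two-hop paths src -> spine -> dst between two leaves."""
    src_spines = {spine for leaf, spine in links if leaf == src}
    dst_spines = {spine for leaf, spine in links if leaf == dst}
    return [(src, spine, dst) for spine in sorted(src_spines & dst_spines)]


# Hypothetical inventory: the article does not give exact device counts.
spines = ["spine1", "spine2"]
leaves = ["tor1a", "tor1b", "tor2a", "tor2b", "tor3a", "tor3b", "border1", "border2"]

links = build_spine_leaf(spines, leaves)
print(len(links))  # 2 spines x 8 leaves = 16 links
for path in leaf_to_leaf_paths(links, "tor1a", "border2"):
    print(" -> ".join(path))
```

Adding a spine adds one more equal-cost path between every pair of leaves, at the cost of one new uplink per leaf.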

Network of the new server fabric

We decided to abandon structured cabling (SCS) in the server room and connect servers directly to the ToR switches instead. Why? We already have two server rooms built with structured cabling, and experience has shown that it is:

- inconvenient to operate (lots of patching, and the cable log has to be kept carefully up to date);

- costly in terms of the rack space taken up by patch panels;

- an obstacle when server connection speeds need to be increased (for example, moving from 1 Gbit/s over copper to 10 Gbit/s over optics).

When moving to the new server fabric, we tried to move away from 1 Gbit/s server connections and standardized on 10-gigabit interfaces. We virtualized almost all of the old servers that do not support 10 Gbit/s, and the remaining ones were connected to 10-gigabit ports through gigabit transceivers. By our calculations, this was cheaper than installing separate gigabit switches for them.

ToR Switches

In the new server room, we also installed separate 24-port out-of-band management (OOM) switches, one per rack. This worked out very well, except that we ran short of ports; next time we will install 48-port OOM switches.

The OOM network connects the servers' remote management interfaces, such as iLO (iBMC in Huawei terminology). If a server loses its main network connection, it can still be reached through this interface. The OOM switches also connect the management interfaces of the ToR switches, temperature sensors, UPS management interfaces, and other similar devices. The OOM network is reachable through a dedicated firewall interface.

OOM network connection

Interconnecting the server and user networks

In the user fabric, separate VRFs serve different purposes: connecting user workstations, video surveillance systems, and meeting-room multimedia systems, hosting test stands and demo zones, and so on.

A different set of VRFs was created in the server fabric:

- A VRF for ordinary servers running corporate services.

- A separate VRF for servers accessible from the Internet.

- A separate VRF for database servers, which are accessed only by other servers (for example, application servers).

- A separate VRF for our mail system (MS Exchange + Skype for Business).

Thus, we have one set of VRFs on the user fabric side and another on the server fabric side. Both sets are brought into a cluster of corporate firewalls, which is connected to the border switches of both the server fabric and the user fabric.
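A simplified Python model of how traffic between VRFs is handled in this design may help. It assumes that traffic inside one VRF is routed within its fabric, while every flow between different VRFs passes through the firewall cluster, which denies by default. The VRF names and the allow-list are hypothetical; only the rule that database servers are reachable solely from other servers comes from the text above.

```python
from dataclasses import dataclass

# Hypothetical VRF names modeled on the article's description.
USER_VRFS = {"users", "video", "multimedia", "demo"}
SERVER_VRFS = {"servers", "internet_facing", "db", "mail"}

# Hypothetical allow-list on the firewall cluster: (source VRF, destination VRF).
FIREWALL_ALLOW = {
    ("users", "servers"),
    ("users", "mail"),
    ("servers", "db"),  # database servers are reachable only from other servers
}


@dataclass(frozen=True)
class Decision:
    via_firewall: bool
    allowed: bool


def forward(src_vrf: str, dst_vrf: str) -> Decision:
    """Decide how a flow between two VRFs is handled in this simplified model."""
    known = USER_VRFS | SERVER_VRFS
    for vrf in (src_vrf, dst_vrf):
        if vrf not in known:
            raise ValueError(f"unknown VRF: {vrf!r}")
    if src_vrf == dst_vrf:
        # Same VRF (model assumption): routed inside the fabric, no firewall involved.
        return Decision(via_firewall=False, allowed=True)
    # Different VRFs: the flow goes through the firewall cluster,
    # which permits only pairs on the allow-list (default deny).
    return Decision(via_firewall=True, allowed=(src_vrf, dst_vrf) in FIREWALL_ALLOW)


print(forward("users", "db"))    # Decision(via_firewall=True, allowed=False)
print(forward("servers", "db"))  # Decision(via_firewall=True, allowed=True)
```

Keeping the policy on one firewall cluster means every inter-VRF rule lives in a single place, regardless of which fabric the endpoints sit in.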

Interconnecting the fabrics through the firewalls: physical view

Interconnecting the fabrics through the firewalls: logical view

How the migration went

During the migration, we connected the old and new server fabrics at Layer 2 (the data link layer) through temporary trunks. To migrate the servers in a given VLAN, we created a separate bridge domain that joined that VLAN in the old server network with the corresponding VXLAN segment in the new server fabric.

The configuration looks roughly like this; the key lines are the last two:

```
bridge-domain 22
 vxlan vni 600022
 evpn
  route-distinguisher 10.xxx.xxx.xxx:60022
  vpn-target 6xxxx:60022 export-extcommunity
  vpn-target 6xxxx:60022 import-extcommunity
#
interface Eth-Trunk1
 mode lacp-static
 dfs-group 1 m-lag 1
#
interface Eth-Trunk1.1022 mode l2
 encapsulation dot1q vid 22
 bridge-domain 22
```
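Since about 20 VLANs were migrated this way, generating the per-VLAN part of the configuration can save typing. The Python sketch below assumes that the numbering in the VLAN 22 example is a general scheme (VNI = 600000 + VLAN ID, RD/RT value = 60000 + VLAN ID, sub-interface = 1000 + VLAN ID); that is an inference from a single example, not something the article states, and it is only unambiguous for VLAN IDs 1 to 999. The masked RD address and AS number are kept as placeholders. The Eth-Trunk1 M-LAG interface is configured once, so it is not generated per VLAN.

```python
def bridge_domain_config(vlan: int, rd_ip: str = "10.xxx.xxx.xxx",
                         asn: str = "6xxxx", trunk: str = "Eth-Trunk1") -> str:
    """Render the per-VLAN bridge-domain and sub-interface lines.

    Assumes (hypothetically) that the VLAN 22 example follows a general scheme:
    VNI = 600000 + VLAN, RD/RT value = 60000 + VLAN, sub-interface = 1000 + VLAN.
    The masked RD address and AS number are left as placeholders.
    """
    if not 1 <= vlan <= 999:
        raise ValueError("the inferred numbering scheme only fits VLAN IDs 1-999")
    vni, rt_value, subif = 600000 + vlan, 60000 + vlan, 1000 + vlan
    lines = [
        f"bridge-domain {vlan}",
        f" vxlan vni {vni}",
        " evpn",
        f"  route-distinguisher {rd_ip}:{rt_value}",
        f"  vpn-target {asn}:{rt_value} export-extcommunity",
        f"  vpn-target {asn}:{rt_value} import-extcommunity",
        "#",
        f"interface {trunk}.{subif} mode l2",
        f" encapsulation dot1q vid {vlan}",
        f" bridge-domain {vlan}",
    ]
    return "\n".join(lines)


# VLAN 22 is from the article; 23 and 24 are made-up examples.
print("\n#\n".join(bridge_domain_config(v) for v in (22, 23, 24)))
```

For VLAN 22, the output matches the bridge-domain and sub-interface lines of the configuration above.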

Virtual machine migration

Then, using VMware vMotion, we migrated the virtual machines in this VLAN from the old hypervisors (version 5.5) to the new ones (version 6.5). Along the way, physical servers were virtualized.

If you decide to repeat this

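Configure MTUs in advance and verify that large packets get through end to end: VXLAN encapsulation adds its own headers, so the underlay needs a larger MTU than the servers use.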
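The MTU arithmetic behind this advice, as a short Python sketch. It assumes an IPv4 underlay without IP options and no 802.1Q tag carried inside the tunnel: VXLAN wraps the server's whole Ethernet frame in new VXLAN, UDP, and IP headers, so the underlay IP MTU must exceed the server MTU by 50 bytes. The second function gives the ICMP payload size for a don't-fragment ping test.

```python
# Header sizes in bytes for VXLAN over an IPv4 underlay (no IP options).
INNER_ETHERNET = 14  # inner Ethernet header carried inside VXLAN (FCS not carried)
VXLAN_HEADER = 8
OUTER_UDP = 8
OUTER_IPV4 = 20

# A ping packet is a 20-byte IPv4 header, an 8-byte ICMP header and the payload.
ICMP_ECHO_OVERHEAD = 20 + 8


def underlay_ip_mtu(server_mtu: int) -> int:
    """Minimum IP MTU on underlay links so that a server IP packet of
    server_mtu bytes fits into a single VXLAN packet without fragmentation."""
    return server_mtu + INNER_ETHERNET + VXLAN_HEADER + OUTER_UDP + OUTER_IPV4


def ping_payload(path_mtu: int) -> int:
    """ICMP echo payload size that produces an IP packet of exactly path_mtu bytes."""
    return path_mtu - ICMP_ECHO_OVERHEAD


print(underlay_ip_mtu(1500))  # 1550
print(underlay_ip_mtu(9000))  # 9050
print(ping_payload(1500))     # 1472
```

For example, on Linux `ping -M do -s 1472 <host>` sends a 1500-byte packet with the don't-fragment bit set, and on Windows the equivalent is `ping -f -l 1472 <host>`; if it fails while smaller sizes succeed, some link on the path has a smaller MTU.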

In the old server network, we used the VMware vShield virtual firewall. Since VMware no longer supports this product, we switched from vShield to hardware firewalls as part of the migration to the new virtualization farm.

Once no servers remained in a given VLAN on the old network, we switched its routing over. Previously, routing was done on the old core built on the Collapsed Backbone design; in the new server fabric, we used an anycast gateway.
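The difference from the old collapsed-backbone setup is that the gateway is no longer a single box: with an anycast gateway, every leaf holds the same gateway IP and MAC address for a VLAN and routes first-hop traffic locally. The toy Python model below, with hypothetical addresses and leaf names, shows why this suits vMotion: a VM that moves to another leaf finds the same gateway MAC there, so its cached ARP entry stays valid.

```python
from dataclasses import dataclass
from typing import Dict, Optional


@dataclass(frozen=True)
class Gateway:
    ip: str
    mac: str


class Leaf:
    """A leaf switch that terminates the default gateway for its local VLANs."""

    def __init__(self, name: str):
        self.name = name
        self.gateways: Dict[int, Gateway] = {}  # VLAN ID -> local gateway

    def answer_arp(self, vlan: int, target_ip: str) -> Optional[str]:
        """Answer an ARP request for the gateway locally; None if not a gateway IP."""
        gw = self.gateways.get(vlan)
        if gw is not None and gw.ip == target_ip:
            return gw.mac
        return None


# Hypothetical addressing for one migrated VLAN
# (documentation IP range, locally administered MAC).
anycast_gw = Gateway(ip="192.0.2.1", mac="02:00:00:00:00:16")
leaves = [Leaf(name) for name in ("tor1", "tor2", "tor3")]
for leaf in leaves:
    leaf.gateways[22] = anycast_gw  # the same IP and MAC on every leaf

# A VM resolves its gateway behind tor1, then vMotion moves it behind tor3.
vm_arp_cache = {"192.0.2.1": leaves[0].answer_arp(22, "192.0.2.1")}
after_move = leaves[2].answer_arp(22, "192.0.2.1")
print(vm_arp_cache["192.0.2.1"] == after_move)  # True: cached gateway MAC stays valid
```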

Routing switchover

After its routing was switched over, the VLAN was detached from the bridge domain and removed from the trunk between the old and new networks, which completed its transfer to the new server fabric. We migrated about 20 VLANs this way.
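The per-VLAN procedure described in this section boils down to four ordered steps. The Python sketch below is a hypothetical checklist, not a tool the authors describe: it rejects steps taken out of order and refuses to switch routing while servers remain in the old network.

```python
from enum import IntEnum


class Step(IntEnum):
    """Per-VLAN migration steps, in the order described in the article."""
    STITCH = 1          # bridge domain joins the old VLAN and the new VXLAN segment
    MOVE_SERVERS = 2    # vMotion the VMs, virtualize remaining physical servers
    SWITCH_ROUTING = 3  # move the gateway from the old core to the anycast gateway
    DETACH = 4          # remove the VLAN from the bridge domain and the temporary trunk


class VlanMigration:
    """Hypothetical checklist for one VLAN that rejects out-of-order steps."""

    def __init__(self, vlan: int):
        self.vlan = vlan
        self.completed = 0  # last completed step number (0 = not started)

    def complete(self, step: Step, servers_left_in_old_network: int = 0) -> None:
        if step != self.completed + 1:
            raise RuntimeError(
                f"VLAN {self.vlan}: {step.name} attempted after step {self.completed}")
        if step == Step.SWITCH_ROUTING and servers_left_in_old_network > 0:
            raise RuntimeError(
                f"VLAN {self.vlan}: {servers_left_in_old_network} server(s) "
                "still in the old network, routing must stay on the old core")
        self.completed = int(step)

    @property
    def finished(self) -> bool:
        return self.completed == Step.DETACH


migration = VlanMigration(22)
for step in Step:  # iterates in definition order: STITCH .. DETACH
    migration.complete(step)
print(migration.finished)  # True
```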

In this way, we built a new network, a new server room, and a new virtualization farm. In one of the following articles, we will talk about what we did with Wi-Fi.

Maxim Klochkov

Senior Consultant, Network Audit and Integrated Projects

Network Solution Center

Jet Infosystems
