The "creativity" of artificial intelligence is changing our idea of what is real

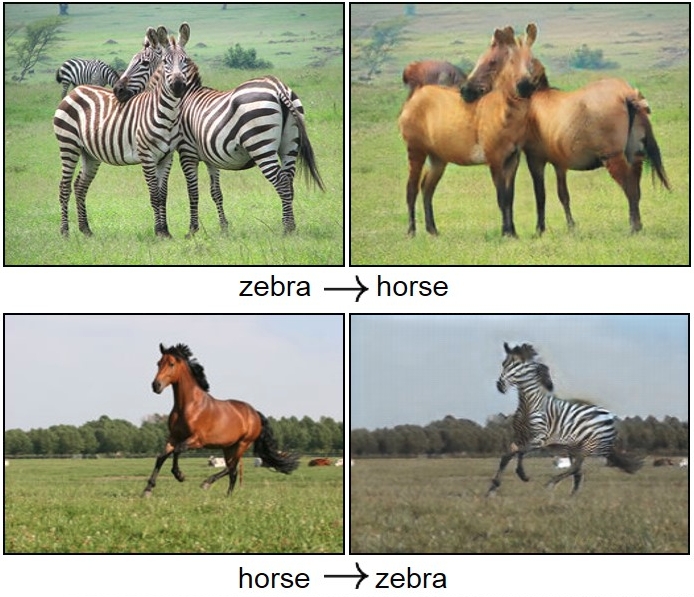

This year, a group of researchers from Berkeley released a pair of videos. In the first, a horse runs along a fence. In the second, the same horse suddenly sports a black-and-white zebra pattern. The result is not perfect, but the stripes fit the horse so convincingly that they throw the family tree of the two animals into confusion. Tricks like this show how quickly machine learning algorithms are gaining the ability to alter reality.

Other researchers have used neural networks to turn photos of black bears into believable pandas, apples into oranges, and cats into dogs. A Reddit user applied a different machine learning algorithm to edit porn videos and paste celebrities' faces into them. The startup Lyrebird synthesizes convincing speech from a one-minute recording of a person's voice. And Adobe engineers developing the Sensei artificial intelligence platform are building machine learning into new video, photo, and audio editing tools. These projects differ widely in origin and purpose, but they have one thing in common: they synthesize images and sounds that are strikingly similar to real ones. Unlike earlier experiments, these AI creations look and sound realistic.

The technologies behind these changes will soon push us into new creative territory, extending what today's artists can do and letting amateurs produce professional-quality work. We will look for new definitions of creativity, broad enough to include work made by machines. But this boom has a downside. Some AI-generated content will be used to deceive, feeding fears of an endless avalanche of fake news. The old debate over whether a picture has been doctored will give way to a new one about the origin of every kind of content, including text. You will start to wonder what role, if any, people played in creating a given album, series, or article.

Until recently, there were two ways to produce audio or video that looked or sounded real. The first was to stage a scene and record it with cameras and microphones. The second was to rely on skilled human talent, often at great expense, to create an exact replica. Machine learning algorithms now offer a third option: anyone with minimal technical knowledge can modify existing content to create new material.

"The Birth of Venus" as reimagined by DeepDream

At first, content generated by neural networks made no attempt at realism. Google's DeepDream, released in 2015, was an early example of deep learning used to churn out psychedelic landscapes and grotesque, many-eyed works of art. Prisma, a hit app of 2016, used deep learning to power photo filters that restyle pictures in the manner of paintings by Mondrian or Munch. This technique is known as style transfer: take the style of one image (for example, "The Scream") and apply it to another.
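
How can an algorithm separate "style" from content? One widely used formulation (from the neural style transfer work of Gatys et al.; the article does not say what Prisma itself uses) describes an image's style by a Gram matrix: the correlations between the channels of a convolutional network's feature map, which capture textures and colors while discarding where things are. The minimal sketch below shows only that style representation and a style loss; the feature maps are made-up toy numbers standing in for real CNN activations, and a full system would also need a content loss and an optimizer that adjusts the output image.

```python
from typing import List

Matrix = List[List[float]]


def gram_matrix(features: Matrix) -> Matrix:
    """Channel-by-channel correlations of a feature map.

    `features` has one row per channel; each row holds that channel's
    activations flattened over all spatial positions. Dividing by the
    number of positions keeps the scale independent of image size.
    """
    n_positions = len(features[0])
    return [
        [sum(a * b for a, b in zip(fi, fj)) / n_positions for fj in features]
        for fi in features
    ]


def style_loss(generated: Matrix, style: Matrix) -> float:
    """Mean squared difference between the two images' Gram matrices."""
    g_gen, g_sty = gram_matrix(generated), gram_matrix(style)
    c = len(g_gen)
    return sum(
        (g_gen[i][j] - g_sty[i][j]) ** 2 for i in range(c) for j in range(c)
    ) / (c * c)


# Toy "feature maps": 2 channels x 3 spatial positions. These are
# illustrative numbers, not activations from a real network.
style_feats = [[1.0, 0.0, 1.0], [0.0, 1.0, 0.0]]
photo_feats = [[0.5, 0.5, 0.5], [0.5, 0.5, 0.5]]

print(gram_matrix(style_feats))
print(style_loss(photo_feats, style_feats))
```

Because the Gram matrix sums over positions, two images can share a style even when their objects sit in completely different places; style transfer lowers this loss while keeping a separate content loss low.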

Style transfer algorithms keep improving. Take, for example, the work of Kavita Bala's lab at Cornell University. It shows how deep learning can transfer the style of one photo (a twinkling nighttime atmosphere) to a snapshot of a drab city, fooling human reviewers into believing the resulting scene is real. Inspired by AI's potential to recognize aesthetic properties, Professor Bala co-founded GrokStyle. Imagine that you like the decorative pillows on a friend's couch or a blanket you saw in a magazine. Show GrokStyle's algorithm a picture of it, and it will find images of items in the same style.
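
The article does not describe how GrokStyle works internally. A common way to build this kind of "find things in the same style" search is to have a trained network map every image to a vector (an embedding) and then return the catalog items whose vectors point in the most similar direction. The minimal sketch below assumes such embeddings already exist; the item names and the three-number vectors are hypothetical, chosen only to illustrate a cosine-similarity nearest-neighbor lookup.

```python
import math
from typing import Dict, List, Tuple


def cosine_similarity(a: List[float], b: List[float]) -> float:
    """Cosine of the angle between two vectors (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def find_similar(
    query: List[float], catalog: Dict[str, List[float]], k: int = 2
) -> List[Tuple[str, float]]:
    """Return the k catalog items whose embeddings best match the query."""
    scored = [(name, cosine_similarity(query, vec)) for name, vec in catalog.items()]
    scored.sort(key=lambda item: item[1], reverse=True)
    return scored[:k]


# Hypothetical style embeddings; a real system would compute these with
# a trained neural network rather than writing them by hand.
catalog = {
    "velvet_pillow": [0.9, 0.1, 0.0],
    "boho_throw": [0.2, 0.8, 0.3],
    "linen_pillow": [0.8, 0.2, 0.1],
    "steel_lamp": [0.0, 0.1, 0.9],
}
query = [0.85, 0.15, 0.05]  # embedding of the photo the user uploaded

print(find_similar(query, catalog))
```

Cosine similarity ignores vector length, so it compares the overall "direction" of two styles; at catalog scale, an approximate nearest-neighbor index would replace the linear scan used here.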

Professor Bala says: "What I like about these technologies is how they democratize design and style. I am a technologist: I appreciate beauty and style, but I can't create them at all. This work makes them available to me, and I'm also very glad to be able to make them available to others. Not being gifted in this area doesn't mean we have to live in boring surroundings."

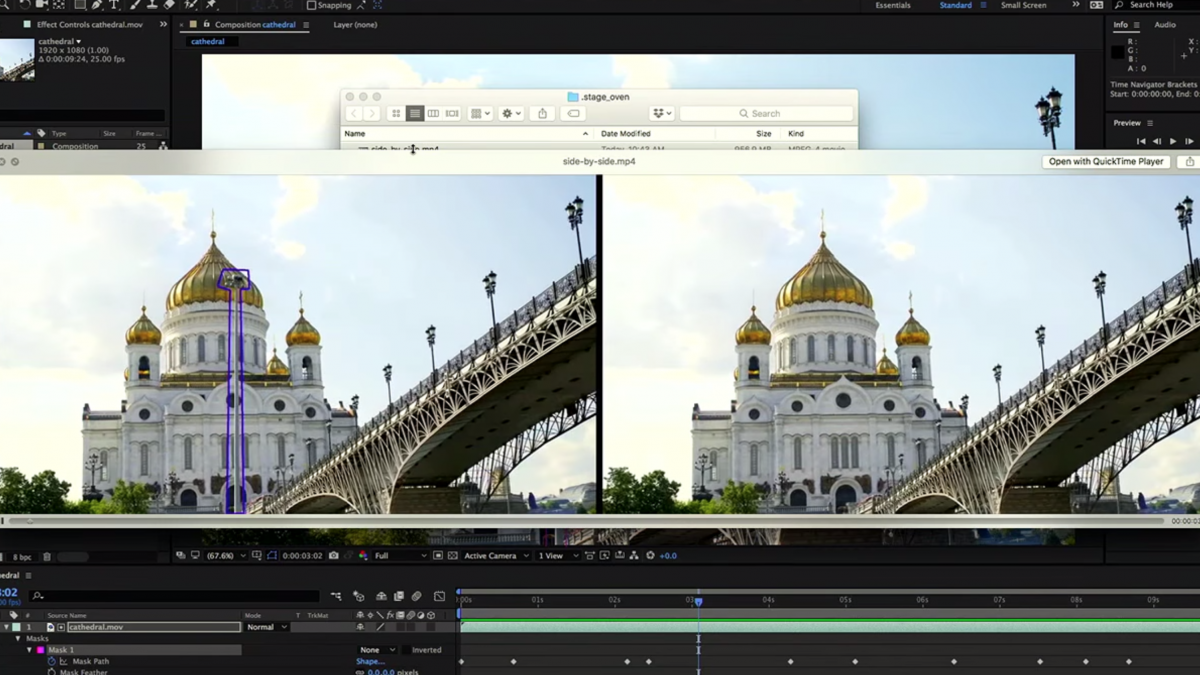

At Adobe, machine learning has been part of the company's creative products for more than a decade, but it recently took a big step forward. In October, engineers working on Sensei demonstrated a promising video editing tool called Adobe Cloak. It lets the user easily remove, say, a lamppost from a video clip, a task that would be extremely tedious even for an experienced editor. Another project, Project Puppetron, applies a chosen art style to video in real time. It can render a person as a living bronze statue or a cartoon character. "You can just do something in front of the camera and turn it into animation in real time," says John Brandt, a senior scientist and director of Adobe Research.

Machine learning makes these projects possible because it can identify parts of a face and tell foreground from background better than earlier computer vision approaches. Sensei tools let artists work with concepts rather than raw materials. "Photoshop does a great job of manipulating pixels, but what people are really trying to manipulate is the content those pixels represent," Brandt explains.

That is good news. When artists no longer have to spend time drawing individual dots on the screen, their productivity rises. Brandt says he is excited about the new art forms that may emerge and is looking forward to seeing them.

Demonstration of Adobe Cloak features

But it is not hard to imagine this creative explosion leading to very bad consequences. Yuanshun Yao, a graduate student at the University of Chicago, saw a fake video created by AI and decided to start a project exploring the dangers of machine learning. The video showed a synthesized Barack Obama giving a speech. Yao wanted to find out whether the same thing could be done with text.

Text has to be almost flawless to convince most readers that a human wrote it. Yao began with a relatively simple task: generating fake reviews for Yelp and Amazon. Such a review may be only a few sentences long, and readers do not expect polished language. He and his colleagues built a neural network that writes five-sentence Yelp reviews. The output included statements such as "Now this is our favorite place!" and "Went there with my brother, ordered the vegetarian pasta, very tasty." Yao then asked people whether the reviews were real or fake, and, sure enough, people were often wrong.
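
To make the idea of machine-written reviews concrete, the sketch below uses a much simpler technique than the one described here: a word-level Markov chain that learns which word tends to follow which and then strings words together at random. This is not Yao's method (his team used a neural network), and the four training "reviews" are made up for illustration, not real Yelp data. Even so, it shows the basic recipe any text generator follows: learn statistics from existing reviews, then sample new text from them.

```python
import random
from collections import defaultdict
from typing import Dict, List

START, END = "<s>", "</s>"  # markers for the beginning and end of a review


def build_chain(reviews: List[str]) -> Dict[str, List[str]]:
    """Map each word to every word that followed it in the training text."""
    chain: Dict[str, List[str]] = defaultdict(list)
    for review in reviews:
        words = [START] + review.split() + [END]
        for current, nxt in zip(words, words[1:]):
            chain[current].append(nxt)
    return chain


def generate(chain: Dict[str, List[str]], rng: random.Random, max_words: int = 20) -> str:
    """Walk the chain from START, picking each next word at random."""
    word, output = START, []
    while len(output) < max_words:
        word = rng.choice(chain[word])  # frequent successors are picked more often
        if word == END:
            break
        output.append(word)
    return " ".join(output)


# Tiny made-up training set (illustrative only).
reviews = [
    "now this is our favorite place !",
    "went there with my brother and ordered the pasta .",
    "the pasta was very tasty and the staff was friendly .",
    "this place is very tasty and cozy .",
]

chain = build_chain(reviews)
rng = random.Random(42)  # fixed seed so runs are repeatable
for _ in range(3):
    print(generate(chain, rng))
```

A Markov chain only looks one word back, so its output drifts quickly; neural networks track much longer context, which is why their reviews are far harder to tell from human ones.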

Having such reviews written costs $10 to $50, and Yao says it is only a matter of time before someone tries to automate the process, driving prices down and flooding sites with fake reviews. He has also explored using neural networks to protect Yelp from fake content, with some success. His next goal is to generate credible news articles.

With video, progress may move even faster. Hany Farid, an expert in detecting fake photos and videos and a professor at Dartmouth College, is worried by how quickly viral content spreads and how far the work of verifying its authenticity lags behind. He considers it possible that in the near future a plausible video will appear showing Donald Trump ordering a nuclear strike on North Korea. It could go viral and spread panic, much as the radio play of "The War of the Worlds" once did. "I don't want to make hysterical assumptions, but I don't think these fears are unfounded," he says.

Meanwhile, synthesized Trump speeches are already circulating online. They are the product of Lyrebird, a voice-synthesis startup. José Sotelo, the company's co-founder and CEO, is convinced that this technology is inevitable, so he and his colleagues will keep developing it, with an eye on ethics. He believes the best defense for now is to raise awareness of what machine learning can do. Sotelo notes: "If you see a photo of me on the moon, you will almost certainly decide it was made in a photo editor. But if you hear an audio recording of your best friend saying bad things about you, you will most likely be worried. This really is a new technology, and it presents new challenges to humanity."

Little is likely to stop the wave of AI-generated content. A scenario in which fraudsters and unscrupulous politicians use the technology to spread misleading information cannot be ruled out.

On the positive side, AI-generated content can also do the public a great service. Lyrebird's Sotelo hopes his technology can give a voice back to people who have lost theirs to amyotrophic lateral sclerosis or cancer. The horse-and-zebra video mentioned at the beginning was a by-product of work to improve how self-driving cars perceive the world. Software for such cars is first trained in a virtual environment, but a world like Grand Theft Auto only loosely resembles reality. The zebra-fication algorithm was created to narrow the gap between the virtual environment and the real world and, ultimately, to make self-driving cars safer.
