Why a ban on autonomous killer robots won't solve anything

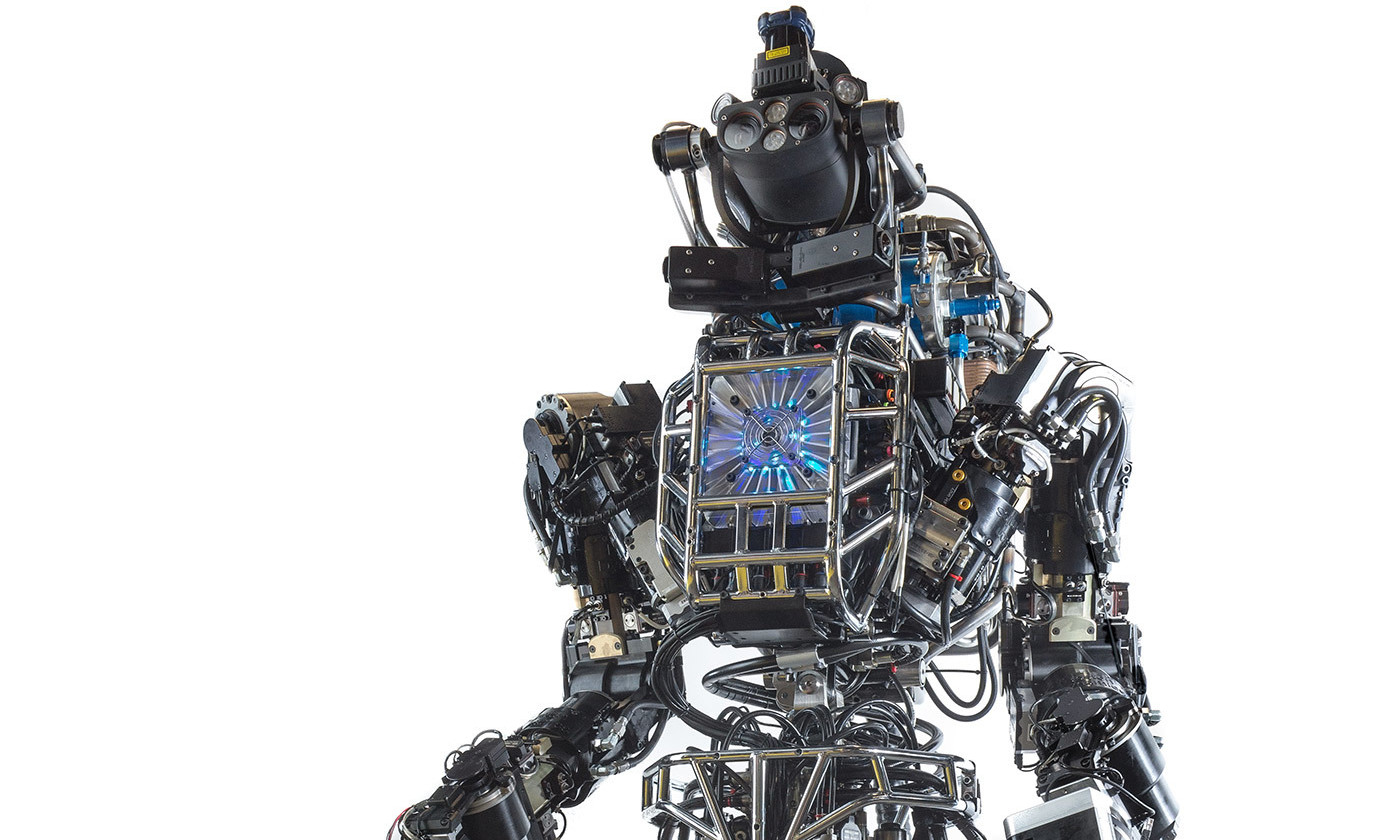

Autonomous weapons, killer robots that can attack without human intervention, are a very dangerous tool. There is no doubt about this. As Elon Musk, Mustafa Suleyman [co-founder of the AI company DeepMind; translator's note] and the other signatories state in their open letter to the UN, autonomous weapons can become "weapons of intimidation, weapons that tyrants and terrorists can use against innocent people, weapons that can be hacked and made to work in undesirable ways."

But this does not mean that the UN should introduce a preventive ban on further research on such weapons, as the authors of the letter call for.

First, dangerous tools are sometimes needed to achieve worthy goals. Think of the genocide in Rwanda, when the world stood by and did nothing. If autonomous weapons had existed in 1994, perhaps we would not have looked away. It seems likely that if the cost of humanitarian interventions could be measured only in money, it would be easier to win public support for them.

Second, it is naive to believe that we can enjoy the benefits of recent breakthroughs in AI without also experiencing some of their downsides. Suppose the UN introduces a preventive ban on all autonomous weapons technology. Suppose also, quite optimistically, that every army in the world respects this ban and cancels its autonomous weapons development programs. Even then we would still have cause to worry. A self-driving car can easily be converted into an autonomous weapon system: instead of steering around pedestrians, you can teach it to run them over.

More generally, AI technologies are extremely useful and are already permeating our lives, even if we sometimes don't see it and can't fully appreciate it. Given how widespread they are, it would be short-sighted to think that abuse of the technology can be prevented simply by banning the development of autonomous weapons. It may be precisely the sophisticated, discriminating autonomous weapons systems developed by armies around the world that can effectively counter the cruder autonomous weapons that are easy to build by reprogramming seemingly peaceful AI technology, such as those same self-driving cars.

Moreover, the idea of a simple ban at the international level reflects an oversimplified view of autonomous weapons. It ignores the long causal history of actions and agreements by various countries, jointly and individually, which through thousands of small acts and omissions led to the development of such technologies. As long as the debate on autonomous weapons is conducted mainly at the UN level, the average citizen, soldier or programmer could be forgiven for assuming that he bears no moral responsibility for the harm done by autonomous weapons. But this is a very dangerous assumption that could lead to catastrophe.

Everyone who is in some way connected to autonomous weapons technology must exercise due diligence, and each of us must think carefully about how our action or inaction adds to the potential dangers of this technology. This does not mean that countries and international agencies have no important role to play. Rather, it underlines that if we want to eliminate the potential danger of autonomous weapons, we need to promote an ethic of personal responsibility, and that message must reach the lowest levels at which decisions are made. To begin with, it is extremely important to talk about the development of autonomous weapons fully and in detail, including the consequences of the actions of decision-makers at every level.

Finally, it is sometimes argued that an autonomous weapon is dangerous not because the tool is dangerous in itself, but because it could become independent and begin to act in its own interests. This is a misconception, and besides, a ban on the development of autonomous weapons would do nothing to prevent that danger. If superintelligence is a threat to humanity, we urgently need to look for effective ways to counter that threat, and to do so regardless of whether autonomous weapons technology continues to be developed.

Open Letter to the United Nations Convention on Certain Conventional Weapons

As companies that create technologies in artificial intelligence and robotics that could be repurposed to develop autonomous weapons, we feel a special responsibility for raising this alarm. We warmly welcome the decision of the UN conference of the Convention on Certain Conventional Weapons to appoint a Group of Governmental Experts (GGE) on lethal autonomous weapon systems. Many of our researchers and engineers are ready to provide technical advice to help with this issue.

We endorse the appointment of Ambassador Amandeep Singh Gill of India as Chairman of the GGE. We ask the High Contracting Parties participating in the GGE to work actively on finding ways to prevent an arms race in such weapons, to protect citizens from their misuse and to avoid the destabilizing effects of these technologies. We regret that the first GGE meeting, scheduled for August 2017, was canceled because some countries had not paid their financial contributions to the UN. We urge the High Contracting Parties to redouble their efforts at the first meeting of the GGE, to be held in November.

Lethal autonomous weapons threaten to become the third revolution in warfare. Once developed, they will allow armed conflict to be fought on a scale never seen before, and on timescales faster than a human can perceive. They can become weapons of intimidation, weapons that tyrants and terrorists can use against innocent populations, weapons that can be hacked and made to work in undesirable ways. We have little time left. Once this Pandora's box is opened, it will be hard to close. We therefore ask the High Contracting Parties to find ways to protect us all from these threats.
