Historic HDDs

From the moment it appeared, the HDD, or simply the hard disk, quickly captured the market for data storage devices. Today hard disks are gradually being replaced by SSDs, which come in a wide variety of read/write speeds, physical sizes, capacities, and so on. But in the early days, when even the HDD was considered a novelty, there were several very strange yet remarkable models, and today I will try to tell you about them.

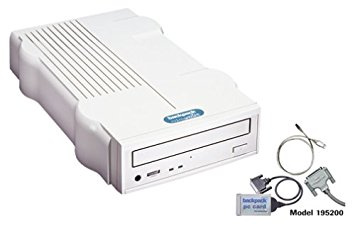

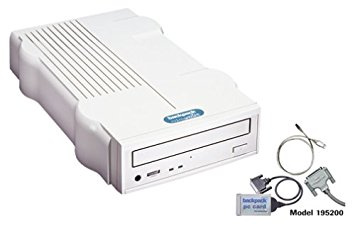


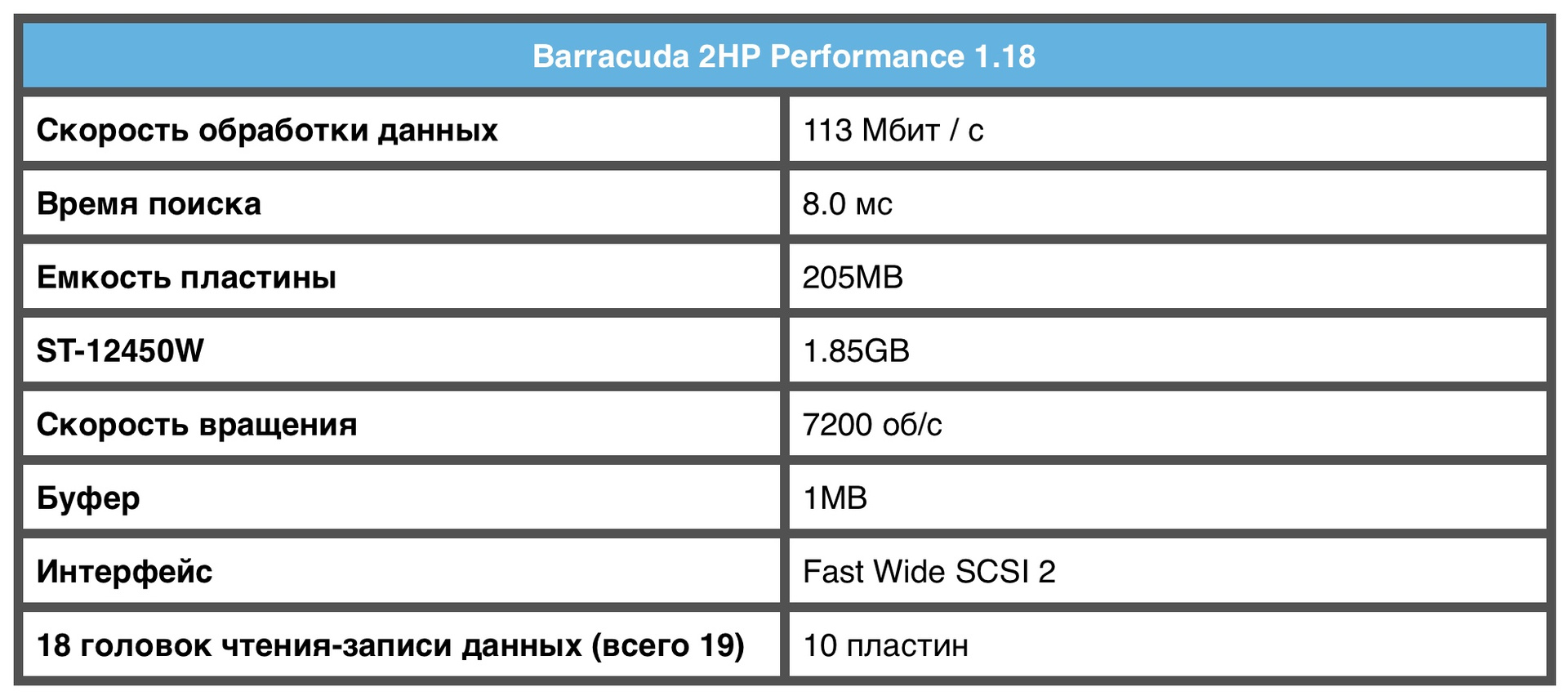

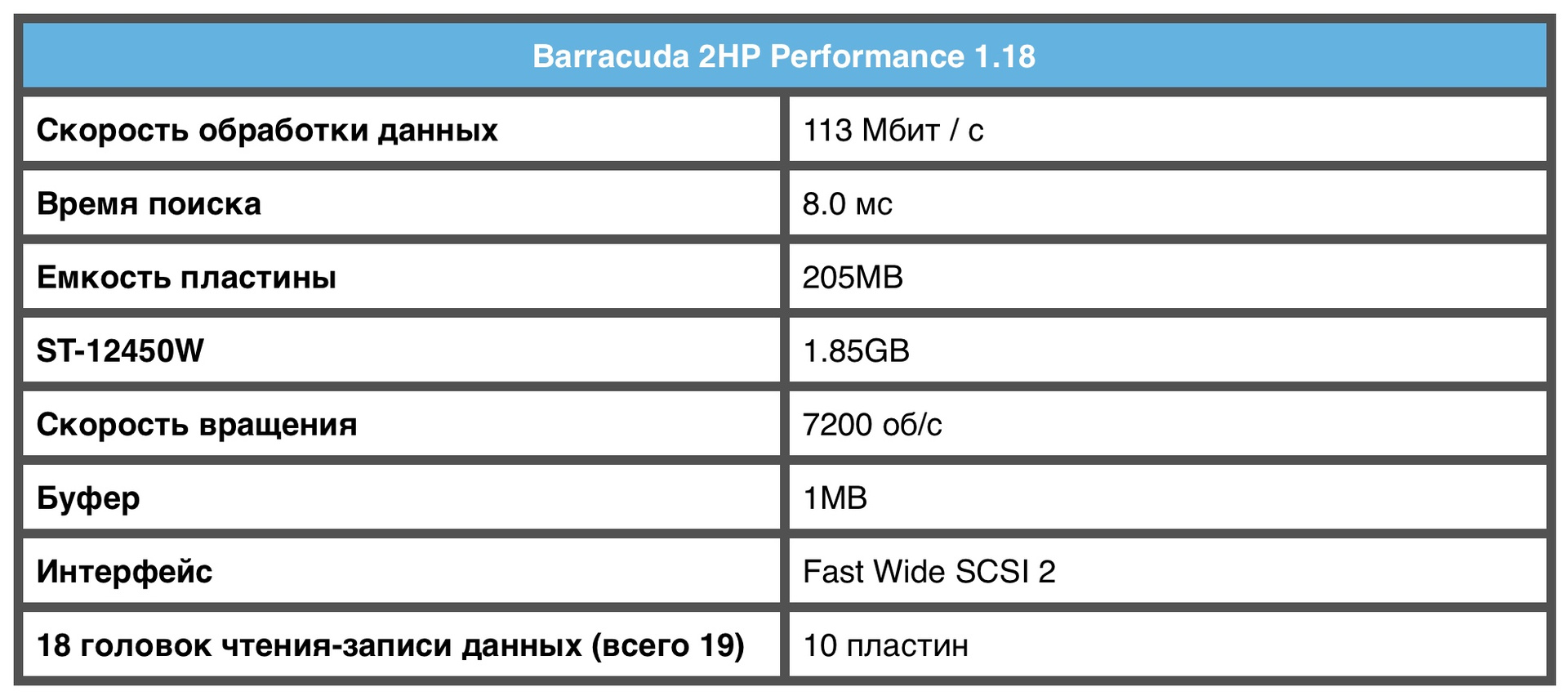


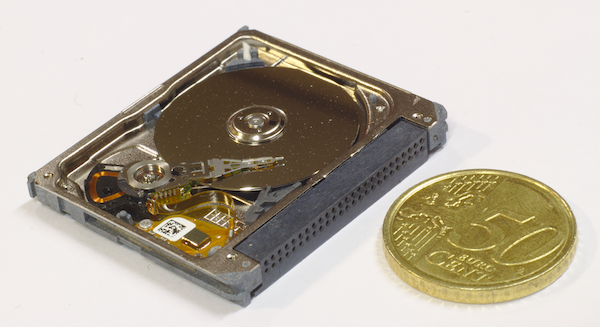

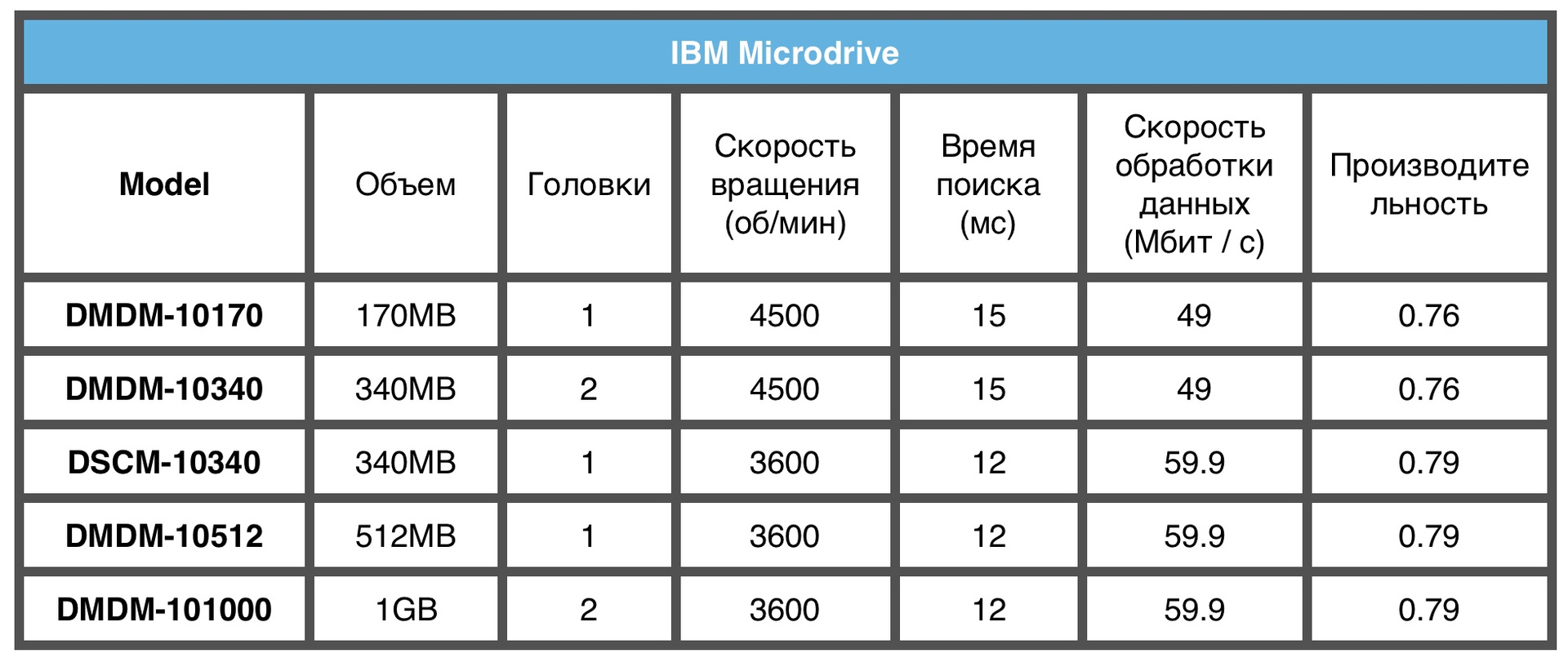

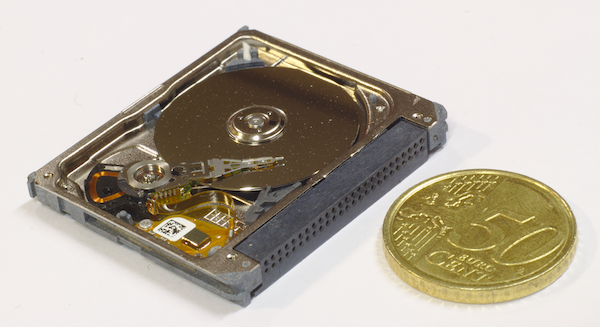


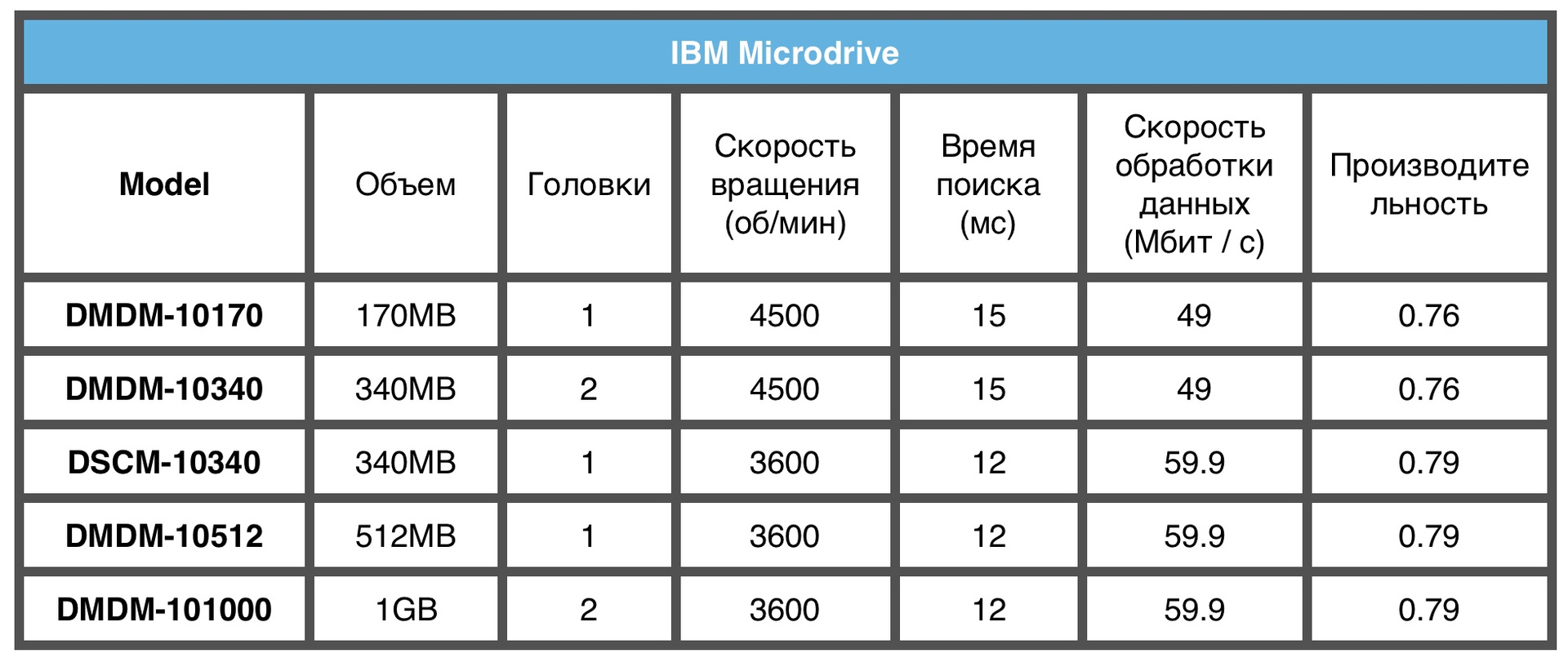


Microsolutions Backpack

What is the most common port that even a computer beginner knows about? USB, of course. It is how we connect external storage to our PCs, right? But it was not always so. In the early '90s, long before USB, Microsolutions released the Backpack hard drive. Its distinguishing feature was the connection: it plugged into the printer (parallel) port. The first model held a whopping 80 MB.

Installing the driver took just one line in CONFIG.SYS, and it used less than 5 KB of memory. At the time of its release, the Backpack was as fast as internal 80 MB or 512 MB IDE drives. The transfer rate was about 1 MB/s. Unlike Zip drives, Backpacks were real hard drives with typical hard-drive performance: a seek time of 12 ms. It was even possible to daisy-chain several of these drives.

Quantum Bigfoot

The makers of the Bigfoot set out to build a reliable, fast storage device with the lowest possible cost per megabyte. They took a very unusual approach: the 5.25-inch form factor (the size of a CD-ROM drive or an old floppy drive). What was the point? Platters 5.25 inches across could store more data than standard 3.5-inch platters. The rotational speed was modest, only 3600 rpm, but thanks to the larger circumference the linear speed under the heads was higher than on a 3.5-inch platter spinning at the same rate. Not to mention that the Quantum Bigfoot was significantly cheaper.
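The geometry behind this can be checked with a rough Python calculation. It is a sketch under simplifying assumptions: it treats the nominal 5.25-inch and 3.5-inch form factors as the platter diameters (real platters are somewhat smaller) and ignores the unusable area around the hub. At the same 3600 rpm, the outer edge of the larger platter moves 1.5 times faster, and its surface is 2.25 times larger.

```python
import math

INCH_M = 0.0254  # metres per inch

def edge_speed_m_s(diameter_in: float, rpm: float) -> float:
    """Linear speed at the outer edge: circumference times revolutions per second."""
    return math.pi * diameter_in * INCH_M * rpm / 60.0

def area_ratio(d_large_in: float, d_small_in: float) -> float:
    """Ratio of recording-surface areas, treating platters as full discs (hub ignored)."""
    return (d_large_in / d_small_in) ** 2

if __name__ == "__main__":
    rpm = 3600  # Bigfoot's rotational speed
    for d in (5.25, 3.5):
        print(f'{d}" platter at {rpm} rpm: {edge_speed_m_s(d, rpm):.1f} m/s at the outer edge')
    print(f"speed ratio: {edge_speed_m_s(5.25, rpm) / edge_speed_m_s(3.5, rpm):.2f}")
    print(f"area ratio:  {area_ratio(5.25, 3.5):.2f}")
```

Both ratios follow directly from 5.25 / 3.5 = 1.5. Speed scales linearly with diameter, and area scales with its square.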

However, the large form factor had significant drawbacks. The average Bigfoot seek time was no better than 12-14 ms, although even that was a fine achievement for such a large disk. An even bigger problem was rotational latency. To see the problem more clearly, it helps to compare the Bigfoot with drives from other manufacturers.

In that comparison, the two SCSI drives (the WD and the Cheetah) offered double the performance, but they cost $1,000 more than the IDE drives. Meanwhile, the 3.5-inch Medalist cost only $30 more than the Bigfoot and was significantly faster. And for $60 more than a Bigfoot, you could get a 5400 rpm Deskstar 4.
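Rotational latency explains why a 3600 rpm drive struggled against faster-spinning competitors. On average, the head has to wait half a revolution for the target sector to come around. The Python sketch below computes that average and adds it to a seek time to estimate average access time. The 12-14 ms seek range is the Bigfoot figure quoted in this section. Ignoring controller, head-settling, and transfer overheads is a simplifying assumption.

```python
def avg_rotational_latency_ms(rpm: float) -> float:
    """On average the target sector is half a revolution away from the head."""
    ms_per_revolution = 60_000.0 / rpm
    return ms_per_revolution / 2

def avg_access_ms(seek_ms: float, rpm: float) -> float:
    """Rough average access time: seek plus average rotational latency (overheads ignored)."""
    return seek_ms + avg_rotational_latency_ms(rpm)

if __name__ == "__main__":
    for rpm in (3600, 5400, 7200):
        print(f"{rpm} rpm: average rotational latency {avg_rotational_latency_ms(rpm):.2f} ms")
    # Bigfoot: 12-14 ms average seek at 3600 rpm
    for seek in (12.0, 14.0):
        print(f"Bigfoot, {seek:.0f} ms seek: about {avg_access_ms(seek, 3600):.1f} ms per random access")
```

At 3600 rpm, latency alone is about 8.3 ms. That compares with about 5.6 ms at 5400 rpm and 4.2 ms at 7200 rpm, so the slow spindle cost the Bigfoot several milliseconds on every random access.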

The Bigfoot drives are certainly interesting, but Quantum's focus was on cost rather than on speed and performance.

Iomega Zip Drive

Drives with removable media have never been particularly popular, with the exception of the Iomega Zip Drive, which enjoyed its heyday for several years. For a couple of hundred dollars, you could get an external parallel-port drive that took 100 MB cartridges resembling oversized floppy disks. You could use as many cartridges as you liked. Performance was quite acceptable, although the cost per megabyte was extremely high. The data transfer rate was 1 MB/s, which is not bad. The seek time was less cheerful: a full 29 ms.

Overall, the Zip Drive was too small, too slow, and too expensive to become a mass-market product. Still, for a while Zip drives were very popular because they were something new and unusual on the market.

SDX Drives

SDX stood for Storage Data Acceleration. It was not exactly a hard drive. Rather, it was a new way of connecting a CD-ROM drive. First, a little background.

Early CD-ROM drives connected to the PC through a dedicated bus. The first models (mostly single- and double-speed) used one of three proprietary interfaces: Panasonic, Mitsumi, or Sony. SCSI was, and remains, another effective but rather expensive option. Virtually all later CD-ROM drives used the ATA bus, better known as IDE. IDE CD-ROM drives were fast, inexpensive, and easy to set up. For a long time, every PC had two IDE controllers, and each could support two IDE devices. These were usually hard drives and a CD-ROM drive, although IDE tape drives and CD recorders were also available. (DVD drives came later but worked on the same principles.)

Typically, the CD-ROM drive was configured either as the only device on the secondary IDE controller or as a slave to the hard disk on the primary controller. Performance was good: ATA-33 had a maximum transfer rate of 33 MB/s.

Western Digital's SDX worked completely differently. An SDX CD-ROM drive was designed to connect to an SDX hard drive, which in turn connected to a standard IDE controller. The CD-ROM drive was not an IDE slave. SDX used a different connection altogether: a 10-pin cable instead of the 40-pin IDE cable.

The SDX hard disk automatically cached the relatively slow CD-ROM drive. Cached data was served from the hard disk, which had a much shorter seek time and a somewhat higher transfer rate, so the CD-ROM's effective performance increased. This caching was the main purpose of SDX.

A cache can significantly affect CD-ROM performance. RAM caching of CD-ROM drives has existed since the days of double-speed drives and is a standard part of all major operating systems. For example, the old DOS SmartDrive automatically used a small amount of RAM to cache the CD-ROM drive as well as the hard disk (assuming, of course, that you loaded SMARTDRV after MSCDEX). However, although RAM was very fast, about 2000 times faster than a CD-ROM drive, the largest practical RAM cache in those days was only a few megabytes, while a CD held about 650 MB.

A more practical idea was to use a hard disk to cache the CD-ROM drive. In the 1990s, a hard disk was much slower than RAM but still about 10 times faster than a CD-ROM drive. Even then, most hard drives were large enough that setting aside a gigabyte or so as a CD cache was usually practical. That was the idea behind SDX: the SDX hard drive was designed to cache the SDX CD-ROM drive attached to it. Roughly speaking, SDX could double the performance of a CD-ROM drive. It did so without using any additional CPU or PCI resources and without adding another layer to the file system.
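The source does not describe how the SDX firmware managed its cache. The following is only a minimal Python sketch of the general idea: a read-through cache in which a slow device (the CD-ROM) is fronted by a bounded cache on a faster one (the hard disk). The least-recently-used eviction policy, the block granularity, and the names `ReadThroughCache` and `effective_access_ms` are illustrative assumptions. The helper function shows why caching pays off: average access time is a hit-ratio-weighted mix of the fast and slow access times.

```python
from collections import OrderedDict
from collections.abc import Callable

class ReadThroughCache:
    """A slow device (e.g. a CD-ROM drive) fronted by a bounded cache on a faster one.

    Hypothetical model: data is addressed by block number and the least recently
    used block is evicted when the cache is full.
    """

    def __init__(self, read_slow: Callable[[int], bytes], capacity_blocks: int):
        self.read_slow = read_slow            # reads one block from the slow device
        self.capacity = capacity_blocks
        self.blocks: OrderedDict[int, bytes] = OrderedDict()  # least recently used first
        self.hits = 0
        self.misses = 0

    def read(self, block: int) -> bytes:
        if block in self.blocks:
            self.blocks.move_to_end(block)    # now the most recently used
            self.hits += 1
            return self.blocks[block]
        self.misses += 1
        data = self.read_slow(block)          # miss: go to the slow device
        self.blocks[block] = data             # keep a copy on the fast device
        if len(self.blocks) > self.capacity:
            self.blocks.popitem(last=False)   # evict the least recently used block
        return data

def effective_access_ms(hit_ratio: float, cache_ms: float, slow_ms: float) -> float:
    """Average access time when a fraction hit_ratio of reads is served from the cache."""
    return hit_ratio * cache_ms + (1.0 - hit_ratio) * slow_ms

if __name__ == "__main__":
    cd_reads = []                             # blocks actually fetched from the "CD"

    def fake_cd_read(block: int) -> bytes:
        cd_reads.append(block)
        return f"block {block}".encode()

    cache = ReadThroughCache(fake_cd_read, capacity_blocks=2)
    for b in [1, 2, 1, 3, 1, 2]:
        cache.read(b)
    print(cache.hits, cache.misses, cd_reads)  # 2 4 [1, 2, 3, 2]
```

For example, assume a hypothetical 80% hit ratio, a 10 ms cache, and a 100 ms slow device. In that case `effective_access_ms(0.8, 10, 100)` comes to about 28 ms, much closer to the cache's speed than to the slow device's.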

On the other hand, you did not need a new, non-standard type of CD-ROM drive to use a hard disk as a CD cache. The same thing could easily be done in software, with performance gains equal to or greater than those of SDX. There were several CD caching programs, and they worked with any type of CD-ROM drive, old or new, IDE or SCSI (even the old Panasonic, Mitsumi, and Sony double-speed drives).

SDX Benefits

- Caching a CD drive without using additional CPU or PCI bus resources.

- The firmware-level cache required no operating system support, so SDX worked automatically with Unix, OS/2, Linux, and Mac OS, not just with DOS and Windows.

- A future hard drive upgrade would also improve CD-ROM performance.

- SDX CD-ROM drives were slightly cheaper to produce.

SDX Disadvantages

- An SDX CD-ROM drive could only be replaced with another SDX model of the same brand.

- Very limited support.

- Slightly increased the cost of the hard drive.

- Could not be used in laptops.

Benefits common to both SDX and software

- A significant increase in CD-ROM performance: the smallest gain for large files, a larger gain for small files.

- Increased service life of CD-ROM drives.

Software Benefits

- Many more intelligent-caching features, including prefetching specific files for popular programs.

- The total cache size could be adjusted dynamically without repartitioning the hard disk.

- Worked with any type of hard disk or CD-ROM drive, new or existing.

- Free choice of hard drive brand and caching software.

- Much easier to update.

Software Disadvantages

- Slightly higher CPU load, by 3-4%.

- Added another complex layer to the Windows file system.

- Limited operating system support.

To be truly successful, SDX had to be adopted by other hard drive manufacturers, not just Western Digital. WD said it intended to make the SDX interface an open standard, like IDE or SCSI, but it did not do so in time. Other manufacturers, such as Maxtor, wanted to use SDX, but WD asked for licensing fees. This put off manufacturers who felt it ran completely counter to the principles of an open standard. By contrast, Quantum's Ultra ATA technology was completely free.

One could conclude that WD simply wanted to earn as much as possible from licensing fees before releasing the technology into the public domain and making it a common standard. At the end of 1997 this led to serious disagreements among hard drive manufacturers, and WD had to abandon its Napoleonic plans.

Western Digital Portfolio

In the mid-'90s, Western Digital got a slap in the face from the computer industry for its odd ideas (see the previous section). But the company decided not to give up. It introduced the Portfolio line of laptop drives with a non-standard 3-inch form factor instead of the usual 2.5 inches. At first this looked like another crazy idea. If laptops are supposed to be smaller and lighter, why make the drive bigger? However, WD said it had found a way to fit more storage into less space by using a slightly larger disk.

At the time, laptops were trending toward larger footprints but thinner profiles, driven by growing screen sizes. Portfolio kept up with these trends. Increasing the disk size by half an inch allowed up to 70% more capacity per platter. Using a slightly larger platter, WD was able to store twice as much data per cubic inch. In addition, such a disk was faster and easier to spin.

WD, in turn, had a very large competitor with its own vision of how hard drives should evolve: IBM. IBM improved performance through a range of methods and technologies. Chief among them was a significant increase in data density, along with MRX and GMR read heads, glass platters, improved PRML, higher rotational speeds, and clever formatting. Of course, with 40% of the global market, IBM could afford to invest in hard drive research.

WD's answer was its innovative 3-inch disk. It saved the company a great deal of money and did not require high data density, something the manufacturer always struggled with. This let WD squeeze into the market and capture a share of it.

The most notable model in the Portfolio line had a very impressive specification:

- 2 platters, 2.1 GB;

- MR heads and PRML read channel;

- shock resistance: 300/100 g;

- seek time: 14 ms;

- rotational speed: 4000 rpm;

- data transfer rate: 83 Mbit/s.

Only IBM's flagship laptop drives beat these figures.

All well and good, but WD's financial problems led to the Portfolio line being discontinued in January 1998. The same fate befell the other 3-inch drive maker, JTS. In those days, many companies suffered from a lack of money rather than a lack of ideas, and WD is a good example.

Seagate Barracuda 2HP

Unfortunately, especially for Seagate, these drives are no longer produced. But they were very popular in their day. Unlike other drives, they read with two heads at once, which doubled the data transfer rate.

Multiple read/write heads are nothing unusual. In an ordinary drive, however, they are used one at a time, so the maximum transfer rate depends on how much data passes under a single head. The Barracuda 2HP, by contrast, split the data stream into two halves, sending one half to each of two heads, and recombined the data when reading. At the time, the best 7200 rpm drives delivered 50-60 Mbit/s, while the Barracuda delivered a full 113 Mbit/s. That made it the fastest drive on the planet.
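The source says only that the Barracuda 2HP split the stream between two heads and recombined it on reads. The chunk size and alternation scheme below are assumptions made for illustration, not Seagate's actual format. This Python sketch alternates fixed-size chunks between two heads on the write path and interleaves them back on the read path. If both heads transfer in parallel, the ideal combined rate is the sum of the per-head rates, which is how roughly double the throughput becomes possible.

```python
CHUNK = 512  # assumed chunk size in bytes; the source does not specify one

def split_stream(data: bytes, chunk: int = CHUNK) -> tuple[bytes, bytes]:
    """Write path: even-numbered chunks go to head 0, odd-numbered chunks to head 1."""
    head0, head1 = bytearray(), bytearray()
    for n, start in enumerate(range(0, len(data), chunk)):
        target = head0 if n % 2 == 0 else head1
        target.extend(data[start:start + chunk])
    return bytes(head0), bytes(head1)

def recombine(head0: bytes, head1: bytes, chunk: int = CHUNK) -> bytes:
    """Read path: interleave chunks from the two heads back into the original order."""
    out = bytearray()
    pos = 0
    while pos < len(head0) or pos < len(head1):
        out.extend(head0[pos:pos + chunk])   # chunk n from head 0 ...
        out.extend(head1[pos:pos + chunk])   # ... followed by chunk n from head 1
        pos += chunk
    return bytes(out)

if __name__ == "__main__":
    data = bytes(range(256)) * 9          # 2304 bytes: 4.5 chunks of 512
    h0, h1 = split_stream(data)
    print(len(h0), len(h1))               # 1280 1024
    print(recombine(h0, h1) == data)      # True
```

For scale, 113 Mbit/s is about 14 MB/s (113 / 8).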

The idea itself was not new, but this was the first time it had been implemented. So why did 2HP technology simply disappear?

First, the same transfer rates could be achieved with a RAID array, which combines several disks into one logical unit. In that case the RAID controller, or RAID software, does the complex work of splitting and recombining the data. In addition, RAID has become a cheaper way to increase disk performance.

The second reason 2HP disappeared was time to market. Its developers spent a long time bringing new models based on the technology to market, while other manufacturers wasted no time.
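Splitting data across several disks in a RAID array is called striping, which is RAID level 0. Below is a minimal Python sketch of the address arithmetic a RAID 0 controller or driver performs. It assumes a fixed stripe size measured in blocks, and the function and parameter names are illustrative. Consecutive stripes of the logical volume are dealt out to the disks in round-robin order, so a long sequential read keeps every disk busy at once, much as 2HP kept both heads busy.

```python
def raid0_map(lba: int, disks: int, stripe_blocks: int) -> tuple[int, int]:
    """Map a logical block address to (disk index, block address on that disk).

    Stripes of `stripe_blocks` consecutive blocks are dealt out to the disks
    round-robin: stripe 0 goes to disk 0, stripe 1 to disk 1, and so on.
    """
    if disks < 1 or stripe_blocks < 1 or lba < 0:
        raise ValueError("disks and stripe_blocks must be >= 1, lba must be >= 0")
    stripe, offset = divmod(lba, stripe_blocks)
    disk = stripe % disks                 # which disk holds this stripe
    row = stripe // disks                 # full rows of stripes before this one
    return disk, row * stripe_blocks + offset

if __name__ == "__main__":
    # Two disks, 4-block stripes: blocks 0-3 on disk 0, 4-7 on disk 1, 8-11 on disk 0 again
    for lba in range(12):
        print(lba, raid0_map(lba, disks=2, stripe_blocks=4))
```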

Barracuda 2HP Specification

IBM Microdrive

The IBM Microdrive was 1 inch across and much lighter than a PCMCIA card. Yet it was still a real hard disk, with moving read/write heads and a tiny one-inch platter spinning at 4500 rpm, an incredible speed for the time.

The two models released stored 170 and 340 MB, and their performance was comparable to that of a 20 MB desktop drive.

The 170 MB version was unique in having only one read/write head.

Until the early 2000s, no other drive had just a single head.

IBM Microdrive Model Specifications

Of course, many modern drives now far outperform the ones on our list. Still, these drives remain an integral part of hard drive history thanks to technology that was unique for its time, and so they deserve attention. They spurred the development of and research into HDDs and, later, SSDs.
