Hello, Habr!

A few months ago I gave a talk on Docker for front-end developers at FrontendConf 2019, and I'd like to publish a short write-up of it for those who prefer reading to listening.

All web developers, especially front-end developers, are welcome below the cut.

The rise of Docker

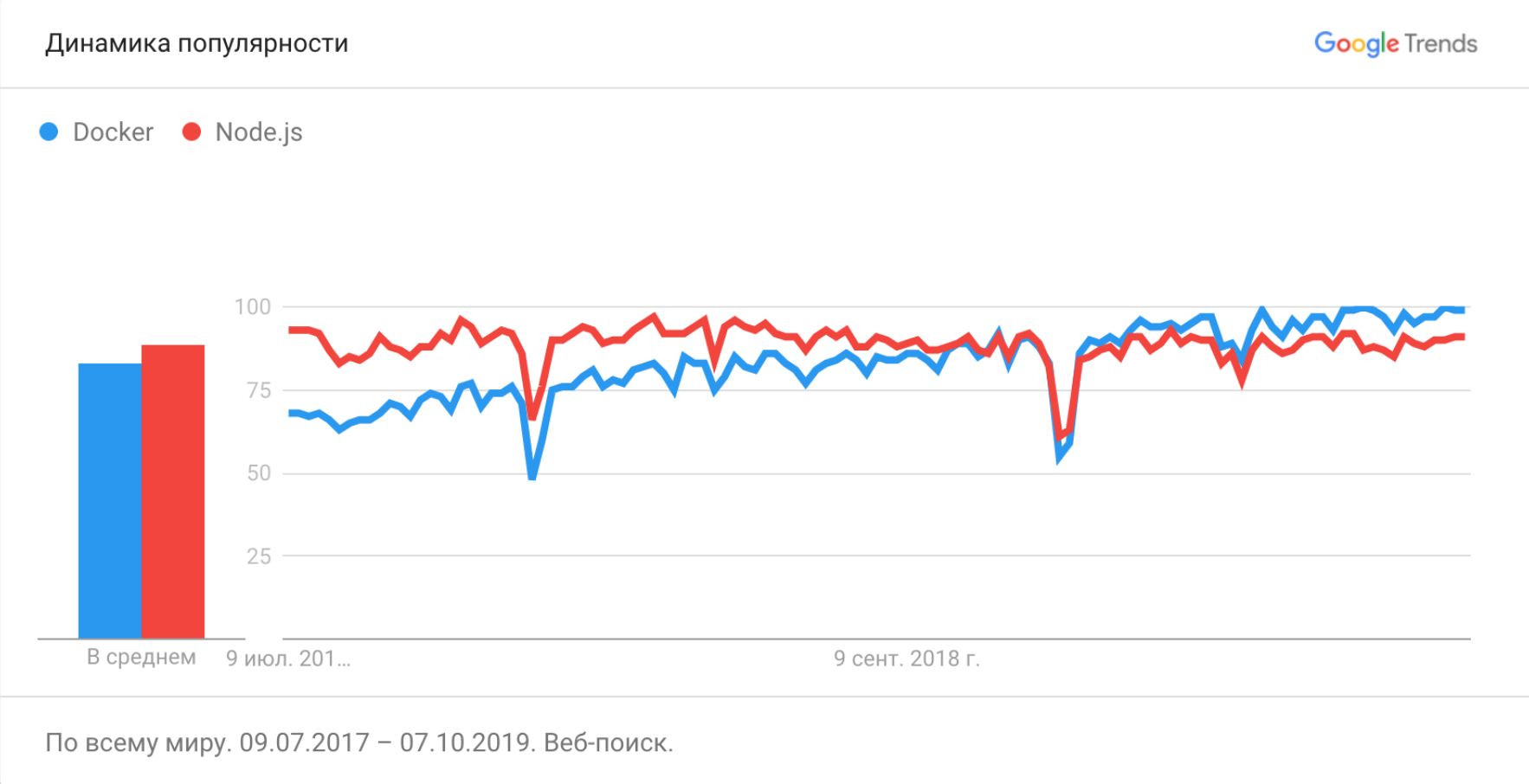

Docker is not a new tool: it was first released back in March 2013, and its popularity has only grown since then.

Here we can see that search interest in Node.js has plateaued and isn't going anywhere, while searches for Docker keep growing.

This talk was given at RIT++ 2019, in the DevOps track. In my experience, no DevOps conference goes by without talks about Docker, just as no front-end conference goes by without talks comparing frameworks, setting up webpack, and dealing with professional burnout.

It's time for us in the front-end community to start talking about this technology too.

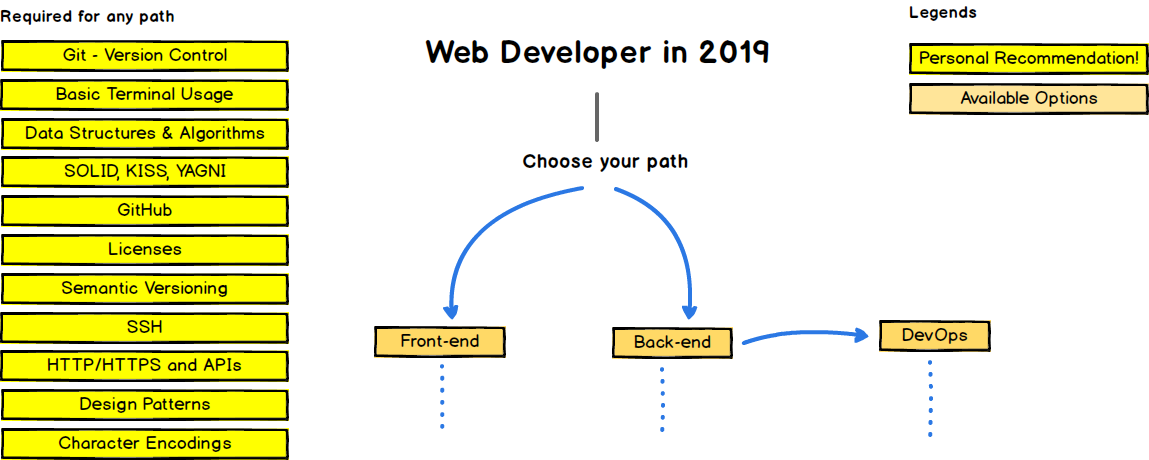

This is a web developer roadmap. On the left are the skills required for any development path. Indeed, it's hard to imagine a good web developer who doesn't know Git, the terminal, or HTTP.

Docker is on it too, but buried deep in the DevOps branch, in the Infrastructure as Code -> Containers block.

But Docker is also a great development tool, not just an operations one. In my opinion, it has every chance of eventually moving into the Required for any path section and becoming a requirement in many front-end job postings.

You could say we now live in the Docker era. So if you are a front-end developer and haven't got to know Docker yet, let me tell you why you should.

Why?

Case 1. Spinning up the backend

The first and most useful case is running the API on our cozy MacBook. We know our own technologies very well, but deploying something from someone else's stack is never an easy task.

On one of our projects, a front-end developer had to install all of the following on their machine just to get the API running:

- go1.11
- MySQL
- Redis
- Elasticsearch
- Capistrano
- syslog
- PostgreSQL

Fortunately, we had instructions for deploying the project in README files. They looked something like this:

1. Install GVM (https://github.com/moovweb/gvm)
2. `gvm install go1.11.13 --binary`
3. `gvm use go1.11.13 --default`
4. golang (`gvm linkthis`)
5. Install `gb`: `go get github.com/constabulary/gb/...`
6. `git config --global url."git@git.example.com:".insteadOf "https://git.example.com/"`
7. `gb vendor restore`
8.
9. `npm run build` (`npm run build:dev`)
10. `npm start`

And like this:

## Elasticsearch

1. Install Elasticsearch: `brew install elasticsearch` on macOS (requires Java)
2. Install the https://github.com/imotov/elasticsearch-analysis-morphology plugin: `/usr/local/Cellar/elasticsearch/2.3.3/libexec/bin/plugin install http://dl.bintray.com/content/imotov/elasticsearch-plugins/org/elasticsearch/elasticsearch-analysis-morphology/2.3.3/elasticsearch-analysis-morphology-2.3.3.zip`, then `brew services restart elasticsearch`
3. `rake environment elasticsearch:import:model CLASS='Tag' FORCE=y`, `rake environment elasticsearch:import:model CLASS='Post' FORCE=y`

## Postgres comments db

1. `psql -U postgres -h localhost`
2. `create database comments_dev;`

## Redis install and start

1. `brew install redis`
2. `brew services start redis`

How long do you think it took a new developer to set up the project and start working on tasks?

About a week.

Naturally, not every terminal command worked the first time, and the instructions were often out of date.

This was considered normal, and everyone was fine with it. In other words, to build a feature that took one hour of work, you first had to spend 40 hours setting up all the components locally.

Now setting up the project with all its services for development looks like this, and from a cold start it takes about 10 minutes:

```
$ docker-compose up api
...
Listening localhost:8080
```

All the setup commands for the services run while the Docker images are being built. They are automated, so they can't quietly go out of date the way README instructions do.
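To make this concrete, here is a minimal sketch of how step 2 of the Elasticsearch README above (installing the morphology plugin) could move into an image build. It assumes an old official `elasticsearch:2.3.3` image from Docker Hub, which installs Elasticsearch under `/usr/share/elasticsearch`; the plugin URL is copied verbatim from the README and may no longer resolve. This is an illustration, not the project's actual Dockerfile.

```dockerfile
# Sketch: bake the README's Elasticsearch setup into an image.
# Assumption: the official elasticsearch:2.3.3 image, with Elasticsearch
# installed under /usr/share/elasticsearch.
FROM elasticsearch:2.3.3

# README step 2: install the morphology analysis plugin.
# This runs at image build time instead of on each developer's machine.
# The URL is taken verbatim from the README and may no longer be reachable.
RUN /usr/share/elasticsearch/bin/plugin install \
    http://dl.bintray.com/content/imotov/elasticsearch-plugins/org/elasticsearch/elasticsearch-analysis-morphology/2.3.3/elasticsearch-analysis-morphology-2.3.3.zip
```

Building it with `docker build -t comments-search .` (the image name is hypothetical) runs the plugin installation once; every developer then starts a container with the plugin already in place, and the `brew services restart` step disappears.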

Case 2. Stability

The second case is keeping our work computer's system stable.

Who likes installing third-party software on their favorite computer? Who likes installing several different databases, new compilers, and interpreters?

Yet that's exactly what we have to do when we run someone else's API locally.

Worse, you can break your system and spend hours restoring it.

Using Docker for development handles this problem pretty well. All third-party software runs in isolated containers and is easy to remove when you no longer need it:

```
$ docker rm --volumes api
$ docker system prune --all
```

Case 3. Taking control of production

The third case I'd like to talk about is the front-end developer's ability to control how their service runs. You get to decide how your service will work in production.

I know that shipping a project to production often looks like this:

Front: Guys, I'm done. The code is in the repo. Roll it out, please!

Admin: How do I roll it out?

Front: Build it with Node and serve the static files from the /build folder with a web server.

Admin: Which version of Node do I build with? What's the build command? Which web server do I serve it with?

As a result, deploying your project becomes just as exciting a quest for the admin as deploying the API on your local machine was for you in the previous cases.

Even if the project works on your computer, that doesn't mean it will work in production. And we end up with the classic "it works on my machine" problem.

Docker helps here. The solution is simple: if we package our project in Docker, automating its build and configuring how it starts, it will work the same way on every server that supports Docker.
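As a rough illustration, a multi-stage Dockerfile can answer all three of the admin's questions from the dialogue above in one place: the Node version, the build command, and the web server. This sketch assumes a project with `package.json` and `package-lock.json`, an `npm run build` script that writes to `build/` (matching the /build folder mentioned above), Node 12, and nginx as the web server; adjust these to your own project.

```dockerfile
# Stage 1: build the static files with a pinned Node version.
# Assumptions: Node 12, an npm project with package-lock.json,
# and `npm run build` writing its output to /app/build.
FROM node:12 AS build
WORKDIR /app

# Install dependencies first so this layer is cached between code changes.
COPY package.json package-lock.json ./
RUN npm ci

# Copy the sources and run the build command.
COPY . .
RUN npm run build

# Stage 2: serve the built files with nginx (assumed web server).
FROM nginx:1.17-alpine
COPY --from=build /app/build /usr/share/nginx/html
EXPOSE 80
CMD ["nginx", "-g", "daemon off;"]
```

`docker build -t frontend .` followed by `docker run -p 8080:80 frontend` serves the site at http://localhost:8080 (`frontend` is an arbitrary image name). The final image contains only nginx and the built static files, not Node or `node_modules`.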

The handover to the admin then comes down to:

Front: Guys, I'm done. I've built a Docker image for you. Roll it out, please!

Admin: Ok

If there's one thing admins should definitely know how to handle, it's Docker images. Not like our Node setup.

What is Docker?

I hope I've explained why you should take a closer look at this technology if you're a front-end developer who doesn't know it yet. But I haven't told you what it actually is.

I plan to cover that in the next parts of this article, along with some useful recipes for front-end developers.

Contents

- Docker for the front-end. Part 1. Why?

- Docker for the front-end. Part 2. What are you?

- Docker for the front-end. Part 3. Some recipes