It took four mathematicians, six months of hard work, one new programming language, and many mistakes to create a constructor in which anyone can assemble an AI-powered virtual assistant.

In this article we will cover:

- How a virtual assistant differs from a regular chatbot

- Whether virtual assistants really understand language

- How we taught the robot to understand context and wrote the lialang language

- Case study: how we automated support in three banks

- How we created Lia Platform and an engine for interfaces

- Three steps: how the platform for assembling virtual assistants works (anyone, even a non-programmer, can assemble a robot on it)

Chatbot vs virtual assistant

Advanced chatbots could pick out keywords and imitate human dialogue as far back as the 1960s. While hippies threw themselves at LSD and The Beatles filled stadiums, Joseph Weizenbaum created Eliza, a psychotherapist chatbot that could outdo many modern bots, and even some psychologists.

For example, given the sentence “My father hates me,” Eliza reacted to the keyword “father” and asked, “Who else in the family hates you?” But the robo-psychotherapist did not grasp what the conversation was actually about. Modern chatbots work the same way: keywords, linear scripts, and a parody of live dialogue.
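A minimal Python sketch of the Eliza-style approach: scan the phrase for a keyword and return a canned reply. The rule table and the `respond` function are hypothetical and much simpler than Weizenbaum's original script format; they only show that the bot reacts to a word, not to meaning.

```python
import re

# Hypothetical keyword rules: keyword -> canned reply.
RULES = {
    "father": "Who else in the family hates you?",
    "mother": "Tell me more about your family.",
}
DEFAULT_REPLY = "Please go on."


def respond(phrase: str) -> str:
    """Return the reply for the first known keyword found in the phrase."""
    for word in re.findall(r"[a-z']+", phrase.lower()):
        if word in RULES:
            return RULES[word]
    return DEFAULT_REPLY


print(respond("My father hates me"))  # Who else in the family hates you?
print(respond("My father loves me"))  # same reply: the keyword wins, the meaning is ignored
```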

But something has changed since the 1960s: thanks to machine learning and NLP, we can now teach chatbots to understand natural language and context. This is still an imitation, but a more meaningful one.

To see the difference, let's compare a chatbot with an assistant. Imagine that we need to build a virtual sommelier that helps customers choose wine.

Stage 1

The first stage is the same for a chatbot and a virtual assistant: we anticipate user requests and come up with phrases users might write. Then we specify how the robot should respond.

The basic request is clear: pick a wine. But this request can have many parameters: occasion, price, country, color, grape variety. The user might immediately type the name of the wine they are looking for. Or they might ask for details: “Where is it made?” Or, out of curiosity, they might start bombarding the bot with questions: “Which bottle is the most expensive in the world?” And so on. Moreover, besides domain requests, there are “hello”, “bye”, “how are you” and other small-talk phrases that also need to be handled.

You can brainstorm endlessly, but you still won't predict every question users ask. When it seems that the situations we have described will cover 98% of requests, we stop (although harsh reality later sets in, and we find out that at best 80% are covered).

Then we sort our assumptions about user needs into specific requests: intentions. An intention captures what the user wants but discards how they phrased it. This stage is the same for chatbots and assistants.

List of intentions

Intention 1 - I want any wine

Phrases:

- Help me choose a wine

- What wine would you recommend?

- I want the best wine

- ...

What to do: randomly select one of the most popular wines and tell the user.

Intention 2 - cheaper wine

Phrases:

- Are there any good wines under 1000 rubles?

- Is there nothing cheaper?

- Too expensive for me

- ...

What to do: add a price filter to the query and select one of the most popular wines.

Intention 3 - I want wine for meat

Phrases:

- Recommend a wine for steak

- I'm having goulash for dinner. What should I drink?

- ...

What to do: query the database with the criterion “for meat”, select one of the most popular wines, and tell the user.

... (and so on, for hundreds of different intentions)

Intention 290 - we did not understand the user

Phrases: anything else

What to do: reply with a fallback phrase: “I don’t know what you mean, but the Pinista is delightful at any time of the year.”
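A list like this can be kept as plain data, with each intention mapped to an action. A minimal Python sketch, assuming a made-up three-bottle catalogue: names and prices come from the sample dialogue in this article, while the tags, intent names and `act` function are invented for illustration.

```python
import random

# The intentions as data: name -> example phrases (the wording is discarded after matching).
INTENT_EXAMPLES = {
    "any_wine": ["Help me choose a wine", "What wine would you recommend?"],
    "cheaper_wine": ["Are there any good wines under 1000 rubles?", "Too expensive for me"],
    "wine_for_meat": ["Recommend a wine for steak", "I'm having goulash for dinner"],
}

# Hypothetical catalogue: (name, price in rubles, tags). Tags are invented.
WINES = [
    ("Ramirez de la Piscina", 1240, {"meat", "tart"}),
    ("Antigal Uno Malbec", 1101, {"soft"}),
    ("Casillero del Diablo Cabernet Sauvignon Reserva", 872, {"meat", "tart"}),
]

FALLBACK = "I don't know what you mean, but the Pinista is delightful at any time of the year."


def act(intent: str, max_price: int = 1000) -> str:
    """Perform the action attached to an intention (illustrative rules only)."""
    if intent == "any_wine":
        candidates = WINES
    elif intent == "cheaper_wine":
        candidates = [w for w in WINES if w[1] <= max_price]  # add a price filter
    elif intent == "wine_for_meat":
        candidates = [w for w in WINES if "meat" in w[2]]    # query by "for meat"
    else:
        return FALLBACK  # intention 290: we did not understand the user
    if not candidates:
        return FALLBACK
    name, price, _tags = random.choice(candidates)
    return f"I can recommend {name}. {price} rubles per bottle."


print(act("cheaper_wine"))
print(act("small_talk_weather"))  # falls through to the fallback phrase
```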

Stage 2

At this stage, we build the algorithm the robot will run. This is where the similarity between a chatbot and a virtual assistant ends.

When coding a chatbot, the programmer manually defines keywords for each intention; when the user writes something, the bot looks for these keywords in the phrase.

When developing an assistant, the programmer trains an algorithm to compare user phrases by lexical meaning. This makes it possible to find the closest intention.
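To make the contrast concrete, here is a toy Python sketch of both approaches. The keyword table and example phrases are hypothetical, and the word-overlap (Jaccard) score only stands in for the trained similarity model an assistant would use; it is not Lia's algorithm.

```python
import re
from typing import Optional


def tokens(text: str) -> set[str]:
    """Lowercase word set; punctuation is dropped."""
    return set(re.findall(r"[a-z']+", text.lower()))


# Chatbot: the programmer lists keywords for each intention by hand (hypothetical table).
KEYWORDS = {
    "tart_wine": {"tart"},
    "cheaper_wine": {"cheap", "cheaper", "inexpensive"},
}


def chatbot_intent(phrase: str) -> Optional[str]:
    """Return the first intention whose keyword occurs in the phrase."""
    words = tokens(phrase)
    for intent, keys in KEYWORDS.items():
        if words & keys:
            return intent
    return None


# Assistant: example phrases per intention; the closest example wins (hypothetical data).
EXAMPLES = {
    "tart_wine": ["I like tart wine", "something tart please"],
    "soft_wine": ["I do not like tart", "something soft please"],
    "cheaper_wine": ["is there anything cheaper", "too expensive for me"],
}


def similarity(a: str, b: str) -> float:
    """Jaccard overlap of word sets: a crude stand-in for a learned distance."""
    ta, tb = tokens(a), tokens(b)
    union = ta | tb
    return len(ta & tb) / len(union) if union else 0.0


def assistant_intent(phrase: str) -> str:
    """Return the intention whose nearest example phrase is most similar."""
    return max(EXAMPLES, key=lambda i: max(similarity(phrase, e) for e in EXAMPLES[i]))


print(chatbot_intent("Honestly, I do not like tart"))    # tart_wine
print(assistant_intent("Honestly, I do not like tart"))  # soft_wine
```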

| Choosing wine with a chatbot | Choosing wine with a virtual AI assistant | What happened |
|---|---|---|
| User: Hello! I want to choose a wine for dinner, under 2000₽<br>Bot: Hello!<br>Bot: What price range are we looking for?<br>User: Up to 2000₽<br>Bot: I can recommend Ramirez de la Piscina. Moderately tart, this handsome Spaniard suits both dinner and your favorite series. 1240₽ per bottle | User: Hello! I want to choose a wine for dinner, under 2000₽<br>Assistant: Hello! I can recommend Ramirez de la Piscina. Moderately tart, this handsome Spaniard suits both dinner and your favorite series. 1240₽ per bottle | The bot went by keywords (choose + wine), did not understand the price range, and asked again. The assistant matched the closest intention and extracted the price range. |
| User: I don't like tart wine<br>Bot: Your candidate is Casillero del Diablo Cabernet Sauvignon Reserva<br>Bot: This tart Chilean Sauvignon will definitely warm you up. Only 872₽ | User: I don't like tart wine<br>Assistant: Your candidate is Antigal "Uno" Malbec.<br>Assistant: A soft Argentinian, straight from 2015. A bottle costs 1101₽ | The chatbot latched onto the keyword “tart” and got it wrong. The assistant understood that the intention closest to “I don't like tart” was soft wines. |
| User: Well, that's quite inexpensive. I’ll probably take it.<br>Bot: Then try “FYI” Red Blend<br>Bot: Despite its high rating, it costs only $10<br>Bot: Good choice | User: Well, that's quite inexpensive. I’ll probably take it.<br>Assistant: Good choice | Robots process messages one sentence at a time. The chatbot saw the word “inexpensive” and answered from a template; as a result, it guessed wrong and got confused. The assistant simply ignored the first sentence because it understood the second. |

How it works: a table comparing chatbots and virtual assistants

| Chatbot | Virtual assistant |
|---|---|
| To understand the user, we rely on keywords.<br>Machine learning and NLU are not used when building a chatbot. | To understand the user, we perform a “smart” comparison and choose the closest intention (we explain how this works a little later).<br>Machine learning and NLU algorithms let us measure the distance between phrases. For example, “spaceship” is closer to “airplane” than to “scooter”, and very far from “selling a kidney”. |
| Preparation: for each intention, write out the keywords (wine_meat: meat, steak, meat, goulash, wine + meat)<br>Algorithm: | Preparation: we train the model for smart comparisons<br>Algorithm: |
| How to improve:<br>To reduce errors, add commands and buttons. Users then stop communicating in natural language altogether and simply click the buttons. Such improvements degrade conversational intelligence. | How to improve:<br>After users talk to the robot, we get new example phrases and sort them into intentions. Over time, coverage grows and the assistant handles conversations better. Such improvements strengthen conversational intelligence. |

Is it true that virtual assistants understand language?

Algorithms can tell phrases apart, but can we say that robots really understand language?

To answer this question, let's return to comparing the lexical meaning of phrases. A computer only understands data types: strings, numbers, and combinations of them. So the programmer's task is to turn the source text into a form suitable for mathematical comparison: a vector.

```
vectorize(" ") = (0.004, 17.43, -0.021, ..., 18.68)
vectorize(" ") = (0.004, 19.73, -0.001, ..., 25.28)
vectorize(" ") = (-8.203, 15.22, -9.253, ..., 10.11)
vectorize(" ") = (89.23, -68.99, -10.62, ..., -0.982)
```

For our purposes, vectors of phrases with similar meaning should be mathematically close to each other, vectors of dissimilar phrases should be far apart, and vectors of phrases from a completely different domain should be very far away. For example, “I want wine” is closer to “I want white wine” than to “I don't want wine”, and far from “Mars attacks”.

A properly trained neural network can encode lexical meaning in these vectors. So, to compare the meaning of two phrases, you compare their vectors.
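Comparing vectors is commonly done with cosine similarity. A minimal Python sketch: the `vectorize` below is a deliberately naive bag-of-words counter, not a trained network, and it illustrates why training matters, since it cannot tell “white” from “don't”.

```python
import math
import re
from collections import Counter


def vectorize(phrase: str) -> Counter:
    """Naive bag-of-words vector: word -> count (a stand-in for a neural encoder)."""
    return Counter(re.findall(r"[a-z']+", phrase.lower()))


def cosine(u: Counter, v: Counter) -> float:
    """Cosine similarity: 1.0 for the same direction, 0.0 when no words are shared."""
    dot = sum(u[w] * v[w] for w in u)
    norm = math.sqrt(sum(c * c for c in u.values())) * math.sqrt(sum(c * c for c in v.values()))
    return dot / norm if norm else 0.0


base = vectorize("I want wine")
for other in ["I want white wine", "I don't want wine", "Mars attacks"]:
    print(other, round(cosine(base, vectorize(other)), 3))
# I want white wine 0.866
# I don't want wine 0.866
# Mars attacks 0.0
```

The naive vectors do push “Mars attacks” far away, but they score the white-wine and no-wine phrases identically. Separating those two is the job of a trained encoder.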

Therefore, the answer to the question “Do robots understand language?” is this: they don’t understand it the way a person does; they can only compare lexical meanings and avoid mixing up apples and oranges. When algorithms can ask leading clarifying questions and draw conclusions, we will honestly say: yes, understanding has arrived. Until then, “understands language” is just a nice marketing phrase.

In fact, the robot works only with analogies, like a three-year-old child. However, if you give the child enough examples, they will be able to pass for an intellectual and hold a discussion. A “live” first-line support operator works the same way: they are given a set of situations and told how to behave in each. That is why virtual assistants are well suited to support automation.

How we taught the robot to understand the context: lialang

For ordinary support, robots need little “understanding” of natural language: what matters is that they can answer questions and stay in context. For this, we wrote lialang, a dialogue markup language in which scripts can be described and passed to the robot.

The main task of a lialang programmer is to describe every situation that can happen in a dialogue between a person and a machine. To do this, our language lets you link intention names to actions.

Consider a simple example - a greeting:

```
if intent() {
    reaction(_)
}
```

It looks like regular code, but a neural network works behind the intent(...) construct: lialang describes the dialogue in general patterns (“if you were asked for something”) using ordinary programming constructs. Of course, for this to work you need machine learning and NLU, because users can phrase their requests however they like.

And here is how to describe contextual situations.

We introduced the “was” construct to catch repeated greetings anywhere in the dialogue:


```
if intent() {
    if was_reaction(_) {
        reaction(___)
    } else {
        reaction(_)
    }
}
```

It says: Lia, if someone greets you, greet them back. And if they say “hello” again after that, tell them you have already said hello.
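The logic of the “was” construct can be mimicked in ordinary Python by keeping a history of reactions already performed. This is a hypothetical sketch, not how lialang is implemented, and the intent and reaction names (`greeting`, `say_hello`, `already_greeted`) are invented for illustration.

```python
from typing import Optional

# Hypothetical reaction texts; the names are invented for illustration.
REPLIES = {
    "say_hello": "Hello! How can I help?",
    "already_greeted": "We have already said hello :)",
}


class Dialogue:
    """Remembers which reactions have already been performed in this conversation."""

    def __init__(self) -> None:
        self.history: list[str] = []

    def was_reaction(self, name: str) -> bool:
        return name in self.history

    def reaction(self, name: str) -> str:
        self.history.append(name)
        return REPLIES[name]


def handle(dialogue: Dialogue, intent: str) -> Optional[str]:
    # Mirrors: if intent(greeting) { if was_reaction(say_hello) {...} else {...} }
    if intent == "greeting":
        if dialogue.was_reaction("say_hello"):
            return dialogue.reaction("already_greeted")
        return dialogue.reaction("say_hello")
    return None  # other intents are handled by other scripts


chat = Dialogue()
print(handle(chat, "greeting"))  # Hello! How can I help?
print(handle(chat, "greeting"))  # We have already said hello :)
```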

A reaction is an action that Lia must perform in response to an intention. In 95% of cases, this is just text. But the robot can also call a function in the code, hand the conversation over to an operator, or perform other complex actions.

The code for sending text and calling functions lives separately from the language, so the language describes situations as simply as possible.
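One way to picture this separation: the script only names a reaction, and a separate registry decides whether that name means sending text, calling a function, or handing over to an operator. A hypothetical Python sketch; the registry, the reaction names and `send_statement` are illustrative, not Lia's API.

```python
from typing import Callable, Union

# A reaction is either plain text or a function that receives the dialogue context.
Reaction = Union[str, Callable[[dict], str]]


def send_statement(ctx: dict) -> str:
    """Hypothetical function reaction; a real one would call a banking backend."""
    return f"Statement for account {ctx['account']} has been sent."


def call_operator(ctx: dict) -> str:
    """Hypothetical hand-over: flag the conversation for a live operator."""
    ctx["operator"] = True
    return "Connecting you to an operator."


# The registry lives outside the dialogue script; scripts refer to reactions by name only.
REACTIONS: dict[str, Reaction] = {
    "say_hello": "Hello!",  # most reactions are just text
    "send_statement": send_statement,
    "call_operator": call_operator,
}


def perform(name: str, ctx: dict) -> str:
    """Look up a reaction by name: call it if it is a function, else return the text."""
    reaction = REACTIONS[name]
    return reaction(ctx) if callable(reaction) else reaction
```

Changing what `send_statement` does touches no script, which is the point of keeping the two apart.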

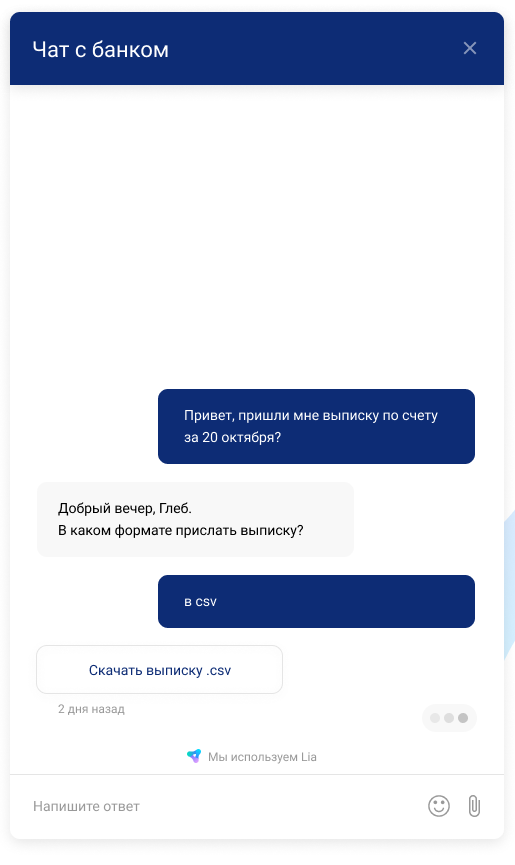

Now let's try something more complicated. In a bank chat, customers often ask for their account details. Let's learn how to send them to the chat or by email using lialang.

```
if intent(_) or intent(___) {
    reaction(___) {
        if intent(__) {
            reaction(___)
        }
    }
}
if intent(___) {
    reaction(___)
}
```

Two situations are described here:

- Lia, if you are asked to send the details, send them to the chat. If they then say “I need them by email”, send them by email.

- Lia, if you are asked right away to send the details by email, send them by email.

So lialang does its job: it works in context. Even if a person just writes “by email, please”, the robot understands that we are talking about the account details.
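The two situations above can also be written as a small state machine in Python. This is a hypothetical sketch: intent names such as `send_details` and `by_email` are invented for illustration, and intent recognition is assumed to have already happened. The key is `last_reaction`: “by email, please” only means something because the previous reaction sent the details.

```python
from typing import Optional

SENT_BY_EMAIL = "Done, the details have been sent to your email."


class DetailsScript:
    """Context-aware handling of requests for account details.

    Intent names (send_details, need_details, by_email, details_by_email) are hypothetical.
    """

    def __init__(self) -> None:
        self.last_reaction: Optional[str] = None  # the context: what Lia did last

    def on_intent(self, intent: str) -> Optional[str]:
        # Situation 1: details requested, send them to the chat
        if intent in ("send_details", "need_details"):
            self.last_reaction = "details_to_chat"
            return "Here are your account details."
        # Nested branch: "by email, please" only makes sense right after that
        if intent == "by_email" and self.last_reaction == "details_to_chat":
            self.last_reaction = "details_to_email"
            return SENT_BY_EMAIL
        # Situation 2: details requested by email straight away
        if intent == "details_by_email":
            self.last_reaction = "details_to_email"
            return SENT_BY_EMAIL
        return None  # not handled by this script
```

Without the preceding request, `on_intent("by_email")` returns `None`: the same phrase is meaningless out of context.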

Lia has learned to handle complex scenarios: at the moment the user needs it, she can read data from or write data to a CRM, send SMS, help with payment, or chat about life.

Gradually, we improved the language: we added variables, functions, entities (dates, addresses, phone numbers, names, etc.), states, and other useful constructs. This made writing in it even more convenient.

Case study: how we automated support in three banks

As soon as we had built the technology, we had to polish it urgently: our first customer had arrived. VTB needed to automate support in a new online bank for entrepreneurs.

We got off to quite a successful start, especially for a product built in four months. Our hybrid robot for VTB was based on neural networks and was effective from day one: it answered more than 800 questions, supported several complex scenarios (statements, tariff changes, user settings), and spoke like a person. As a result, within two months Lia reduced the support load by 74%. It became clear that the idea of automating support works.

Next, we used Lia to automate FAQs at Rocketbank and DeloBank, and within two weeks they were closing 32% of requests without operators.

It might seem we had made it. However, after the first customers it became clear that the concept needed to change. It was hell: we had to modify scripts manually, make edits, and build out branches. It's the same with simple bots, only harder and more labor-intensive. In this situation, scaling was difficult.

So we decided to build a tool in which clients could assemble even a complex assistant themselves, while we would only help with tutorials and user education.

Lia Platform and engine for interfaces

So we decided to build a platform for people who know nothing about development. Although lialang has fewer than ten different constructs, not every manager will learn it just to create their own bot. Managers love the mouse.

Therefore, we started designing an interface that could do everything lialang can do: handle nested branches and transitions from one script to another without trouble and, most importantly, let anyone create scripts, not just our programmers.

See how it looks:

Dialogues are non-linear, and writing a universal engine for every kind of conversation flow is very difficult. But by the time we started thinking about flows, we already had lialang, and it became the engine.

Whatever interface the designers come up with, we don't code new brains for it; we only write a small translator from the interface markup to lialang code. If the interface is redone, we only need to change the translator. Thanks to this, the interface team and the core team can work independently.
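A hypothetical sketch of such a translator in Python. The interface is assumed to export a tree of nodes in a made-up format (not Lia's), and the translator walks it to emit lialang-style text using the `if intent(...) { reaction(...) }` pattern shown earlier. Redesigning the interface would then only mean changing this function.

```python
def translate(node: dict, indent: int = 0) -> str:
    """Turn an interface node {intent, reaction, children} into lialang-style code."""
    pad = "    " * indent
    lines = [f"{pad}if intent({node['intent']}) {{"]
    children = node.get("children", [])
    if children:
        # Nested branches go inside the reaction block
        lines.append(f"{pad}    reaction({node['reaction']}) {{")
        for child in children:
            lines.append(translate(child, indent + 2))
        lines.append(f"{pad}    }}")
    else:
        lines.append(f"{pad}    reaction({node['reaction']})")
    lines.append(f"{pad}}}")
    return "\n".join(lines)


# Hypothetical interface export: the "details to chat, then by email" scenario
ui_node = {
    "intent": "send_details",
    "reaction": "details_to_chat",
    "children": [{"intent": "by_email", "reaction": "details_to_email"}],
}
print(translate(ui_node))
```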

How does the platform for creating virtual assistants work?

To assemble their own virtual assistant in Lia, a user goes through three steps.

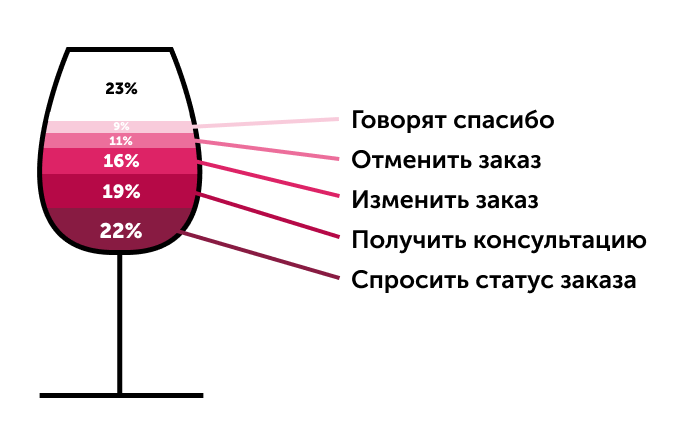

Step 1. Upload the chat history with users so the robot can identify the main scenarios

If the client has a history of conversations with users, they can upload it to the system and get clusters of the most popular queries. These clusters make it very convenient to create intentions.

Over time, Lia becomes more effective. Messages the robot could not answer are again grouped into clusters: for example, “who are you?”, “what's your name?” and “are you a robot?” will fall into one group. So the platform learns semi-automatically: the user sees where the gaps are and closes them by adding new scenarios. As a result, the share of covered requests grows from 30% to 70% in six months.
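A minimal sketch of grouping unanswered messages, assuming greedy single-pass clustering with a word-overlap threshold. Lia would rely on its trained similarity model instead: with plain word overlap, “what's your name?” would not join “who are you?”. The 0.4 threshold is an arbitrary assumption.

```python
import re


def words(text: str) -> frozenset[str]:
    return frozenset(re.findall(r"[a-z']+", text.lower()))


def jaccard(a: frozenset, b: frozenset) -> float:
    return len(a & b) / len(a | b) if a | b else 0.0


def cluster(messages: list[str], threshold: float = 0.4) -> list[list[str]]:
    """Greedy clustering: add each message to the first cluster whose first message
    is similar enough (threshold is an assumption), otherwise start a new cluster."""
    clusters: list[list[str]] = []
    for msg in messages:
        for group in clusters:
            if jaccard(words(msg), words(group[0])) >= threshold:
                group.append(msg)
                break
        else:
            clusters.append([msg])
    # Largest clusters first: these are the gaps worth closing first
    return sorted(clusters, key=len, reverse=True)


unanswered = ["who are you?", "Who are you?", "are you a robot?",
              "delivery price?", "what is the delivery price?"]
for group in cluster(unanswered):
    print(len(group), group)
```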

If there is no chat history, skip this step and go straight to the second one: predict which requests users will bring to the assistant.

Step 2. Write down the intents: 10-20 variants for the most frequent user requests

At the second stage, we describe intentions for the most popular queries, with 10-20 example phrases each: thanks to neural networks, that many is enough. A phrase like “I want to order wine” teaches the bot to recognize similar user requests, for example “Get me some wine” or “Order wine”.

The assistant also understands and extracts entities: city names, phone numbers, addresses, times, periods, dates, and profanity, even from a message like “I'm exhausted, bring a box of wine tomorrow”.
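To give a sense of what entity extraction produces, here is a rule-based Python sketch that pulls a relative date and a phone number out of a message. It is a toy built on assumptions (regular expressions, a fixed reference date, a made-up phone pattern) and not how Lia's neural extractors work.

```python
import re
from datetime import date, timedelta

# Toy rules (assumptions): relative day phrases and a loose phone-number pattern
RELATIVE_DAYS = {"today": 0, "tomorrow": 1, "day after tomorrow": 2}
PHONE = re.compile(r"\+?\d[\d\s()-]{8,}\d")


def extract(message: str, today: date) -> dict:
    """Return the entities found in the message: a date and/or a phone number."""
    text = message.lower()
    found = {}
    # Check longer phrases first so "day after tomorrow" is not read as "tomorrow"
    for phrase in sorted(RELATIVE_DAYS, key=len, reverse=True):
        if phrase in text:
            found["date"] = today + timedelta(days=RELATIVE_DAYS[phrase])
            break
    phone = PHONE.search(message)
    if phone:
        found["phone"] = re.sub(r"[^\d+]", "", phone.group())  # keep digits and "+"
    return found


print(extract("I'm exhausted, bring a box of wine tomorrow", date(2019, 8, 21)))
```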

In addition, users can add their own entity types and manually annotate training phrases so the assistant learns faster. This is one of the platform's most powerful features: it makes it possible to build robots that work no worse than a human operator.

Step 3. Create a scenario: define answers or actions

Here the user comes up with answers to requests. A simple question-answer scenario (answers to popular questions) usually makes up 95% of an average project.

By the way, the assistant can respond with pictures, videos, and audio files and, if necessary, send a location.

The JumpTo construct is particularly useful: it lets Lia switch from one script to another and then return, solving several problems along the way. This helps when the dialogue needs to take a detour and then get back on track, for example to answer a clarifying question.

Example of a JumpTo dialogue:

Assistant: We need to clarify the delivery address, because the recipient is not responding.

Client: Which order is this about? (JumpTo: clarify the order)

A: Your order of August 21: Antigal "Uno" Malbec.

Assistant: So can we clarify the current delivery address? (Return to the main script)
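A jump that later returns behaves like a call stack of scripts. A hypothetical Python sketch, assuming each script is just a list of prompts: `jump_to` pushes a side script, and once it finishes the dialogue resumes where the main script left off. Lia's actual JumpTo semantics may differ.

```python
from typing import Optional


class ScriptRunner:
    """Runs scripts on a stack so that a detour (JumpTo) can return to the main track."""

    def __init__(self, scripts: dict[str, list[str]], start: str) -> None:
        self.scripts = scripts
        self.stack = [[start, 0]]  # frames: [script name, index of the next step]

    def jump_to(self, name: str) -> None:
        """Start a side script; the current one resumes when it finishes."""
        self.stack.append([name, 0])

    def next_message(self) -> Optional[str]:
        while self.stack:
            frame = self.stack[-1]
            steps = self.scripts[frame[0]]
            if frame[1] < len(steps):
                frame[1] += 1
                return steps[frame[1] - 1]
            self.stack.pop()  # script finished: return to the one that jumped
        return None


# Hypothetical scripts reproducing the dialogue above
SCRIPTS = {
    "clarify_address": [
        "We need to clarify the delivery address, because the recipient is not responding.",
        "So can we clarify the current delivery address?",
    ],
    "clarify_order": ['Your order of August 21: Antigal "Uno" Malbec.'],
}

runner = ScriptRunner(SCRIPTS, "clarify_address")
print(runner.next_message())     # asks about the address
runner.jump_to("clarify_order")  # client: "Which order is this about?"
print(runner.next_message())     # answers about the order
print(runner.next_message())     # back on the main script
```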

When creators want their assistant not only to respond with text but also to take actions, they can bring in a programmer to write JS snippets. We let you run JavaScript directly inside scripts: call an external API, send an email, or perform another complex action.

4. Summary

An assistant can integrate with anything: it can talk to a person on the phone, in instant messengers, or in a widget the client places on their website.

Assembling a smart assistant on the platform takes from a couple of hours to a month. After that, the robot learns to genuinely understand requests and recognize patterns, which takes about six months (with a human supervising the whole time). A business can hand most routine tasks over to clever Lia: from coordinating deliveries and calling a taxi to advising clients.

By the way, we have already migrated the completed bank projects to the platform. They work just as well but are much easier to moderate.

In the near future, we plan to add extractors, which will let our users extract more complex data (for example, the robot will understand the phrase “the day after tomorrow after lunch”). We will also finish version control so that clients can quickly roll out and roll back project versions. And we will roll out a role system for organizations.

We expect Gartner's forecast to come true: by 2022, up to 70% of all customer interactions will go through some form of AI. As we see it, constructors like Lia will help move customer service to robots even faster.