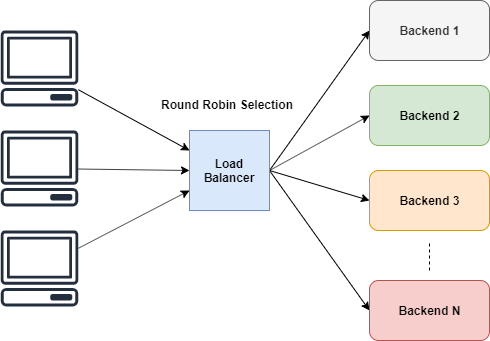

Load balancers play a key role in web architecture. They allow you to distribute the load across several backends, thereby improving scalability. And since we have several backends configured, the service becomes highly available, because in the event of a failure on one server, the balancer can choose another working server.

Having played with professional balancers like NGINX, I tried to create a simple balancer for fun. I wrote it on Go, it is a modern language that supports full parallelism. The standard library in Go has many features and allows you to write high-performance applications with less code. In addition, for ease of distribution, it generates a single statically linked binary.

How our balancer works

Different algorithms are used to distribute the load among the backends. For example:

- Round Robin - the load is distributed evenly, taking into account the same computing power of the servers.

- Weighted Round Robin - Depending on the processing power, servers can be assigned different weights.

- Least Connections - the load is distributed across servers with the least number of active connections.

In our balancer, we implement the simplest algorithm - Round Robin.

Selection at Round Robin

The Round Robin algorithm is simple. It gives all performers the same opportunity to complete tasks.

Select servers in Round Robin to handle incoming requests.

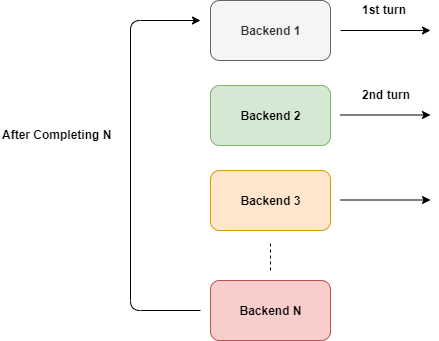

As shown in the illustration, the algorithm selects the servers in a circle, cyclically. But we cannot select them directly , right?

And if the server is lying? We probably do not need to send traffic to it. That is, the server cannot be used directly until we bring it to the desired state. You need to direct traffic only to those servers that are up and running.

Define the structure

We need to track all the details related to the backend. You need to know if he is alive, and track the URL. To do this, we can define the following structure:

type Backend struct { URL *url.URL Alive bool mux sync.RWMutex ReverseProxy *httputil.ReverseProxy }

Do not worry, I will explain the meaning of the fields in the Backend.

Now in the balancer you need to somehow track all the backends. To do this, you can use Slice and a variable counter. Define it in ServerPool:

type ServerPool struct { backends []*Backend current uint64 }

Using ReverseProxy

As we have already determined, the essence of the balancer is in distributing traffic to different servers and returning results to the client. As the Go documentation says:

ReverseProxy is an HTTP handler that takes incoming requests and sends it to another server, proxying responses back to the client.

Exactly what we need. No need to reinvent the wheel. You can simply stream our requests through

ReverseProxy

.

u, _ := url.Parse("http://localhost:8080") rp := httputil.NewSingleHostReverseProxy(u) // initialize your server and add this as handler http.HandlerFunc(rp.ServeHTTP)

Using

httputil.NewSingleHostReverseProxy(url)

you can initialize

ReverseProxy

, which will broadcast requests to the passed

url

. In the example above, all requests were sent to localhost: 8080, and the results were sent to the client.

If you look at the signature of the ServeHTTP method, you can find the signature of the HTTP handler in it. Therefore, you can pass it to

HandlerFunc

in

http

.

Other examples are in the documentation .

For our balancer, you can initiate

ReverseProxy

with an associated

URL

in

Backend

so that ReverseProxy routes requests to the

URL

.

Server selection process

During the next server selection, we need to skip lying servers. But you need to organize the count.

Numerous clients will connect to the balancer, and when each of them asks the next node to transfer traffic, a race condition may occur. To prevent this, we can block

ServerPool

with

mutex

. But this will be redundant, besides we do not want to block

ServerPool

. We just need to increase the counter by one.

The best solution to meet these requirements would be atomic incrementation. Go supports it with the

atomic

package.

func (s *ServerPool) NextIndex() int { return int(atomic.AddUint64(&s.current, uint64(1)) % uint64(len(s.backends))) }

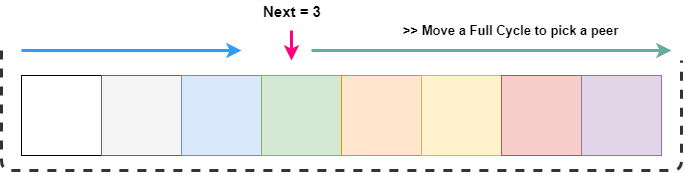

We atomically increase the current value by one and return the index by changing the length of the array. This means that the value should always lie in the range from 0 to the length of the array. In the end, we will be interested in a specific index, not the entire counter.

Choosing a live server

We already know that our requests are cyclically rotated across all servers. And we only need to skip the idle.

GetNext()

always returns a value ranging from 0 to the length of the array. At any time, we can get the next node, and if it is inactive, we need to search further through the array as part of the loop.

We loop through the array.

As shown in the illustration, we want to go from the next node to the end of the list. This can be done using

next + length

. But to select an index, you need to limit it to the scope of the array. This can easily be done using the modify operation.

After we found a working server during the search, it should be marked as current:

// GetNextPeer returns next active peer to take a connection func (s *ServerPool) GetNextPeer() *Backend { // loop entire backends to find out an Alive backend next := s.NextIndex() l := len(s.backends) + next // start from next and move a full cycle for i := next; i < l; i++ { idx := i % len(s.backends) // take an index by modding with length // if we have an alive backend, use it and store if its not the original one if s.backends[idx].IsAlive() { if i != next { atomic.StoreUint64(&s.current, uint64(idx)) // mark the current one } return s.backends[idx] } } return nil }

Avoiding the race condition in the Backend structure

Here you need to remember an important issue. The

Backend

structure contains a variable that several goroutines can modify or request at the same time.

We know that goroutines will read the variable more than write to it. Therefore, for serializing access to

Alive

we chose

RWMutex

.

// SetAlive for this backend func (b *Backend) SetAlive(alive bool) { b.mux.Lock() b.Alive = alive b.mux.Unlock() } // IsAlive returns true when backend is alive func (b *Backend) IsAlive() (alive bool) { b.mux.RLock() alive = b.Alive b.mux.RUnlock() return }

Balancing requests

Now we can formulate a simple method for balancing our requests. It will fail only if all servers fall.

// lb load balances the incoming request func lb(w http.ResponseWriter, r *http.Request) { peer := serverPool.GetNextPeer() if peer != nil { peer.ReverseProxy.ServeHTTP(w, r) return } http.Error(w, "Service not available", http.StatusServiceUnavailable) }

This method can be passed to the HTTP server simply as a

HandlerFunc

.

server := http.Server{ Addr: fmt.Sprintf(":%d", port), Handler: http.HandlerFunc(lb), }

We route traffic only to running servers

Our balancer has a serious problem. We do not know if the server is running. To find out, you need to check the server. There are two ways to do this:

- Active: executing the current request, we find that the selected server is not responding, and mark it as idle.

- Passive: you can ping servers at some interval and check the status.

Actively checking running servers

If any error

ReverseProxy

initiates the

ErrorHandler

callback function. This can be used to detect failures:

proxy.ErrorHandler = func(writer http.ResponseWriter, request *http.Request, e error) { log.Printf("[%s] %s\n", serverUrl.Host, e.Error()) retries := GetRetryFromContext(request) if retries < 3 { select { case <-time.After(10 * time.Millisecond): ctx := context.WithValue(request.Context(), Retry, retries+1) proxy.ServeHTTP(writer, request.WithContext(ctx)) } return } // after 3 retries, mark this backend as down serverPool.MarkBackendStatus(serverUrl, false) // if the same request routing for few attempts with different backends, increase the count attempts := GetAttemptsFromContext(request) log.Printf("%s(%s) Attempting retry %d\n", request.RemoteAddr, request.URL.Path, attempts) ctx := context.WithValue(request.Context(), Attempts, attempts+1) lb(writer, request.WithContext(ctx)) }

In developing this error handler, we used the capabilities of closures. This allows us to capture external variables such as server URLs into our method. The handler checks the retry counter, and if it is less than 3, then we again send the same request to the same server. This is done because, due to temporary errors, the server may drop our requests, but it soon becomes available (the server may not have free sockets for new clients). So you need to set the delay timer for a new attempt after about 10 ms. With each request we increase the counter of attempts.

After the failure of each attempt, we mark the server as idle.

Now you need to assign a new server for the same request. We will do this using the attempt counter using the

context

package. After increasing the counter of attempts, we pass it to

lb

to select a new server to process the request.

We cannot do this indefinitely, so we will check in

lb

whether the maximum number of attempts has been reached before continuing with the processing of the request.

You can simply get the attempt counter from the request, if it reaches the maximum, then we interrupt the request.

// lb load balances the incoming request func lb(w http.ResponseWriter, r *http.Request) { attempts := GetAttemptsFromContext(r) if attempts > 3 { log.Printf("%s(%s) Max attempts reached, terminating\n", r.RemoteAddr, r.URL.Path) http.Error(w, "Service not available", http.StatusServiceUnavailable) return } peer := serverPool.GetNextPeer() if peer != nil { peer.ReverseProxy.ServeHTTP(w, r) return } http.Error(w, "Service not available", http.StatusServiceUnavailable) }

This is a recursive implementation.

Using the context package

The

context

package allows you to save useful data in HTTP requests. We will actively use this to track data related to requests -

Attempt

and

Retry

counters.

First, you need to set the keys for the context. It is recommended to use not string, but unique numerical values. Go has an

iota

keyword for incremental implementation of constants, each of which contains a unique value. This is a great solution for defining numeric keys.

const ( Attempts int = iota Retry )

You can then extract the value, as we usually do with the

HashMap

. The default value may depend on the current situation.

// GetAttemptsFromContext returns the attempts for request func GetRetryFromContext(r *http.Request) int { if retry, ok := r.Context().Value(Retry).(int); ok { return retry } return 0 }

Passive Server Validation

Passive checks identify and recover fallen servers. We ping them at a certain interval to determine their status.

To ping, try to establish a TCP connection. If the server responds, we mark it working. This method can be adapted to call specific endpoints like

/status

. Make sure to close the connection after it is created to reduce the additional load on the server. Otherwise, he will try to maintain this connection and will eventually exhaust his resources.

// isAlive checks whether a backend is Alive by establishing a TCP connection func isBackendAlive(u *url.URL) bool { timeout := 2 * time.Second conn, err := net.DialTimeout("tcp", u.Host, timeout) if err != nil { log.Println("Site unreachable, error: ", err) return false } _ = conn.Close() // close it, we dont need to maintain this connection return true }

Now you can iterate the servers and mark their statuses:

// HealthCheck pings the backends and update the status func (s *ServerPool) HealthCheck() { for _, b := range s.backends { status := "up" alive := isBackendAlive(b.URL) b.SetAlive(alive) if !alive { status = "down" } log.Printf("%s [%s]\n", b.URL, status) } }

To run this code periodically, you can run a timer in Go. It will allow you to listen to events in the channel.

// healthCheck runs a routine for check status of the backends every 2 mins func healthCheck() { t := time.NewTicker(time.Second * 20) for { select { case <-tC: log.Println("Starting health check...") serverPool.HealthCheck() log.Println("Health check completed") } } }

In this code, the

<-tC

channel will return a value every 20 seconds.

select

allows you to define this event. In the absence of a

default

situation, it waits until at least one case can be executed.

Now run the code in a separate goroutine:

go healthCheck()

Conclusion

In this article, we examined many questions:

- Round Robin Algorithm

- ReverseProxy from the standard library

- Mutexes

- Atomic operations

- Short circuits

- Callbacks

- Selection operation

There are many more ways to improve our balancer. For example:

- Use heap to sort live servers to reduce search scope.

- Collect statistics.

- Implement the weighted round-robin algorithm with the least number of connections.

- Add support for configuration files.

And so on.

The source code is here .