- Understand descriptions and specifications of encryption devices

- Distinguish “paper” characteristics from the ones that really matter in real life

- Go beyond the usual set of vendors and include any products that are suitable for solving the task

- Ask the right questions in negotiations

- Compose Tender Requirements (RFP)

- Understand which characteristics you will have to sacrifice if a particular device model is selected

What can be evaluated

In principle, the approach applies to any standalone device suitable for encrypting network traffic between remote Ethernet segments (intersite encryption). That is, “boxes” in a separate enclosure (blades and modules for a chassis also count) that connect through one or more Ethernet ports to a local (campus) Ethernet network carrying unencrypted traffic, and through another port or ports to a channel or network over which already encrypted traffic is sent to other, remote segments. Such an encryption solution can be deployed in a private or carrier network over various types of “transport” (dark fiber, frequency-division multiplexing equipment, switched Ethernet, or pseudowires laid through a network with a different routing architecture, most often MPLS), with or without VPN technology.

Network Encryption in a Distributed Ethernet Network

The devices themselves can be either specialized (intended exclusively for encryption) or multifunctional (hybrid, converged), that is, they also perform other functions (for example, firewall or router). Different vendors assign their devices to different classes and categories, but that does not matter: the only important thing is whether they can encrypt intersite traffic and what characteristics they have.

Just in case, let me remind you that “network encryption”, “traffic encryption” and “encryption” are informal terms, although they are often used. In Russian regulatory legal acts (including those that introduce GOSTs), you most likely will not find them.

Encryption Levels and Transfer Modes

Before describing the characteristics used for evaluation, we first have to deal with one important thing, namely the “encryption level”. I have noticed that it is often mentioned both in official vendor documents (descriptions, manuals and so on) and in informal discussions (in negotiations, at trainings). It seems as if everyone knows exactly what is meant, yet I have personally witnessed some confusion.

So what exactly is an “encryption level”? Clearly, it is the number of the level of the ISO/OSI reference network model at which encryption takes place. Let us read GOST R ISO 7498-2–99 “Information technology. Interconnection of open systems. The basic reference model. Part 2. Information Security Architecture”. From this document it follows that the level of the confidentiality service (one of whose mechanisms is precisely encryption) is the level of the protocol whose service data unit (“payload”, user data) is encrypted. As the standard also says, the service can be provided either at the same level, “on its own”, or with the help of a lower level (this is how it is most often implemented in MACsec, for example).

In practice, two modes of transmitting encrypted information over the network are possible (IPsec immediately comes to mind, but the same modes are found in other protocols too). In transport mode (sometimes also called native), only the service data unit is encrypted, while the headers remain “open”, unencrypted (sometimes extra fields with service information of the encryption algorithm are added, and other fields are modified or recalculated). In tunnel mode, the entire protocol data unit (that is, the packet itself) is encrypted and encapsulated in a service data unit of the same or a higher level, that is, it is wrapped in new headers.

An encryption level combined with a transmission mode is neither good nor bad in itself, so one cannot say, for example, that L3 in transport mode is better than L2 in tunnel mode. It is just that many of the characteristics by which devices are evaluated depend on them, for example flexibility and compatibility. To work in transport mode over an L1 network (bitstream relay), an L2 network (frame switching) or an L3 network (packet routing), you need a solution that encrypts at the same or a higher level (otherwise the address information will be encrypted and the data will not reach its destination), whereas tunnel mode overcomes this limitation (at the cost of other important characteristics).

Transport and tunnel L2 encryption modes
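
The difference between the two transmission modes can be illustrated with a toy sketch. The `Frame` type, the `toy_encrypt` placeholder (a constant XOR, not a real cipher) and the function names are all assumptions made for illustration; real protocols also add their own service fields.

```python
from dataclasses import dataclass


@dataclass
class Frame:
    header: bytes   # addressing information (for example, a MAC or IP header)
    payload: bytes  # service data unit ("payload", user data)


def toy_encrypt(data: bytes) -> bytes:
    # Placeholder for a real cipher: XOR with a constant. NOT secure.
    # Applying it twice restores the original bytes.
    return bytes(b ^ 0x5A for b in data)


def transport_mode(frame: Frame) -> Frame:
    # The header stays in the clear; only the payload is encrypted.
    return Frame(header=frame.header, payload=toy_encrypt(frame.payload))


def tunnel_mode(frame: Frame, outer_header: bytes) -> Frame:
    # The whole original frame (header + payload) is encrypted and becomes
    # the payload of a new frame that carries a new outer header.
    inner = toy_encrypt(frame.header + frame.payload)
    return Frame(header=outer_header, payload=inner)


if __name__ == "__main__":
    original = Frame(header=b"SRC>DST", payload=b"user data")
    print(transport_mode(original))
    print(tunnel_mode(original, outer_header=b"ENC1>ENC2"))
```

In the transport case the network between the encryptors still sees the original addresses; in the tunnel case it sees only the outer header, and the frame grows by the length of that header.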

Now let's move on to the analysis of the characteristics.

Performance

For network encryption, performance is a complex, multidimensional concept. A model that is superior in one performance characteristic may be inferior in another. Therefore, it is always useful to consider all the components of encryption performance and their impact on the performance of the network and the applications that use it. An analogy with a car is apt here: not only the top speed matters, but also the acceleration time to 100 km/h, fuel consumption and so on. Vendors and their potential customers pay great attention to performance characteristics. As a rule, it is by performance that devices are ranked within a vendor's product line.

Clearly, performance depends on the complexity of the network and cryptographic operations performed on the device (including how well these tasks can be parallelized and pipelined), as well as on the performance of the hardware and the quality of the firmware. That is why higher-end models use more powerful hardware, and sometimes it can be extended with additional processors and memory modules. There are several approaches to implementing cryptographic functions: on a general-purpose central processing unit (CPU), on an application-specific integrated circuit (ASIC), or on a field-programmable gate array (FPGA). Each approach has its pros and cons. For example, the CPU can become the encryption bottleneck, especially if the processor has no specialized instructions to support the encryption algorithm (or if they are not used). ASICs lack flexibility: it is not always possible to “reflash” them to improve performance, add new features or fix vulnerabilities. In addition, their use becomes cost-effective only at large production volumes. That is why the “golden mean”, the FPGA, has become so popular. It is on FPGAs that the so-called crypto accelerators are built: built-in or plug-in specialized hardware modules that support cryptographic operations.

Since we are talking specifically about network encryption, it is logical to measure the performance of solutions with the same quantities as for other network devices: throughput, frame loss rate and latency. These quantities are defined in RFC 1242. By the way, this RFC says nothing about the frequently mentioned delay variation (jitter). How should these quantities be measured? I have not found a methodology approved in any standard (official or unofficial, such as an RFC) specifically for network encryption. It would be logical to use the methodology for network devices set out in RFC 2544. Many vendors follow it: many, but not all. For example, some feed test traffic in only one direction instead of both, as the standard recommends. Anyway.

Measuring the performance of network encryption devices still has its own specifics. First, it is correct to carry out all measurements for a pair of devices: although the encryption algorithms are symmetric, the delays and packet losses during encryption and decryption will not necessarily be equal. Second, it makes sense to measure the delta, that is, the effect of network encryption on overall network performance, by comparing two configurations: without and with encryption devices. Or, for hybrid devices that combine several functions besides network encryption, with encryption switched off and on. This effect can vary and depends on how the encryption devices are connected, on their operating modes and, finally, on the nature of the traffic. In particular, many performance parameters depend on packet length, which is why solutions are often compared using graphs of these parameters against packet length, or using IMIX, a distribution of traffic by packet length that roughly reflects real traffic. If we take the same baseline configuration without encryption for comparison, we can compare network encryption solutions implemented in different ways without going into those differences: L2 with L3, store-and-forward with cut-through, specialized with converged, GOST with AES and so on.

Connection diagram for performance testing
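
The “delta” approach and the IMIX idea can be sketched in a few lines. The packet mix below is one commonly cited “simple IMIX” and is an assumption here, as are the function names and all measured figures, which are placeholders rather than real test results.

```python
# Assumed "simple IMIX" mix: (IP packet length in bytes, relative weight).
SIMPLE_IMIX = [(40, 7), (576, 4), (1500, 1)]


def average_packet_length(mix):
    """Weighted average packet length of a traffic mix, in bytes."""
    total_weight = sum(weight for _, weight in mix)
    return sum(length * weight for length, weight in mix) / total_weight


def encryption_delta(baseline, encrypted):
    """Difference between measurements with and without encryption.

    Both arguments map a metric name to a value measured on the same test
    bed; the result shows what the pair of encryptors adds or takes away.
    """
    return {name: encrypted[name] - baseline[name] for name in baseline}


if __name__ == "__main__":
    print(f"Average IMIX packet: {average_packet_length(SIMPLE_IMIX):.1f} bytes")
    # Hypothetical measurements, for illustration only.
    without = {"throughput_mbps": 950.0, "latency_us": 20.0}
    with_enc = {"throughput_mbps": 900.0, "latency_us": 28.0}
    print(encryption_delta(without, with_enc))
```

Because both runs share the same baseline, the resulting delta can be compared across devices that encrypt at different levels or in different modes.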

The first characteristic we pay attention to is the “speed” of the encryption device, that is, the bandwidth of its network interfaces, the bit rate. It is determined by the network standards that the interfaces support. For Ethernet, the usual figures are 1 Gb/s and 10 Gb/s. But, as we know, in any network the maximum theoretical throughput at each level is always less than the bandwidth: part of the band is “eaten up” by inter-frame gaps, service headers and so on. If a device is able to receive, process (in our case, encrypt or decrypt) and transmit traffic at the full speed of the network interface, that is, at the maximum theoretical throughput for that level of the network model, it is said to work at line speed. For this, the device must not lose or discard packets at any packet size and any packet rate. If the encryption device does not support line speed, its maximum throughput is usually given in the same gigabits per second (sometimes with the packet length specified: the shorter the packets, the lower the throughput usually is). It is very important to understand that maximum throughput means maximum lossless throughput (even if the device can “pump” traffic through at a higher speed while losing some of the packets). In addition, note that some vendors measure the total throughput across all pairs of ports, so these figures mean little if all encrypted traffic goes through a single port.
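
To see why the theoretical maximum throughput is always below the bit rate, here is a minimal sketch for Ethernet. It assumes the standard 20 bytes of per-frame wire overhead (preamble, start-of-frame delimiter and inter-frame gap); the function names are illustrative.

```python
# Per-frame bytes on the wire that carry no frame data:
# preamble (7) + start-of-frame delimiter (1) + inter-frame gap (12).
WIRE_OVERHEAD_BYTES = 20


def max_frame_rate(bit_rate_bps: float, frame_bytes: int) -> float:
    """Frames per second at line speed for a given frame length."""
    return bit_rate_bps / ((frame_bytes + WIRE_OVERHEAD_BYTES) * 8)


def max_l2_throughput_bps(bit_rate_bps: float, frame_bytes: int) -> float:
    """Theoretical maximum throughput counted in frame bits (L2)."""
    return max_frame_rate(bit_rate_bps, frame_bytes) * frame_bytes * 8


if __name__ == "__main__":
    for size in (64, 512, 1518):
        fps = max_frame_rate(1e9, size)
        mbps = max_l2_throughput_bps(1e9, size) / 1e6
        print(f"{size:5d} bytes: {fps:12.0f} frames/s, {mbps:7.1f} Mb/s")
```

On a 1 Gb/s port, 64-byte frames give about 1.49 million frames per second and roughly 762 Mb/s of frame data. A device works at line speed only if it sustains these rates for every frame size without loss.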

Where is operation at line speed (in other words, without packet loss) particularly important? On links with high bandwidth and long delays (for example, satellite links), where you have to set a large TCP window size to maintain a high transmission speed, and where packet loss drastically reduces network performance.
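
The sensitivity of such links to loss can be estimated roughly. The sketch below computes the bandwidth-delay product (the window needed to fill the link) and uses the well-known Mathis approximation for TCP throughput under random loss. It is a simplification that ignores modern congestion control algorithms, and the link parameters are hypothetical.

```python
import math


def bandwidth_delay_product_bytes(bandwidth_bps: float, rtt_s: float) -> float:
    """TCP window (in bytes) needed to keep a link of this speed full."""
    return bandwidth_bps * rtt_s / 8


def mathis_throughput_bps(mss_bytes: int, rtt_s: float, loss_rate: float) -> float:
    """Approximate TCP throughput limit: (MSS / RTT) * sqrt(3/2) / sqrt(p)."""
    return (mss_bytes * 8 / rtt_s) * math.sqrt(1.5) / math.sqrt(loss_rate)


if __name__ == "__main__":
    # Hypothetical satellite link: 100 Mb/s, 600 ms round-trip time.
    window = bandwidth_delay_product_bytes(100e6, 0.6)
    print(f"Window needed: {window / 1e6:.1f} MB")
    for loss in (1e-6, 1e-4, 1e-2):
        limit = mathis_throughput_bps(1460, 0.6, loss)
        print(f"loss {loss:.0e}: about {limit / 1e6:.2f} Mb/s per TCP flow")
```

Under these assumptions, even a loss rate of one packet in ten thousand caps a single flow at a few megabits per second, far below the link speed.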

But not all of the throughput is used to transfer useful data. We have to reckon with the so-called bandwidth overhead. This is the part of the encryption device's throughput (in percent or in bytes per packet) that is effectively lost, that is, cannot be used to transfer application data. Overhead arises, first, from the growth (padding) of the data field in encrypted network packets (it depends on the encryption algorithm and its mode of operation). Second, from the growth in the length of packet headers (tunnel mode, the service insert of the encryption protocol, the message authentication code and so on, depending on the protocol, the cipher mode and the transmission mode); these overheads are usually the most significant and deserve attention first. Third, from packet fragmentation when the maximum transmission unit (MTU) is exceeded (if the network is able to split a packet that exceeds the MTU into two, duplicating its headers). Fourth, from the additional service (control) traffic that appears on the network between encryption devices (for key exchange, tunnel setup and so on). Low overhead is important where bandwidth is limited. This is especially noticeable with traffic made up of small packets, for example voice, where overhead can “eat” more than half of the channel speed!

Throughput

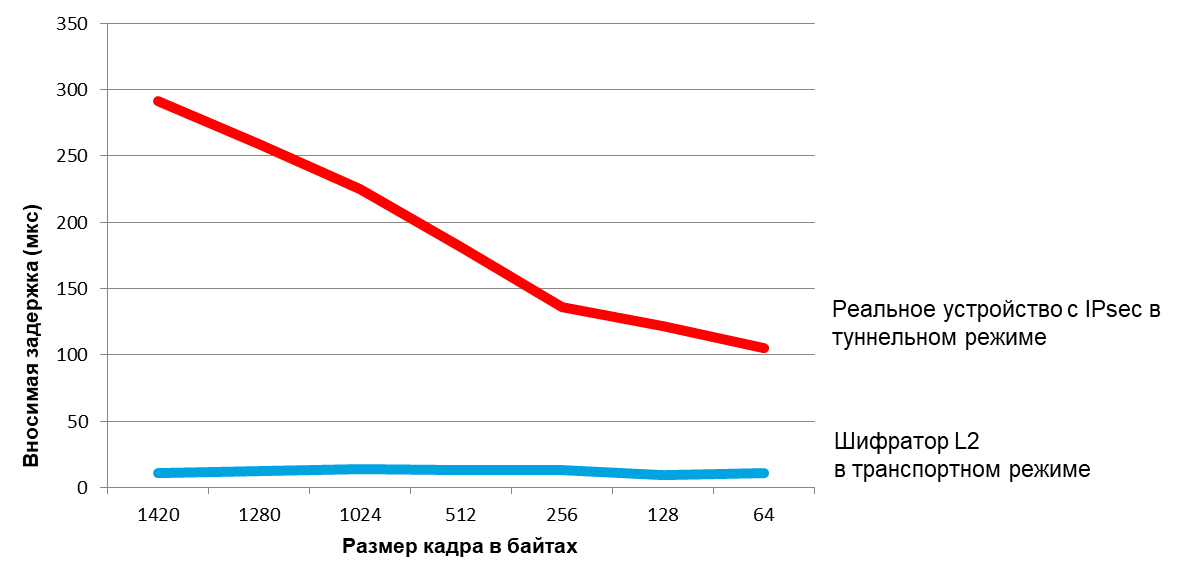
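
The effect of per-packet overhead on small packets is easy to estimate. In this sketch the 70 bytes added per packet and the packet sizes are hypothetical figures chosen for illustration; real values depend on the protocol, the cipher mode and the transmission mode.

```python
def encryption_overhead_share(plain_packet_bytes: int, added_bytes: int) -> float:
    """Share of the encrypted packet taken up by bytes added by encryption."""
    return added_bytes / (plain_packet_bytes + added_bytes)


if __name__ == "__main__":
    ADDED_BYTES = 70  # hypothetical: new headers, protocol insert, MAC, padding
    for name, size in (("voice packet", 60), ("full-size packet", 1500)):
        share = encryption_overhead_share(size, ADDED_BYTES)
        print(f"{name} ({size} bytes): {share:.1%} of the channel is overhead")
```

With these assumed numbers, a 60-byte voice packet loses more than half of the channel to overhead, while a 1500-byte packet loses under 5%.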

Finally, there is the insertion delay: the difference (in fractions of a second) in network delay (the time it takes data to travel from entering the network to leaving it) between data transfer without and with network encryption. Generally speaking, the lower the latency of the network, the more critical the delay introduced by the encryption devices becomes. The delay is introduced by the encryption operation itself (it depends on the encryption algorithm, the block length and the cipher mode, as well as on the quality of the implementation), and by the processing of the network packet in the device. The insertion delay depends both on the packet processing mode (cut-through or store-and-forward) and on platform performance (a “hardware” implementation on FPGA or ASIC is usually faster than a “software” one on a CPU). L2 encryption almost always has a lower insertion delay than encryption at L3 or L4, partly because devices that encrypt at L3/L4 are often converged. For example, for high-speed Ethernet encryptors implemented on FPGA and encrypting at L2, the delay due to the encryption operation is vanishingly small: sometimes, when encryption is enabled on a pair of devices, the total delay they introduce even decreases! A small delay is important where it is comparable to the total delays in the channel, including the signal propagation delay, which is approximately 5 μs per kilometer. That is, for metropolitan networks (tens of kilometers across), microseconds can matter a great deal. Examples are synchronous database replication, high-frequency trading and blockchain.

Insertion Delay
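
How much the insertion delay matters relative to distance can be estimated from the 5 μs per kilometer figure given above. The 10 μs insertion delay for a pair of devices is a hypothetical value, and the function names are illustrative.

```python
PROPAGATION_US_PER_KM = 5.0  # approximate propagation delay, from the text


def propagation_delay_us(distance_km: float) -> float:
    """One-way signal propagation delay over the given distance."""
    return distance_km * PROPAGATION_US_PER_KM


def insertion_delay_share(distance_km: float, insertion_delay_us: float) -> float:
    """Share of the total one-way delay contributed by the encryptors."""
    total = propagation_delay_us(distance_km) + insertion_delay_us
    return insertion_delay_us / total


if __name__ == "__main__":
    INSERTION_US = 10.0  # hypothetical delay added by a pair of encryptors
    for km in (20, 200, 2000):
        share = insertion_delay_share(km, INSERTION_US)
        print(f"{km:5d} km: encryptors add {share:.1%} of the total delay")
```

Over 20 km the assumed 10 μs is about 9% of the total delay; over 2000 km it is around 0.1% and hardly noticeable.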

Scalability

Large distributed networks can include many thousands of nodes and network devices and hundreds of local area network segments. It is important that encryption solutions do not impose additional restrictions of their own on the size and topology of a distributed network. This applies primarily to the maximum number of host and network addresses. Such limits can be encountered, for example, when implementing a multipoint topology of an encrypted network (with independent secure connections, or tunnels) or selective encryption (for example, by protocol number or VLAN). If network addresses (MAC, IP, VLAN ID) are used as keys in a table with a limited number of rows, that is where these limits show up.

In addition, large networks often have several structural levels, including a core network, each of which has its own addressing scheme and its own routing policy. To implement this approach, special frame formats (such as Q-in-Q or MAC-in-MAC) and routing protocols are often used. In order not to impede the construction of such networks, encryption devices must correctly handle such frames (that is, in this sense, scalability will mean compatibility - more on that below).

Flexibility

Here we are talking about support for various configurations, connection schemes, topologies and so on. For example, for switched networks based on Carrier Ethernet technologies, this means support for different types of virtual connections (E-Line, E-LAN, E-Tree), different service types (port-based and VLAN-based) and different transport technologies (already listed above). That is, the device must be able to work in both point-to-point and multipoint modes, set up separate tunnels for different VLANs, and tolerate out-of-order delivery of packets inside a secure channel. The ability to choose between different cipher modes (including with or without content authentication) and different packet transmission modes lets you balance strength and performance depending on current conditions.

It is also important to support both private networks, whose equipment belongs to one organization (or is leased by it), and carrier networks, different segments of which are managed by different companies. It is good if the solution allows management both in-house and by an external organization (under the managed service model). In carrier networks another feature is important: support for multi-tenancy (sharing among different customers) in the form of cryptographic isolation of individual customers (subscribers) whose traffic passes through the same set of encryption devices. As a rule, this requires separate sets of keys and certificates for each customer.

If the device is purchased for a specific scenario, then all these features may not be very important - you just need to make sure that the device supports what you need now. But if the solution is acquired “for growth”, to support future scenarios as well, and is chosen as the “corporate standard”, then flexibility will not be superfluous - especially taking into account restrictions on the interoperability of devices of different vendors (more on that below).

Simplicity and convenience

Convenience of maintenance is also a multi-factor concept. Roughly speaking, it is the total time that specialists of a certain qualification need to spend supporting a solution at different stages of its life cycle. If no time is spent at all, and installation, configuration and operation are fully automatic, then the costs are zero and the convenience is absolute. Of course, this does not happen in the real world. A reasonable approximation is the bump-in-the-wire model, or transparent connection, in which adding or removing encryption devices requires no manual or automatic changes to the network configuration. This simplifies maintenance of the solution: you can safely turn the encryption function on and off, and if necessary simply “bypass” the device with a network cable (that is, directly connect the ports of the network equipment to which it was attached). True, there is one drawback: an attacker can do the same. To implement the bump-in-the-wire principle, you have to take into account the traffic of not only the data plane but also the control and management planes: devices must be transparent to them. Therefore, such traffic can be encrypted only when there are no recipients of these types of traffic in the network between the encryption devices, because if it is discarded or encrypted, the network configuration may change when encryption is enabled or disabled. The encryption device can also be transparent to signaling at the physical level. In particular, when it loses the signal, it should pass this loss on (that is, turn off its transmitters) in the direction of the signal.

Support for the separation of duties between the information security and IT departments, in particular the network department, is also important. The encryption solution must support the organization's access control and audit model. The need for interaction between different departments to carry out routine operations should be minimized. Therefore, in terms of convenience, the advantage lies with specialized devices that support only encryption functions and are as transparent as possible to network operations. Simply put, information security staff should have no reason to contact the “networkers” to change network settings. And they, in turn, should have no need to change encryption settings when maintaining the network.

Another factor is the capabilities and convenience of the management tools. They should be clear and logical, and provide import and export of settings, automation and so on. Pay attention right away to which management options are available (usually a proprietary management environment, a web interface and a command line) and which set of functions each of them offers (there are restrictions). An important feature is support for both out-of-band management, that is, through a dedicated management network, and in-band management, that is, through the common network that carries user traffic. Management tools should signal all abnormal situations, including information security incidents. Routine, repetitive operations should be performed automatically. This applies first of all to key management: keys should be generated and distributed automatically. PKI support is a big plus.

Compatibility

This means compatibility of the device with network standards. And not only with industry standards adopted by reputable organizations such as IEEE, but also with proprietary protocols of industry leaders such as Cisco. There are two fundamental ways to ensure compatibility: either through transparency, or through explicit protocol support (when the encryption device becomes one of the network nodes for some protocol and processes that protocol's control traffic). Compatibility with networks depends on the completeness and correctness of the implementation of control protocols. It is important to support different PHY options (speed, transmission medium, coding scheme), Ethernet frames of various formats with any MTU, and various L3 service protocols (primarily of the TCP/IP family).

Transparency is ensured by the mechanisms of mutation (temporarily changing the contents of open headers in traffic between encryptors), skipping (when individual packets remain unencrypted) and offsetting the start of encryption (when packet fields that are normally encrypted are left unencrypted).

How transparency is ensured.

Therefore, always specify how support for a particular protocol is provided. Often support in transparent mode is more convenient and reliable.

Interoperability

This is also compatibility, but in a different sense, namely the ability to work with other models of encryption devices, including those from other manufacturers. Much depends on the state of standardization of encryption protocols. For L1, there are simply no generally accepted encryption standards.

For L2 encryption in Ethernet networks there is the 802.1ae (MACsec) standard, but it uses hop-by-hop rather than end-to-end encryption, and in its original version it is unsuitable for distributed networks. Proprietary extensions have therefore appeared that overcome this limitation (at the expense, of course, of interoperability with equipment from other manufacturers). True, in 2018 support for distributed networks was added to the 802.1ae standard, but there is still no support for GOST encryption algorithm suites. As a rule, proprietary, non-standard L2 encryption protocols are more efficient (in particular, they have lower bandwidth overhead) and more flexible (they allow changing encryption algorithms and modes).

At higher levels (L3 and L4) there are recognized standards, above all IPsec and TLS, but even here things are not so simple. The point is that each of these standards is a set of protocols, each with different versions and with extensions that are mandatory or optional to implement. In addition, some manufacturers prefer to use their own proprietary encryption protocols at L3/L4. Therefore, in most cases you should not count on full interoperability, but it is important that at least different models and different generations from the same manufacturer can work together.

Reliability

To compare different solutions, you can use either the mean time between failures or the availability factor. If these figures are not available (or are not trusted), a qualitative comparison can be made. The advantage goes to devices with convenient management (less risk of configuration errors), to specialized encryptors (for the same reason), and to solutions with minimal time to detect and eliminate a failure, including means of hot standby for individual components and whole devices.
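
The two reliability figures are related by a standard formula: availability equals MTBF divided by the sum of MTBF and the mean time to repair (MTTR). The sketch below applies it; the input values are hypothetical, and the hot-standby estimate assumes that the two devices fail independently and that switchover is instantaneous.

```python
HOURS_PER_YEAR = 8760


def availability(mtbf_hours: float, mttr_hours: float) -> float:
    """Availability factor: MTBF / (MTBF + MTTR)."""
    return mtbf_hours / (mtbf_hours + mttr_hours)


def annual_downtime_hours(avail: float) -> float:
    """Expected downtime per year for a given availability factor."""
    return (1 - avail) * HOURS_PER_YEAR


def hot_standby_availability(avail: float) -> float:
    """Availability of two redundant devices, assuming independent failures."""
    return 1 - (1 - avail) ** 2


if __name__ == "__main__":
    # Hypothetical figures: MTBF of 100,000 hours, 4 hours to restore service.
    single = availability(100_000, 4)
    print(f"Single device: {single:.6f}, "
          f"{annual_downtime_hours(single) * 60:.0f} min/year of downtime")
    print(f"Hot standby pair: {hot_standby_availability(single):.10f}")
```

The formula also shows why detection and recovery time matter: halving the MTTR improves availability about as much as doubling the MTBF.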

Cost

As far as cost is concerned, as with most IT solutions, it makes sense to compare the total cost of ownership. To calculate it, there is no need to reinvent the wheel: use any suitable methodology (for example, from Gartner) and any calculator (for example, the one already used in the organization to calculate TCO). Clearly, for a network encryption solution the total cost of ownership consists of the direct costs of buying or renting the solution itself, of the infrastructure for hosting the equipment, and of deployment, administration and maintenance (whether in-house or by a third party), plus the indirect costs of downtime (caused by lost end-user productivity). There is probably only one subtlety. The impact of solution performance can be accounted for in different ways: either as indirect costs caused by a drop in productivity, or as “virtual” direct costs of purchasing or upgrading and maintaining network equipment that compensates for the drop in network performance caused by encryption. In any case, expenses that are difficult to calculate with sufficient accuracy are better left out of the calculation: that way there will be more confidence in the final figure. And, as usual, it makes sense to compare different devices by TCO for a specific usage scenario, real or typical.
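
As a rough illustration of how the components listed above add up, here is a deliberately simplified sketch. It is not a substitute for a proper TCO methodology: the cost categories are collapsed into a few parameters, discounting is ignored, and all names and figures are hypothetical.

```python
def total_cost_of_ownership(
    purchase: float,
    infrastructure: float,
    deployment: float,
    annual_operations: float,
    annual_downtime_cost: float,
    years: int,
) -> float:
    """Simplified TCO: one-time direct costs plus recurring direct and
    indirect costs over the planning period (no discounting)."""
    direct = purchase + infrastructure + deployment + annual_operations * years
    indirect = annual_downtime_cost * years
    return direct + indirect


if __name__ == "__main__":
    # Hypothetical figures in arbitrary currency units, 5-year horizon.
    device_a = total_cost_of_ownership(100, 20, 10, 15, 5, years=5)
    device_b = total_cost_of_ownership(70, 20, 15, 25, 10, years=5)
    print(f"Device A: {device_a}, Device B: {device_b}")
```

In this made-up example the device that is cheaper to buy turns out more expensive over five years, which is the usual reason to compare by TCO rather than by purchase price.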

Durability

And the last characteristic is the durability of the solution, that is, its resistance to attack. In most cases, durability can only be assessed qualitatively, by comparing different solutions. We must remember that encryption devices are not only a means but also an object of protection. They can be exposed to various threats. In the foreground are threats to confidentiality and threats of replay and modification of messages. These threats can be realized through vulnerabilities of the cipher or its individual modes, and through vulnerabilities in encryption protocols (including at the stages of connection setup and key generation and distribution). The advantage goes to solutions that allow changing the encryption algorithm or switching the cipher mode (at least through a firmware update), to solutions that provide the most complete encryption, hiding from the attacker not only user data but also address and other service information, and to solutions that not only encrypt but also protect messages from replay and modification. For all modern encryption, electronic signature, key generation and other algorithms enshrined in standards, the strength can be assumed to be the same (otherwise you can simply get lost in the wilds of cryptography). Must they be GOST algorithms? Everything is simple here: if the application scenario requires FSB certification for cryptographic information protection tools (and in Russia this is the case for most network encryption scenarios), then we choose only among certified devices. If not, then it makes no sense to exclude devices without certificates from consideration.

Another threat is the threat of hacking, that is, unauthorized access to devices (including through physical access outside and inside the case). The threat can be realized through implementation vulnerabilities, in hardware and in code. Therefore, the advantage goes to solutions with a minimal attack surface over the network, with enclosures protected against physical access (with tamper sensors, protection against probing and automatic erasure of key information when the case is opened), and to those that allow firmware updates when a vulnerability in the code becomes known. There is another way: if all the devices being compared have FSB certificates, then the class of cryptographic information protection tool for which the certificate was issued can be considered an indicator of resistance to hacking.

Finally, another type of threat is errors in configuration and operation, the human factor in its purest form. Here specialized encryptors show another advantage over converged solutions, which are often aimed at seasoned “networkers” and can cause difficulties for “ordinary” generalist information security specialists.

To summarize

In principle, one could propose some integral indicator for comparing different devices, something like

$$K_j = \sum_i p_i r_{ij}$$

where $p_i$ is the weight of indicator $i$ and $r_{ij}$ is the rank of device $j$ on that indicator; any of the characteristics above can be broken down into “atomic” indicators. Such a formula could be useful, for example, when comparing tender proposals according to pre-agreed rules. But you can get by with a simple table like

| Characteristic | Device 1 | Device 2 | ... | Device N |
|---|---|---|---|---|
| Throughput | + | + | +++ | |
| Overhead | + | ++ | +++ | |
| Delay | + | + | ++ | |
| Scalability | +++ | + | +++ | |
| Flexibility | +++ | ++ | + | |
| Interoperability | ++ | + | + | |
| Compatibility | ++ | ++ | +++ | |
| Simplicity and convenience | + | + | ++ | |
| Fault tolerance | +++ | +++ | ++ | |
| Cost | ++ | +++ | + | |
| Durability | ++ | ++ | +++ | |
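
If a single number is wanted after all, a table of this kind can be turned into a weighted sum of ranks. In the sketch below, counting the “+” marks as the rank is an assumption, and the weights and device marks are hypothetical; in a tender they would be agreed in advance.

```python
def rank_from_marks(marks: str) -> int:
    """Convert table marks such as '++' into a numeric rank (assumed rule)."""
    return marks.count("+")


def integral_score(weights: dict, ranks: dict) -> float:
    """Weighted sum of ranks over all characteristics that have a weight."""
    return sum(weight * ranks[name] for name, weight in weights.items())


if __name__ == "__main__":
    # Hypothetical weights (summing to 1) and marks for two devices.
    weights = {"Throughput": 0.4, "Delay": 0.2, "Cost": 0.4}
    devices = {
        "Device 1": {"Throughput": "+", "Delay": "+", "Cost": "++"},
        "Device 2": {"Throughput": "+++", "Delay": "++", "Cost": "+"},
    }
    for name, marks in devices.items():
        ranks = {k: rank_from_marks(v) for k, v in marks.items()}
        print(f"{name}: {integral_score(weights, ranks):.2f}")
```

The result is only as meaningful as the weights, so it is worth checking how the ranking changes when they are varied.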

I will be glad to answer questions and to receive constructive criticism.