Today's short article is about installing “turbine” (blower-style) NVIDIA GeForce GTX 1070 Founders Edition cards in the Nvidia Tesla S2050 server box. The box holds 4 GPU cards and connects them to the server through Nvidia P797 HIC interface cards over a full-width PCI-E 16x 2.0 bus. I modified the box beforehand so that it works with cards other than the Nvidia Tesla M2050.

A bit of history: at the time of the Fermi GPU architecture and the GTX 480/580 generation of graphics cards, Nvidia was "long and successfully" promoting its CUDA GPU architecture and related software, along with specialized Tesla computing cards and professional Quadro graphics cards. These cards stood out for their high double-precision performance, dedicated card firmware (BIOS), and ECC memory error correction.

Large customers got a taste of the advantages of this architecture, but the “chips” themselves were already “too small”. The Nvidia Tesla M2050, a specialized GPU computing card built on the Fermi architecture, had 3 GB of memory on board and only 448 CUDA cores; it was a close relative of the GTX 480. Then came the Tesla M2070 with 6 GB and the M2090 with 6 GB and 512 CUDA cores (a relative of the GTX 580), but this did not change the situation much. More cores were needed, and customers were willing to pay for them.

The simple way, adding more cards to the system, runs into the number of PCI-E 16x slots, which usually does not exceed 4 even in the most expensive gaming PCs and dual-processor servers. In addition, 1U and 2U servers cannot fit full-size graphics cards. But Nvidia really wanted the money, and its engineers solved the problem. There were two solutions. The long one was developing the new Kepler architecture with more cores of an improved design.

The quick one was to build an external “box” with PCI-E 16x switches and its own power supplies, holding four GPU cards and connecting them to the host through P797 HIC interface cards and special cables. Each HIC card connects two GPUs in the box to a single PCI-E 16x 2.0 server slot without reducing the link width. That is how the “magic boxes” of the Nvidia Tesla S2050 server were born, designed for passively cooled GPU cards with a TDP of 225 W. Strictly speaking, though, the Nvidia Tesla S2050 is just an external GPU enclosure: the Dell C410x GPU boxes were later built on its basis, and the S2050 itself was derived from the earlier Tesla S870 box for previous-generation GPUs.
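For a rough sense of what sharing one HIC link between two GPUs means, here is a minimal Python sketch of the theoretical per-direction bandwidth. It assumes the standard PCI-E line rates (2.5/5/8 GT/s per lane for gen 1/2/3, with 8b/10b encoding for gen 1 and 2 and 128b/130b for gen 3), ignores packet and protocol overhead, and takes the worst case in which both GPUs behind one switch transfer at the same time and split the host link evenly. The function names are hypothetical and exist only for this illustration.

```python
# Theoretical PCI-E bandwidth per direction, ignoring packet/protocol overhead.

GEN_PARAMS = {
    # generation: (transfer rate in GT/s per lane, line-encoding efficiency)
    1: (2.5, 8 / 10),      # 8b/10b
    2: (5.0, 8 / 10),      # 8b/10b
    3: (8.0, 128 / 130),   # 128b/130b
}


def link_bandwidth_gbs(gen: int, lanes: int) -> float:
    """Raw per-direction bandwidth of a PCI-E link in GB/s (1 GB = 1e9 bytes)."""
    rate_gt, efficiency = GEN_PARAMS[gen]
    # GT/s * efficiency = usable Gbit/s per lane; divide by 8 to get bytes
    return rate_gt * efficiency * lanes / 8


def per_gpu_share_gbs(gen: int, lanes: int, gpus_per_link: int) -> float:
    """Worst case: every GPU behind the switch transfers at once, splitting the host link evenly."""
    return link_bandwidth_gbs(gen, lanes) / gpus_per_link


if __name__ == "__main__":
    print(f"PCI-E 2.0 x16 host link: {link_bandwidth_gbs(2, 16):.1f} GB/s per direction")
    print(f"Per GPU, 2 GPUs busy on one HIC: {per_gpu_share_gbs(2, 16, 2):.1f} GB/s")
    print(f"PCI-E 3.0 x16 for comparison: {link_bandwidth_gbs(3, 16):.2f} GB/s")
```

Under these assumptions a PCI-E 16x 2.0 host link gives about 8 GB/s per direction, or about 4 GB/s per GPU when both cards on one HIC are busy at once; a card transferring alone gets the full link.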

I have never come across the Nvidia Tesla S870 boxes, although people who have wrote that they did not check which GPU cards were installed. The later S2050, however, added card checking so that users would not install "cards not supplied by the company." The box's built-in microcontroller polls the PCI-E 16x 2.0 slots, and if it does not detect any Tesla M2050 card, it turns off the front fans but leaves the electronics powered. The cards and the PCI-E 16x hardware then overheat, which can cause them to fail. There is a simple solution to this problem.

To use any other GPU cards in this box, whether compute cards or ordinary graphics cards (PCI-E 16x 3.0 cards will run at PCI-E 16x 2.0 here), two modifications to the box are needed. The first is to short the contacts of the lid-open sensor button, located on a narrow backplane in the middle of the case. The button is normally held down by a plastic air duct, which gets displaced when you fit your own cards; with the contacts shorted, the box no longer cuts the power.

The second modification deals with the fans, which the controller shuts down when non-native cards are installed: I cut the blue wire in the front-panel fan harness. The blue wire controls the fan speed, and once it is cut the controller can no longer turn the fans off. The speed is no longer regulated either, but in my opinion this is not critical.

For my purposes I bought 2 Tesla S2050 boxes with cables and interface cards to connect 8 GPUs to an ASUS RS720 server for deep learning work. The boxes are designed for GPUs with passive heatsinks cooled by front-to-back airflow, but the Tesla K20M 5 GB and K20X 6 GB, which have now become affordable, do not have enough memory for me, and the Tesla K40 12 GB is still too expensive. The budget option turned out to be the GTX 1070 8 GB, which miners have now started dumping in large quantities.

Given the heatsink design, only cards with front-to-back airflow suit the box, in other words versions with a centrifugal blower (“turbine”). On cards with one to three axial fans, the heatsink fins run across the card on the heat pipes, so the box's airflow will not cool them properly. On blower cards the fins run lengthwise, which settled my choice in favor of the GTX 1070 Founders Edition. A pair of them was a birthday present from a good friend, Dmitry from Khimki, who is also the main sponsor of my projects.

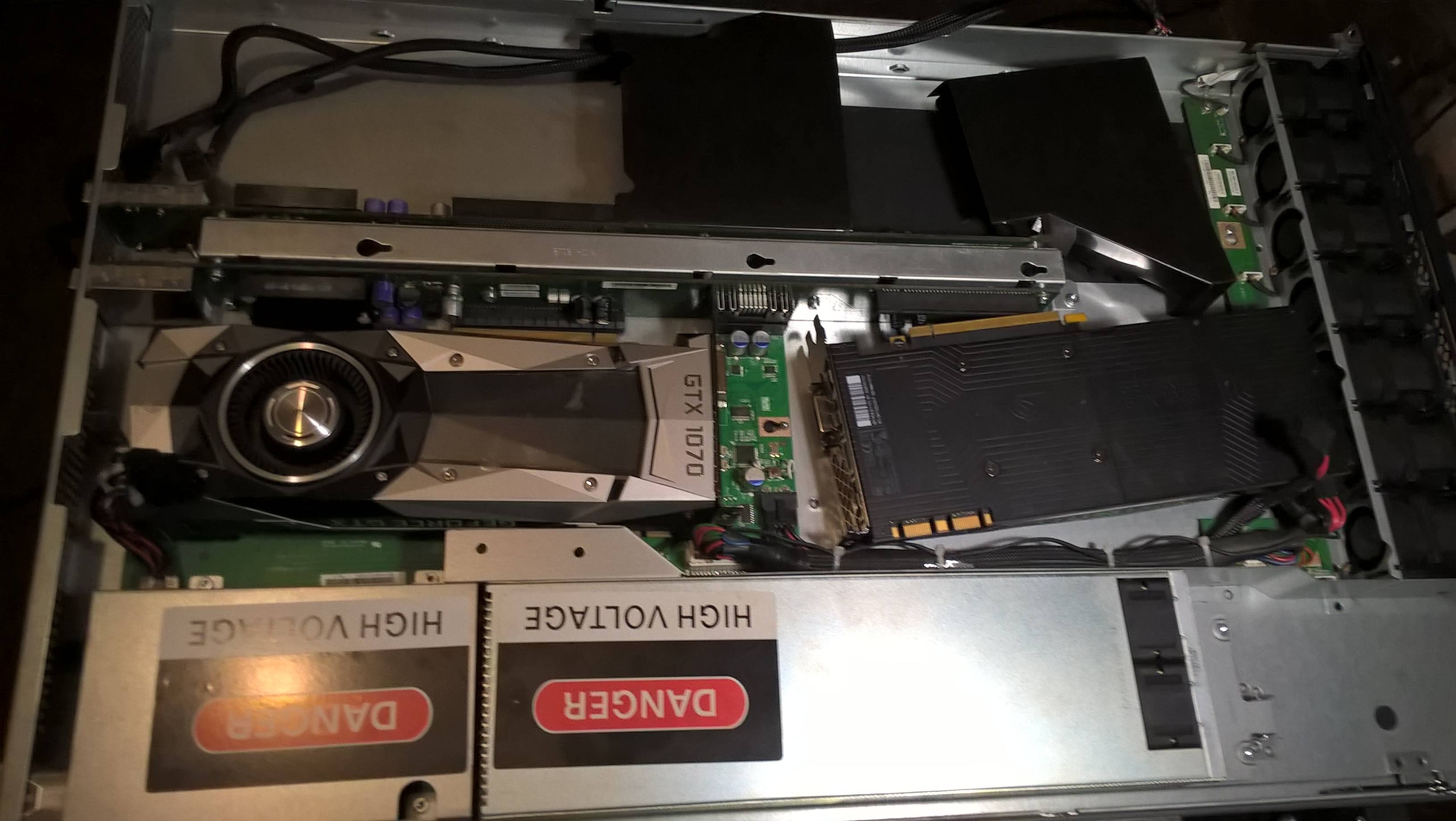

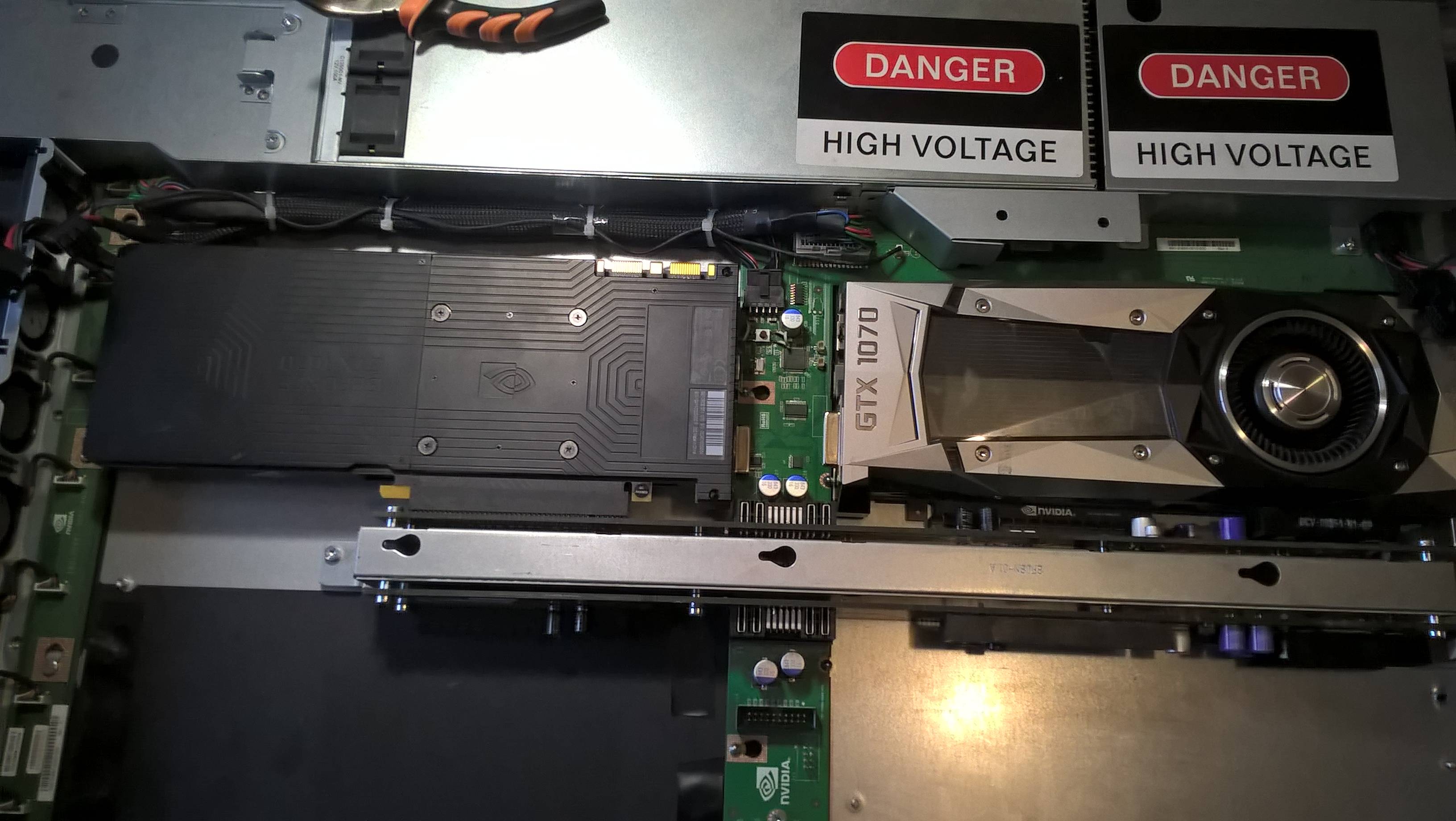

Test-fitting the cards into the box: a photo essay covering all the nuances.

A first look at the overall fit.

I unscrewed the rear mounting brackets from the cards because they got in the way; now for a test fit.

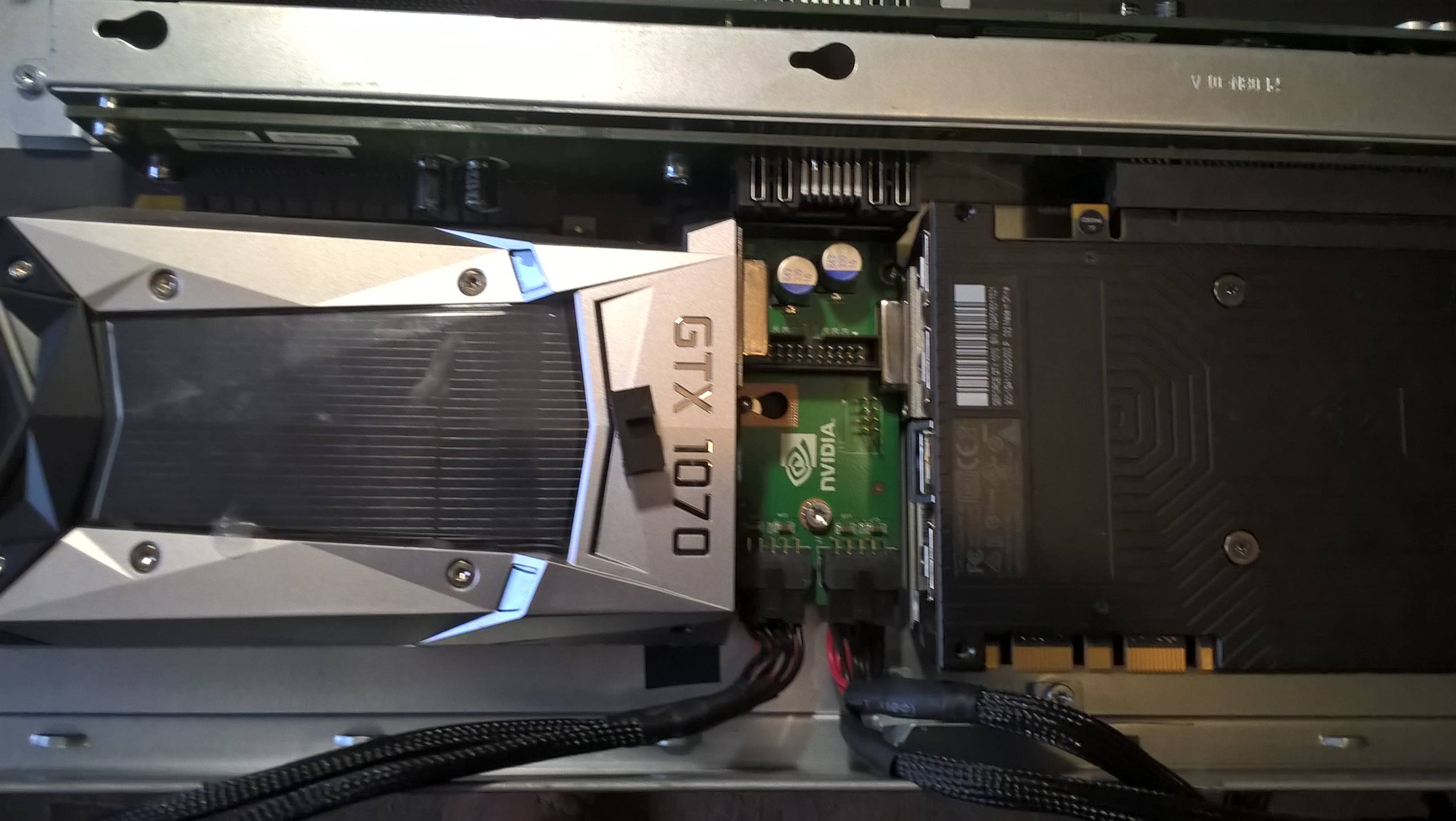

In places the connectors press against the backplane, so you need to lay electrical tape between them to prevent short circuits. Ideally the connectors should be desoldered, of course, but I have neither a soldering station nor a skilled enough friend.

Here the graphics card's connector rests against a connector on the backplane. I solved this by carefully pulling the black plastic housing off the connector pins on the box board with needle-nose pliers. I have nothing to plug into that connector anyway.

In some places you will have to bend the connector housings, placing insulation inside so as not to short the connector terminals.

Continuing the test fit.

Here is the same “modified button”, which keeps the electronics powered even with the lid open or the duct displaced.

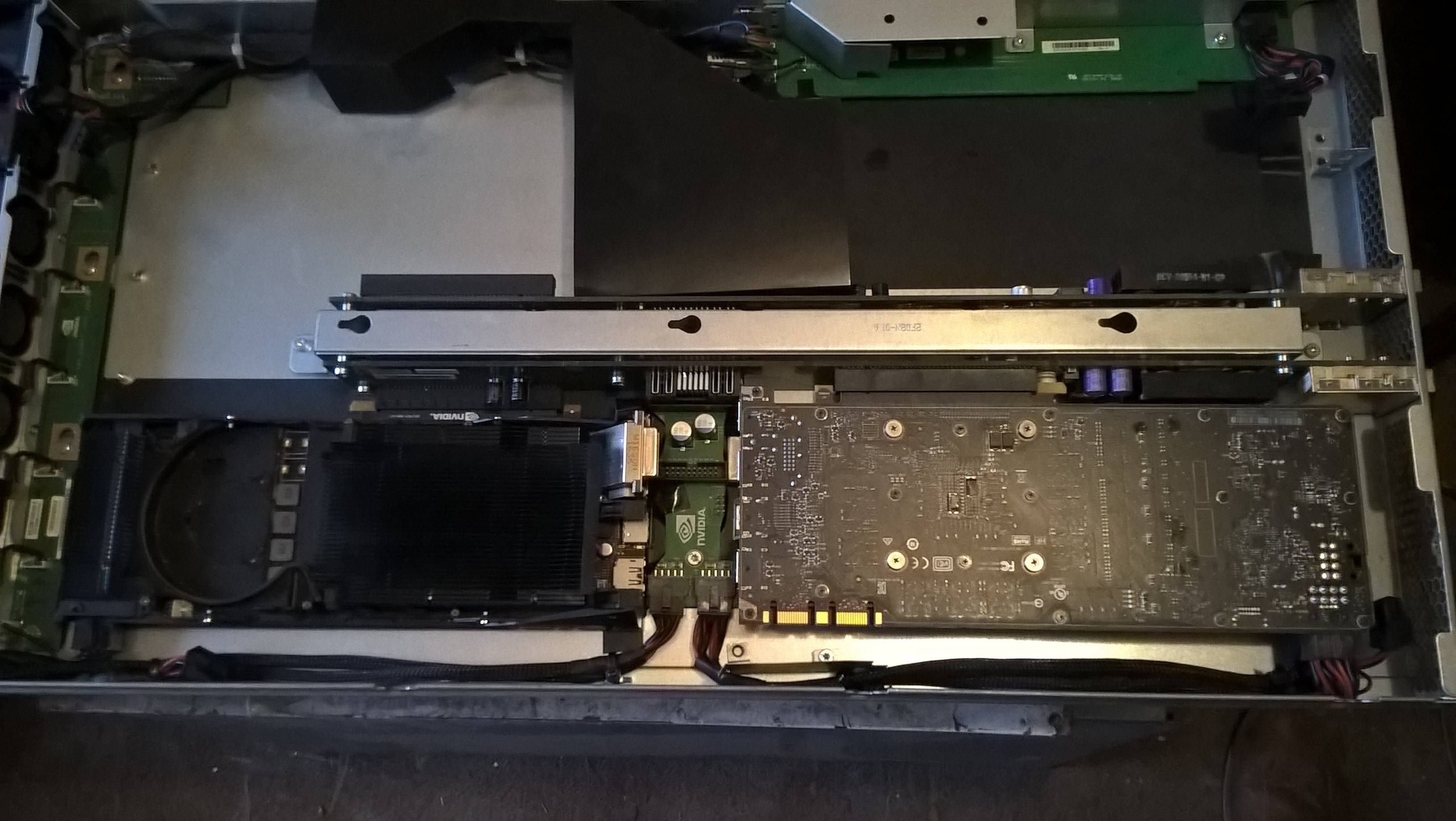

I also had to modify the cards. This comes down to removing the decorative shrouds and the blower fans themselves, which obstruct the airflow that the box's front fans push through the card heatsinks.

And this is the result. Later I will connect the box to the server, install the OS, and test the cards under load, watching how the temperatures change. Nothing bad should happen, though: the box cooled M2050 cards with a TDP of 225 W, while the GTX 1070 has a TDP of 150 W, so there is headroom in both cooling and power.
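A minimal Python sketch of such a load check, assuming `nvidia-smi` is installed on the server. It first does the TDP arithmetic for four slots (4 × (225 − 150) = 300 W of nominal headroom, an estimate from rated TDP rather than a measurement), then polls each card's temperature, power draw and current PCI-E link through `nvidia-smi --query-gpu` and flags problems. The 83 °C alert threshold and all helper names are my own hypothetical choices, not Nvidia specifications. Idle cards may drop to a lower PCI-E generation to save power, so the link check only means something while the cards are under load.

```python
import subprocess
from typing import Optional

# Figures from the article: the box was designed for 225 W Tesla M2050 cards,
# the GTX 1070 is rated at 150 W, and one box holds 4 cards.
M2050_TDP_W = 225
GTX1070_TDP_W = 150
SLOTS = 4

# Field names as listed by `nvidia-smi --help-query-gpu`.
QUERY = ("index,name,temperature.gpu,power.draw,"
         "pcie.link.gen.current,pcie.link.width.current")


def headroom_w(slots: int = SLOTS, design_tdp: int = M2050_TDP_W,
               card_tdp: int = GTX1070_TDP_W) -> int:
    """Nominal power/cooling headroom of the whole box, from rated TDP only."""
    return slots * (design_tdp - card_tdp)


def _num(field: str) -> Optional[float]:
    """nvidia-smi prints '[N/A]' or similar for unsupported fields."""
    try:
        return float(field)
    except ValueError:
        return None


def parse_smi_csv(text: str) -> list:
    """Parse `--format=csv,noheader,nounits` output into one dict per GPU."""
    rows = []
    for line in text.strip().splitlines():
        idx, name, temp, power, gen, width = (f.strip() for f in line.split(","))
        rows.append({
            "index": int(idx),
            "name": name,
            "temp_c": _num(temp),
            "power_w": _num(power),
            "pcie_gen": _num(gen),
            "pcie_width": _num(width),
        })
    return rows


def check(rows: list, max_temp_c: float = 83.0,
          expect_gen: int = 2, expect_width: int = 16) -> list:
    """Return warnings; 83 C is a hypothetical alert level, gen 2 x16 is what the box provides."""
    problems = []
    for r in rows:
        if r["temp_c"] is not None and r["temp_c"] > max_temp_c:
            problems.append(f"GPU {r['index']}: {r['temp_c']:.0f} C exceeds {max_temp_c:.0f} C")
        gen, width = r["pcie_gen"], r["pcie_width"]
        if gen is not None and width is not None and (gen < expect_gen or width < expect_width):
            problems.append(f"GPU {r['index']}: PCI-E link is gen {gen:.0f} x{width:.0f}")
    return problems


def query_gpus() -> list:
    """Run nvidia-smi once and parse its CSV output."""
    out = subprocess.run(
        ["nvidia-smi", f"--query-gpu={QUERY}", "--format=csv,noheader,nounits"],
        capture_output=True, text=True, check=True,
    ).stdout
    return parse_smi_csv(out)


if __name__ == "__main__":
    print(f"Nominal TDP headroom for {SLOTS} cards: {headroom_w()} W")
    try:
        for warning in check(query_gpus()) or ["all cards OK"]:
            print(warning)
    except (FileNotFoundError, subprocess.CalledProcessError) as err:
        print(f"could not run nvidia-smi: {err}")
```

Running it in a loop (for example every few seconds while a training job is running) would show how temperatures settle once the box's front fans are the only airflow through the heatsinks.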