Companies that track user activity on the Internet need to reliably identify each person without that person's knowledge. Browser fingerprinting is ideal for this. No one notices when a web page asks the browser to draw a graphic through the canvas, or to generate a zero-volume audio signal and measure the response.

The method works by default in every browser except Tor Browser and does not require any user permissions.

Total tracking

Recently, NY Times journalist Kashmir Hill discovered that a little-known company called Sift had compiled a 400-page dossier on her. It contained her shopping history going back several years, all her messages to Airbnb hosts, a log of Coinbase app launches on her phone, IP addresses, pizza orders placed from her iPhone, and much more. Several other scoring companies collect similar data. They take up to 16,000 factors into account when computing a “trust rating” for each user. Sift trackers are installed on 34,000 sites and mobile applications.

Because tracking cookies and scripts do not always work and may be disabled on the client, tracking is supplemented by fingerprinting: a set of techniques for obtaining a unique “fingerprint” of the browser and system. Taken together, the list of installed fonts and plugins, the screen resolution, and other parameters provide enough bits of information to form a unique ID. Fingerprinting through the canvas works especially well.
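The arithmetic behind “bits of information” can be sketched briefly. If a fraction p of all browsers share an attribute value, observing that value yields -log2(p) bits, and singling out one browser among N requires about log2(N) bits. The sketch below is illustrative only; the 1-in-64 share and the population of 8 billion are assumptions, not figures from the article.

```javascript
// Surprisal: bits of identifying information carried by an attribute value
// that a fraction p of all browsers share.
function surprisalBits(p) {
  if (!(p > 0 && p <= 1)) {
    throw new RangeError('p must be in the interval (0, 1]');
  }
  return -Math.log2(p);
}

// Bits needed to tell apart n equally likely browsers.
function bitsToSingleOut(n) {
  return Math.log2(n);
}

// Assumed example: a screen resolution shared by 1 browser in 64 (6 bits).
console.log(surprisalBits(1 / 64));
// Assumed population: roughly 8 billion people (about 33 bits).
console.log(bitsToSingleOut(8e9));
```

In principle, then, about 33 bits are enough to tell everyone on Earth apart, which is why a handful of moderately identifying attributes can add up to a unique ID.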

Fingerprinting through the Canvas API

The web page instructs the browser to draw an image made up of several elements, for example:

```html
<canvas class="canvas"></canvas>
```

```javascript
const canvas = document.querySelector('.canvas');
const ctx = canvas.getContext('2d');

// Additive ('lighter') blending: where shapes overlap, their colors are added
ctx.globalCompositeOperation = 'lighter';

// Render a blue rectangle
ctx.fillStyle = 'rgb(0, 0, 255)';
ctx.fillRect(25, 65, 100, 20);

// Render black text: "Hello, OpenGenus"
const txt = 'Hello, OpenGenus';
ctx.font = "14px 'Arial'";
ctx.fillStyle = 'rgb(0, 0, 0)';
ctx.fillText(txt, 25, 110);

// Render arcs: green half-circle and red circle
ctx.fillStyle = 'rgb(0, 255, 0)';
ctx.beginPath();
ctx.arc(50, 50, 50, 0, Math.PI * 3, true); // 3π is equivalent to π here: a half circle
ctx.closePath();
ctx.fill();

ctx.fillStyle = 'rgb(255, 0, 0)';
ctx.beginPath();
ctx.arc(100, 50, 50, 0, Math.PI * 2, true); // full circle
ctx.closePath();
ctx.fill();
```

The result looks something like this:

The Canvas API method toDataURL() returns a data URI that encodes the rendered image:

```javascript
console.log(canvas.toDataURL());
/* Outputs something like:
"data:image/png;base64,iVBORw0KGgoAAAANSUhEUgAA..." */
```

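This URI differs from system to system, because the exact pixels depend on the graphics hardware, drivers, installed fonts, and font rendering. The URI is then hashed and combined with the other data points that together make up the system’s unique fingerprint, among them: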

- installed fonts (about 4.37 bits of identifying information);
- installed browser plug-ins (3.08 bits);
- HTTP_ACCEPT headers (16.85 bits);
- user agent;
- language;
- time zone;
- screen size;
- available cameras and microphones;
- OS version;
- etc.
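Hashing the data URI and folding it together with the other attributes could look like the following minimal sketch. It assumes a 32-bit FNV-1a hash and a hypothetical set of attribute names and values; real fingerprinting scripts use their own hash functions and attribute lists. In a browser, `canvasDataUrl` would come from `canvas.toDataURL()` and the other values from `navigator`, `Intl`, and `screen`.

```javascript
// 32-bit FNV-1a hash over the string's UTF-16 code units.
// Fast and non-cryptographic; the choice of hash is an assumption.
function fnv1a32(str) {
  let hash = 0x811c9dc5; // FNV offset basis
  for (let i = 0; i < str.length; i++) {
    hash ^= str.charCodeAt(i);
    hash = Math.imul(hash, 0x01000193); // FNV prime, product taken mod 2^32
  }
  return hash >>> 0; // reinterpret as an unsigned 32-bit integer
}

// Combine named attributes into one hex ID.
// Keys are sorted so the result does not depend on property order.
function fingerprintId(attributes) {
  const canonical = Object.keys(attributes)
    .sort()
    .map((key) => `${key}=${attributes[key]}`)
    .join(';');
  return fnv1a32(canonical).toString(16).padStart(8, '0');
}

// Hypothetical inputs, for illustration only.
const canvasDataUrl = 'data:image/png;base64,iVBORw0KGgoAAAANSUhEUgAA...';
const id = fingerprintId({
  canvas: fnv1a32(canvasDataUrl).toString(16),
  userAgent: 'Mozilla/5.0 (X11; Linux x86_64)',
  language: 'en-US',
  timezone: 'Europe/Berlin',
  screen: '1920x1080',
});
console.log(id);
```

Sorting the keys keeps the ID stable no matter in which order the attributes are collected. A 32-bit hash is enough for a sketch; a production system would typically use a longer hash to keep collisions between users rare.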

The canvas fingerprint hash adds another 4.76 bits of identifying information, and the WebGL fingerprint hash adds 4.36 bits.
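Adding up the figures quoted above shows how the pieces combine. This sketch assumes the bit values behave like entropies; because entropies add exactly only for statistically independent attributes, the sum is an upper bound on the information the combination actually carries.

```javascript
// Bit values quoted above for individual attributes.
const attributeBits = {
  fonts: 4.37,
  plugins: 3.08,
  httpAccept: 16.85,
  canvasHash: 4.76,
  webglHash: 4.36,
};

// Entropies add exactly only for independent attributes; otherwise
// the joint entropy is smaller, so this sum is an upper bound.
const totalBits = Object.values(attributeBits).reduce((sum, b) => sum + b, 0);

// k bits of information single out roughly 1 browser in 2^k.
const oneIn = (bits) => 2 ** bits;

console.log(totalBits.toFixed(2)); // 33.42
console.log(Math.round(oneIn(attributeBits.canvasHash))); // 27
```

On its own, the canvas hash narrows a browser down to roughly 1 in 27; the whole set carries at most about 33.4 bits.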

Fingerprint test

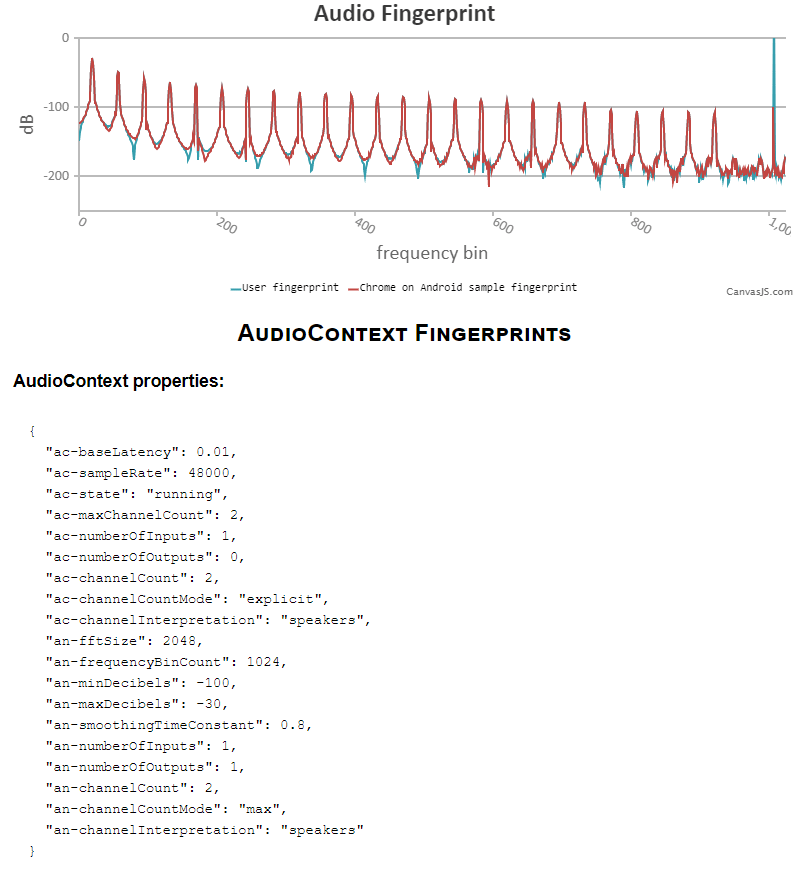

Recently, another parameter has been added to this set: an audio fingerprint obtained through the AudioContext API.

As far back as 2016, hundreds of sites, such as Expedia and Hotels.com, were already using this identification method.

Fingerprinting through the AudioContext API

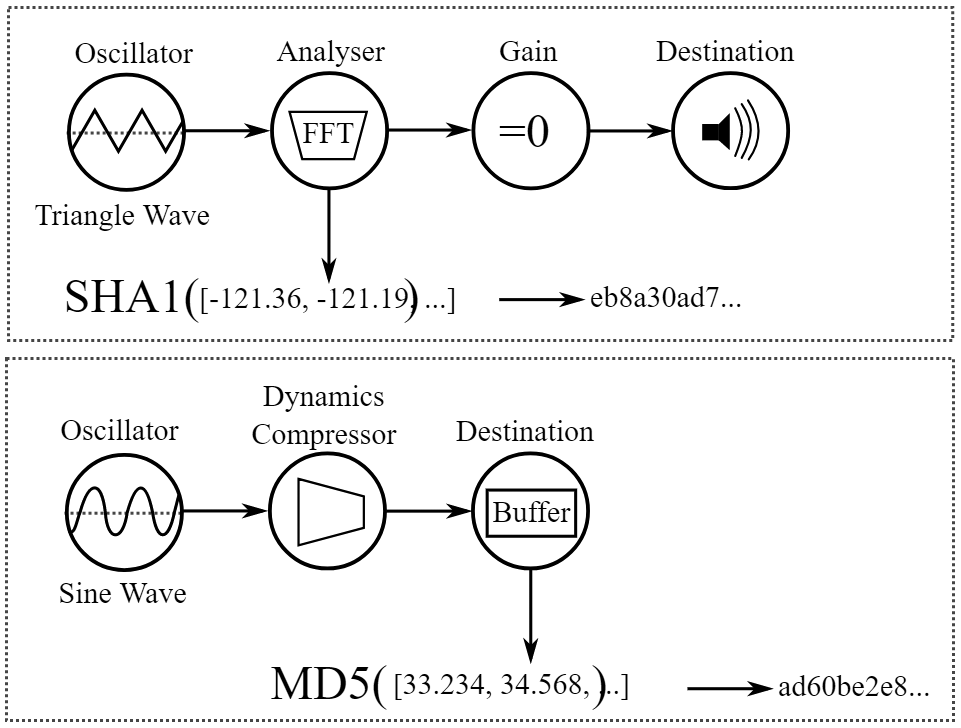

The procedure is the same: the browser performs a task, we record the result and calculate a unique hash (fingerprint) from it, except that this time the data comes from the audio stack. Instead of the Canvas API, the script calls the AudioContext API, part of the Web Audio API, which all modern browsers support.

The browser generates a low-frequency audio signal, which is processed according to the sound settings and audio hardware of the device. Nothing is recorded or audibly played back, and neither the speakers nor the microphone are involved.

The advantage claimed for this method is that it does not depend on the browser, so it can keep tracking the user after a switch from Chrome to Firefox, then to Opera, and so on.

Fingerprint test via the AudioContext API

How to get a fingerprint, step by step:

- First, you need to create an array to store frequency values.

```javascript
let freq_data = [];
```

- Next, create an AudioContext object and the nodes that generate the signal and collect data, using the AudioContext object's built-in factory methods.

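```javascript
// Create the nodes
const ctx = new AudioContext();            // AudioContext object
const oscillator = ctx.createOscillator(); // OscillatorNode (440 Hz sine by default)
const analyser = ctx.createAnalyser();     // AnalyserNode
const gain = ctx.createGain();             // GainNode
// ScriptProcessorNode: 4096-frame buffer, 1 input and 1 output channel.
// It is deprecated in favor of AudioWorklet but still supported by browsers.
const scriptProcessor = ctx.createScriptProcessor(4096, 1, 1);
```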

- Turn off the volume and connect the nodes to each other.

```javascript
// Disable volume
gain.gain.value = 0;
// Connect oscillator output (OscillatorNode) to analyser input
oscillator.connect(analyser);
// Connect analyser output (AnalyserNode) to scriptProcessor input
analyser.connect(scriptProcessor);
// Connect scriptProcessor output (ScriptProcessorNode) to gain input
scriptProcessor.connect(gain);
// Connect gain output (GainNode) to the AudioContext destination
gain.connect(ctx.destination);
```

- Using a `ScriptProcessorNode`, we create a function that collects frequency data during audio processing.

- The function creates a `Float32Array` typed array whose length equals the number of frequency data values in the `AnalyserNode` (its `frequencyBinCount`), and then fills it with values.

- These values are then copied into the `freq_data` array we created earlier, so that we can easily print them.

- Finally, the function disconnects the nodes and displays the result.

```javascript
scriptProcessor.onaudioprocess = function () {
  // Typed array with one slot per frequency bin
  const bins = new Float32Array(analyser.frequencyBinCount);
  // Fill it with the current frequency data (in decibels)
  analyser.getFloatFrequencyData(bins);
  // Copy the frequency data into the 'freq_data' array
  for (let i = 0; i < bins.length; i++) {
    freq_data.push(bins[i]);
  }
  // Collect only one buffer's worth of data
  scriptProcessor.onaudioprocess = null;
  // Disconnect the nodes from each other
  analyser.disconnect();
  scriptProcessor.disconnect();
  gain.disconnect();
  // Log the frequency data
  console.log(freq_data);
};
```

- Start playing the tone, so that sound is generated and processed by the function.

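```javascript
// Start playing the tone.
// Browsers may keep the AudioContext suspended until a user gesture;
// calling ctx.resume() from a click handler starts it.
oscillator.start(0);

/* Output:
[ -119.79788967947266, -119.29875891113281, -118.90072674835938,
  -118.08164726269531, -117.02244567871094, -115.73435120521094,
  -114.24555969238281, -112.56678771972656, -110.70404089034375,
  -108.64968109130886, ... ] */
```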
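The numbers in this output are decibels: `getFloatFrequencyData()` reports each frequency bin as 20·log10 of its magnitude, so values around -120 dB correspond to amplitudes of roughly one millionth, and bin k corresponds to the frequency k × sampleRate / fftSize. A small sketch of both conversions; the 48 kHz sample rate is an assumption (the real rate depends on the device and is exposed as `ctx.sampleRate`), while 2048 is the `AnalyserNode` default `fftSize`.

```javascript
// getFloatFrequencyData() returns decibels: dB = 20 * log10(magnitude).
// Invert that to get the linear magnitude of a bin.
function dbToMagnitude(db) {
  return 10 ** (db / 20);
}

// Frequency (in Hz) that bin k of the analyser corresponds to.
function binFrequency(k, sampleRate, fftSize = 2048) {
  return (k * sampleRate) / fftSize;
}

// Assumption: a 48 kHz device.
const sampleRate = 48000;

console.log(dbToMagnitude(-119.79788967947266)); // about 1.02e-6
console.log(binFrequency(1, sampleRate)); // 23.4375 Hz per bin
```

With these values, the default 440 Hz tone of an `OscillatorNode` falls near bin 19 (440 / 23.4375 ≈ 18.8).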

This combination of values is hashed to create a fingerprint, which is then used with other identification bits.
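A minimal sketch of that hashing step, assuming the `freq_data` array produced above and a 32-bit FNV-1a hash over the raw float bytes. Both the helper name `hashFrequencyData` and the choice of hash are illustrative assumptions, not taken from a specific tracker; another common reduction is simply to sum the values.

```javascript
// Hypothetical helper: turn the collected frequency values into a short hash.
function hashFrequencyData(values) {
  // Store every value as a 4-byte float, then hash the raw bytes with FNV-1a.
  const bytes = new Uint8Array(new Float32Array(values).buffer);
  let hash = 0x811c9dc5; // FNV offset basis
  for (const byte of bytes) {
    hash ^= byte;
    hash = Math.imul(hash, 0x01000193); // FNV prime, product taken mod 2^32
  }
  return (hash >>> 0).toString(16).padStart(8, '0');
}

// In the browser this would be hashFrequencyData(freq_data);
// here the first values from the output above stand in for it.
console.log(hashFrequencyData([-119.79788967947266, -119.29875891113281]));
```

Because the hash covers the exact bit patterns, any difference in how a device's audio stack processes the tone changes the result.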

To protect against this kind of tracking, you can use extensions such as AudioContext Fingerprint Defender, which mix random noise into the fingerprint.
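The idea behind such defenses can be sketched as follows: add a tiny random offset to each value before the page reads it, so the exact numbers, and therefore their hash, change from visit to visit. This illustrates the general principle only and is not the extension's actual code; the amplitude of 1e-4 is an arbitrary assumption.

```javascript
// Perturb each value by a random offset in [-amplitude, amplitude).
// `random` is injectable so the behavior can be tested deterministically.
function addNoise(values, amplitude = 1e-4, random = Math.random) {
  return values.map((v) => v + (random() * 2 - 1) * amplitude);
}

// First values from the output above.
const clean = [-119.79788967947266, -119.29875891113281, -118.90072674835938];
const visit1 = addNoise(clean);
const visit2 = addNoise(clean);

// Both runs stay within 1e-4 dB of the real data, yet differ from it and
// from each other, so a hash of the exact values no longer stays stable.
console.log(visit1, visit2);
```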

The NY Times lists contacts at which you can ask tracking companies to show the information they have collected about you:

- Zeta Global: online form
- Retail Equation: returnactivityreport@theretailequation.com
- Riskified: privacy@riskified.com
- Kustomer: privacy@kustomer.com
- Sift: privacy@sift.com (the online form was deactivated after the article was published)
