Researchers from the University of Edinburgh have introduced a new algorithm for creating realistic character movement in games. Trained on Motion Capture trajectories, the neural network learns to copy the movements of real people while adapting them to video game characters.

A single neural network can handle several in-game actions at once: opening doors, moving objects, using furniture. At the same time, it dynamically adjusts the positions of the legs and arms so that the character can realistically carry boxes of different sizes, sit on chairs of different sizes, and crawl through passages of different heights.

Usually, when people talk about AI controlling game characters, they mean full control of the forces in the limbs, driven by a physics engine that simulates the laws of physics. This is the domain of the machine learning field called Reinforcement Learning. Unfortunately, this approach on its own cannot yet produce realistic movements.

Alternatively, you can train the neural network to imitate the movements of real people recorded with Motion Capture. About a year ago, this approach led to significant progress in realistic animation of 3D characters.

There were several consecutive papers on this topic, but the most complete description is the work on the DeepMimic neural network, presented as "Towards a Virtual Stuntman" ( https://www.youtube.com/watch?v=vppFvq2quQ0 ).

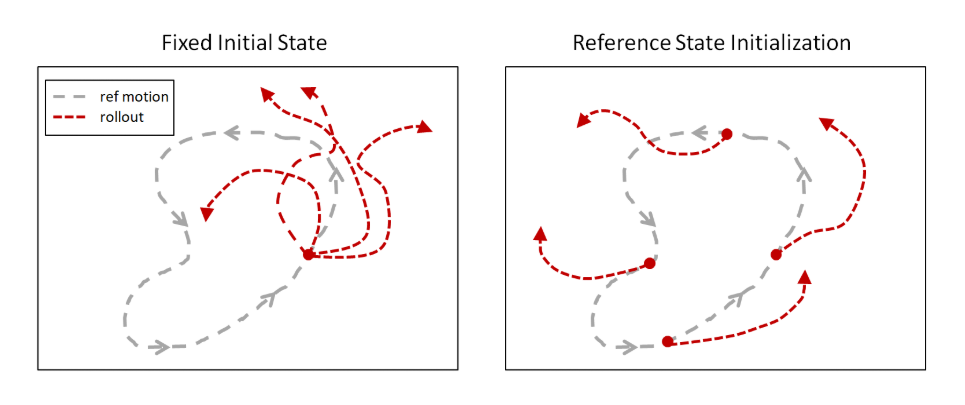

The main idea is that, when training the network to imitate human movements, each episode starts not from the very beginning of the Motion Capture track, as was done before, but from a random point anywhere along it. Existing Reinforcement Learning algorithms mostly explore the neighborhood of the starting point, so they rarely reached the end of the track. If episodes start all along the track, the chance that the neural network learns to reproduce the entire trajectory increases.
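To make the effect of random start points concrete, here is a toy sketch, not DeepMimic's code. It assumes an untrained policy that always falls after a fixed number of frames (`horizon`), and counts how many frames of a clip the agent ever gets to practice on. The function names and numbers are hypothetical.

```python
import random


def sample_start_frame(num_frames: int, random_start: bool, rng: random.Random) -> int:
    """Pick the frame of the mocap clip at which a training episode begins."""
    if random_start:
        # Random start: any frame of the clip can begin an episode.
        return rng.randrange(num_frames)
    # Classic setup: always begin at the first frame of the clip.
    return 0


def frames_visited(num_frames: int, episodes: int, horizon: int,
                   random_start: bool, seed: int = 0) -> int:
    """Count distinct clip frames seen when every episode lasts only `horizon`
    frames before the (still untrained) policy falls and the episode ends."""
    rng = random.Random(seed)
    seen = set()
    for _ in range(episodes):
        start = sample_start_frame(num_frames, random_start, rng)
        seen.update(range(start, min(start + horizon, num_frames)))
    return len(seen)


if __name__ == "__main__":
    # A 300-frame clip; early in training the policy survives only ~20 frames.
    print("fixed start: ", frames_visited(300, 200, 20, random_start=False))
    print("random start:", frames_visited(300, 200, 20, random_start=True))
```

With a fixed start, the agent never sees anything past frame 20, so it gets no training signal for the later part of the motion; with random starts, nearly the whole clip is covered from the very first episodes.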

This idea was later picked up in completely different areas. For example, OpenAI trained a neural network to play Montezuma's Revenge, a game that had resisted standard Reinforcement Learning algorithms, by learning from a human playthrough and likewise starting episodes not from the beginning but from points along it (in this case, starting near the end and gradually moving toward the beginning).
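The "start near the end, then move back" schedule can be sketched as a simple curriculum loop. This is an illustrative sketch, not OpenAI's implementation: `ToyAgent` is a hypothetical stand-in whose skill grows with practice, and the step size, trial count and success threshold are assumed values.

```python
from typing import Callable, List


def backward_curriculum(demo_length: int,
                        attempt: Callable[[int], bool],
                        step: int = 1,
                        trials: int = 10,
                        success_threshold: float = 0.8,
                        max_rounds: int = 10_000) -> List[int]:
    """Move the episode start point from the end of a demonstration back toward
    its beginning whenever the agent succeeds often enough from the current one."""
    start = demo_length - 1          # begin just before the end of the demo
    history = [start]
    for _ in range(max_rounds):
        if start == 0:               # the agent now solves the game from the real start
            break
        wins = sum(attempt(start) for _ in range(trials))
        if wins / trials >= success_threshold:
            start = max(0, start - step)
            history.append(start)
    return history


class ToyAgent:
    """Hypothetical learner: it succeeds from any point at most `skill` steps
    before the end, and gains one step of skill every 15 attempts."""

    def __init__(self, demo_length: int):
        self.demo_length = demo_length
        self.skill = 1
        self.attempts = 0

    def attempt(self, start: int) -> bool:
        self.attempts += 1
        if self.attempts % 15 == 0:
            self.skill += 1
        return self.demo_length - 1 - start < self.skill


agent = ToyAgent(demo_length=50)
schedule = backward_curriculum(50, agent.attempt)
```

Each episode only has to bridge a short gap between where the agent starts and states it already knows how to finish from, which is why this works where exploring from the very first frame does not.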

Without this trick, attempts to train the neural network to copy complex movements ended in failure, because the network found a shortcut: it did not earn as much reward as it would for the entire trajectory, but it still earned some. For example, instead of a backflip, the neural network simply hopped slightly and flopped onto its back.
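Why a shortcut still pays off becomes clearer with an imitation reward in the spirit of DeepMimic: each frame earns up to 1.0 depending on how close the simulated pose is to the reference pose. The sketch below is a simplification under stated assumptions: poses are plain lists of joint angles rather than quaternions, the `scale = 2.0` constant is illustrative, and an episode simply ends when the character falls.

```python
import math
from typing import List, Sequence


def pose_reward(ref_pose: Sequence[float], sim_pose: Sequence[float],
                scale: float = 2.0) -> float:
    """Per-frame imitation reward: 1.0 for a perfect match, decaying
    exponentially with the squared joint-angle error."""
    err = sum((r - s) ** 2 for r, s in zip(ref_pose, sim_pose))
    return math.exp(-scale * err)


def episode_return(ref_clip: List[Sequence[float]],
                   sim_clip: List[Sequence[float]]) -> float:
    """Sum of per-frame rewards. The episode ends when the simulated clip
    ends (e.g. the character fell and was reset)."""
    return sum(pose_reward(r, s) for r, s in zip(ref_clip, sim_clip))


# A hypothetical 100-frame reference motion with 3 joint angles per frame.
ref = [[0.01 * t, 0.02 * t, -0.01 * t] for t in range(100)]
# Tracks the whole motion with a small constant error.
full = [[a + 0.05 for a in pose] for pose in ref]
# Matches the first 20 frames perfectly, then falls and the episode stops.
shortcut = ref[:20]
```

The shortcut collects a smaller but nonzero return, so an agent that never gets to practice the later frames has every reason to settle for it.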

With this approach, however, the neural network learns trajectories of almost any complexity without difficulty.

The main problem with DeepMimic, which prevented its direct use in video games, is that the neural network could not be trained to perform several different animations at once. A separate neural network had to be trained for each animation. The authors tried combining them in various ways, but could not combine more than 3-4 animations.

The new work does not fully solve this problem either, but it makes major progress toward smooth transitions between different animations.

Note that this problem affects all existing animation networks of this kind. For example, one such neural network, also trained to imitate Motion Capture, can genuinely control a huge number of muscles (326!) of a humanoid character in a physics engine, adapting to lifting different weights and to various joint injuries. Yet it still needs a separately trained neural network for each animation.

It should be understood that the goal of such neural networks is not just to repeat a human animation, but to reproduce it in a physics engine. Reinforcement Learning algorithms make the learned control robust and resistant to disturbances. Such a neural network can then be transferred to a physical robot that differs from a person in geometry or mass, and it will still realistically reproduce human movements (as already mentioned, this has not yet been achieved when training from scratch). Or, as in the work above, you can virtually explore how a person with leg injuries will move in order to design more comfortable prostheses.

The first DeepMimic already showed the beginnings of such adaptation. You could move a red target ball, and the character would throw a ball at it each time, adjusting its aim and throwing force to hit it exactly, even though it was trained on a single Motion Capture track that contains no such variation.

So this can be considered full-fledged AI training, and imitating human movements simply speeds up learning and makes the movements visually more appealing and familiar to us (even though, from the neural network's point of view, they may not be optimal).
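One way to picture this split between "imitate" and "achieve the task" is a reward that is a weighted sum of an imitation term and a task term, as in the ball-throwing example. This is a hedged sketch: the weights `0.7`/`0.3`, the distance scale and the function names are illustrative assumptions, not values quoted from the article.

```python
import math
from typing import Sequence


def task_reward(landing: Sequence[float], target: Sequence[float],
                scale: float = 4.0) -> float:
    """Task term: 1.0 when the ball lands on the target, decaying with the
    squared distance between landing point and target."""
    dist2 = sum((l - t) ** 2 for l, t in zip(landing, target))
    return math.exp(-scale * dist2)


def total_reward(imitation: float, task: float,
                 w_imitation: float = 0.7, w_task: float = 0.3) -> float:
    """Weighted mix: the imitation term keeps the motion human-like, the task
    term pushes the policy to actually hit the (movable) target."""
    return w_imitation * imitation + w_task * task
```

Because only part of the reward comes from matching the mocap clip, the policy is free to deviate from it (aim differently, throw harder) whenever that pays off on the task term.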

The new work goes even further in this direction.

There is no physics engine here; it is a purely kinematic animation system for video games. The emphasis is on realistic switching between multiple animations and on interaction with in-game objects: moving objects, using furniture, opening doors.

The neural network architecture consists of two parts. One part (the Gating network) chooses which animation to use based on the character's current state and current goal, and the other (the Motion prediction network) predicts the next frames of the animation.
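A common way to build such a two-part network is a mixture of experts: the gating network outputs blending coefficients, and the motion prediction network uses a weighted blend of several expert weight sets. The NumPy sketch below assumes that design; the layer sizes, number of experts, missing biases and random initialization are all illustrative, and `gate_input` stands for "current state and goal" features whose exact contents are not specified here.

```python
import numpy as np


def elu(x: np.ndarray) -> np.ndarray:
    return np.where(x > 0, x, np.exp(x) - 1.0)


def softmax(x: np.ndarray) -> np.ndarray:
    e = np.exp(x - x.max())
    return e / e.sum()


class GatedMotionModel:
    """Toy gating + motion prediction network (biases omitted for brevity)."""

    def __init__(self, gate_in: int, state_in: int, out_dim: int,
                 num_experts: int = 4, hidden: int = 32, seed: int = 0):
        rng = np.random.default_rng(seed)
        # Gating network: a small two-layer MLP producing one score per expert.
        self.Wg1 = rng.normal(0.0, 0.1, (hidden, gate_in))
        self.Wg2 = rng.normal(0.0, 0.1, (num_experts, hidden))
        # One full set of motion-prediction weights per expert.
        self.W1 = rng.normal(0.0, 0.1, (num_experts, hidden, state_in))
        self.W2 = rng.normal(0.0, 0.1, (num_experts, out_dim, hidden))

    def gate(self, gate_input: np.ndarray) -> np.ndarray:
        """Blending coefficients: non-negative and summing to 1."""
        return softmax(self.Wg2 @ elu(self.Wg1 @ gate_input))

    def predict(self, gate_input: np.ndarray, state: np.ndarray):
        alpha = self.gate(gate_input)
        # Blend the expert weights: W = sum_i alpha_i * W_i.
        W1 = np.tensordot(alpha, self.W1, axes=1)
        W2 = np.tensordot(alpha, self.W2, axes=1)
        # Predict the next frame (pose etc.) from the current state.
        return W2 @ elu(W1 @ state), alpha


model = GatedMotionModel(gate_in=6, state_in=10, out_dim=5)
next_frame, alpha = model.predict(np.ones(6), np.ones(10))
```

Blending weights rather than outputs lets one network switch smoothly between actions: as the goal changes, the coefficients shift gradually from one expert to another instead of cutting between separate networks.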

All of this was trained to imitate a set of Motion Capture tracks (as supervised learning on the captured data, since there is no physics simulation involved).

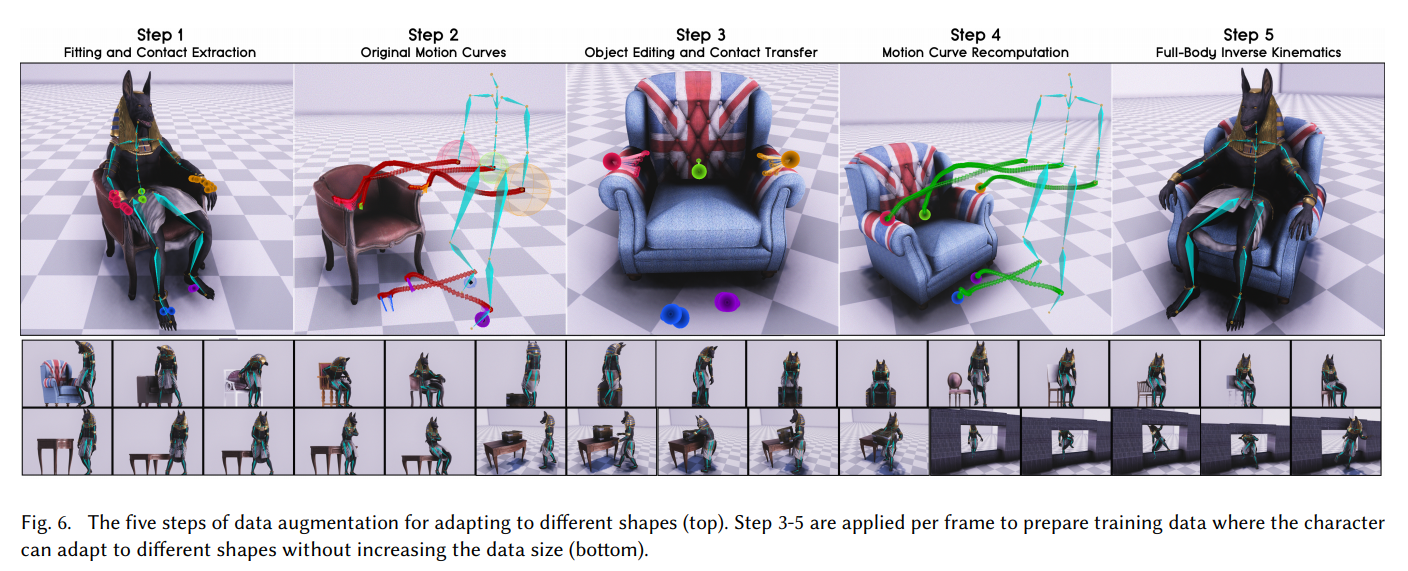

But the main achievement of this work lies elsewhere: in how the developers taught the neural network to handle objects of different sizes and to squeeze through passages of different widths or heights, so that the arm and leg positions look realistic and match the size of the object the character is interacting with.

The secret turned out to be simple: data augmentation!

First, they determined the contact points of the hands with the chair's armrests in the Motion Capture track. Then they replaced the chair model with a wider one and recomputed the Motion Capture trajectory so that the hands touched the armrests at the same points, but on the wider chair. The neural network was then trained to imitate this new, recomputed trajectory. The same was done for box sizes, passage heights, and so on.
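The contact-point step of this augmentation can be sketched as follows. This is a simplified illustration, not the authors' pipeline: it only moves the hand's path so it meets the armrest of a resized chair, blending the correction in and out around the contact frames; recomputing the full-body pose from these new hand targets (for example with an inverse kinematics solver) is left out. All names, frame ranges and sizes are hypothetical.

```python
import numpy as np


def rescale_contacts(contacts, object_center, scale) -> np.ndarray:
    """Map contact points recorded on the original object to the same relative
    positions on a resized copy (per-axis scale about the object center)."""
    contacts = np.asarray(contacts, dtype=float)
    center = np.asarray(object_center, dtype=float)
    return center + (contacts - center) * np.asarray(scale, dtype=float)


def retarget_hand_path(hand_path, contact_start: int, contact_end: int,
                       offset, blend: int = 10) -> np.ndarray:
    """Shift a recorded hand path by `offset`: fully during the contact frames,
    fading linearly to zero over `blend` frames before and after them."""
    hand_path = np.asarray(hand_path, dtype=float)
    t = np.arange(len(hand_path))
    # Distance (in frames) from the contact interval; 0 inside it.
    dist = np.maximum(contact_start - t, 0) + np.maximum(t - contact_end, 0)
    weight = np.clip(1.0 - dist / blend, 0.0, 1.0)
    return hand_path + weight[:, None] * np.asarray(offset, dtype=float)


# Hypothetical chair: center at seat height, right armrest contact recorded in mocap.
center = (0.0, 0.0, 0.45)
old_contact = np.array([0.25, 0.0, 0.65])
new_contact = rescale_contacts(old_contact, center, (1.4, 1.0, 1.0))  # 40% wider chair
hand = np.zeros((60, 3))  # placeholder hand trajectory, 60 frames
new_hand = retarget_hand_path(hand, 20, 40, new_contact - old_contact)
```

Running this for many scale factors and object models turns one captured clip into many training clips, each consistent with a differently sized object.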

By repeating this many times with various 3D models of the objects the player will interact with, the neural network learned to handle objects of different sizes realistically.

To interact with the environment in the game itself, the surrounding objects also had to be voxelized so that they serve as sensors at the neural network's input.
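A voxel sensor of this kind can be sketched as an occupancy grid around the character, flattened into a vector the network can take as input. The sketch assumes objects are approximated by axis-aligned boxes, and the cube size and 8×8×8 resolution are illustrative choices, not the paper's exact sensor layout.

```python
import numpy as np


def voxel_sensor(boxes, center, size: float = 2.0, resolution: int = 8) -> np.ndarray:
    """Occupancy grid of a cube of side `size` centered on `center`. Each voxel
    is 1.0 if its center lies inside any axis-aligned box (lo, hi), else 0.0.
    Returns a flat vector of length resolution**3 for the network input."""
    half = size / 2.0
    step = size / resolution
    coords = -half + step * (np.arange(resolution) + 0.5)   # voxel centers along one axis
    gx, gy, gz = np.meshgrid(coords, coords, coords, indexing="ij")
    pts = np.stack([gx, gy, gz], axis=-1) + np.asarray(center, dtype=float)
    occupied = np.zeros(pts.shape[:3], dtype=bool)
    for lo, hi in boxes:
        lo = np.asarray(lo, dtype=float)
        hi = np.asarray(hi, dtype=float)
        occupied |= np.all((pts >= lo) & (pts <= hi), axis=-1)
    return occupied.astype(np.float32).ravel()


# A hypothetical box filling the half of the sensed volume with x <= 0.
sensor = voxel_sensor([((-1.0, -1.0, -1.0), (0.0, 1.0, 1.0))], center=(0.0, 0.0, 0.0))
```

Because the grid moves with the character, the same network input describes a narrow doorway, a low passage or a wide chair in terms of which cells around the body are occupied.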

The result is very good animation for game characters, with smooth transitions between actions and the ability to interact realistically with objects of various sizes.

I strongly recommend watching the video if you have not already; it explains in detail how they achieved this.

This approach can also be used to animate four-legged animals, potentially giving unmatched quality and realism in the movements of animals and monsters.

References

Video

Project page with source

PDF file with a detailed description of the work: SIGGRAPH_Asia_2019 / Paper.pdf