The first part, organizational and managerial, should be useful primarily to those who are responsible for test automation and build such systems as a whole. Project managers, team leads, and owners of functional and automated testing services, anyone who cares about the question “how do we build cost-effective end-2-end testing of our IT system?”, will find a concrete plan and methodology here.

Part 1. Organizational and managerial: why we needed automation, how we organized the development and management process, and how we organized usage

Initially, we had a large and complex information system (we will call it the “System”) with numerous complex, long, and interconnected business scenarios. All scenarios were tested end-to-end (E2E) through web interfaces, exclusively by hand (there were more than 1,500 such scenarios with the most critical priority alone). Moreover, every one of these scenarios had to be run at least once during the regression of each new release or hotfix before the next update was deployed to production.

At some point, when clicking the mouse by hand became completely unbearable, we decided to automate it all. We did this by developing a separate service based on Java + Selenium + Selenide + Selenoid, hereinafter called the "testing framework" or simply "Autotests".

Historically, the testing framework code was developed by two teams. The first team created a prototype with a couple dozen scenarios. Then, over the course of a year, the second team scaled the prototype both in breadth (number of tests) and in depth (introducing standard coding and implementation patterns).

I am the team lead of the second team, which took over the prototype framework for scaling in May 2018.

At the time of writing, the task set a year earlier has been completed, and the project team has been provided with a stable automation service. I emphasize the word service deliberately: from the outset, the task was framed not as developing an application but as providing test automation as a service to the “functional testing” group. This subsequently had a strong influence on how development was organized and on the architecture of the testing framework.

Results

About 1,500 test scenarios were automated, each containing from 200 to 2,000 user operations.

The total capacity of the service is up to 60 browsers running simultaneously, and this is not the limit (the number can be increased fivefold by adding virtual machines).

A full regression takes no more than 3 hours, and the PreQA test run takes less than an hour.

An extensive range of features has been implemented:

- local runs (with real-time observation) and remote runs (via Bamboo plans);

- limiting the set of tests to run with a filter;

- a detailed report with the results of each step of the test scenario (via the Allure framework);

- downloading files from and uploading files to the browser, then checking the results of their processing in terms of file format and content;

- handling the asynchronous nature of the Angular interface, including control of hung (pending) requests between Angular and REST services;

- browser log control;

- test video recording;

- taking a screenshot of the page at the point where the test “fails”;

- sending events to ELK;

- and many more small things.

Why was all this needed?

At the start, the purpose of the system was quite simple and clear.

Imagine a large registry system that manages an extensive range of documents and their life cycle and supports a couple of hundred business processes. On top of that, there are millions of individuals, tens of thousands of suppliers, thousands of services, and complex documents (including framework and template ones), and each business process can be carried out in hundreds of different ways...

All this turns into 1,500 test scenarios, and these are only the highest-priority, positive ones.

During automation, we ran into various nuances that called for specific solutions.

For example, one scenario could contain hundreds of separate operations, including such interesting ones as “Upload an EXCEL file with data and verify that the System processes each record from the file” (solving this took several steps: preparing the data and then checking the result of loading it into the System). Now add a restriction on reusing test data: for most test scenarios to pass, the test data must be “fresh”, that is, not previously used in similar scenarios (running the tests changes the state of the data in the System, so the same data cannot be reused for the same checks).
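The “fresh data” constraint becomes trickier once tests run in parallel: two browsers must never receive the same record. A minimal sketch, assuming a pre-generated list of unused records (the class FreshDataPool is hypothetical, not the project's code), could hand out each record at most once using a lock-free queue:

```java
import java.util.Collection;
import java.util.Optional;
import java.util.concurrent.ConcurrentLinkedQueue;

/**
 * Hypothetical pool of "fresh" test records: each record is handed out at most once,
 * even when many tests run in parallel.
 */
class FreshDataPool<T> {
    private final ConcurrentLinkedQueue<T> unused;

    FreshDataPool(Collection<T> records) {
        this.unused = new ConcurrentLinkedQueue<>(records);
    }

    /** Atomically removes and returns an unused record, or empty if the pool is exhausted. */
    Optional<T> take() {
        return Optional.ofNullable(unused.poll());
    }

    /** Number of records that have not been handed out yet. */
    int remaining() {
        return unused.size();
    }
}
```

Because ConcurrentLinkedQueue.poll() removes the head atomically, concurrent callers never get the same record; an exhausted pool signals that more data has to be prepared before the next run.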

At some point, manual testing of the System as part of regression ceased to seem economically viable or fast enough, and we decided to automate it through the web user interface.

In other words, the functional testing group opens the “page”, selects the “test group”, and clicks the “execute” button (we used Bamboo). Then Autotests (hereinafter, “Autotests” refers to the created test automation product as a whole) automatically emulate user actions in the System through the browser (“press” the necessary buttons, enter values in the fields, etc.) and, on completion, display a detailed report on all steps, the actions performed, and the verification results (whether the expected reaction of the System matches its actual behavior).

In short, the purpose of Autotests is to automate manual E2E testing. It is an “external” system that does not take part in the development process of the system under test and is in no way connected with the unit or integration tests used by the developers.

Goals

We needed to significantly reduce the labor costs of End-2-End testing and to speed up both full and reduced-scope regressions.

Additional goals

- ensure fast development of autotests with a high level of autonomy (the need to pre-populate the System stands with test data, or to configure Autotests to run on each stand, should be minimized);

- optimize the costs (time and money) of communication between the automation, functional testing, and System development teams;

- minimize the risk of discrepancies between the autotests actually implemented and the original expectations of the functional testing team (the functional testing team should be able to trust the results of the Autotests unconditionally).

Tasks

The main development task was formulated very simply: automate 1,000 highest-priority test scenarios over the next 6 months.

The expected number of basic test actions per scenario ranged from 100 to 300, which gave us about 200 thousand test methods of 10-20 lines of code each, not counting the common and auxiliary helper classes, data providers, and data models.

Thus, given the time constraints (130 working days), we had to deliver at least 10 tests per day while keeping the implemented autotests up to date with the changes in the System (which was under active development).

According to expert estimates, developing one autotest took 4-8 hours. This meant a team of at least 5 people (in reality, at the peak of autotest development, the team had more than 10 automation engineers).
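The arithmetic behind these estimates can be reproduced in a few lines. This is only a back-of-the-envelope sketch; the 8-hour working day is an assumption not stated above. Strictly, 1,000 tests in 130 days requires 8 tests per day, so the target of 10 presumably leaves headroom for maintaining existing tests.

```java
/** Back-of-the-envelope effort figures from the planning assumptions (hypothetical helper). */
class EffortEstimate {
    /** Total test steps for a number of scenarios with a given number of steps each. */
    static long totalSteps(int scenarios, int stepsPerScenario) {
        return (long) scenarios * stepsPerScenario;
    }

    /** Minimum tests per day needed to finish within workingDays (rounded up). */
    static int testsPerDay(int scenarios, int workingDays) {
        return (scenarios + workingDays - 1) / workingDays;
    }

    /** Engineers needed if each test takes hoursPerTest and a workday has hoursPerDay hours (rounded up). */
    static int teamSize(int testsPerDay, int hoursPerTest, int hoursPerDay) {
        int totalHours = testsPerDay * hoursPerTest;
        return (totalHours + hoursPerDay - 1) / hoursPerDay;
    }
}
```

With 10 tests per day at 4-8 hours each, teamSize gives 5 to 10 engineers, which matches both the lower bound above and the peak team size; 1,000 scenarios at an average of 200 steps gives the 200 thousand figure.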

The tasks to be solved were also clear:

- Set up the processes and the team:

  - define the process of interaction with the customer (the functional testing group) and fix the format of the test case description used as input for the automation team;

  - organize the development and maintenance process;

  - form a team.

- Develop autotests with the following features:

  - automatically click buttons in the browser, first checking that the required elements and information are present on the page;

  - support complex elements such as Yandex.Map;

  - upload automatically generated files to the System, and download files from the System with verification of their format and content.

- Capture browser screenshots, video, and internal logs.

- Provide integration with external systems such as a mail server and the task tracking system (JIRA) to check the integration processes between the System under test and external systems.

- Provide a documented report on all actions taken, including the entered and verified values and all necessary attachments.

- Run the required volume of tests in parallel.

- Deploy Autotests in the existing infrastructure.

- Bring already automated test scenarios in line with the agreed target concept (at a pace of about 50 tests per one-week sprint).

As I mentioned in the introduction, at the start we had a working MVP prototype implemented by another team, which had to be scaled from 20 tests to 1,000, adding new features along the way and ensuring acceptable scalability and flexibility for changes.

The working prototype also gave us a ready technology stack: Java SE8, JUnit4, Selenium + Selenide + Selenoid, and Bamboo as the test “runner” and Allure report “builder”. Since the prototype worked fine and provided the necessary basic functionality, we decided not to change the stack but to focus on making the solution scalable, increasing its stability, and developing the missing required features.

On the whole, everything looked feasible and the outlook was optimistic. And in the end, we fully completed the tasks on schedule.

The following sections describe individual technological and process aspects of developing Autotests.

Description of Autotests. User Stories and Features

Autotests implement the following user stories for the testing group:

- automated manual testing;

- automatic full regression;

- build quality control in the CI/CD pipeline.

Implementation details and architectural decisions will be discussed in Part 2 - Technical. Architecture and technical stack. Implementation details and technical surprises.

Automated manual testing (user story)

As a tester, I want to run a target E2E test that executes without my direct involvement (in automatic mode) and gives me a detailed report broken down by the steps taken, including the data entered and the results obtained. In addition:

- it should be possible to select the target stand before starting the test;

- it should be possible to choose which of the implemented tests to run;

- at the end of the test, I need a video of the test recorded from the browser screen;

- when a test fails, I need a screenshot of the active browser window.

Automatic full regression

As a testing group, I want to run all the tests every night on a specific test stand in automatic mode, with all the features of “Automated manual testing”.

Build quality control in the CI/CD pipeline

As a testing group, I want to automatically test deployable updates of the System on a dedicated preQA stand before updating the target functional test (stage) stands that are then used for functional testing.

Implemented basic features

Here is a brief list of the main functions implemented in Autotests that turned out to be vital or simply useful. Details of how some of the more interesting functions were implemented will be given in the second part of the article.

Local and remote use

This function offers two ways to run Autotests: local and remote.

In local mode, the tester ran the required autotest on their own workstation and could watch what was happening in the browser. The test was launched via the “green triangle” in IntelliJ IDEA. The function was very useful at the start of the project for debugging and demonstrations, but now only autotest developers use it.

In remote mode, the tester starts the autotest through the Bamboo plan interface, specifying the set of tests to run, the stand, and some other parameters.

The function was implemented using the environment variable MODE = REMOTE | LOCAL, depending on which either a local web browser or a remote one in the Selenoid cloud was initialized.
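A minimal sketch of how the MODE switch might be resolved, assuming a hypothetical SELENOID_URL variable and a default Selenoid address of http://localhost:4444/wd/hub (both are illustrative, not the project's settings):

```java
import java.util.Map;

/** Run mode read from the MODE environment variable (hypothetical helper). */
enum RunMode {
    LOCAL, REMOTE;

    /** Parses MODE; a missing or unrecognized value falls back to LOCAL (an assumption). */
    static RunMode fromEnv(Map<String, String> env) {
        String raw = env.get("MODE");
        if (raw != null && raw.trim().equalsIgnoreCase("REMOTE")) {
            return REMOTE;
        }
        return LOCAL;
    }

    /**
     * Returns the remote WebDriver URL to use, or null for a local browser.
     * SELENOID_URL and the default address are hypothetical, for illustration only.
     */
    static String remoteUrl(Map<String, String> env) {
        if (fromEnv(env) == LOCAL) {
            return null;
        }
        String url = env.get("SELENOID_URL");
        return url != null ? url : "http://localhost:4444/wd/hub";
    }
}
```

In a Selenide-based framework, the returned value could be assigned to Configuration.remote (for example, from System.getenv()); when it stays null, Selenide starts a local browser.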

Limiting the composition of running tests by filter

This function makes it possible to limit the set of tests run in remote user mode, for users' convenience and to reduce testing time. Two-stage filtering is used. The first stage blocks tests based on the FILTER_BLOCK variable and is used mainly to exclude large groups of tests from a run. The second stage lets through only tests that match the FILTER variable.

Filter values are specified as a set of regular expressions REGEXP1, ..., REGEXPN, combined with “OR” logic.

When starting a run manually, the tester sets a special environment variable to a list of regular expressions that are applied to the special @Filter(String value) annotation present on every test method in the test classes. The annotation value is unique for each test and is built from a set of tags separated by underscores. We use the following minimal template: SUBSYSTEM_FUNCTION_TEST-ID_{DEFAULT}, where the DEFAULT tag marks tests included in the automatic nightly regression.

The function is implemented through a custom extension of the org.junit.runners.BlockJUnit4ClassRunner class (details will be given in Part 2-1, the continuation of this article).
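The two-stage filtering rule can be sketched independently of the runner. In this sketch, which is an assumption rather than the project's code, FILTER_BLOCK and FILTER are comma-separated regex lists, a regex counts as matching if it is found anywhere in the tag, an empty FILTER lets every non-blocked test through, and untagged methods are never selected; the tags in SampleTests are made up. In JUnit 4, a predicate like shouldRun(Method) could, for example, be called from an overridden isIgnored(FrameworkMethod) in a BlockJUnit4ClassRunner subclass.

```java
import java.lang.annotation.ElementType;
import java.lang.annotation.Retention;
import java.lang.annotation.RetentionPolicy;
import java.lang.annotation.Target;
import java.lang.reflect.Method;
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;
import java.util.regex.Pattern;

/** Tag string on every test method, following SUBSYSTEM_FUNCTION_TEST-ID_{DEFAULT}. */
@Retention(RetentionPolicy.RUNTIME)
@Target(ElementType.METHOD)
@interface Filter {
    String value();
}

/** Hypothetical test class with made-up tags. */
class SampleTests {
    @Filter("ORDERS_CREATE_T-101_DEFAULT")
    public void createOrder() { }

    @Filter("REPORTS_EXPORT_T-205")
    public void exportReport() { }
}

/** Two-stage filter: FILTER_BLOCK excludes, then FILTER includes (regexes OR-ed together). */
class TestFilter {
    private final List<Pattern> block;
    private final List<Pattern> allow;

    TestFilter(String filterBlock, String filter) {
        this.block = parse(filterBlock);
        this.allow = parse(filter);
    }

    /** Splits a comma-separated regex list; null or blank yields an empty list. */
    private static List<Pattern> parse(String spec) {
        List<Pattern> patterns = new ArrayList<>();
        if (spec == null || spec.trim().isEmpty()) {
            return patterns;
        }
        for (String part : spec.split(",")) {
            if (!part.trim().isEmpty()) {
                patterns.add(Pattern.compile(part.trim()));
            }
        }
        return patterns;
    }

    private static boolean anyMatch(List<Pattern> patterns, String tag) {
        for (Pattern p : patterns) {
            if (p.matcher(tag).find()) {
                return true;
            }
        }
        return false;
    }

    /** True if a test with this tag should run. */
    boolean shouldRun(String tag) {
        if (anyMatch(block, tag)) {
            return false; // stage 1: blocked by FILTER_BLOCK
        }
        return allow.isEmpty() || anyMatch(allow, tag); // stage 2: must match FILTER, if set
    }

    /** Reads @Filter from a method; untagged methods are not selected (an assumption). */
    boolean shouldRun(Method method) {
        Filter f = method.getAnnotation(Filter.class);
        return f != null && shouldRun(f.value());
    }

    /** Names of the methods in testClass that pass the filter, sorted for stable output. */
    List<String> selected(Class<?> testClass) {
        List<String> names = new ArrayList<>();
        for (Method m : testClass.getDeclaredMethods()) {
            if (shouldRun(m)) {
                names.add(m.getName());
            }
        }
        Collections.sort(names);
        return names;
    }
}
```

For example, FILTER_BLOCK=^REPORTS_ with an empty FILTER runs everything except the REPORTS subsystem, while FILTER=_DEFAULT$ selects only the nightly-regression tests. Splitting on commas means that, in this sketch, an individual regex cannot itself contain a comma.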

Documenting a report with results for all steps

Test results are shown for every test action (step), with all the required information, using the capabilities of the Allure Framework. There is no point in listing them here: there is plenty of information both on the official website and elsewhere on the Internet. Using the Allure Framework brought no surprises, and in general I recommend it.

The main functions used are:

- display of each test step (the step name matches its name in the test specification, that is, the test scenario);

- display of step parameters in human-readable form (which requires implementing the toString method for every value passed);

- attaching screenshots, videos, and various additional files to the report;

- classifying tests by type and subsystem, and linking autotests to test specifications in the TestLink test management system, using dedicated annotations.
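Why toString() matters here: Allure shows step parameters in the report in their string form, so a model class that inherits Object.toString() appears as something like SupplierData@1b6d3586. A minimal sketch of a data model with a readable representation (the class and its fields are hypothetical):

```java
/** Hypothetical data model passed as a step parameter. */
class SupplierData {
    private final String taxId;
    private final String name;

    SupplierData(String taxId, String name) {
        this.taxId = taxId;
        this.name = name;
    }

    /** Human-readable form shown in the report instead of the default ClassName@hash. */
    @Override
    public String toString() {
        return "SupplierData{taxId='" + taxId + "', name='" + name + "'}";
    }
}
```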

Download and upload files from / to the browser with their subsequent verification and analysis

Working with files is an extremely important aspect of the test scenarios. We had to support both uploading and downloading various files.

Uploading files meant, first of all, loading dynamically generated EXCEL files into the System according to the context of the test scenario. Uploading was implemented using the standard methods provided by Selenium.

Downloading files meant saving a file to a local directory by clicking a “button” in the browser and then “transferring” it to the server where the Autotests ran (the server hosting the remote Bamboo agent). The file was then parsed and analyzed for format and content. The main file types were EXCEL and PDF.

Implementing this function turned out to be a non-trivial task, primarily because there are no standard file-handling capabilities for it: at the moment, the function is implemented only for the Chrome browser, via the “chrome://downloads/” service page.

I will describe the implementation in detail in the second part.
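The chrome://downloads/ approach is left for Part 2. As a different and simpler technique, which only works when the test process can read the browser's download directory (for example, in local mode), one can poll that directory until a file appears that no longer carries Chrome's temporary .crdownload suffix. A minimal sketch under that assumption (DownloadWatcher is a hypothetical name):

```java
import java.io.IOException;
import java.nio.file.DirectoryStream;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.Optional;

/** Hypothetical helper that waits for a finished browser download in a readable directory. */
class DownloadWatcher {
    /** Returns a finished download in dir, ignoring Chrome's in-progress ".crdownload" files. */
    static Optional<Path> findCompleted(Path dir) throws IOException {
        try (DirectoryStream<Path> files = Files.newDirectoryStream(dir)) {
            for (Path file : files) {
                String name = file.getFileName().toString();
                if (Files.isRegularFile(file) && !name.endsWith(".crdownload")) {
                    return Optional.of(file);
                }
            }
        }
        return Optional.empty();
    }

    /** Polls dir until a completed file appears or timeoutMillis elapses. */
    static Optional<Path> waitForDownload(Path dir, long timeoutMillis, long pollMillis)
            throws IOException, InterruptedException {
        long deadline = System.currentTimeMillis() + timeoutMillis;
        while (true) {
            Optional<Path> done = findCompleted(dir);
            if (done.isPresent() || System.currentTimeMillis() >= deadline) {
                return done;
            }
            Thread.sleep(pollMillis);
        }
    }
}
```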

Handling the asynchronous nature of the Angular interface. Controlling pending requests between Angular and REST services

Since the application under test was built with Angular, we had to learn to “fight” the asynchronous nature of the front end and its timeouts.

In addition to org.openqa.selenium.support.ui.FluentWait, we use a purpose-built wait method that checks via JavaScript for “incomplete” interactions with the front-end REST services. Based on this dynamic timeout, tests find out whether they can proceed or should wait a little longer.

Functionally, this let us significantly reduce test execution time by dropping static waits in places where there had been no other way to detect that an operation had completed. It also allowed us to identify “hanging” REST services with performance problems. For example, we caught a REST service whose page size was set to 10,000 entries.

Information about a “frozen” REST service that causes a test to fail for infrastructure reasons, including all the parameters of its call, is added to the results of the failed test and also sent as an event to ELK. This lets us pass identified problems to the relevant System development teams immediately.
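The idea of a dynamic wait, as opposed to a fixed sleep, can be sketched as a polling loop over a condition. The JavaScript probe here is hypothetical: it assumes the application keeps a global window.__pendingRequests counter, which the real System may not do; the article does not say how its check is implemented.

```java
import java.util.function.BooleanSupplier;

/** Polling wait that returns as soon as a condition holds instead of sleeping a fixed time. */
class PendingRequestWait {
    /**
     * Hypothetical JavaScript probe; it assumes the application increments and decrements
     * a global window.__pendingRequests counter (e.g. from an HTTP interceptor).
     */
    static final String PENDING_REQUESTS_JS =
        "return (window.__pendingRequests || 0) === 0;";

    /** Returns true once idle.getAsBoolean() is true, false if timeoutMillis elapses first. */
    static boolean waitUntil(BooleanSupplier idle, long timeoutMillis, long pollMillis)
            throws InterruptedException {
        long deadline = System.currentTimeMillis() + timeoutMillis;
        while (System.currentTimeMillis() < deadline) {
            if (idle.getAsBoolean()) {
                return true;
            }
            Thread.sleep(pollMillis);
        }
        return idle.getAsBoolean(); // one last check at the deadline
    }
}
```

With Selenium, the condition could be supplied as () -> Boolean.TRUE.equals(((JavascriptExecutor) driver).executeScript(PendingRequestWait.PENDING_REQUESTS_JS)). A false result on timeout is the point at which a test could report the still-pending requests as “hung”.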

Browser Log Control

Browser log control was added to capture SEVERE-level errors on pages and so obtain additional information for failed tests, for example, to monitor errors such as "... Failed to load resource: the server responded with a status of 500 (Internal Server Error)".

The list of page errors reported by the browser is attached to each test result and also exported as events to ELK.
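A minimal sketch of the SEVERE-level filtering, assuming the log lines have already been collected from the browser. Selenium's LogEntry exposes a java.util.logging.Level and a message; BrowserLogLine below is a hypothetical stand-in so the example runs without Selenium.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.logging.Level;

/** Hypothetical stand-in for a browser log entry: a level plus a message. */
class BrowserLogLine {
    final Level level;
    final String message;

    BrowserLogLine(Level level, String message) {
        this.level = level;
        this.message = message;
    }
}

class BrowserLogCheck {
    /** Keeps only SEVERE (or higher) messages, the ones worth attaching to a test result. */
    static List<String> severeMessages(List<BrowserLogLine> lines) {
        List<String> result = new ArrayList<>();
        for (BrowserLogLine line : lines) {
            if (line.level.intValue() >= Level.SEVERE.intValue()) {
                result.add(line.message);
            }
        }
        return result;
    }

    /** True if any message reports an HTTP 5xx response from the server. */
    static boolean hasServerError(List<String> severe) {
        for (String message : severe) {
            if (message.matches(".*status of 5\\d\\d.*")) {
                return true;
            }
        }
        return false;
    }
}
```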

Test video recording and taking a screenshot of the page at the point where the test “fails”

These functions make it easier to diagnose and analyze failed tests.

Video recording is enabled separately, for remote test runs only. The video is attached to the results in the Allure report.

A screenshot is taken automatically when a test fails and is also attached to the Allure report.

Passing events to ELK

Sending events to ELK makes it possible to analyze statistically the overall behavior of Autotests and the stability of the object under test. Currently, we send events on test completion (with result and duration), SEVERE-level browser errors, and detected “hung” REST services.
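A sketch of what a test-completion event might look like before it is shipped to ELK. The field names are hypothetical, and how the JSON reaches Elasticsearch (for example, through Logstash's http input) is an assumption, since the article does not describe the transport.

```java
import java.util.LinkedHashMap;
import java.util.Map;

/** Builds a flat JSON event for ELK; field names are hypothetical, not the project's schema. */
class ElkEvent {
    private final Map<String, Object> fields = new LinkedHashMap<>();

    ElkEvent put(String key, Object value) {
        fields.put(key, value);
        return this;
    }

    /** Minimal JSON string escaping: quotes, backslashes, and control characters. */
    private static String quote(String s) {
        StringBuilder sb = new StringBuilder("\"");
        for (char c : s.toCharArray()) {
            switch (c) {
                case '"': sb.append("\\\""); break;
                case '\\': sb.append("\\\\"); break;
                case '\n': sb.append("\\n"); break;
                case '\r': sb.append("\\r"); break;
                case '\t': sb.append("\\t"); break;
                default:
                    if (c < 0x20) {
                        sb.append(String.format("\\u%04x", (int) c));
                    } else {
                        sb.append(c);
                    }
            }
        }
        return sb.append('"').toString();
    }

    /** Serializes fields in insertion order; numbers and booleans are written unquoted. */
    String toJson() {
        StringBuilder sb = new StringBuilder("{");
        boolean first = true;
        for (Map.Entry<String, Object> e : fields.entrySet()) {
            if (!first) {
                sb.append(',');
            }
            first = false;
            sb.append(quote(e.getKey())).append(':');
            Object v = e.getValue();
            if (v instanceof Number || v instanceof Boolean) {
                sb.append(v);
            } else {
                sb.append(v == null ? "null" : quote(String.valueOf(v)));
            }
        }
        return sb.append('}').toString();
    }
}
```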

Development Organization

Development team

So, we needed at least 5 developers. Add one more person to cover unplanned absences, and we get 6. Plus a team lead, who is responsible for cross-cutting functionality and code review.

Thus, we needed to hire 6 Java developers (in reality, at the peak of autotest development, the team exceeded 10 automation engineers).

Given the general state of the market and the fairly simple technology stack, the team was formed mainly of interns, most of whom had either just graduated from university or were in their final year. In effect, we were looking for people with basic knowledge of Java. Preference was given to manual testing specialists who wanted to become programmers, and to motivated candidates with some (minor) development experience who wanted to become programmers in the future.

Relying on beginner juniors required compensating mechanisms: a specific career offer, a clear work schedule, and dividing the application into separate functional domains (more on this in Part 2 - Technical. Architecture and technical stack. Implementation details and technical surprises).

When organizing the autotest development process, we took into account forecasts of high staff turnover and structured the work with juniors so that the Autotests project became a necessary first step in a developer's career in our company: a kind of one-year residency for graduates, or a CodeRush-style course. This approach proved to be the right one.

Development process

The development process has virtually no dedicated iterations. Developers, guided by their areas of responsibility, “pull” tasks from the backlog and implement them.

The tasks are mainly developing new tests or refining (updating) existing ones. Completed tasks go through code review via the merge request procedure (in GitLab). After a successful code review, the results are immediately merged into the main branch (effectively the production branch) and become available for use right away.

The key aspects of this process are a continuous pace of test delivery to production and the ability of developers to work independently. Our process involves virtually no group work on implementing autotests, even for the same subsystem: automation tasks may not be split into subtasks.

Code review is a mandatory step, and if the results (code) do not comply with the code convention and architectural requirements, the task is returned for rework. Code review is done by the team lead.

Before submitting a task for code review, the developer must always attach the test run results to it, and every step in those results must be successful.

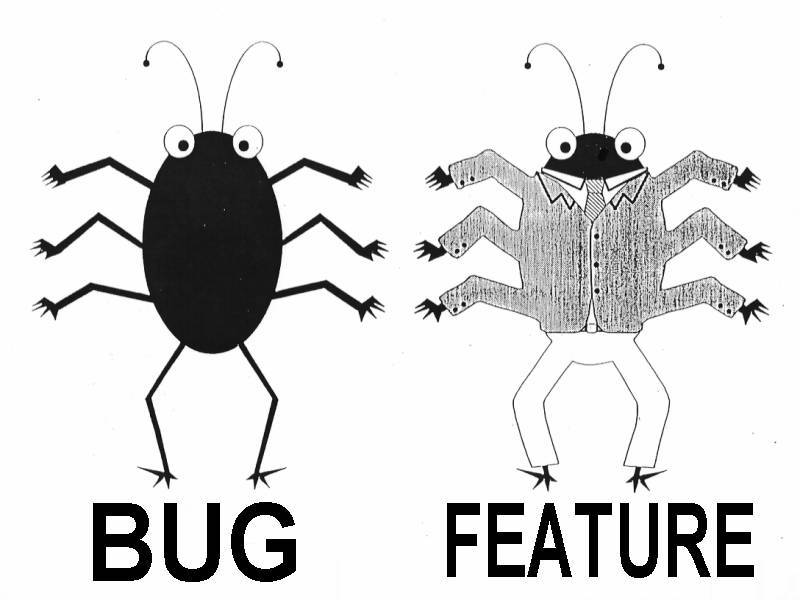

An interesting point: during development it often turned out that an autotest could not pass, either because the test scenario was outdated or because the subsystem under test contained a bug that had been worked around during manual testing. In such cases, autotest development was paused until the functional testing group determined whether it was a bug or a feature and corrected the specification and/or the System.

Implementation effort was estimated in “test steps”, obtained by simply summing the steps of the test scenario being automated. In practice, this was the total number of items in the test case.

In addition to this ongoing process, we held weekly one-hour team meetings to review recent work retrospectively, identify organizational and technical difficulties, and, where possible, agree on additional measures to improve the development process. This is a direct analogue of a sprint retrospective.

Specification of Development Tasks

The initial development tasks in the backlog came as test specifications (test scenarios) from the functional testing group, which acted as an internal user and stakeholder for the AutoTests.

Developing a convenient and effective format for specifying autotests is an important part of the process we built. On the one hand, we wanted to minimize ambiguous and vague interpretations with conditional branches, which required constant verbal clarification of the required target behavior of the autotests. On the other hand, we had to be able to use the existing test specifications without significant rework. In the end, we arrived at the following manifesto.

Use the imperative mood when describing test case steps (open the page, enter values in the fields, etc.), since the steps are in essence requirements for checking the System's functionality. If you need to provide additional (auxiliary) information, explicitly mark it as “additional information” / “note” (it is important that the team is never confused about whether this information is an instruction or is provided for reference only).

One test case must contain a linear sequence of test steps, with no branching of the test logic at any step (an example of branching: if the step results in an error, perform these steps; if it succeeds, perform those). It is also not allowed to describe several test scenarios with different preconditions or different steps within one test case: the rule “one test case describes one linear test scenario (positive or negative)” applies.

To avoid duplicating groups of steps across test cases, the steps of one test case (the “donor” case) can be reused in full within another test case (the “main” case). In the specification of the main case, one of the steps may read “Complete all steps from case X” (reusing only some of the donor case's steps is not allowed).
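The donor-case rule and the “sum of steps” effort estimate fit together naturally: expanding every “Complete all steps from case X” reference in full yields the linear step list, and its length is the estimate. A minimal sketch, with hypothetical Step and CaseExpander types and a guard against cyclic references:

```java
import java.util.ArrayList;
import java.util.HashSet;
import java.util.List;
import java.util.Map;
import java.util.Set;

/** A test case step: either a plain action or "Complete all steps from case X" (hypothetical). */
class Step {
    final String text;        // plain action, or null for a donor reference
    final String donorCaseId; // referenced donor case, or null for a plain action

    private Step(String text, String donorCaseId) {
        this.text = text;
        this.donorCaseId = donorCaseId;
    }

    static Step action(String text) { return new Step(text, null); }

    static Step completeCase(String caseId) { return new Step(null, caseId); }
}

class CaseExpander {
    /** Expands donor references in full (never partially) into a flat, linear list of actions. */
    static List<String> expand(String caseId, Map<String, List<Step>> cases) {
        List<String> out = new ArrayList<>();
        expandInto(caseId, cases, new HashSet<String>(), out);
        return out;
    }

    private static void expandInto(String caseId, Map<String, List<Step>> cases,
                                   Set<String> inProgress, List<String> out) {
        List<Step> steps = cases.get(caseId);
        if (steps == null) {
            throw new IllegalArgumentException("Unknown test case: " + caseId);
        }
        if (!inProgress.add(caseId)) {
            throw new IllegalStateException("Cyclic donor reference at: " + caseId);
        }
        for (Step step : steps) {
            if (step.donorCaseId != null) {
                expandInto(step.donorCaseId, cases, inProgress, out);
            } else {
                out.add(step.text);
            }
        }
        inProgress.remove(caseId); // the same donor may still be reused elsewhere
    }
}
```

The effort estimate for a case is then simply CaseExpander.expand(caseId, cases).size().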

Repository Organization

The repository organization was quite simple and met the needs of the development process. There was only one main branch, master, from which feature branches were created. Feature branches were merged into the main branch via merge request immediately after the code review was completed.

At the start of the project, we decided not to support different releases of autotests, as this was not necessary, so we did not create separate release branches.

Pros and cons:

(+) a simple continuous delivery process for small teams;

(+) features can be deployed as they are developed, without release planning at the code level;

(+) lets us keep up with the actual System development without release delays;

(-) only ONE version of the framework (the current one) is supported;

(-) previous versions of Autotests receive only hotfixes.

As a result, we came to the following rules.

Developer

- Create a branch of the required type from MASTER.

- Do the development.

- Test the FEATURE branch on the required stand.

- If the automation engineer worked on the branch alone and its history is linear, squash the commit history via rebase.

- Push the final commit to GitLab and create a merge request assigned to the team lead or person in charge. In the merge request, specify:

  - title: the task key in the Jira issue tracker;

  - description: a link to the branch test results in Bamboo.
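The merge request rules are simple enough to check mechanically. A hypothetical sketch (the Jira key pattern is the common PROJECT-123 shape, and the Bamboo host and /browse/ path are assumptions):

```java
import java.util.regex.Pattern;

/** Hypothetical pre-review checks for the merge request rules. */
class MergeRequestCheck {
    // Typical Jira issue key shape: project key, dash, number (e.g. "AUTO-123").
    private static final Pattern JIRA_KEY = Pattern.compile("^[A-Z][A-Z0-9]+-\\d+$");

    /** True if the merge request title is exactly a Jira issue key. */
    static boolean titleIsJiraKey(String title) {
        return title != null && JIRA_KEY.matcher(title.trim()).matches();
    }

    /** True if the description links to a Bamboo result page under bambooBaseUrl. */
    static boolean descriptionLinksBamboo(String description, String bambooBaseUrl) {
        return description != null && description.contains(bambooBaseUrl + "/browse/");
    }
}
```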

GateKeeper (role performed by the team lead)

- Check that the development branch works, based on the autotest results in Bamboo.

- Review the code for compliance with the code convention and the architectural design rules.

- Merge FEATURE into MASTER; in case of a conflict, the task is returned to the developer to resolve it as part of normal work.

- Close the development task.

This article was co-written with kotalesssk, the project manager and product owner.

This concludes Part 1, devoted to organizing the development and usage process. The technical aspects of the implementation will be described in Part 2.

The second part, the technical one, is aimed primarily at leads of UI end-2-end test automation groups and lead test automation engineers. There they will find concrete recipes for organizing code architecture and deployment that support massively parallel development of large groups of tests while test specifications keep changing. The second part also contains the full list of functions needed for UI tests, with some implementation details, and a list of surprises you might also run into.