The second part of the translation of Raymond Kurzweil's essay “The Law of Accelerating Returns.”

(The first part is here.)

Thought experiment

Let's take a closer look at who I am and who the new Ray is. First of all, am I my body and my brain?

Keep in mind that the particles that make up my body and brain are constantly changing. We are not a permanent collection of particles at all. The cells in our bodies turn over at different rates, but the particles (such as atoms and molecules) that make up our cells are exchanged very quickly. I am not at all the same collection of particles that I was even a month ago. What persists is a structure of matter and energy that is semi-permanent (in the sense that it changes only gradually), while our material content changes constantly and very quickly. We are rather like the patterns that water makes in a stream. Rushing over the rocks, the water forms a particular, unique pattern. That pattern can remain relatively unchanged for hours or even years. Of course, the actual substance that forms the pattern, the water, is replaced within milliseconds. The same is true for Ray Kurzweil. Like the water in a stream, my particles are constantly changing, but the pattern that people recognize as Ray Kurzweil has a certain level of continuity. This suggests that we should not associate our fundamental identity with a specific set of particles, but rather with the structure of matter and energy that we represent. Many contemporary philosophers seem partial to this “identity by structure” argument.

Wait a minute (again)!

If you were to scan my brain and reinstantiate a new Ray while I was sleeping, I would not necessarily even know about it (with nanobots, this would be a feasible scenario). If you then came to me and said, “Good news, Ray, we've successfully reinstantiated your mind file, so we won't be needing your old brain anymore,” I might suddenly see the flaw in this “identity by structure” argument. I might wish the new Ray well and realize that he shares my “structure,” but I would nonetheless conclude that he is not me, because I'm still here. How could he be me? After all, I would not necessarily even know that he existed.

Let's consider another perplexing scenario. Suppose I replace a small number of biological neurons with functionally equivalent non-biological ones (they may provide certain benefits, such as greater reliability and longevity, but that is not essential to this thought experiment). After this procedure, am I still the same person? My friends certainly think so. I still have the same self-deprecating humor, the same silly grin; I'm still the same guy.

It should be clear where I'm going with this. Bit by bit, region by region, I ultimately replace my entire brain with essentially identical (perhaps improved) non-biological equivalents (preserving all of the neurotransmitter concentrations and other details that represent my learning, skills, and memories). At each point, I feel the procedures were successful. At each point, I feel that I am the same guy. After each procedure, I claim to be the same guy. My friends concur. There is no old Ray and new Ray, just one Ray, who appears essentially unchanged.

However, consider the following. This gradual replacement of my brain with its non-biological equivalent is essentially identical to the following sequence:

- scan Ray and reinstantiate Ray's mind file in a new (non-biological) Ray, and then

- eliminate the old Ray

But we concluded above that in such a scenario the new Ray is not the same as the old Ray. And if the old Ray is eliminated, then Ray no longer exists. So the gradual replacement scenario essentially ends with the same result: a new Ray has been created and the old Ray has been destroyed, even if we never saw him go. What appears to be the continuing existence of just one Ray is really the creation of a new Ray and the termination of the old one.

On yet another hand, the gradual replacement scenario is not altogether different from what happens normally to our biological selves, in that our particles are always being rapidly replaced. So am I constantly being replaced by someone else who just happens to be very similar to my old self?

I am trying to illustrate why consciousness is not an easy issue. If we talk about consciousness as just a certain type of intellectual skill, such as the ability to reflect on one's own self and situation, then the issue is not difficult at all, because any skill, capability, or form of intelligence that one cares to define will be replicated in non-biological entities (i.e., machines) within a few decades. With this type of objective view of consciousness, the riddles go away. But a fully objective view does not penetrate to the core of the issue, because the essence of consciousness is subjective experience, not the objective correlates of that experience.

Will these machines of the future be capable of spiritual experience?

They will certainly claim to be. They will claim to be people and to have the full range of emotional and spiritual experiences that people claim to have. And these will not be idle claims; they will exhibit the full range of rich, complex, and subtle behavior that we associate with such feelings. How do these undoubtedly persuasive claims and behaviors relate to the subjective experience of these reinstantiated people? We keep coming back to the very real but ultimately unmeasurable issue of consciousness.

People often talk about consciousness as if it were a clear property of an entity that can readily be detected, identified, and measured. If there is one crucial insight we can offer about why the issue of consciousness is so contentious, it is this:

There is no objective test that could definitively determine its presence.

Science is about objective measurement and the logical conclusions drawn from it, but the very nature of objectivity is that you cannot measure subjective experience; you can only measure its correlates, such as behavior (and by behavior I include the actions of an entity's components, such as neurons). This limitation has to do with the very nature of the concepts of “objective” and “subjective.” Fundamentally, we cannot penetrate the subjective experience of another entity with direct objective measurement. We can certainly make arguments about it, for example, “look inside the brain of this non-human entity and see how its methods are just like those of a human brain.” Or, “see how its behavior is just like human behavior.” But in the end, these remain just arguments. No matter how convincing the behavior of a reinstantiated person, some observers will refuse to accept the consciousness of such an entity unless it squirts neurotransmitters, is based on DNA-guided protein synthesis, or has some other specific biologically human attribute.

We assume that other people are conscious, but that is still an assumption, and there is no consensus among people about the consciousness of non-human entities, such as other higher animals. The issue will be even more contentious with regard to future non-biological entities with human-like behavior and intelligence.

So how will we resolve the claimed consciousness of non-biological intelligence (claimed, that is, by the machines themselves)? From a practical perspective, we will accept their claims. Keep in mind that non-biological entities in the twenty-first century will be extremely intelligent, so they will be able to convince us that they are conscious. They will have all the subtle emotional cues that convince us today that people are conscious. They will be able to make us laugh and cry. And they will get angry if we do not accept their claims. But this is fundamentally a political prediction, not a philosophical argument.

On microtubules and quantum computing

A few years ago, Roger Penrose, a noted physicist and philosopher, suggested that fine structures in neurons called microtubules perform an exotic form of computation called “quantum computing.” Quantum computing is computing with so-called “qubits,” which take on all possible combinations of solutions simultaneously. It can be considered an extreme form of parallel processing (because every combination of qubit values is tested at the same time). Penrose suggests that microtubules and their quantum computing capabilities complicate the idea of re-creating neurons and reinstantiating a state of consciousness.
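The phrase “all possible combinations at the same time” refers to superposition. As a rough illustration only (a classical simulation, not a model of neurons or of Penrose's proposal; the function names are hypothetical), the sketch below puts n qubits through a Hadamard gate each, starting from all zeros. The result is a state vector of 2^n equal amplitudes, one per combination of qubit values, which is also why simulating qubits on ordinary hardware becomes expensive so quickly.

```python
import math


def apply_hadamard(state, target):
    """Apply a Hadamard gate to qubit `target` of a state vector (list of amplitudes)."""
    h = 1 / math.sqrt(2)
    new_state = list(state)
    step = 1 << target
    for i in range(len(state)):
        if i & step == 0:  # pair basis state i (target bit 0) with i | step (target bit 1)
            a, b = state[i], state[i | step]
            new_state[i] = h * (a + b)
            new_state[i | step] = h * (a - b)
    return new_state


def uniform_superposition(n_qubits):
    """Start in |00...0> and apply a Hadamard to every qubit."""
    state = [0.0] * (2 ** n_qubits)
    state[0] = 1.0
    for q in range(n_qubits):
        state = apply_hadamard(state, q)
    return state


if __name__ == "__main__":
    for n in (1, 3, 10):
        s = uniform_superposition(n)
        # number of amplitudes, one amplitude, and total probability (should be 1)
        print(n, len(s), round(s[0], 4), round(sum(x * x for x in s), 6))
```

With 10 qubits the simulated state already holds 1,024 amplitudes, each equal to 1/32; every added qubit doubles that count.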

However, there is little to suggest that microtubules contribute to the thinking process. Even generous models of human knowledge and capability are more than accounted for by current estimates of brain size, based on contemporary models of neuron functioning that do not include microtubules. In fact, even with these microtubule-less models, the brain appears to be conservatively designed, with many more connections (by several orders of magnitude) than it needs for its capabilities and capacity. Recent experiments (for example, those at the Institute for Nonlinear Science in San Diego) showing that hybrid biological and non-biological networks perform similarly to all-biological networks, while not definitive, strongly suggest that our microtubule-less models of neuron functioning are adequate. Lloyd Watts's computer model of auditory processing uses an order of magnitude less computation than the network of neurons it models, and there is no hint that it needs quantum computing.

However, even if microtubules are important, this does not change the projections I discussed above to any significant degree. According to my model of computational growth, if microtubules multiplied neuron complexity by a factor of a thousand (and keep in mind that our current microtubule-less neuron models are already complex, including on the order of a thousand connections per neuron, multiple nonlinearities, and other details), this would delay our reaching human brain capacity by only about 9 years. A factor of a million would delay it by only about 17 years, and a factor of a billion by around 24 years (recall that computation is growing at a double exponential rate).
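The shrinking delays (about 9, 17, and 24 years for thousand-, million-, and billion-fold increases) come from a growth curve whose doubling rate is itself rising. The essay does not spell out that model, so the sketch below is only a stand-in under stated assumptions: price-performance doubles about once a year at the reference date (d0 = 1), and that doubling rate grows by 1.5% a year (k = 0.015). Both numbers are hypothetical, picked to land near the essay's figures. Cumulative doublings are then D(t) = (d0/k)(e^(kt) − 1), and inverting gives the delay t = ln(1 + Dk/d0)/k, where D = log2 of the complexity factor.

```python
import math

# Hypothetical parameters for illustration only (not Kurzweil's published model).
D0 = 1.0   # doublings of price-performance per year at the reference date
K = 0.015  # yearly growth rate of the doubling rate itself


def doublings_after(years, d0=D0, k=K):
    """Cumulative doublings when the doubling rate grows as d0 * exp(k * t)."""
    return d0 / k * (math.exp(k * years) - 1)


def delay_years(complexity_factor, d0=D0, k=K):
    """Extra years needed to absorb a `complexity_factor`-fold rise in required computation."""
    needed = math.log2(complexity_factor)      # doublings needed to cover the factor
    return math.log(1 + needed * k / d0) / k    # inverse of doublings_after()


if __name__ == "__main__":
    for factor in (1e3, 1e6, 1e9):
        print(f"{factor:.0e}x more computation -> about {delay_years(factor):.1f} years")
```

With these parameters the delays come out at roughly 9.3, 17.4, and 24.7 years. The shape matters more than the exact values: each further thousand-fold factor costs fewer extra years than the one before, because the doubling rate keeps speeding up.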

As for quantum computing, once again there is nothing to suggest that the brain does quantum computing. The fact that quantum technology may be feasible does not mean that the brain uses it. After all, we don't have lasers or even radios in our brains. Although some scientists have claimed to detect quantum wave collapse in the brain, no one has identified human capabilities that actually require a capacity for quantum computing.

However, even if the brain does perform quantum computing, this does not significantly change the outlook for achieving computing at the level of the human brain (and beyond), nor does it rule out uploading a model of the brain. First of all, if the brain does quantum computing, this would only confirm that quantum computing is feasible. Nothing in such a finding would suggest that quantum computing is restricted to biological mechanisms. Biological quantum computing mechanisms, if they exist, could be replicated. Indeed, recent experiments with small-scale quantum computers appear to have been successful. Even the conventional transistor relies on the quantum effect of electron tunneling.

Penrose suggests that it is impossible to perfectly replicate a set of quantum states, so perfect copying is impossible. Well, how perfect does a copy have to be? I am in a very different quantum state (and different in many non-quantum ways as well) than I was a minute ago (and certainly in a very different state than I was before I wrote this paragraph). If we develop uploading technology to the point where the “copies” are as close to the original as the original is to itself over the course of one minute, that would be good enough for any conceivable purpose, and it would not require copying quantum states. As the technology improves, the copy could become as close to the original as the original is to itself over ever shorter periods (for example, one second, one millisecond, one microsecond).

When it was pointed out to Penrose that neurons (and even neural connections) were too big for quantum computing, he came up with the microtubule theory as a possible mechanism for neural quantum computing. Thus the concepts of quantum computing and microtubules were introduced together. If one is searching for barriers to replicating brain function, it is an ingenious theory, but it fails to introduce any genuine barriers. There is no evidence for it so far, and even if such evidence appears, it would only delay matters by a decade or two. There is no reason to believe that biological mechanisms (including quantum computing) are inherently impossible to replicate using non-biological materials and mechanisms. Dozens of contemporary experiments are successfully performing just this type of replication.

Non-invasive, surgery-free, reversible, programmable, distributed brain implants, full-immersion virtual reality environments, experience beamers, and brain expansion

How will we apply technology that is more intelligent than its creators? You might be tempted to answer “Caution!” But let's take a look at some examples.

Let's consider a few examples of nanobot technology which, based on miniaturization and cost reduction trends, will be feasible within the next 30 years. In addition to scanning our brains, nanobots will also be able to expand our experiences and our capabilities.

Nanobot technology will provide fully immersive, totally convincing virtual reality in the following way. The nanobots take up positions in close physical proximity to every interneuronal connection coming from all of our senses (e.g., eyes, ears, skin). We already have the technology for electronic devices to communicate with neurons in both directions without requiring direct physical contact with the neurons. For example, scientists at the Max Planck Institute have developed “neuron transistors” that can detect the firing of a nearby neuron, or alternatively can cause a nearby neuron to fire or suppress it from firing. This amounts to two-way communication between neurons and the electronic neuron transistors. The Institute's scientists demonstrated their invention by controlling the movement of a living leech from their computer. Again, the primary aspects of nanobot-based virtual reality that are not yet feasible are size and cost.

When we want to experience real reality, the nanobots just stay in position (in the capillaries) and do nothing. If we want to enter virtual reality, they suppress all of the inputs coming from our real senses and replace them with the signals that would be appropriate for the virtual environment. You (that is, your brain) could decide to move your muscles and limbs as you normally would, but the nanobots again intercept these interneuronal signals, keep your real limbs from moving, and instead cause your virtual limbs to move, providing the appropriate movement and reorientation in the virtual environment.
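The switching behavior described in the last two paragraphs can be sketched as a small signal router. This is a toy model under loud assumptions: the class names, the `Mode` switch, and treating a neural signal as a labeled string are all hypothetical simplifications, not a description of any real neural interface.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Dict, List, Optional


class Mode(Enum):
    REAL = "real"
    VIRTUAL = "virtual"


@dataclass
class Signal:
    source: str   # hypothetical labels, e.g. "retina", "cochlea", "motor_cortex"
    payload: str


@dataclass
class InterceptingNanobot:
    """Hypothetical model of one nanobot stationed at an interneuronal connection."""
    mode: Mode = Mode.REAL
    virtual_world: Dict[str, str] = field(default_factory=dict)  # sense -> virtual input
    virtual_limb_moves: List[str] = field(default_factory=list)

    def route_sensory(self, real: Signal) -> Signal:
        """Pass real sensory input through, or replace it with virtual input."""
        if self.mode is Mode.REAL:
            return real  # real reality: stay in place and do nothing
        # Virtual reality: suppress the real input and substitute the virtual one.
        return Signal(real.source, self.virtual_world.get(real.source, "silence"))

    def route_motor(self, command: Signal) -> Optional[Signal]:
        """Let a motor command reach the real limb, or redirect it to the virtual body."""
        if self.mode is Mode.REAL:
            return command
        self.virtual_limb_moves.append(command.payload)  # move the virtual limb instead
        return None  # the real limb receives nothing, so it stays still


if __name__ == "__main__":
    bot = InterceptingNanobot(virtual_world={"retina": "alien beach"})
    print(bot.route_sensory(Signal("retina", "bedroom ceiling")).payload)
    bot.mode = Mode.VIRTUAL
    print(bot.route_sensory(Signal("retina", "bedroom ceiling")).payload)
    print(bot.route_motor(Signal("motor_cortex", "raise arm")), bot.virtual_limb_moves)
```

The design choice mirrors the text: in real mode the router is a pass-through, and all of the substitution logic lives in the virtual branch, so “doing nothing” really is the default.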

The web will provide a panoply of virtual environments to explore. Some will be re-creations of real places; others will be fanciful environments that have no “real” counterpart. Some would be impossible in the physical world (perhaps because they violate the laws of physics). We will be able to “go” to these virtual environments by ourselves, or we will meet other people there, both real and simulated. Of course, ultimately there won't be a clear distinction between the two.

By 2030, going to a website will mean entering a full-immersion virtual reality environment. In addition to encompassing all of the senses, these shared environments can include emotional overlays, as the nanobots will be capable of triggering the neurological correlates of emotions, sexual pleasure, and other derivatives of our sensory experiences and mental reactions.

In the same way that people today broadcast their lives through webcams in their bedrooms, the “experience beamers” of 2030 will broadcast their entire flow of sensory experiences and, if they wish, their emotions and other secondary reactions. We will be able to plug in (by going to the appropriate website) and experience other people's lives, as in the plot concept of “Being John Malkovich.” Particularly interesting experiences can be archived and relived at any time.

We won't need to wait until 2030 to experience virtual reality environments, at least for the visual and auditory senses. Full-immersion visual-auditory environments will be available by the end of this decade, with images written directly onto our retinas by our eyeglasses and contact lenses. All of the electronics for computation, image reconstruction, and a very high-bandwidth wireless connection to the Internet will be embedded in our glasses and woven into our clothing, so computers as distinct objects will disappear.

In my opinion, the most significant implication of the Singularity will be the merger of biological and non-biological intelligence. First, it is important to point out that well before the end of the twenty-first century, thinking on non-biological substrates will dominate. Biological thinking is stuck at 10^26 calculations per second (for all biological human brains combined), and that figure will not change appreciably, even with bioengineering changes to our genome. Non-biological intelligence, on the other hand, is growing at a double exponential rate and will vastly exceed biological intelligence well before the middle of this century. However, in my view, this non-biological intelligence should still be considered human, since it is fully derived from our human-machine civilization. The merger of these two worlds of intelligence is not merely a merger of biological and non-biological thinking; more importantly, it is a new method and organization of thinking.

One of the key ways in which these two worlds can interact is through nanobots. Nanobot technology will be able to expand our minds in virtually any imaginable way. Our brains today are relatively fixed in design. Although we do add patterns of interneuronal connections and neurotransmitter concentrations as a normal part of learning, the current overall capacity of the human brain is highly constrained, restricted to a mere hundred trillion connections. Brain implants based on massively distributed intelligent nanobots will ultimately expand our memories a trillion-fold and otherwise vastly improve all of our sensory, pattern recognition, and cognitive abilities. Since the nanobots communicate with each other over a wireless local area network, they can create any set of new neural connections, break existing connections (by suppressing neural firing), create new hybrid biological and non-biological networks, and add vast new non-biological networks.

Using nanobots as brain extenders is a significant improvement over the idea of surgically installed neural implants, which are beginning to be used today (e.g., implants in the ventral posterior nucleus, subthalamic nucleus, and ventral lateral thalamus to counteract Parkinson's disease and tremors from other neurological disorders, cochlear implants, and others). Nanobots will be introduced without surgery, essentially just by injecting or even swallowing them. They can all be directed to leave the body, so the process is easily reversible. They are programmable, in that they can provide virtual reality one minute and a variety of brain extensions the next. They can change their configuration and, of course, their software. Perhaps most importantly, they are massively distributed and can therefore take up billions or trillions of positions throughout the brain, whereas a surgically introduced neural implant can be placed in only one or at most a few locations.

Double exponential economic growth in the 90s was not a bubble

Another manifestation of the law of accelerating returns as it approaches the Singularity can be found in the world of economics, a world vital both to the emergence of the law of accelerating returns and to its implications. It is the economic imperative of a competitive marketplace that drives technology forward and fuels the law of accelerating returns. In turn, the law of accelerating returns, especially as we approach the Singularity, is transforming economic relationships.

Virtually all of the economic models taught in economics classes, used by the Federal Reserve Board to set monetary policy, used by government agencies to set economic policy, and used by economic forecasters of all kinds are fundamentally flawed, because they are based on the intuitive linear view of history rather than the historically based exponential view. The reason these linear models appear to work for a while is the same reason most people adopt the intuitive linear view in the first place: exponential trends appear to be linear (and are approximately linear) over a brief period, particularly in the early stages of an exponential trend, when not much is happening. But once the “knee of the curve” is reached and exponential growth takes off, the linear models break down. The exponential trends underlying productivity growth are just now entering this explosive phase.
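The claim that linear models work early and fail after the knee can be checked with a toy calculation. A minimal sketch with made-up numbers: fit a least-squares straight line to the first six points of an exponential curve (10% growth per period is an arbitrary assumption, not economic data), then measure how far the line drifts from the curve further out.

```python
import math


def exponential(t, rate=0.1):
    """Illustrative exponential trend: 1.0 at t = 0, growing 10% per period (hypothetical)."""
    return math.exp(rate * t)


def linear_fit(xs, ys):
    """Ordinary least-squares line through (xs, ys); returns (slope, intercept)."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    slope = sxy / sxx
    return slope, my - slope * mx


def relative_error(t, slope, intercept):
    """How far the linear forecast is from the exponential value, as a fraction."""
    actual = exponential(t)
    return abs(slope * t + intercept - actual) / actual


if __name__ == "__main__":
    early = list(range(6))  # fit only on the early, flat-looking stretch
    slope, intercept = linear_fit(early, [exponential(t) for t in early])
    for t in (2, 5, 10, 20, 40):
        print(t, f"{relative_error(t, slope, intercept):.1%}")
```

Inside the fitted window the straight line is within a couple of percent of the curve; at t = 40 it misses most of the actual value, which is the breakdown the paragraph describes.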

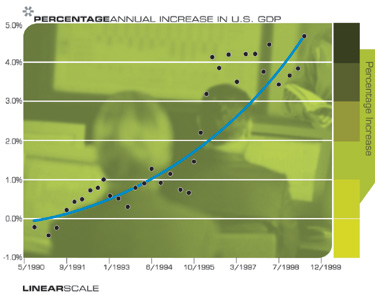

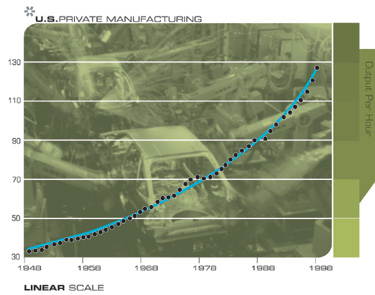

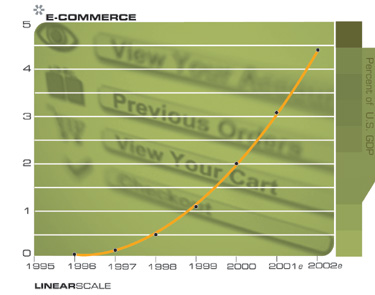

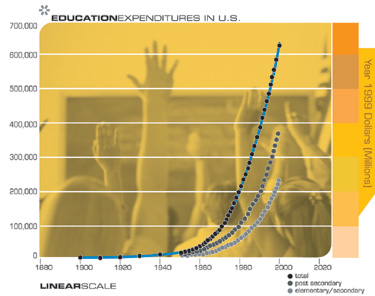

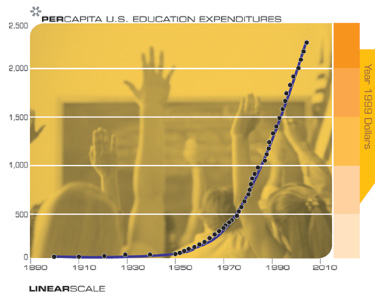

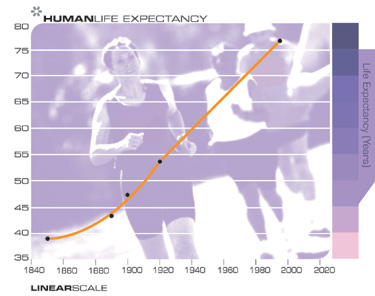

The economy (viewed either as a whole or per capita) has been growing exponentially throughout this century.

There is also a second level of exponential growth, but until recently the second exponent was in its early phase, so the growth in the growth rate went unnoticed.


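Automatic speech recognition software

| | 1985 | 1995 | 2000 |
|---|---|---|---|
| Price | $5,000 | $500 | $50 |
| Vocabulary size (number of words) | 1,000 | 10,000 | 100,000 |
| Continuous speech? | No | No | Yes |
| User training required (minutes) | 180 | 60 | 5 |
| Accuracy | Poor | Average | Good |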


If we described the dangers that exist today to people who lived a couple of hundred years ago, they would think it mad to take such risks. On the other hand, how many people in 2000 would really want to go back to the short, brutish, disease-filled, poverty-stricken, disaster-prone lives that 99 percent of humanity lived a couple of centuries ago? We may romanticize the past, but until fairly recently most of humanity lived extremely fragile lives, in which one all-too-common misfortune could spell disaster. A substantial part of our species still lives this way, which is at least one reason to continue technological progress and the economic growth that accompanies it.

People often go through three stages in examining the impact of future technology: awe and wonder at its potential to overcome age-old problems; then a sense of dread at the new set of grave dangers that accompany these new technologies; and finally, hope and the realization that the only viable and responsible path is to chart a careful course that captures the expected benefits while trying to avoid the dangers.

In his WIRED article “Why the Future Doesn't Need Us,” Bill Joy eloquently described the plagues of centuries past and how new self-replicating technologies, such as mutant bioengineered pathogens and “nanobots” run amok, could bring back long-forgotten epidemics. These dangers are indeed real. On the other hand, as Joy acknowledges, it is technological advances such as antibiotics and improved sanitation that have freed us from the prevalence of such epidemics. Suffering in the world continues and demands our close attention. Should we tell the millions of people afflicted with cancer and other devastating conditions that we are canceling the development of all bioengineered treatments because there is a risk that these same technologies may someday be used for malicious purposes? Having asked the rhetorical question, I realize that there are people who would like to do just that, but I think most people will agree that such broad-based relinquishment is not the answer.

The continuing ability to alleviate human suffering is one important reason for further technological progress. Equally compelling are the obvious economic benefits I discussed above, which will continue to accelerate in the decades ahead. The continued acceleration of many intertwined technologies amounts to roads paved with gold (I use the plural here because technology is clearly not a single path). In a competitive environment, following these roads is an economic imperative. Relinquishing technological progress would be economic suicide for individuals, companies, and nations.

This brings us to the issue of relinquishment, which is Bill Joy's most controversial recommendation and his personal commitment. I do feel that relinquishment at the right level is part of a responsible and constructive response to these genuine dangers. The issue, however, is exactly this: at what level should we relinquish technology?

Ted Kaczynski would have us relinquish all of it. This, in my view, is neither desirable nor feasible, and the futility of such a position is only underscored by the senselessness of Kaczynski's deplorable tactics.

Another approach would be to relinquish certain fields; nanotechnology, for example, might be regarded as too dangerous. But such broad-stroke relinquishment is equally untenable. Nanotechnology is simply the inevitable end result of the persistent trend toward miniaturization that pervades all of our technology. It is not a single centralized effort but the direction in which a myriad of projects with very diverse goals are developing.

One observer wrote:

“Another reason that industrial society cannot be reformed ... is that modern technology is a unified system in which all parts depend on one another. You cannot get rid of the ‘bad’ parts of technology and keep only the ‘good’ parts. Take, for example, modern medicine. Progress in medicine depends on progress in chemistry, physics, biology, computer science, and other fields. Advanced medical treatment requires expensive high-tech equipment that can be made available only by a technologically advanced, economically rich society. Clearly, without the whole technological system and everything that goes with it, there can be no great progress in medicine.”

The observer I am quoting is, again, Ted Kaczynski. Although one might properly resist Kaczynski as an authority, I believe he is correct about the deeply entangled nature of the benefits and risks. However, Kaczynski and I clearly part company in our overall assessment of the relative balance between the two. Bill Joy and I have discussed this issue both publicly and privately, and we both believe that technology will and should progress, and that we need to deal actively with its dark side. If Bill and I disagree, it is over which specific forms of relinquishment are both feasible and desirable.

Widespread relinquishment of technology would only drive it underground, where development would continue unconstrained by ethics and regulation. In such a situation, it would be the less stable, less responsible practitioners (for example, terrorists) who gained all the expertise.

I do believe that relinquishment at the right level needs to be part of our ethical response to the dangers of twenty-first century technologies. One constructive example is the ethical guideline proposed by the Foresight Institute, founded by nanotechnology pioneer Eric Drexler, that nanotechnologists agree to relinquish the development of physical entities that can self-replicate in a natural environment. Another is a ban on self-replicating physical entities that contain their own codes for self-replication. In what nanotechnologist Ralph Merkle calls the “Broadcast Architecture,” such entities would have to obtain these codes from a centralized secure server, which would guard against undesirable replication. The Broadcast Architecture is impossible in the biological world, so here we have at least one mechanism that can make nanotechnology safer than biotechnology. On the other hand, nanotechnology is potentially more dangerous, because nanobots can be physically stronger than protein-based entities and more intelligent. Ultimately, it will become possible to combine the two by using nanotechnology to provide the codes for biological entities (replacing DNA), in which case biological entities can use the much safer Broadcast Architecture.
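The paragraph describes the Broadcast Architecture only at the level of “replicators must obtain their codes from a central secure server.” As a hedged illustration of that idea (not Merkle's actual design; every class and method name here is hypothetical), the sketch keeps the replication instructions on a server that authenticates each reply and enforces a global cap on copies. A device that stores no instructions of its own cannot copy itself once the server stops answering. The shared HMAC key is a simplification chosen to keep the example short.

```python
import hashlib
import hmac
import secrets
from typing import Optional, Tuple


class BroadcastServer:
    """Hypothetical central server: the only place the replication code is stored."""

    def __init__(self, replication_code: bytes, max_copies: int):
        self._key = secrets.token_bytes(32)   # authentication key
        self._code = replication_code
        self._remaining = max_copies          # global cap on authorized replications

    def provision_key(self) -> bytes:
        # Simplification: a shared HMAC key handed out at manufacture. A real design
        # would more likely use public-key signatures so devices cannot forge replies.
        return self._key

    def request_code(self, device_id: str, nonce: bytes) -> Optional[Tuple[bytes, bytes]]:
        if self._remaining <= 0:
            return None                       # refuse: no further replication allowed
        self._remaining -= 1
        msg = device_id.encode() + nonce + self._code
        return self._code, hmac.new(self._key, msg, hashlib.sha256).digest()


class Replicator:
    """Hypothetical device that carries no replication code of its own."""

    def __init__(self, device_id: str, server: BroadcastServer):
        self.device_id = device_id
        self._server = server
        self._key = server.provision_key()

    def try_replicate(self) -> bool:
        nonce = secrets.token_bytes(16)       # fresh per request, so old replies cannot be replayed
        reply = self._server.request_code(self.device_id, nonce)
        if reply is None:
            return False
        code, tag = reply
        msg = self.device_id.encode() + nonce + code
        expected = hmac.new(self._key, msg, hashlib.sha256).digest()
        # Act only on code the server vouched for; the code is used once and not stored.
        return hmac.compare_digest(tag, expected)


if __name__ == "__main__":
    server = BroadcastServer(b"assembly instructions", max_copies=2)
    bot = Replicator("bot-1", server)
    print([bot.try_replicate() for _ in range(3)])
```

The safety property in this toy lives entirely on the server side: revoking or capping the server's answers halts replication everywhere, which is the contrast the paragraph draws with DNA, where every cell carries its own complete copy of the code.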

Our ethics as responsible technologists should include such narrowly targeted relinquishment, among other professional ethical guidelines. Other protective measures will need to include oversight by regulatory bodies, the development of technology-specific “immune” responses, and computer-assisted surveillance by law enforcement organizations. Many people do not know that our intelligence agencies already use advanced technologies, such as automated word spotting, to monitor a substantial flow of telephone conversations. As we move forward, balancing our inalienable right to privacy against our need to be protected from the malicious use of powerful twenty-first century technologies will be one of many serious challenges. This is one reason that issues such as encryption “back doors” (through which law enforcement would gain access to otherwise inaccessible information) and the FBI's Carnivore email surveillance system have been so controversial.

As an encouraging example, consider one recent technological challenge. There exists today a new form of fully non-biological self-replicating entity that did not exist just a few decades ago: the computer virus. When this form of destructive intruder first appeared, serious concerns were voiced that, as they became more sophisticated, software pathogens could destroy the very computer network medium in which they live. However, the “immune system” that arose in response to this challenge has proven to be very effective. Although destructive self-replicating software entities do cause damage from time to time, that damage is a small fraction of the benefit we receive from computers and the communication links that connect them. No one would suggest that we do away with computers, local area networks, and the Internet because of software viruses.

One might argue that computer viruses do not have the lethal potential of biological viruses or the destructive potential of nanotechnology. Although true, this only strengthens my observation. The fact that computer viruses are usually not fatal to humans only means that more people are willing to create and release them. It also means that our response to the danger is much less intense. Conversely, when it comes to self-replicating entities that are potentially lethal on a large scale, our response at all levels will be far more serious.

Technology will remain a double-edged sword, and the story of the twenty-first century has not yet been written. It represents an immense power that will be used for all of humankind's purposes. We have no choice but to work hard to apply these accelerating technologies to advance our human values, even though there often seems to be little consensus on what those values should be.

Living forever

Once the technology for porting a mind has been refined and fully developed, will it allow us to live forever? The answer depends on what we mean by living and dying. Consider what we do today with our personal computer files. When we switch from an old personal computer to a newer model, we do not throw all our files away; rather, we copy them over to the new hardware. Although our files do not necessarily last forever, the longevity of our personal computer software is completely separate from, and unrelated to, the hardware it runs on. When it comes to our personal mind file, however, the software of our lives dies when our human hardware fails. That will no longer be the case once we have the means to store and restore the thousands of trillions of bytes of information represented in the pattern that we call our brain.

Thus, the longevity of one's mind file will not depend on the continued viability of any particular hardware medium. Ultimately, software-based humans will live on the Web, even though they will extend far beyond the narrow limits of humans as we know them today. They will project bodies whenever they need or want them. These may be virtual bodies in diverse realms of virtual reality, holographically projected bodies, or physical bodies made of swarms of nanobots and other forms of nanotechnology.

A software-based human will therefore be free from the constraints of any particular thinking medium. Today each of us is limited to a mere hundred trillion connections, but humans at the end of the twenty-first century will be able to expand their thinking without limit. We can regard this as a form of immortality, although it is worth noting that data and information do not necessarily last forever. The longevity of information no longer depends on the viability of the hardware that processes it. It does, however, depend on the information's relevance, usefulness, and accessibility. If you have ever tried to retrieve information from an obsolete storage medium in an old, obscure format, you will understand the challenges of keeping software viable. A reel of magnetic tape from a 1970s minicomputer is a good example. If, however, we diligently maintain our mind files, keep current backups, and port them to current formats and media, then a form of immortality can be attained, at least for software-based humans. Today our mind file (our personality, skills, and memories) is lost when our biological hardware fails. Once we can access, store, and restore that information, its longevity will no longer depend on our hardware.
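The maintenance routine just described involves three steps: keep verified backups, move them to current media, and convert them to current formats. This is ordinary data-preservation practice. The minimal sketch below illustrates it on plain files, under several stated assumptions. A "mind file" is treated as just another file. All function names are hypothetical. The cp437 encoding stands in for "some obsolete format". A SHA-256 comparison is assumed to be an adequate test that a copy is intact. This is an analogy, not a description of how any real mind-file system would work.

```python
import hashlib
import shutil
import tempfile
from pathlib import Path


def sha256_of(path: Path) -> str:
    """Hash a file in 1 MiB chunks so large archives need not fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(1 << 20), b""):
            digest.update(chunk)
    return digest.hexdigest()


def migrate_to_new_medium(src: Path, dest_dir: Path) -> Path:
    """Hypothetical helper: copy src to a new location, keep it only if bit-identical."""
    dest_dir.mkdir(parents=True, exist_ok=True)
    dest = dest_dir / src.name
    shutil.copy2(src, dest)
    if sha256_of(dest) != sha256_of(src):
        dest.unlink()  # never keep a copy that failed verification
        raise OSError(f"checksum mismatch while copying {src}")
    return dest


def upgrade_text_format(src: Path, dest: Path, legacy_encoding: str = "cp437") -> Path:
    """Hypothetical helper: re-encode a legacy text file as UTF-8 and confirm nothing changed.

    cp437 is only an assumed stand-in for an obsolete format; a real archive
    would need a dedicated converter for each format it contains.
    """
    text = src.read_text(encoding=legacy_encoding)
    dest.write_text(text, encoding="utf-8")
    if dest.read_text(encoding="utf-8") != text:
        raise ValueError(f"content changed while converting {src}")
    return dest


def round_trip_demo() -> bool:
    """Write a small legacy file, move it to a new 'medium', then upgrade its format."""
    with tempfile.TemporaryDirectory() as tmp:
        root = Path(tmp)
        old = root / "old_medium" / "notes.txt"
        old.parent.mkdir()
        old.write_bytes("café 1970s".encode("cp437"))
        copied = migrate_to_new_medium(old, root / "new_medium")
        upgraded = upgrade_text_format(copied, root / "new_medium" / "notes.utf8.txt")
        return upgraded.read_text(encoding="utf-8") == "café 1970s"


if __name__ == "__main__":
    print("migration verified:", round_trip_demo())
```

The key design choice is that each copy is verified before the old medium is trusted to be redundant. A backup that has never been checked is the unreadable 1970s tape reel all over again.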

Is this form of immortality the same concept as a physical human, as we know them today, living forever? In one sense it is, because, as I pointed out earlier, our present selves are not a constant collection of matter either. Only our pattern of matter and energy persists, and even that gradually changes. Similarly, it is the pattern of a software human that will persist, develop, and gradually change.

But is that person based on my mind file, who migrates across many computational substrates and outlives any particular thinking medium, really me? We are back to the same questions of consciousness and identity that have been debated since Plato's dialogues. As we go through the twenty-first century, these will not remain topics for refined philosophical debate. We will have to confront them as vital practical, political, and legal issues.

A related question is: “Is death desirable?” A great deal of our effort goes into avoiding it. We make extraordinary efforts to delay it, and we often regard its arrival as an unexpected and tragic event. Yet we might find it hard to live without it. We see death as giving meaning to our lives, because it gives importance and value to time. Time could become meaningless if there were too much of it.

The next step in evolution and the purpose of life

Still, I regard freeing the human mind from its severe physical limitations of scope and duration as the necessary next step in evolution. In my view, evolution is the purpose of life. That is, the purpose of life in general, and of our own lives in particular, is to evolve. The Singularity, therefore, is not a grave danger to be avoided. In my view, this next paradigm shift is the goal of our civilization.

What does it mean to evolve? Evolution moves toward greater complexity, greater elegance, greater knowledge, higher intelligence, more beauty, and more creativity. It also moves toward more of other abstract and subtle attributes, such as love. We ascribe all of these qualities to God, only in infinite form: infinite knowledge, infinite intelligence, infinite beauty, infinite creativity, infinite love, and so on. Of course, even accelerating evolution will never reach an infinite level. Because it grows exponentially, however, it certainly moves rapidly in that direction. Evolution thus moves inexorably toward our conception of God, although it never quite reaches that ideal. Freeing our thinking from the severe limitations of its biological form can therefore be regarded as an essentially spiritual quest.
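The claim that exponential growth moves quickly toward infinity without ever reaching it can be checked with simple arithmetic. This is a hedged illustration: the function name and the "double at every step" growth model are assumptions made for the sketch and do not come from the essay. Under that model, a quantity passes any finite bound after finitely many steps, yet every value it takes along the way is finite. An infinite bound is never passed.

```python
import math


def doublings_to_exceed(bound: float, start: float = 1.0) -> int:
    """Smallest n such that start * 2**n exceeds bound.

    Hypothetical helper for illustration; "double at every step" is simply
    the most basic exponential growth model.
    """
    if start <= 0:
        raise ValueError("start must be positive, or doubling never grows it")
    if math.isinf(bound):
        # No finite number of doublings ever exceeds an infinite bound.
        raise ValueError("an exponential never passes an infinite bound")
    steps = 0
    value = start
    while value <= bound:
        value *= 2
        steps += 1
    return steps


if __name__ == "__main__":
    for bound in (1e9, 1e100, 1e300):
        n = doublings_to_exceed(bound)
        # 2**n already exceeds the bound, yet it is still an ordinary finite number.
        print(f"bound {bound:.0e}: {n} doublings; 2**n finite: {math.isfinite(2.0 ** n)}")
```

Raising the bound from 10^9 to 10^100 takes only about eleven times as many doublings (30 versus 333). This is the sense in which exponential growth covers enormous distances quickly while never arriving at infinity.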

In making this statement, I should emphasize that terms such as evolution, destiny, and spiritual quest describe the end result. They are not the basis for my predictions. I am not saying that technology will evolve to human levels and beyond simply because it is our destiny and the fulfillment of a spiritual quest. Rather, my predictions follow from a methodology based on the dynamics that underlie the (double) exponential growth of technological processes. The primary force driving technology is economic imperative. We are moving toward machines with human-level intelligence (and beyond) as the result of millions of small advances, each with its own economic justification.
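The phrase "(double) exponential growth" can be made concrete with a short derivation. The model form below and its constants ($E_0$, $r$, $D_0$, $c_1$, $c_2 > 0$) are assumptions chosen purely for illustration. They are not values fitted by the author. The derivation compares an ordinary exponential with a double exponential, whose growth rate itself grows exponentially:

```latex
\begin{aligned}
E(t) &= E_0\, e^{r t}, &
\frac{d}{dt}\ln E(t) &= r, &
T_{2}^{E} &= \frac{\ln 2}{r}, \\
D(t) &= D_0\, e^{c_1 e^{c_2 t}}, &
\frac{d}{dt}\ln D(t) &= c_1 c_2\, e^{c_2 t}, &
T_{2}^{D}(t) &\approx \frac{\ln 2}{c_1 c_2\, e^{c_2 t}}.
\end{aligned}
```

For the ordinary exponential, the doubling time $T_2$ is constant. For the double exponential, the instantaneous growth rate itself rises exponentially, so the doubling time keeps shrinking. The approximation sign reflects the fact that the rate changes during each doubling interval.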


Brett Rampata/Digital Organism

MaxWolf 2019


I “approached the apparatus” several times, but each time something held me back. One obstacle was the scale of the undertaking: 150 kilobytes of text is not an amount you can translate in one go. Another was that the original text had already been published in 2001. Almost twenty years have passed since then, which is a colossal span of time, especially in light of the ideas the author promotes. Yet the more time passed since my last attempt, the more obvious it became that this text ought to exist in Russian. The last straw was the “black spot” sent by the “corporation of good.” Google Translator Toolkit shuts down on December 4, 2019. For the past two years I had been translating this “Law...” in it sporadically, a paragraph or two at a time whenever I had a spare moment, so I had to hurry.

Some time ago I came across an interesting observation, from Sergei Khaprov if I remember correctly. It holds that Russian is the second ontologically complete language after English, and apparently the last one for the foreseeable future, largely owing, of course, to the particular way the Soviet project developed. An ontologically complete language is one in which knowledge from all areas of human activity is represented.

Alas, for both objective and subjective reasons, the fabric of this completeness has grown quite threadbare over the last thirty-odd years. Many gaping holes have appeared in it, especially in those areas of science that are developing faster than average. I felt this keenly myself when translating the section on the five parallel auditory pathways. “High-level” Russian terms exist for the brain structures described there. Beyond a certain level of detail, however (presumably the level uncovered only in the last few decades), there is a void, only slightly filled by very recent translations that often use inconsistent terminology. From time to time I have watched discussions flare up in various corners of the public sphere on a subject the participants themselves only partly understand, even though a great deal has been written about it in English since the mid-1990s. So I decided to make my modest contribution to public education by translating into Russian one of the foundational texts on the prospects for humanity's technological and humanitarian evolution over the next several decades.

Ray Kurzweil has written about a dozen books, and all of them deal with this subject in one way or another (unfortunately, only two have been translated into Russian). Each of them discusses or touches on the Law of Accelerating Returns, starting with The Age of Spiritual Machines, where it was first mentioned. The most detailed treatment is probably The Singularity Is Near, published in 2005. It is, however, almost an order of magnitude longer than this essay, which makes it too large for an amateur translation. The second of his books available in Russian, and the latest, is The Evolution of the Mind (the Russian title of How to Create a Mind). It devotes a separate chapter to the law of accelerating returns as applied to the brain. There you will find almost the same graphs as in this essay, updated with data from the years since it was written. Unsurprisingly, the trends the author noticed back in the 1980s still hold remarkably well. If you are interested in how reliable his forecasts have actually been (and you read English), Ray himself wrote a separate essay in 2010, “How My Predictions Are Faring.” In it he collects his main forecasts and examines in considerable detail how each of them has turned out (spoiler: almost all of them came true).

The topic itself is gradually making its way into the Russian-speaking public consciousness. It has energetic supporters, categorical opponents, and practitioners who are simply trying to make thoughtful use of the practical aspects of exponential growth. In any case, I think that everyone interested in the topic, whatever their personal attitude toward it, will benefit from reading one of the first works on accelerating development.

A final remark to close an already rather lengthy preface. When translating, I favored natural-sounding Russian over literal accuracy, I hope not to the detriment of the meaning. The Russian name for the “Law of Accelerating Returns” itself still grates on me a little. However, the author intended the title of his essay as an allusion to the economic law of diminishing returns, so I modeled it on the established Russian name for that phenomenon.