And if something exists, then on the Internet about it already exists ... an open project! See how the Open Data Hub helps scale new technologies and avoid the difficulties of implementing them.

For all the benefits of artificial intelligence (AI) and machine learning (ML), organizations often have difficulty scaling these technologies. The main problems with this, as a rule, are as follows:

- Information exchange and cooperation - it is practically impossible to exchange information without unnecessary efforts and to cooperate in the mode of fast iterations.

- Access to data - for each task it needs to be built anew and manually, which is time-consuming.

- On-demand access - there is no way to obtain on-demand access to machine learning tools and platform, as well as to computing infrastructure.

- Production - the models remain at the prototype stage and are not brought to industrial exploitation.

- Tracking and explaining AI results - reproducibility, tracking, and explaining AI / ML results are difficult.

Left unresolved, these problems adversely affect the speed, efficiency and productivity of valuable data processing and analysis specialists. This leads to their frustration, disappointment in work, and as a result, business expectations regarding AI / ML come to naught.

Responsibility for solving these problems lies with IT professionals who need to provide data analysts - right, something like a cloud. If more developed, then we need a platform that gives freedom of choice and has convenient, easy access. At the same time, it is fast, easily reconfigurable, scalable on demand and resistant to failures. Building such a platform on the basis of open source technologies helps to not become dependent on the vendor and maintain a long-term strategic advantage in terms of cost control.

A few years ago, something similar happened in application development and led to the emergence of microservices, hybrid cloud environments, IT automation and agile processes. To cope with all this, IT professionals began to use containers, Kubernetes and open hybrid clouds.

Now this experience is being applied to answer Al's challenges. Therefore, IT professionals create platforms that are based on containers, allow you to create AI / ML services as part of agile processes, accelerate innovation and are built with an eye on a hybrid cloud.

We will start building such a platform with Red Hat OpenShift, our container Kubernetes platform for a hybrid cloud that has a fast-growing ecosystem of software and hardware ML solutions (NVIDIA, H2O.ai, Starburst, PerceptiLabs, etc.). Some of Red Hat's customers, such as the BMW Group, ExxonMobil, and others, have already deployed containerized ML-tool chains and DevOps processes based on this platform and its ecosystem to bring their ML-architectures to commercial operation and speed up the work of data analysts.

Another reason why we launched the Open Data Hub project is to demonstrate an example of architecture based on several open source projects and show how to implement the entire life cycle of an ML solution based on the OpenShift platform.

Open Data Hub Project

This is an open source project that develops within the framework of the corresponding development community and implements a full cycle of operations - from loading and converting initial data to the formation, training and maintenance of the model - when solving AI / ML tasks using containers and Kubernetes on the OpenShift platform. This project can be considered as a reference implementation, an example of how to build an open AI / ML as a Service solution based on OpenShift and related open source tools such as Tensorflow, JupyterHub, Spark and others. It is important to note that Red Hat itself uses this project to provide its AI / ML services. In addition, OpenShift integrates with key software and hardware ML-solutions from NVIDIA, Seldon, Starbust and other vendors, which facilitates the construction and launch of their own machine learning systems.

The Open Data Hub project focuses on the following categories of users and use cases:

- A data analyst who needs a solution for implementing ML projects, organized by the type of cloud with self-service functions.

- A data analyst who needs the maximum selection from the wide variety of the latest open source AI / ML tools and platforms.

- A data analyst who needs access to data sources when training models.

- Data analyst who needs access to computing resources (CPU, GPU, memory).

- Date is an analyst who needs the opportunity to collaborate and share the results of work with colleagues, receive feedback and introduce improvements using the fast iteration method.

- A data analyst who wants to interact with developers (and devops teams) so that his ML models and work results go into production.

- A data engineer who needs to provide data analytics with access to a variety of data sources in compliance with safety standards and requirements.

- An administrator / operator of IT systems who needs the ability to easily control the life cycle (installation, configuration, updating) of open source components and technologies. We also need appropriate management and quota tools.

The Open Data Hub project combines a number of open source tools to implement a complete AI / ML operation. The Jupyter Notebook is used here as the main working tool for data analytics. This toolkit is now widely popular with data processing and analysis professionals, and the Open Data Hub allows them to easily create and manage Jupyter Notebook workspaces using the built-in JupyterHub. In addition to creating and importing notebooks Jupyter, the Open Data Hub project also contains a number of ready-made notebooks in the form of the AI Library.

This library is a collection of open-source machine learning components and sample scripting solutions that simplify rapid prototyping. JupyterHub is integrated with the OpenShift RBAC access model, which allows you to use existing OpenShift accounts and implement single sign-on. In addition, JupyterHub offers a convenient user interface called spawner, with which the user can easily configure the amount of computing resources (processor cores, memory, GPU) for the selected Jupyter Notebook.

After the data analyst creates and sets up the laptop, the Kubernetes scheduler, which is part of OpenShift, takes care of the rest. Users can only perform their experiments, save and share the results of their work. In addition, advanced users can directly access the OpenShift CLI shell directly from Jupyter notebooks to enable Kubernetes primitives, such as Job, or OpenShift functionality, such as Tekton or Knative. Or you can use the convenient OpenShift GUI called the “OpenShift Web Console” for this.

Going to the next step, the Open Data Hub gives you the ability to manage data pipelines. For this, a Ceph object is used, which is provided as an S3-compatible object data warehouse. Apache Spark streams data from external sources or the Ceph S3 built-in storage, and also allows you to perform preliminary data conversions. Apache Kafka provides advanced management of data pipelines (where you can perform multiple downloads, as well as operations of transformation, analysis and storage of data).

So, the data analyst got access to the data and built a model. Now he has a desire to share the results with colleagues or application developers, and to provide them with his model on the principles of service. To do this, you need an output server, and the Open Data Hub has such a server, it is called Seldon and allows you to publish the model as a RESTful service.

At some point, there are several such models on the Seldon server, and there is a need to monitor how they are used. To do this, the Open Data Hub offers a collection of relevant metrics and a report engine based on the widely used open source monitoring tools Prometheus and Grafana. As a result, we get feedback for monitoring the use of AI models, in particular in the production environment.

Thus, the Open Data Hub provides a cloud-like approach throughout the entire AI / ML operation cycle, from access and data preparation to training and industrial operation of the model.

Putting it all together

Now the question is how to organize this for the OpenShift administrator. And here comes the special Kubernetes operator for Open Data Hub projects.

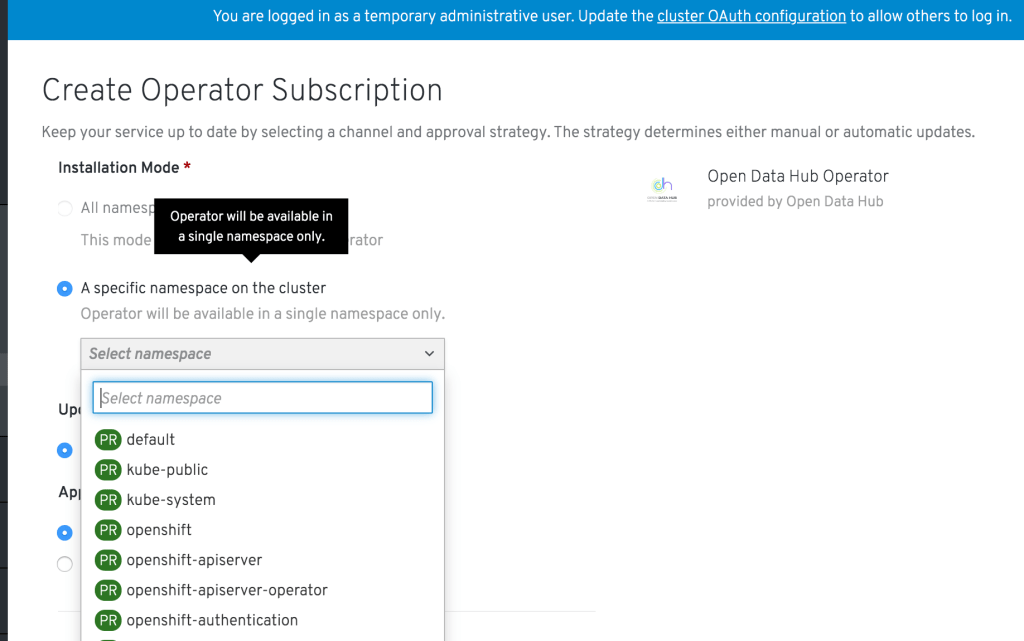

This operator manages the installation, configuration, and life cycle of the Open Data Hub project, including the deployment of such tools as JupyterHub, Ceph, Spark, Kafka, Seldon, Prometheus, and Grafana. The Open Data Hub project can be found in the OpenShift web console, in the community-operators section. Thus, the OpenShift administrator can specify that the corresponding OpenShift projects are categorized as the “Open Data Hub Project”. This is done once. After that, the data analyst through the OpenShift web console enters his project space and sees that the corresponding Kubernetes operator is installed and available for his projects. He then creates an instance of the Open Data Hub project with one click and immediately accesses the tools described above. And all this can be configured in high availability and fault tolerance mode.

If you want to try the Open Data Hub project with your own hands, start with the installation instructions and an introductory tutorial . Technical details of the Open Data Hub architecture can be found here ; project development plans are here . In the future, it is planned to implement additional integration with Kubeflow, solve a number of issues with data regulation and security, and organize integration with systems based on the Drools and Optaplanner rules. You can express your opinion and become a member of the Open Data Hub project on the community page.

We summarize: serious problems with scaling prevent organizations from fully realizing the potential of artificial intelligence and machine learning. Red Hat OpenShift has long been successfully used to solve similar problems in the software industry. The Open Data Hub project, implemented as part of the open source development community, offers a reference architecture for organizing a full AI / ML operation cycle based on the OpenShift hybrid cloud. We have a clear and thoughtful development plan for this project, and we are serious about creating an active and fruitful community for developing open AI solutions on the OpenShift platform around it.