We continue working with the Android Camera2 API.

In the previous article, we got the camera to take photos with the new API. Now let's record video. My original goal was to stream live video from an Android camera using Media Codec, but Media Recorder showed up first and wanted to show the esteemed audience how well it records video clips. So streaming will wait until next time; for now we will figure out how to plug Media Recorder into the new API. The resulting post is fairly basic, so it is aimed mainly at beginners and complete novices.

So Media Recorder

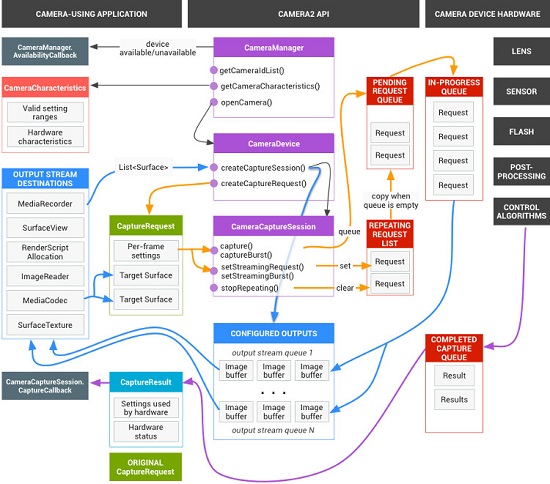

As the class name and the picture above suggest, Media Recorder takes a source of audio, video, or both and records it to a file in the desired and, most importantly, supported format.

In our case the task is simple: we take video and audio from the camera and microphone and write them to a file in MPEG_4 format. Some daredevils used to hand Media Recorder a network socket instead of a file in order to push video over the network, but fortunately those caveman days are behind us. We will do the same in the next article, but with the more civilized Media Codec.

As everyone remembers from the old Camera API of distant 2011, connecting MediaRecorder was not difficult back then. It is pleasant to note that it is no harder now. And let us not be scared by the full diagram of the camera.

We just need to give the camera the Media Recorder's Surface alongside the Surface on which the camera image is displayed, and the recorder does the rest itself. Audio is even more trivial: just set the required formats, and Media Recorder handles the sound on its own, without bothering us with all kinds of callbacks.

Remember how surprised our Japanese friend from the last post was:

"One of the reasons Camera2 is so perplexing is how many callbacks you need to take a single shot."

Here, on the contrary, it is surprising how few callbacks are needed to record a video file. Only two: CameraDevice.StateCallback and CameraCaptureSession.StateCallback.

And now we will write them

As a starting point, we take the code from the last article, throw out everything related to taking photos, and keep only the permission requests and camera initialization. We also keep only one camera, the front one.

    CameraService[] myCameras = null;
    private CameraManager mCameraManager = null;
    private final int CAMERA1 = 0;

    @Override
    protected void onCreate(Bundle savedInstanceState) {
        super.onCreate(savedInstanceState);
        setContentView(R.layout.activity_main);
        Log.d(LOG_TAG, "Requesting permissions");

        // Ask for camera, storage and microphone access if any of them is missing.
        if (checkSelfPermission(Manifest.permission.CAMERA) != PackageManager.PERMISSION_GRANTED
                || ContextCompat.checkSelfPermission(MainActivity.this, Manifest.permission.WRITE_EXTERNAL_STORAGE) != PackageManager.PERMISSION_GRANTED
                || ContextCompat.checkSelfPermission(MainActivity.this, Manifest.permission.RECORD_AUDIO) != PackageManager.PERMISSION_GRANTED) {
            requestPermissions(new String[]{
                    Manifest.permission.CAMERA,
                    Manifest.permission.WRITE_EXTERNAL_STORAGE,
                    Manifest.permission.RECORD_AUDIO}, 1);
        }

        mCameraManager = (CameraManager) getSystemService(Context.CAMERA_SERVICE);
        try {
            // Create one CameraService per camera reported by the device.
            myCameras = new CameraService[mCameraManager.getCameraIdList().length];
            for (String cameraID : mCameraManager.getCameraIdList()) {
                Log.i(LOG_TAG, "cameraID: " + cameraID);
                int id = Integer.parseInt(cameraID);
                myCameras[id] = new CameraService(mCameraManager, cameraID);
            }
        } catch (CameraAccessException e) {
            Log.e(LOG_TAG, e.getMessage());
            e.printStackTrace();
        }
    }

    public class CameraService {

        private String mCameraID;
        private CameraDevice mCameraDevice = null;
        private CameraCaptureSession mSession;
        private CaptureRequest.Builder mPreviewBuilder;

        public CameraService(CameraManager cameraManager, String cameraID) {
            mCameraManager = cameraManager;
            mCameraID = cameraID;
        }

        private CameraDevice.StateCallback mCameraCallback = new CameraDevice.StateCallback() {

            @Override
            public void onOpened(CameraDevice camera) {
                mCameraDevice = camera;
                Log.i(LOG_TAG, "Open camera with id:" + mCameraDevice.getId());
                // startCameraPreviewSession(); // re-enabled once Media Recorder is wired in
            }

            @Override
            public void onDisconnected(CameraDevice camera) {
                mCameraDevice.close();
                Log.i(LOG_TAG, "disconnect camera with id:" + mCameraDevice.getId());
                mCameraDevice = null;
            }

            @Override
            public void onError(CameraDevice camera, int error) {
                Log.e(LOG_TAG, "error! camera id:" + camera.getId() + " error:" + error);
            }
        };

        // The remaining methods appear in the full listing below.
    }

As we can see, RECORD_AUDIO has been added to the permissions. Without it, Media Recorder can only record bare video without sound, and if we set audio formats without the permission, it will not start at all. So we request permission to record sound along with the rest, remembering, of course, that real code should not do such things this way on the main thread; it is only acceptable in a demo.
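In a real app, the recorder would normally be set up only after the user has actually granted everything, which Android reports through `onRequestPermissionsResult(int requestCode, String[] permissions, int[] grantResults)`. Below is a minimal sketch of the check such a callback might run. The class `PermissionCheck` and the method `allGranted` are hypothetical names, not part of the article's code, and the two constants are copied from `PackageManager` only so that the snippet runs on a plain JVM.

```java
import java.util.Arrays;

/** Hypothetical helper: decides whether every requested permission was granted. */
class PermissionCheck {

    // Same numeric values as PackageManager.PERMISSION_GRANTED / PERMISSION_DENIED.
    static final int PERMISSION_GRANTED = 0;
    static final int PERMISSION_DENIED = -1;

    /** Returns true only if the array is non-empty and every entry is a grant. */
    static boolean allGranted(int[] grantResults) {
        if (grantResults == null || grantResults.length == 0) {
            return false; // an empty result means the request was cancelled
        }
        return Arrays.stream(grantResults).allMatch(r -> r == PERMISSION_GRANTED);
    }

    public static void main(String[] args) {
        System.out.println(allGranted(new int[]{0, 0, 0}));  // prints true
        System.out.println(allGranted(new int[]{0, -1, 0})); // prints false
    }
}
```

Inside the Activity, the callback would call `setUpMediaRecorder()` only when a check like this returns true for the request code passed to `requestPermissions` (1 in the demo).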

Next, we initialize the Media Recorder itself in a separate method:

    private void setUpMediaRecorder() {
        mMediaRecorder = new MediaRecorder();

        // Sources first, then the container format.
        mMediaRecorder.setAudioSource(MediaRecorder.AudioSource.MIC);
        mMediaRecorder.setVideoSource(MediaRecorder.VideoSource.SURFACE);
        mMediaRecorder.setOutputFormat(MediaRecorder.OutputFormat.MPEG_4);

        // Output file: DCIM/test<count>.mp4
        mCurrentFile = new File(
                Environment.getExternalStoragePublicDirectory(Environment.DIRECTORY_DCIM),
                "test" + count + ".mp4");
        mMediaRecorder.setOutputFile(mCurrentFile.getAbsolutePath());

        // Take frame rate, size and bit rates from the 480p camcorder profile.
        CamcorderProfile profile = CamcorderProfile.get(CamcorderProfile.QUALITY_480P);
        mMediaRecorder.setVideoFrameRate(profile.videoFrameRate);
        mMediaRecorder.setVideoSize(profile.videoFrameWidth, profile.videoFrameHeight);
        mMediaRecorder.setVideoEncodingBitRate(profile.videoBitRate);
        mMediaRecorder.setVideoEncoder(MediaRecorder.VideoEncoder.H264);
        mMediaRecorder.setAudioEncoder(MediaRecorder.AudioEncoder.AAC);
        mMediaRecorder.setAudioEncodingBitRate(profile.audioBitRate);
        mMediaRecorder.setAudioSamplingRate(profile.audioSampleRate);

        try {
            mMediaRecorder.prepare();
            Log.i(LOG_TAG, "MediaRecorder prepared");
        } catch (Exception e) {
            Log.e(LOG_TAG, "MediaRecorder prepare failed", e);
        }
    }

Here, too, everything is clear and understandable and no explanation is required.
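One detail worth noting in the method above: the file name is built from `count`, and `count` starts at 1 every time the Activity is created, so a second launch would reuse the name `test1.mp4`. A minimal sketch of one way around that, assuming we simply skip names that already exist, is shown here. `OutputFileNamer` and `nextFreeFile` are hypothetical names introduced for illustration; they are not part of the article's code.

```java
import java.io.File;

/** Hypothetical helper that picks the first unused "test<N>.mp4" name in a directory. */
class OutputFileNamer {

    /** Starts at startCount and increments until the candidate file does not exist. */
    static File nextFreeFile(File dir, int startCount) {
        int n = startCount;
        File candidate = new File(dir, "test" + n + ".mp4");
        while (candidate.exists()) {
            n++;
            candidate = new File(dir, "test" + n + ".mp4");
        }
        return candidate;
    }

    public static void main(String[] args) {
        // On a desktop JVM we use the temp directory in place of DCIM.
        File dir = new File(System.getProperty("java.io.tmpdir"));
        System.out.println(nextFreeFile(dir, 1).getAbsolutePath());
    }
}
```

In the demo, `mCurrentFile` could be obtained from such a helper, passing the DCIM directory and `count`.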

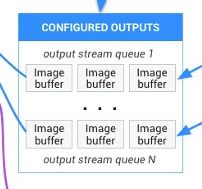

Next comes the most crucial stage: adding the Media Recorder's Surface to the capture session. In the last post, we displayed the camera image on a Surface and grabbed a frame from it using Image Reader. To do this, we simply put both components in the Surface list:

    Arrays.asList(surface, mImageReader.getSurface())

Here it is the same thing, only instead of ImageReader we specify:

    Arrays.asList(surface, mMediaRecorder.getSurface())

In general, you can list anything there, separated by commas: all the components you use, even Media Codec. That is, in one window you can take photos, record video, and stream it. Surface is kind and allows it. Can all of this really run at the same time? That I cannot tell you. In theory, judging by the camera diagram, it can.

It should simply fan out into separate output streams. So there is room for experiments.

But back to Media Recorder

We are almost done. Unlike taking photos, we need no additional capture requests and no analogue of ImageSaver: our hard-working recorder does everything itself. And that is nice.

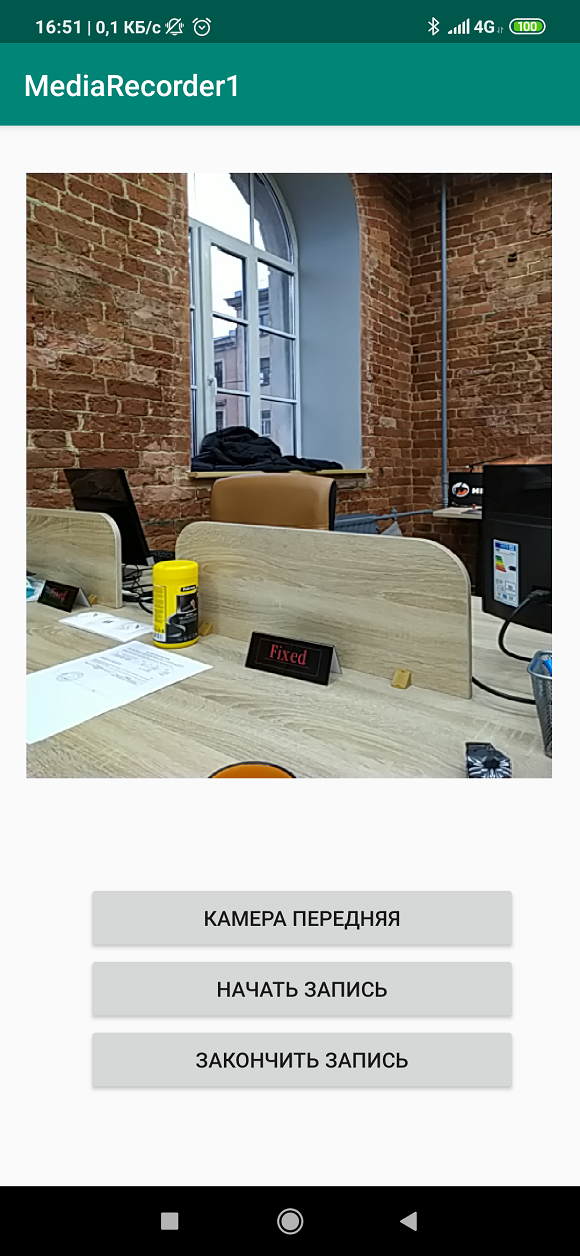

As a result, the program takes on a completely minimalist look.

    package com.example.mediarecorder1;

    import androidx.appcompat.app.AppCompatActivity;
    import androidx.core.content.ContextCompat;

    import android.Manifest;
    import android.content.Context;
    import android.content.pm.PackageManager;
    import android.graphics.SurfaceTexture;
    import android.hardware.camera2.CameraAccessException;
    import android.hardware.camera2.CameraCaptureSession;
    import android.hardware.camera2.CameraDevice;
    import android.hardware.camera2.CameraManager;
    import android.hardware.camera2.CaptureRequest;
    import android.media.CamcorderProfile;
    import android.media.MediaRecorder;
    import android.os.Bundle;
    import android.os.Environment;
    import android.os.Handler;
    import android.os.HandlerThread;
    import android.util.Log;
    import android.view.Surface;
    import android.view.TextureView;
    import android.view.View;
    import android.widget.Button;

    import java.io.File;
    import java.util.Arrays;

    public class MainActivity extends AppCompatActivity {

        public static final String LOG_TAG = "myLogs";

        CameraService[] myCameras = null;
        private CameraManager mCameraManager = null;
        private final int CAMERA1 = 0;
        private int count = 1;

        private Button mButtonOpenCamera1 = null;
        private Button mButtonRecordVideo = null;
        private Button mButtonStopRecordVideo = null;
        public static TextureView mImageView = null;

        private HandlerThread mBackgroundThread;
        private Handler mBackgroundHandler = null;

        private File mCurrentFile;
        private MediaRecorder mMediaRecorder = null;

        private void startBackgroundThread() {
            mBackgroundThread = new HandlerThread("CameraBackground");
            mBackgroundThread.start();
            mBackgroundHandler = new Handler(mBackgroundThread.getLooper());
        }

        private void stopBackgroundThread() {
            mBackgroundThread.quitSafely();
            try {
                mBackgroundThread.join();
                mBackgroundThread = null;
                mBackgroundHandler = null;
            } catch (InterruptedException e) {
                e.printStackTrace();
            }
        }

        @Override
        protected void onCreate(Bundle savedInstanceState) {
            super.onCreate(savedInstanceState);
            setContentView(R.layout.activity_main);
            Log.d(LOG_TAG, "Requesting permissions");

            // Ask for camera, storage and microphone access if any of them is missing.
            if (checkSelfPermission(Manifest.permission.CAMERA) != PackageManager.PERMISSION_GRANTED
                    || ContextCompat.checkSelfPermission(MainActivity.this, Manifest.permission.WRITE_EXTERNAL_STORAGE) != PackageManager.PERMISSION_GRANTED
                    || ContextCompat.checkSelfPermission(MainActivity.this, Manifest.permission.RECORD_AUDIO) != PackageManager.PERMISSION_GRANTED) {
                requestPermissions(new String[]{
                        Manifest.permission.CAMERA,
                        Manifest.permission.WRITE_EXTERNAL_STORAGE,
                        Manifest.permission.RECORD_AUDIO}, 1);
            }

            mButtonOpenCamera1 = findViewById(R.id.button1);
            mButtonRecordVideo = findViewById(R.id.button2);
            mButtonStopRecordVideo = findViewById(R.id.button3);
            mImageView = findViewById(R.id.textureView);

            mButtonOpenCamera1.setOnClickListener(new View.OnClickListener() {
                @Override
                public void onClick(View v) {
                    if (myCameras[CAMERA1] != null) {
                        if (!myCameras[CAMERA1].isOpen()) myCameras[CAMERA1].openCamera();
                    }
                }
            });

            mButtonRecordVideo.setOnClickListener(new View.OnClickListener() {
                @Override
                public void onClick(View v) {
                    if ((myCameras[CAMERA1] != null) && (mMediaRecorder != null)) {
                        mMediaRecorder.start();
                    }
                }
            });

            mButtonStopRecordVideo.setOnClickListener(new View.OnClickListener() {
                @Override
                public void onClick(View v) {
                    if ((myCameras[CAMERA1] != null) && (mMediaRecorder != null)) {
                        myCameras[CAMERA1].stopRecordingVideo();
                    }
                }
            });

            mCameraManager = (CameraManager) getSystemService(Context.CAMERA_SERVICE);
            try {
                // Create one CameraService per camera reported by the device.
                myCameras = new CameraService[mCameraManager.getCameraIdList().length];
                for (String cameraID : mCameraManager.getCameraIdList()) {
                    Log.i(LOG_TAG, "cameraID: " + cameraID);
                    int id = Integer.parseInt(cameraID);
                    myCameras[id] = new CameraService(mCameraManager, cameraID);
                }
            } catch (CameraAccessException e) {
                Log.e(LOG_TAG, e.getMessage());
                e.printStackTrace();
            }

            setUpMediaRecorder();
        }

        private void setUpMediaRecorder() {
            mMediaRecorder = new MediaRecorder();

            // Sources first, then the container format.
            mMediaRecorder.setAudioSource(MediaRecorder.AudioSource.MIC);
            mMediaRecorder.setVideoSource(MediaRecorder.VideoSource.SURFACE);
            mMediaRecorder.setOutputFormat(MediaRecorder.OutputFormat.MPEG_4);

            // Output file: DCIM/test<count>.mp4
            mCurrentFile = new File(
                    Environment.getExternalStoragePublicDirectory(Environment.DIRECTORY_DCIM),
                    "test" + count + ".mp4");
            mMediaRecorder.setOutputFile(mCurrentFile.getAbsolutePath());

            // Take frame rate, size and bit rates from the 480p camcorder profile.
            CamcorderProfile profile = CamcorderProfile.get(CamcorderProfile.QUALITY_480P);
            mMediaRecorder.setVideoFrameRate(profile.videoFrameRate);
            mMediaRecorder.setVideoSize(profile.videoFrameWidth, profile.videoFrameHeight);
            mMediaRecorder.setVideoEncodingBitRate(profile.videoBitRate);
            mMediaRecorder.setVideoEncoder(MediaRecorder.VideoEncoder.H264);
            mMediaRecorder.setAudioEncoder(MediaRecorder.AudioEncoder.AAC);
            mMediaRecorder.setAudioEncodingBitRate(profile.audioBitRate);
            mMediaRecorder.setAudioSamplingRate(profile.audioSampleRate);

            try {
                mMediaRecorder.prepare();
                Log.i(LOG_TAG, "MediaRecorder prepared");
            } catch (Exception e) {
                Log.e(LOG_TAG, "MediaRecorder prepare failed", e);
            }
        }

        public class CameraService {

            private String mCameraID;
            private CameraDevice mCameraDevice = null;
            private CameraCaptureSession mSession;
            private CaptureRequest.Builder mPreviewBuilder;

            public CameraService(CameraManager cameraManager, String cameraID) {
                mCameraManager = cameraManager;
                mCameraID = cameraID;
            }

            // Callback 1 of 2: camera device state.
            private CameraDevice.StateCallback mCameraCallback = new CameraDevice.StateCallback() {

                @Override
                public void onOpened(CameraDevice camera) {
                    mCameraDevice = camera;
                    Log.i(LOG_TAG, "Open camera with id:" + mCameraDevice.getId());
                    startCameraPreviewSession();
                }

                @Override
                public void onDisconnected(CameraDevice camera) {
                    mCameraDevice.close();
                    Log.i(LOG_TAG, "disconnect camera with id:" + mCameraDevice.getId());
                    mCameraDevice = null;
                }

                @Override
                public void onError(CameraDevice camera, int error) {
                    Log.e(LOG_TAG, "error! camera id:" + camera.getId() + " error:" + error);
                }
            };

            private void startCameraPreviewSession() {
                SurfaceTexture texture = mImageView.getSurfaceTexture();
                texture.setDefaultBufferSize(640, 480);
                Surface surface = new Surface(texture);

                try {
                    mPreviewBuilder = mCameraDevice.createCaptureRequest(CameraDevice.TEMPLATE_RECORD);

                    // Target 1: the preview Surface.
                    mPreviewBuilder.addTarget(surface);

                    // Target 2: the Surface provided by MediaRecorder.
                    Surface recorderSurface = mMediaRecorder.getSurface();
                    mPreviewBuilder.addTarget(recorderSurface);

                    // Callback 2 of 2: capture session state.
                    mCameraDevice.createCaptureSession(Arrays.asList(surface, recorderSurface),
                            new CameraCaptureSession.StateCallback() {

                                @Override
                                public void onConfigured(CameraCaptureSession session) {
                                    mSession = session;
                                    try {
                                        mSession.setRepeatingRequest(mPreviewBuilder.build(), null, mBackgroundHandler);
                                    } catch (CameraAccessException e) {
                                        e.printStackTrace();
                                    }
                                }

                                @Override
                                public void onConfigureFailed(CameraCaptureSession session) {
                                    Log.e(LOG_TAG, "capture session configuration failed");
                                }
                            }, mBackgroundHandler);
                } catch (CameraAccessException e) {
                    e.printStackTrace();
                }
            }

            public void stopRecordingVideo() {
                try {
                    mSession.stopRepeating();
                    mSession.abortCaptures();
                    mSession.close();
                } catch (CameraAccessException e) {
                    e.printStackTrace();
                }

                mMediaRecorder.stop();
                mMediaRecorder.release();

                // Prepare a fresh recorder and file for the next clip, then resume the preview.
                count++;
                setUpMediaRecorder();
                startCameraPreviewSession();
            }

            public boolean isOpen() {
                return mCameraDevice != null;
            }

            public void openCamera() {
                try {
                    if (checkSelfPermission(Manifest.permission.CAMERA) == PackageManager.PERMISSION_GRANTED) {
                        mCameraManager.openCamera(mCameraID, mCameraCallback, mBackgroundHandler);
                    }
                } catch (CameraAccessException e) {
                    Log.e(LOG_TAG, e.getMessage());
                }
            }
        }

        @Override
        public void onPause() {
            stopBackgroundThread();
            super.onPause();
        }

        @Override
        public void onResume() {
            super.onResume();
            startBackgroundThread();
        }
    }

Add the layout to it:

    <?xml version="1.0" encoding="utf-8"?>
    <androidx.constraintlayout.widget.ConstraintLayout
        xmlns:android="http://schemas.android.com/apk/res/android"
        xmlns:app="http://schemas.android.com/apk/res-auto"
        xmlns:tools="http://schemas.android.com/tools"
        android:layout_width="match_parent"
        android:layout_height="match_parent"
        tools:context=".MainActivity">

        <TextureView
            android:id="@+id/textureView"
            android:layout_width="356dp"
            android:layout_height="410dp"
            android:layout_marginTop="32dp"
            app:layout_constraintEnd_toEndOf="parent"
            app:layout_constraintHorizontal_bias="0.49"
            app:layout_constraintStart_toStartOf="parent"
            app:layout_constraintTop_toTopOf="parent" />

        <LinearLayout
            android:layout_width="292dp"
            android:layout_height="145dp"
            android:layout_marginStart="16dp"
            android:orientation="vertical"
            app:layout_constraintBottom_toBottomOf="parent"
            app:layout_constraintEnd_toEndOf="parent"
            app:layout_constraintStart_toStartOf="parent"
            app:layout_constraintTop_toBottomOf="@+id/textureView"
            app:layout_constraintVertical_bias="0.537">

            <Button
                android:id="@+id/button1"
                android:layout_width="match_parent"
                android:layout_height="wrap_content"
                android:text="Open camera" />

            <Button
                android:id="@+id/button2"
                android:layout_width="match_parent"
                android:layout_height="wrap_content"
                android:text="Record video" />

            <Button
                android:id="@+id/button3"
                android:layout_width="match_parent"
                android:layout_height="wrap_content"
                android:text="Stop recording" />

        </LinearLayout>

    </androidx.constraintlayout.widget.ConstraintLayout>

And a small addition to the manifest:

    <uses-permission android:name="android.permission.RECORD_AUDIO"/>

Everything works and files are written successfully.

The only catch is that there is no foolproofing, so if you poke the on-screen buttons in random order, you can break everything.
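For example, pressing the stop button before the record button calls `MediaRecorder.stop()` on a recorder that was never started, which throws `IllegalStateException`. A minimal sketch of a guard against this, assuming a tiny state machine with three states, could look like the following. `RecorderGuard` and its method names are hypothetical and not part of the demo; the class is plain Java, so it can be tried outside Android.

```java
/** Hypothetical guard that only lets the three buttons act in a valid order. */
class RecorderGuard {

    enum State { CLOSED, PREVIEW, RECORDING }

    private State state = State.CLOSED;

    /** "Open camera" is accepted only while the camera is still closed. */
    boolean onOpenCamera() {
        if (state != State.CLOSED) return false;
        state = State.PREVIEW;
        return true;
    }

    /** "Record" is accepted only while the preview is running. */
    boolean onStartRecording() {
        if (state != State.PREVIEW) return false;
        state = State.RECORDING;
        return true;
    }

    /** "Stop" is accepted only while recording; afterwards we are back in preview. */
    boolean onStopRecording() {
        if (state != State.RECORDING) return false;
        state = State.PREVIEW;
        return true;
    }

    State state() {
        return state;
    }
}
```

Each click handler would first call the matching method and touch the camera or `mMediaRecorder` only when it returns true; any out-of-order press is then silently ignored.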