Backup, Part 6: Comparing Backup Tools

This article compares backup tools, but first we need to find out how quickly and how well they restore data from backups.

For ease of comparison, only recovery from a full backup is considered, especially since all candidates support this mode of operation. For simplicity, the numbers are already averaged (the arithmetic mean of several runs). The results are summarized in a table, which also contains information about features: the presence of a web interface, ease of setup and operation, the ability to automate, various additional features (for example, data integrity checking), and so on. The graphs show the load on the server where the data will be used (not the server that stores the backups).
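
The averaging mentioned above can be sketched as follows. This is a minimal illustration, not the harness actually used for these measurements: the helper name `mean_runtime` and the number of runs are assumptions, and the command to time is supplied by the caller.

```python
import statistics
import subprocess
import sys
import time


def mean_runtime(cmd, runs=3):
    """Run cmd several times; return the arithmetic mean of wall-clock seconds."""
    durations = []
    for _ in range(runs):
        start = time.perf_counter()
        # check=True raises CalledProcessError on failure, so a broken
        # restore never contributes a misleadingly short timing.
        subprocess.run(cmd, check=True)
        durations.append(time.perf_counter() - start)
    return statistics.mean(durations)


# Placeholder command that does nothing; substitute the real restore call.
placeholder = [sys.executable, "-c", "pass"]
print(f"mean over 3 runs: {mean_runtime(placeholder):.3f} s")
```

For a fair comparison each run should start from the same state (empty target directory, cold caches), which this sketch leaves to the caller.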

Data recovery

Rsync and tar are used as the reference point, since the simplest backup scripts are usually based on them.

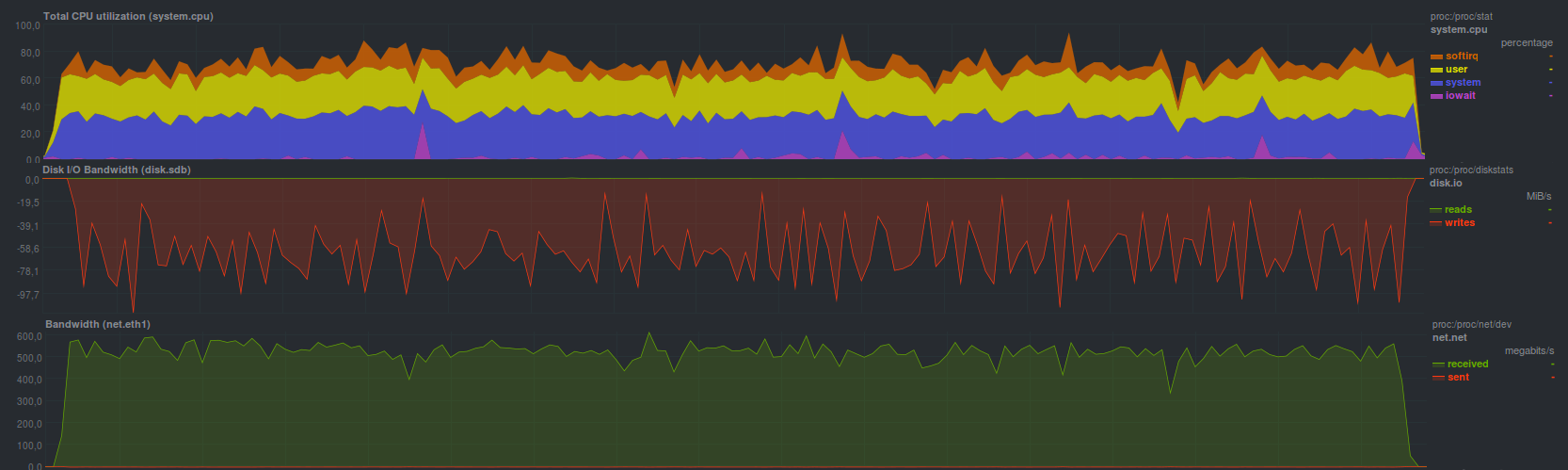

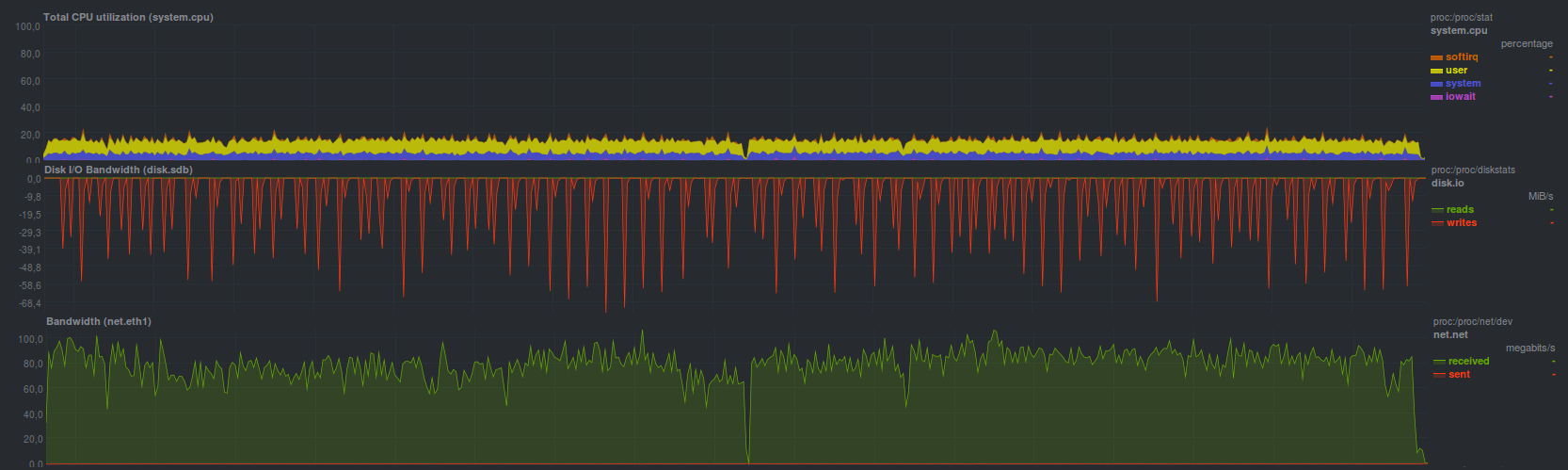

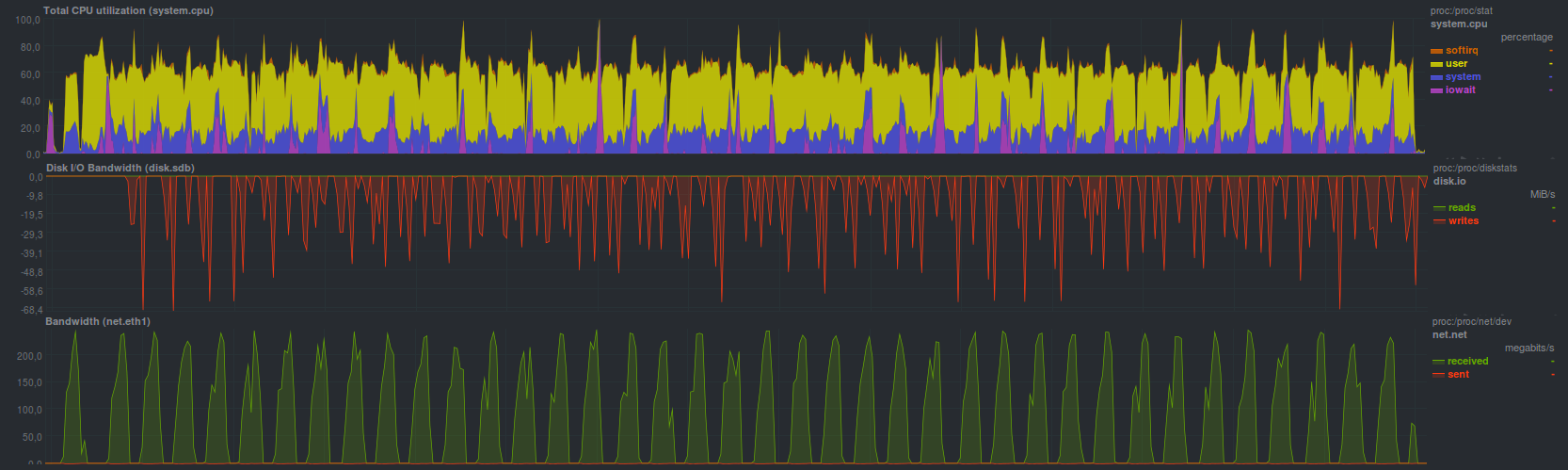

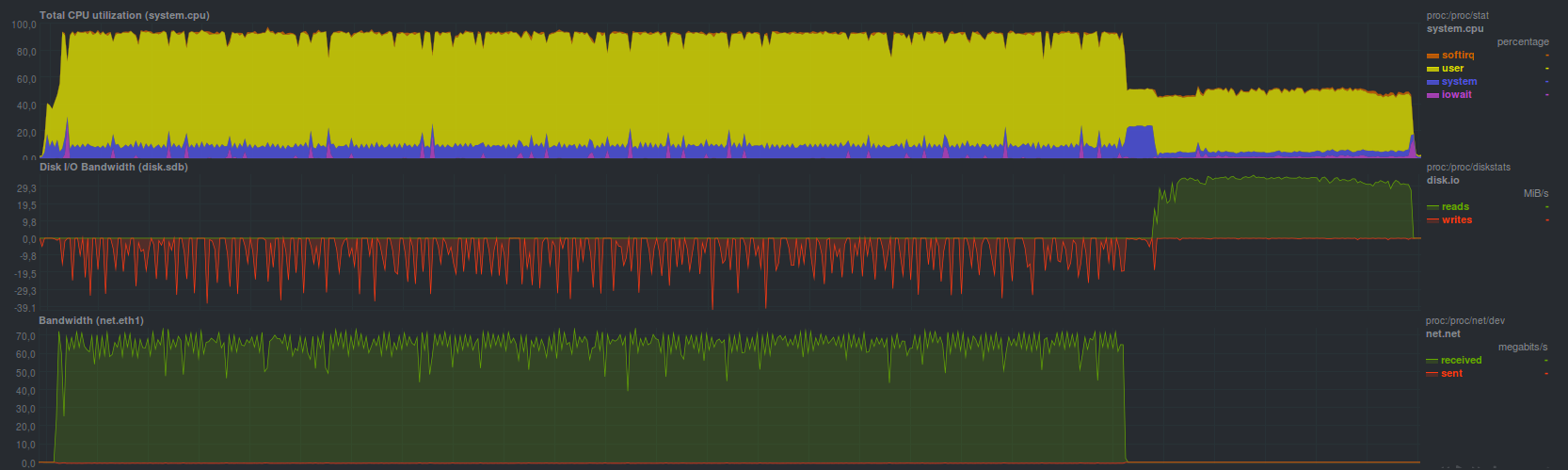

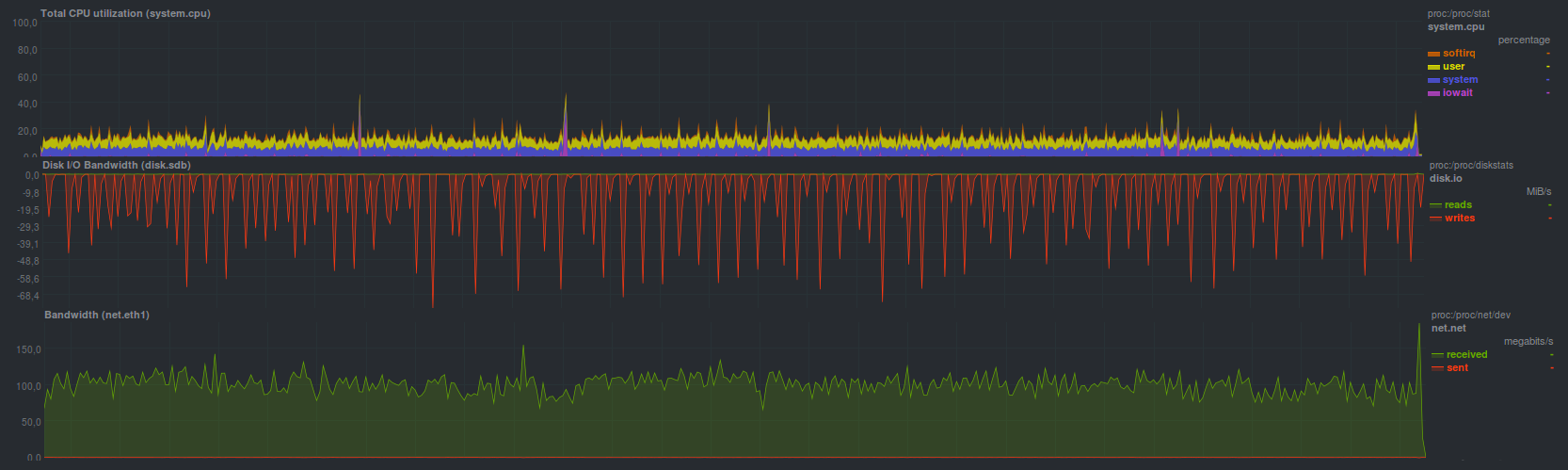

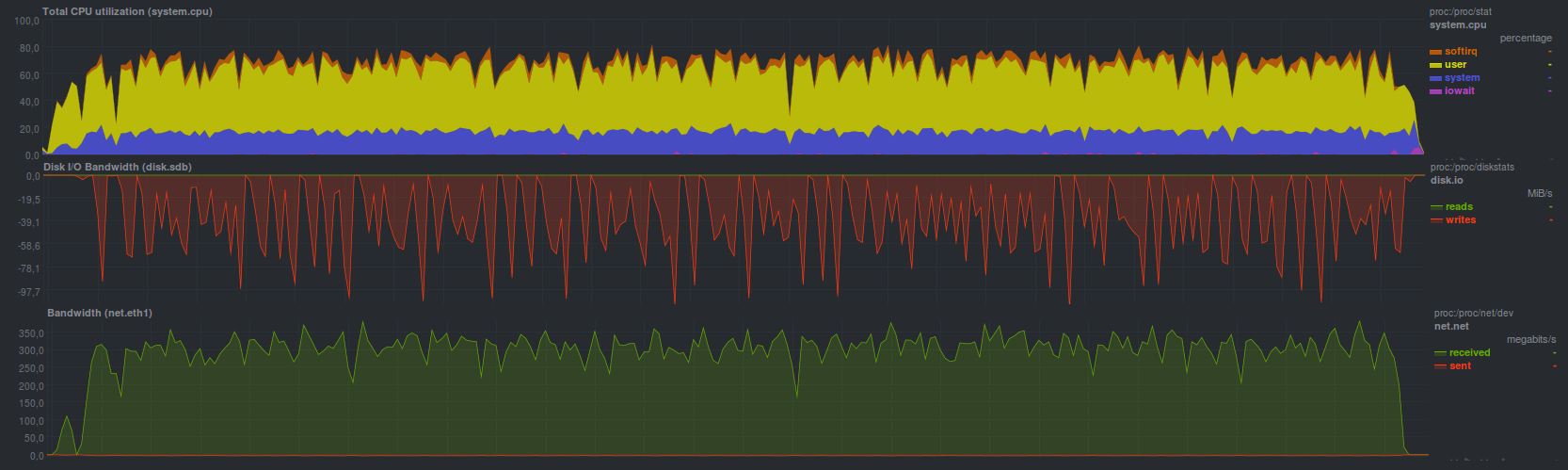

Rsync restored the test dataset in 4 minutes and 28 seconds, showing the following load:

The recovery process ran into a limitation of the disk subsystem of the backup storage server (the sawtooth graphs). You can also clearly see one core being loaded, with no other problems (low iowait and softirq mean there are no problems with the disk and the network, respectively). Since the other two programs, rdiff-backup and rsnapshot, are based on rsync and also offer regular rsync as a recovery tool, they will have approximately the same load profile and recovery time.

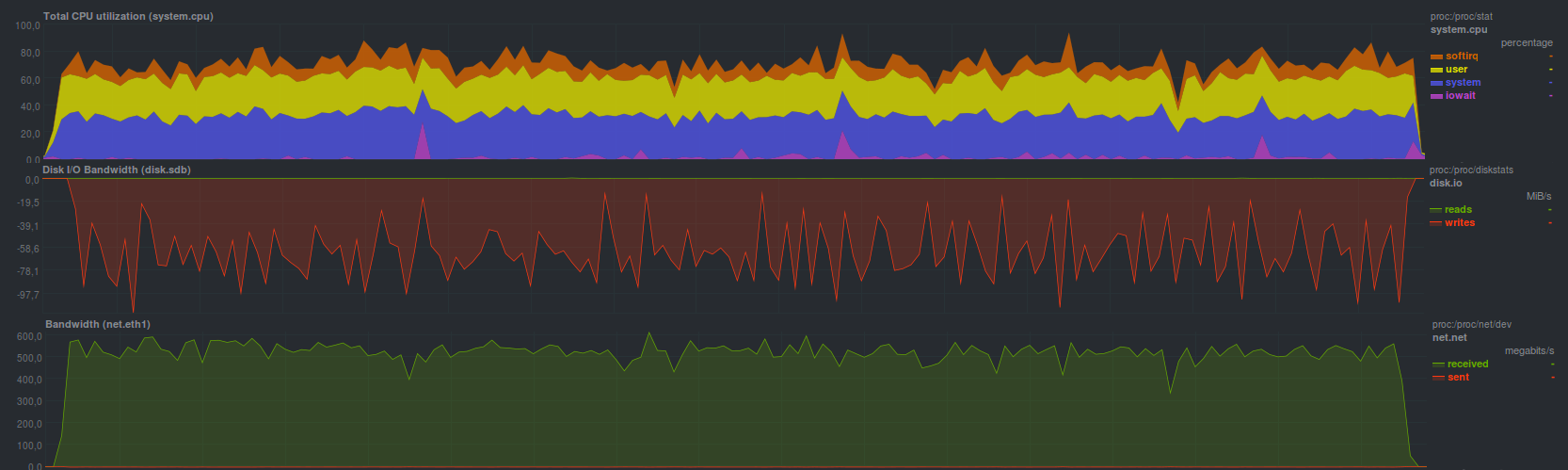

Tar was somewhat faster, finishing in 2 minutes and 43 seconds:

Total system load was on average 20% higher because of increased softirq, that is, higher overhead in the network subsystem.

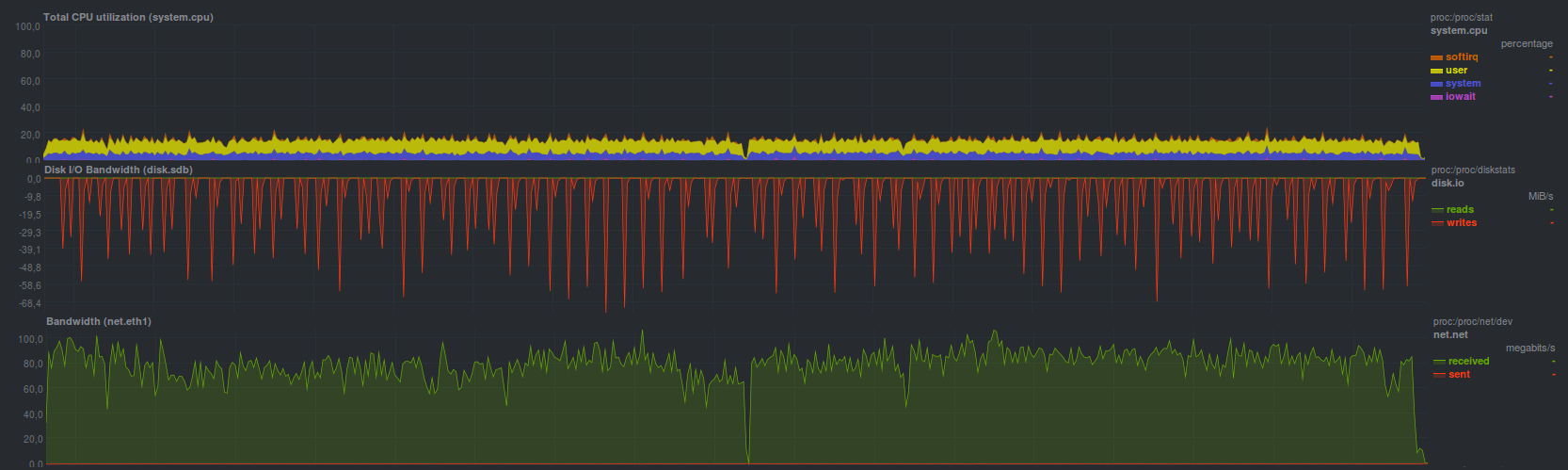

If the archive is additionally compressed, the recovery time increases to 3 minutes 19 seconds, with the following load on the main server (unpacking on the main server side):

The unpacking occupies both processor cores, since two processes are running. This is the expected result. A comparable result (3 minutes and 20 seconds) was obtained when running gzip on the backup server side; the load profile on the main server was then very similar to running tar without the gzip compressor (see the previous graph).

With rdiff-backup, you can synchronize the most recent backup using regular rsync (the results will be similar), but older backups still have to be restored with the rdiff-backup program itself, which completed the restore in 17 minutes and 17 seconds, showing the following load:

Perhaps this is by design; in any case, the authors propose such a solution to limit the speed. The restore process takes a little less than half of one core, with proportionally comparable disk and network performance relative to rsync (that is, 2-5 times lower).

Rsnapshot suggests using regular rsync for recovery, so its results should be similar. In general, that is how it turned out.

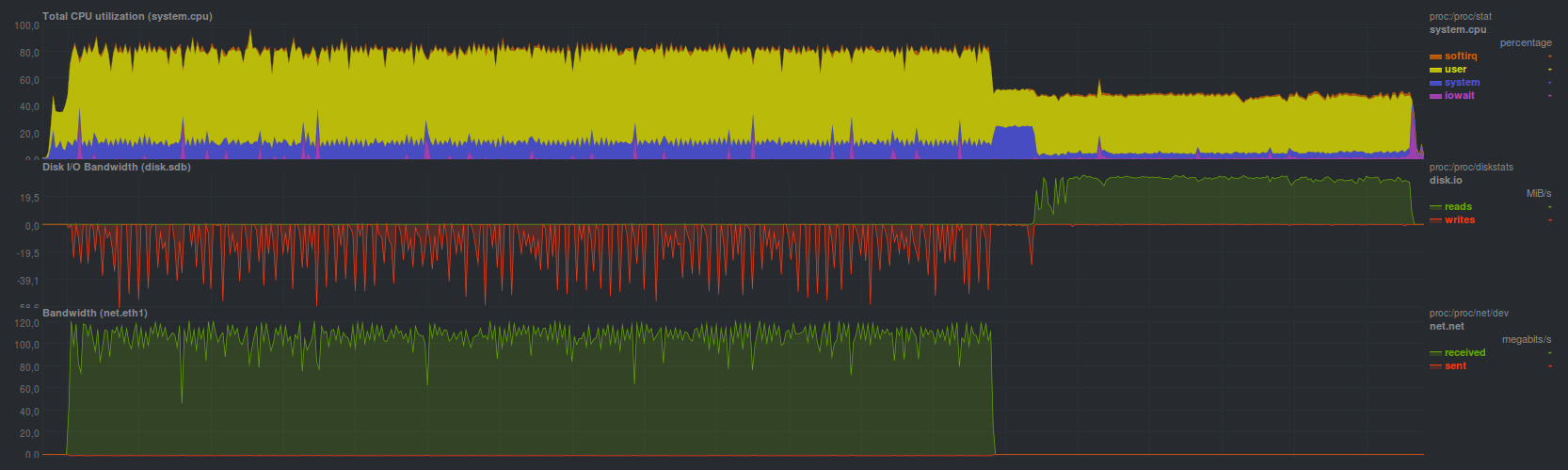

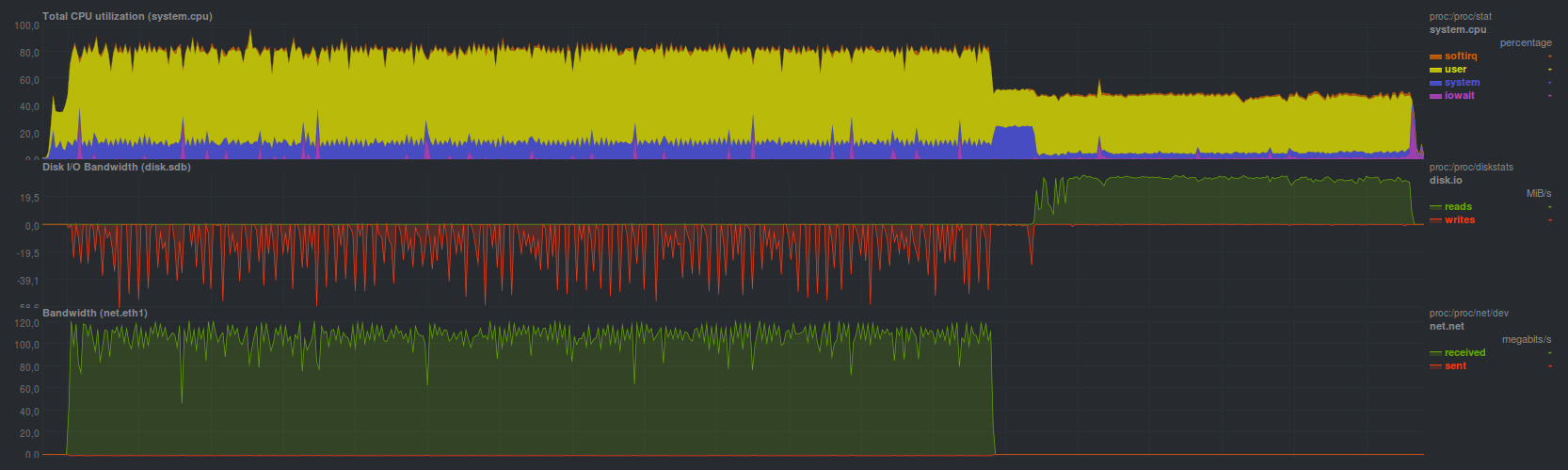

Burp restored the backup in 7 minutes and 2 seconds, with the following load:

It worked fast enough and, at the very least, much more conveniently than plain rsync: there are no flags to remember, the CLI is simple and intuitive, and multiple copies are supported out of the box, although it is two times slower. If you need to restore data from the most recent backup, you can use rsync, with a few caveats.

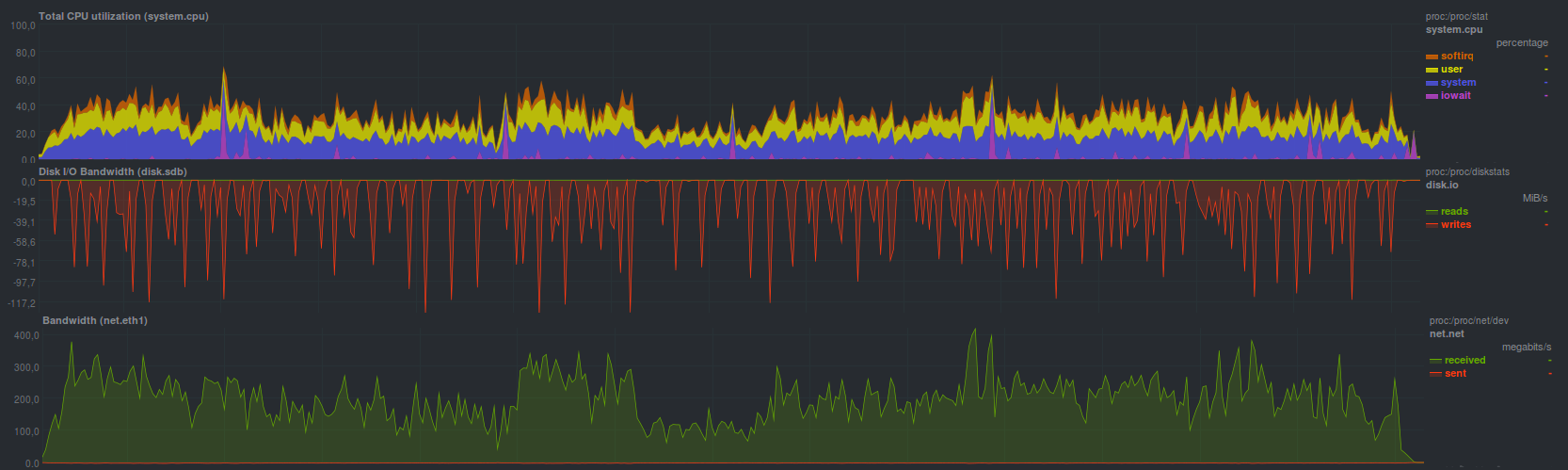

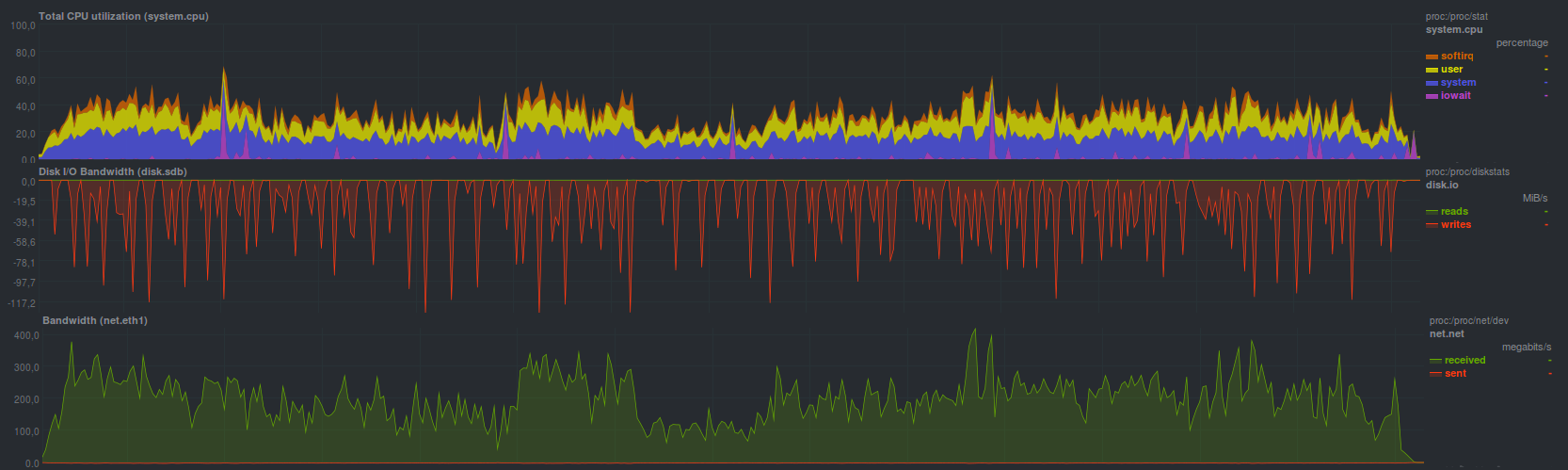

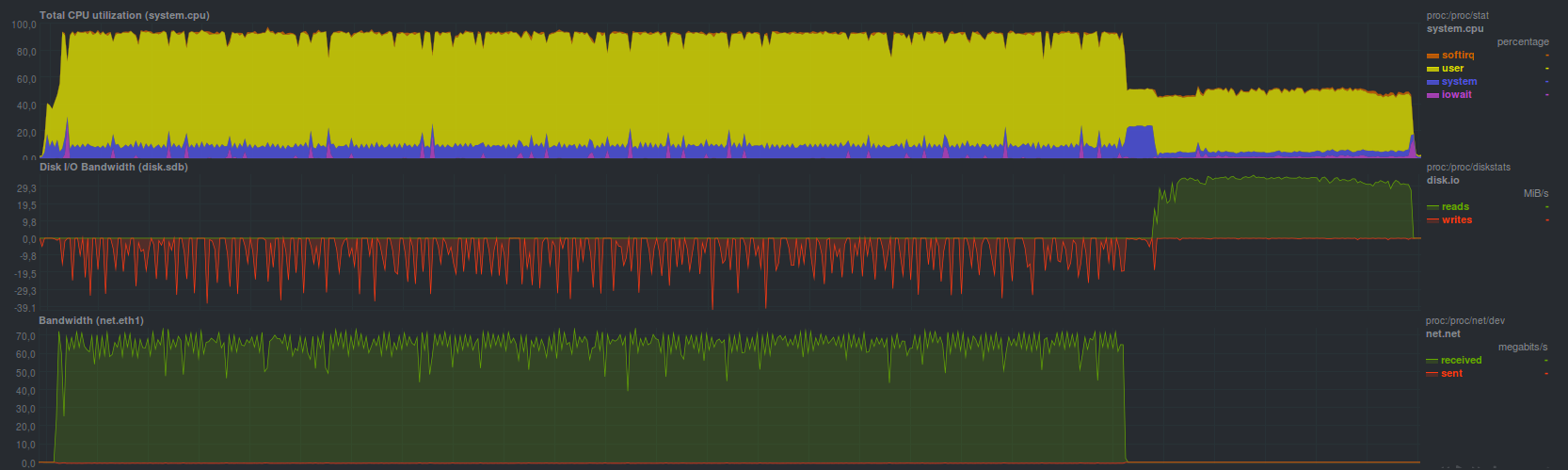

BackupPC showed about the same speed and load with the rsync transfer mode enabled, deploying the backup in 7 minutes and 42 seconds:

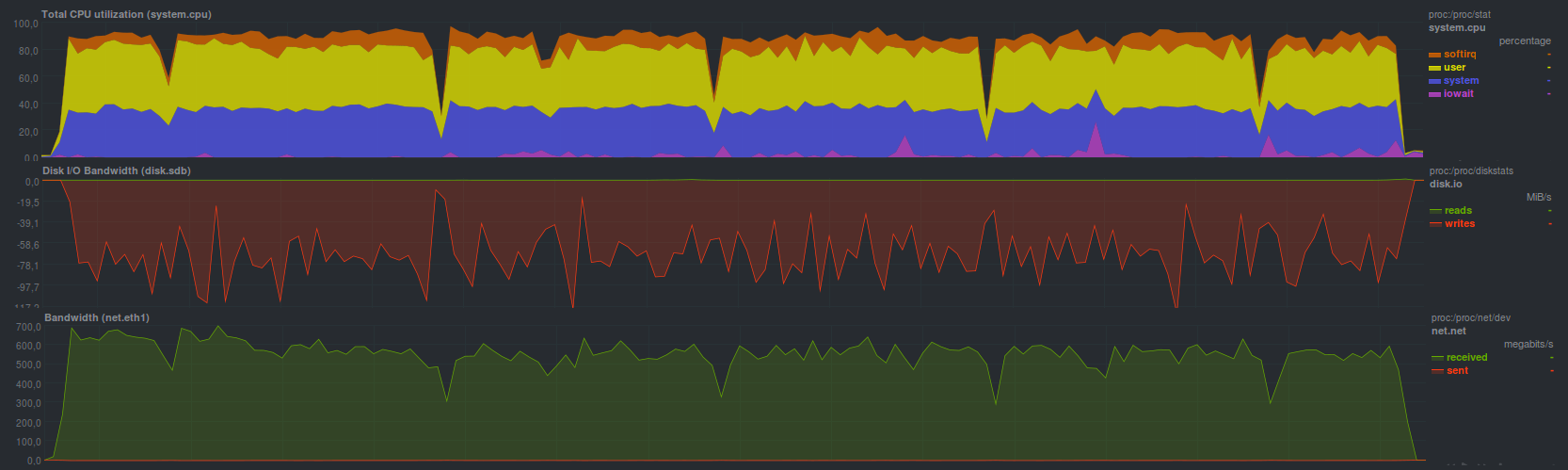

In the tar transfer mode, however, BackupPC was slower: it took 12 minutes and 15 seconds, while the processor load was about one and a half times lower:

Duplicity without encryption showed slightly better results, restoring the backup in 10 minutes and 58 seconds. With gpg encryption enabled, the recovery time increases to 15 minutes and 3 seconds. Also, when creating a repository for storing copies, you can specify the size of the archives into which the incoming data stream is split. On ordinary hard drives, and given the single-threaded mode of operation, this makes little difference. A difference may appear with different block sizes when hybrid storage is used. The load on the main server during recovery was as follows:

without encryption

with encryption

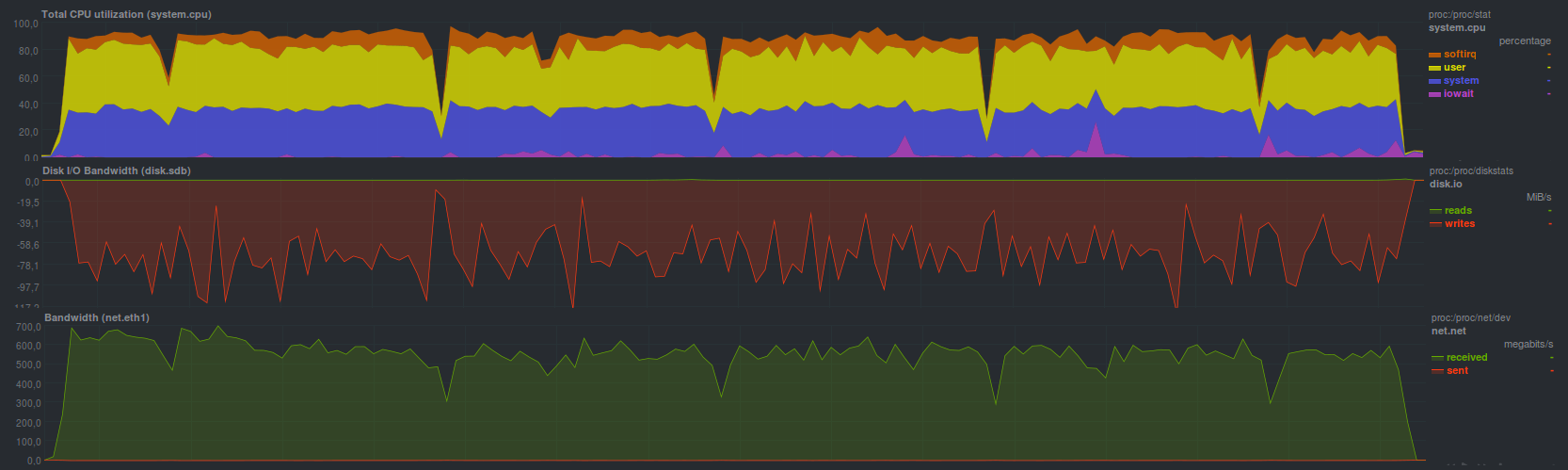

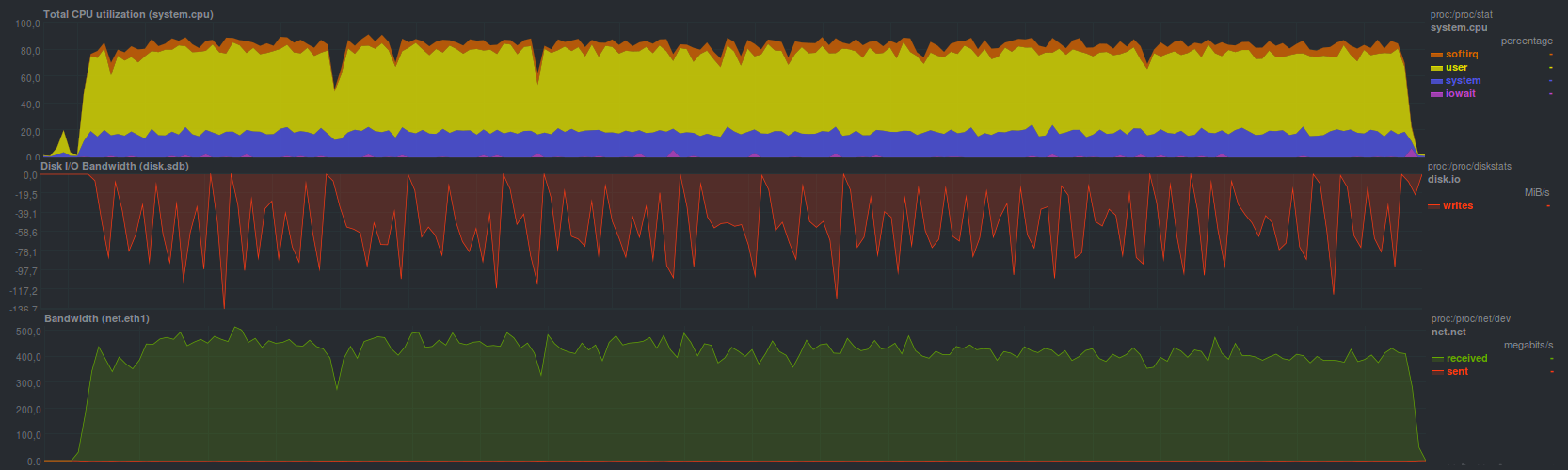

Duplicati showed a comparable recovery speed, finishing in 13 minutes and 45 seconds. Checking the correctness of the recovered data took another 5 minutes (about 19 minutes in total). The load was fairly high:

With the built-in AES encryption enabled, the recovery time was 21 minutes and 40 seconds, and the processor load was at its maximum (both cores!) during recovery; while the data was being checked, only one thread was active, occupying one processor core. Checking the data after recovery took the same 5 minutes (almost 27 minutes in total).

Result

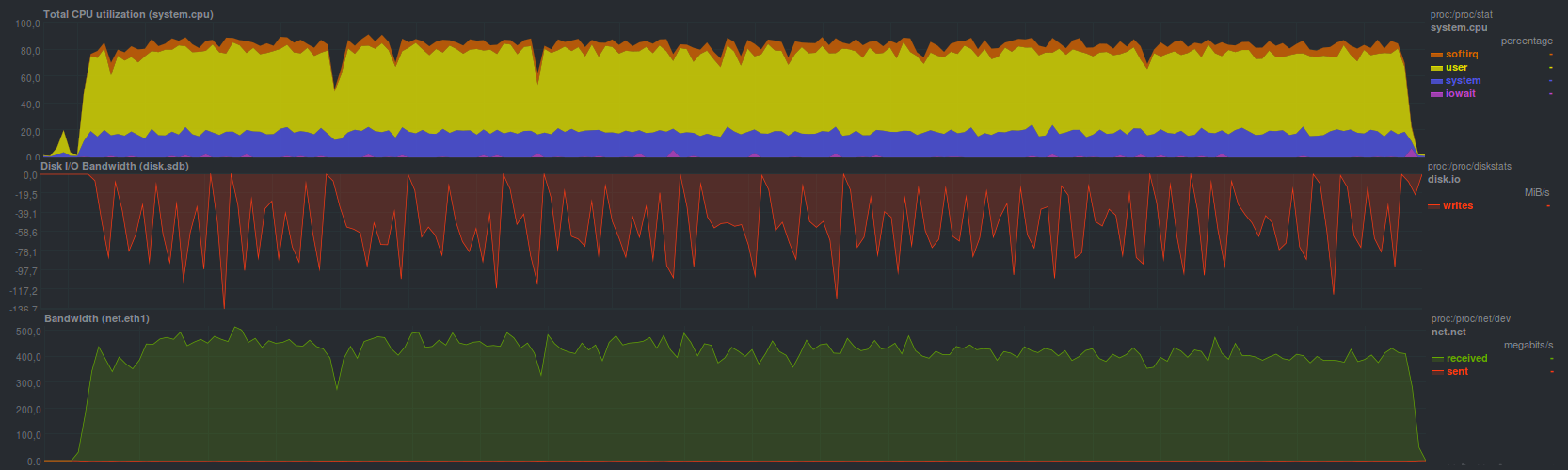

Duplicati handled recovery a bit faster when using the external gpg program for encryption, but overall the differences from the previous mode are minimal. The operating time was 16 minutes 30 seconds, plus 6 minutes of data verification. The load was as follows:

AMANDA, using tar, finished in 2 minutes 49 seconds, which is very close to regular tar. The system load is essentially the same:

When restoring a backup using zbackup, the following results were obtained:

- No encryption, lzma compression: operating time 11 minutes and 8 seconds

- AES encryption, lzma compression: operating time 14 minutes

- AES encryption, lzo compression: operating time 6 minutes and 19 seconds

Overall, not bad. Everything depends on the processor speed of the backup server, which is quite clearly visible from the program's running time with different compressors. On the backup server side, a regular tar was launched, so compared with it, recovery works about 3 times slower. It may be worth testing the multi-threaded mode with more than two threads.
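
To see how much the compressor matters, the slowdown relative to plain tar can be computed directly from the times reported in this article. The sketch below only does the arithmetic; the helper names `to_seconds` and `slowdown` are illustrative.

```python
import re


def to_seconds(duration):
    """Convert a duration such as '11m8s' into seconds."""
    match = re.fullmatch(r"(\d+)m(\d+)s", duration)
    if match is None:
        raise ValueError(f"unexpected duration format: {duration!r}")
    minutes, seconds = map(int, match.groups())
    return minutes * 60 + seconds


# Recovery times as reported in the text of this article.
TAR_BASELINE = to_seconds("2m43s")
ZBACKUP_RUNS = {
    "no encryption, lzma": "11m8s",
    "aes, lzma": "14m0s",
    "aes, lzo": "6m19s",
}


def slowdown(duration, baseline=TAR_BASELINE):
    """Return how many times longer a run took than the baseline."""
    return to_seconds(duration) / baseline


for mode, duration in ZBACKUP_RUNS.items():
    print(f"{mode}: {slowdown(duration):.1f}x the plain tar time")
```

The factor varies noticeably between lzo and lzma, which is consistent with the point that the result depends on the processor speed of the backup server.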

BorgBackup in unencrypted mode was slightly slower than tar, finishing in 2 minutes 45 seconds; unlike tar, however, it makes it possible to deduplicate the repository. The load was as follows:

If you enable BLAKE2-based encryption, restoring a backup slows down a bit. The recovery time in this mode is 3 minutes 19 seconds, and the load looked like this:

AES encryption is a little slower, with a recovery time of 3 minutes 23 seconds; the load did not change much:

Since Borg can work in multi-threaded mode, the processor load is at its maximum, and enabling additional functions simply increases the operating time. Apparently, multithreading is worth exploring here in the same way as for zbackup.

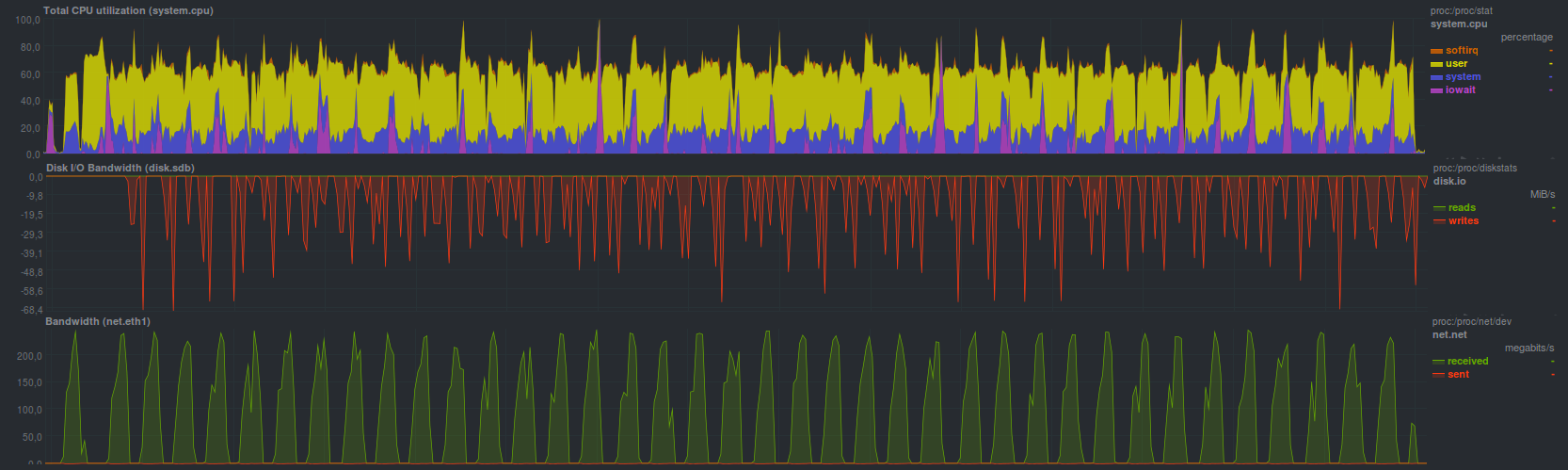

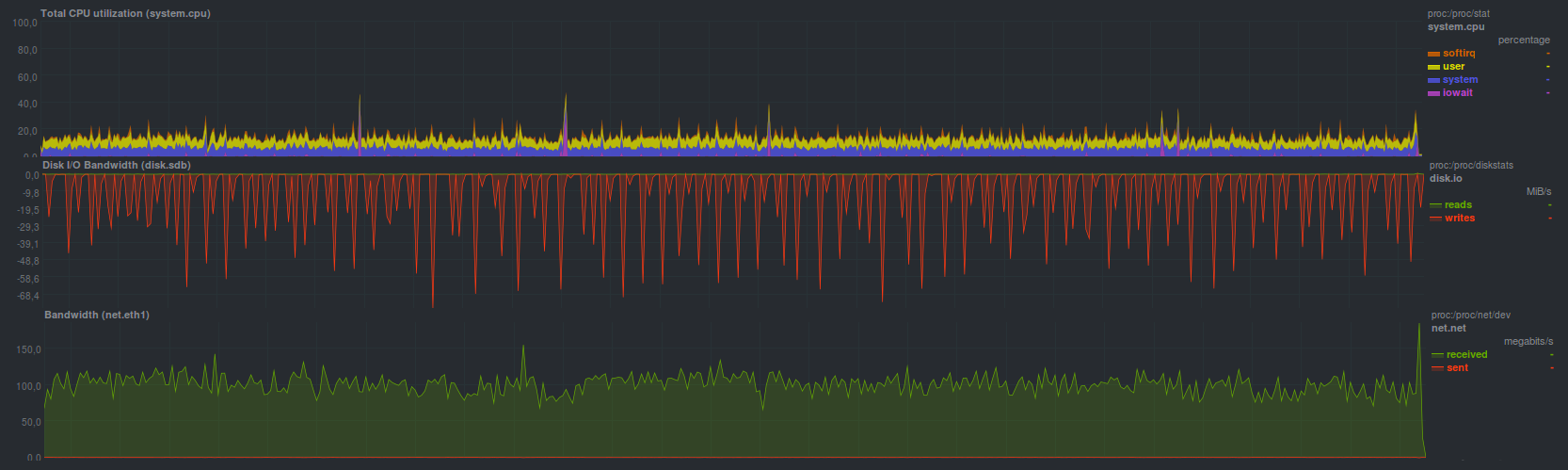

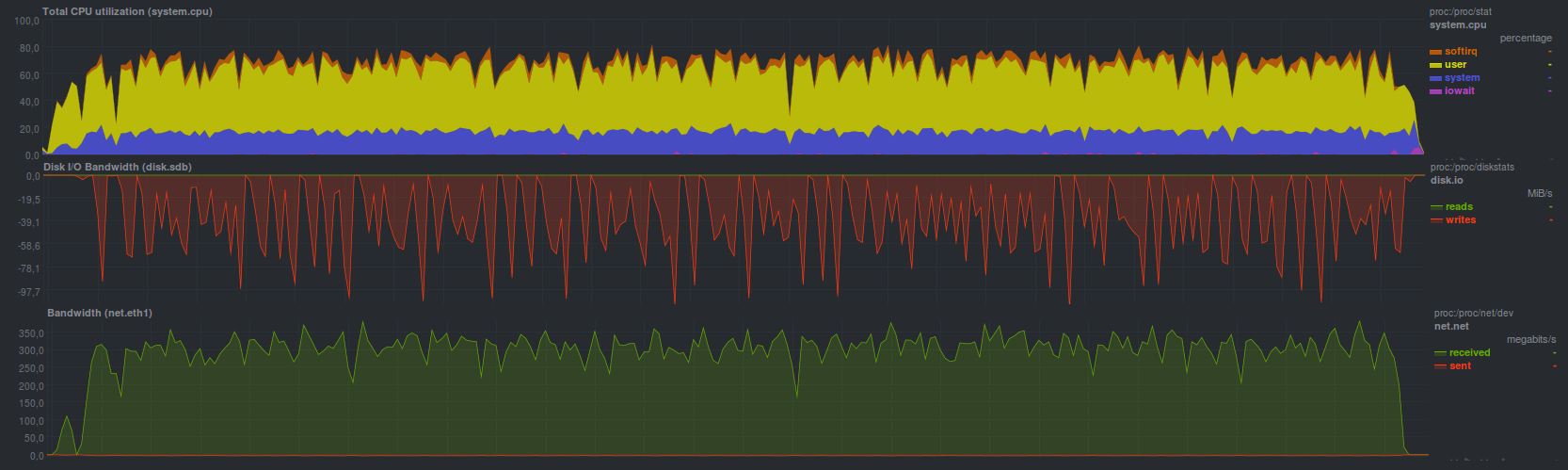

Restic handled recovery a bit more slowly: the operating time was 4 minutes 28 seconds. The load looked like this:

Apparently, the recovery process works in several threads, but not as efficiently as BorgBackup, although the time is comparable to regular rsync.

UrBackup restored the data in 8 minutes and 19 seconds, with the following load:

The load is still not very high, even lower than that of tar. There are bursts in places, but they do not exceed the load of one core.

Selection and justification of criteria for comparison

As mentioned in a previous article, the backup system must meet the following criteria:

- Ease of use

- Versatility

- Stability

- Speed

It is worth considering each item separately in more detail.

Ease of use

Ideally there would be a single “Make everything good” button, but coming back to real programs, the most convenient option is a familiar, standard principle of operation.

Most users will most likely be better off if they do not have to memorize a bunch of CLI keys, configure a bunch of different, often obscure options through a web interface or TUI, or set up alerts about failed operations. This also includes the ability to easily fit a backup solution into an existing infrastructure, as well as to automate the backup process. It also covers installation through a package manager or with one or two commands of the "download and unpack" type.

`curl | sudo bash` is a complicated method, because you need to verify what actually arrives from the link.
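
One common way to do that verification is to download the script to a file first and compare its checksum with a value published through a trusted channel before running anything. The sketch below assumes the publisher provides a SHA-256 digest; the function names are illustrative.

```python
import hashlib
import hmac


def sha256_of(path, chunk_size=1 << 20):
    """Return the hex SHA-256 digest of a file, reading it in chunks."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def is_trusted(path, expected_hex):
    """Check a downloaded file against a digest obtained out of band."""
    # compare_digest avoids leaking where the two strings first differ.
    return hmac.compare_digest(sha256_of(path), expected_hex.lower())
```

Only if `is_trusted(...)` returns `True` would the saved script be passed to the shell. The extra step is exactly what makes this installation method less simple than a package manager.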

For example, among the candidates considered, burp, rdiff-backup, and restic are simple solutions: their keys for the different operating modes are easy to remember. Borg and duplicity are a little more complicated. AMANDA turned out to be the most difficult. The rest fall somewhere in between in terms of ease of use. In any case, if you need more than 30 seconds to read the user manual, or you have to go to Google or another search engine, or scroll through a long help page, the solution is complicated, one way or another.

Some of the candidates considered can automatically send a message by e-mail or Jabber, while others rely on alerts configured in the system. Moreover, complex solutions most often have notification settings that are not entirely obvious. In any case, if the backup program returns a non-zero exit code that is correctly understood by the system's periodic task service (the message goes to the system administrator or straight to monitoring), the situation is simple. But if a backup system that does not run on the backup server cannot be configured in an obvious way to report problems, the complexity is already excessive. In any case, issuing warnings and other messages only to the web interface and/or the log is bad practice, since they will most often be ignored.
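
The "simple" case described here, where a non-zero exit code reaches the scheduler, can be sketched with a thin wrapper. This is an illustration only: `run_backup` is a hypothetical helper, and the actual backup command is supplied by the caller.

```python
import subprocess
import sys


def run_backup(cmd):
    """Run a backup command; report failures on stderr and return the exit code."""
    result = subprocess.run(cmd, capture_output=True, text=True)
    if result.returncode != 0:
        # cron typically mails any output produced by a job to its owner,
        # so writing to stderr is enough to surface the failure.
        print(f"backup failed with exit code {result.returncode}", file=sys.stderr)
        print(result.stderr, file=sys.stderr, end="")
    return result.returncode
```

A script scheduled as a periodic task would end with something like `sys.exit(run_backup([...]))`, so that the scheduler or the monitoring system sees the same exit code as the backup tool produced.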

As for automation, a simple program can read environment variables that set its mode of operation, or it has a well-developed CLI that can fully reproduce what is available through a web interface, for example. This also includes the possibility of continuous operation, the availability of extension points, and so on.
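
Configuration through environment variables might look like the following sketch. The variable names `BACKUP_MODE` and `BACKUP_REPOSITORY`, the set of modes, and the `BackupConfig` type are all hypothetical and do not belong to any of the tools compared here.

```python
import os
from dataclasses import dataclass

# Hypothetical operating modes for an imaginary backup script.
VALID_MODES = {"full", "incremental"}


@dataclass(frozen=True)
class BackupConfig:
    mode: str
    repository: str


def load_config(env=None):
    """Build the configuration from environment variables (or a dict for tests)."""
    env = os.environ if env is None else env
    mode = env.get("BACKUP_MODE", "full")
    if mode not in VALID_MODES:
        raise ValueError(f"BACKUP_MODE must be one of {sorted(VALID_MODES)}")
    repository = env.get("BACKUP_REPOSITORY")
    if not repository:
        raise ValueError("BACKUP_REPOSITORY must be set")
    return BackupConfig(mode=mode, repository=repository)
```

Failing early on a missing or invalid variable keeps the behavior predictable when the script is started by a scheduler rather than by a person.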

Versatility

This partially echoes the previous subsection in terms of automation: fitting the backup process into the existing infrastructure should not be a particular problem.

It is worth noting that the use of non-standard ports (except for the web interface), encryption implemented in a non-standard way, and data exchange over a non-standard protocol are signs of a non-universal solution. Most candidates show these signs in one way or another, for an obvious reason: simplicity and versatility are usually not compatible. Burp is an exception, and there are others.

One sign of versatility is the ability to work over regular ssh.

Speed

The most debatable and controversial point. On the one hand, you start the process, it finishes as quickly as possible, and it does not interfere with the main tasks. On the other hand, there is a surge in traffic and CPU load during the backup. It is also worth noting that the fastest backup programs are usually the poorest in features that matter to users. Then again, if getting back one unfortunate text file of a few dozen bytes containing a password, because of which the whole service is down (yes, I understand that the backup process is most often not to blame here), requires sequentially re-reading all the files in the repository or unpacking the whole archive, the backup system is by no means fast. Another point that often becomes a stumbling block is the speed of deploying a backup from the archive. Tools that can simply copy or move files to the right place without special manipulations (rsync, for example) have a clear advantage here, but most often the problem has to be solved organizationally and empirically: measure the recovery time of the backup and openly inform users about it.

Stability

Stability should be understood as follows: on the one hand, it must be possible to deploy the backup back one way or another; on the other hand, the tool must be resistant to various problems: network interruptions, disk failure, deletion of part of the repository.
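
Whether a backup really deploys back correctly can be checked independently of the tool by comparing the restored tree with the original. The following is a minimal sketch under the assumption that both trees are available locally; the function names are illustrative, and file metadata (permissions, ownership, timestamps) is deliberately ignored.

```python
import hashlib
from pathlib import Path


def tree_digests(root):
    """Map each file's path (relative to root) to its SHA-256 hex digest."""
    root = Path(root)
    digests = {}
    for path in sorted(root.rglob("*")):
        if path.is_file():
            # read_bytes() is fine for a sketch; stream large files in practice.
            name = path.relative_to(root).as_posix()
            digests[name] = hashlib.sha256(path.read_bytes()).hexdigest()
    return digests


def differing_files(original, restored):
    """Return files that are missing on either side or differ in content."""
    left, right = tree_digests(original), tree_digests(restored)
    return sorted(
        name for name in left.keys() | right.keys()
        if left.get(name) != right.get(name)
    )
```

An empty result from `differing_files(...)` means every file is present on both sides with identical content.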

Comparison of backup tools

| Tool | Mode | Copy time | Recovery time | Easy installation | Easy setup | Easy to use | Easy automation | Need a client/server? | Repository integrity check | Differential copies | Works through a pipe | Versatility | Independence | Repository transparency | Encryption | Compression | Deduplication | Web interface | Cloud upload | Windows support | Score |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| Rsync | | 4m15s | 4m28s | Yes | no | no | no | Yes | no | no | Yes | no | Yes | Yes | no | no | no | no | no | Yes | 6 |
| Tar | plain | 3m12s | 2m43s | Yes | no | no | no | no | no | Yes | Yes | no | Yes | no | no | no | no | no | no | Yes | 8.5 |
| | gzip | 9m37s | 3m19s | Yes | | | | | | | | | | | | | | | | | |
| Rdiff-backup | | 16m26s | 17m17s | Yes | Yes | Yes | Yes | Yes | no | Yes | no | Yes | no | Yes | no | Yes | Yes | Yes | no | Yes | 11 |
| Rsnapshot | | 4m19s | 4m28s | Yes | Yes | Yes | Yes | no | no | Yes | no | Yes | no | Yes | no | no | Yes | Yes | no | Yes | 12.5 |
| Burp | | 11m9s | 7m2s | Yes | no | Yes | Yes | Yes | Yes | Yes | no | Yes | Yes | no | no | Yes | no | Yes | no | Yes | 10.5 |
| Duplicity | no encryption | 16m48s | 10m58s | Yes | Yes | no | Yes | no | Yes | Yes | no | no | Yes | no | Yes | Yes | no | Yes | no | Yes | 11 |
| | gpg | 17m27s | 15m3s | | | | | | | | | | | | | | | | | | |
| Duplicati | no encryption | 20m28s | 13m45s | no | Yes | no | no | no | Yes | Yes | no | no | Yes | no | Yes | Yes | Yes | Yes | Yes | Yes | 11 |
| | aes | 29m41s | 21m40s | | | | | | | | | | | | | | | | | | |
| | gpg | 26m19s | 16m30s | | | | | | | | | | | | | | | | | | |
| Zbackup | no encryption | 40m3s | 11m8s | Yes | Yes | no | no | no | Yes | Yes | Yes | no | Yes | no | Yes | Yes | Yes | no | no | no | 10 |
| | aes | 42m0s | 14m1s | | | | | | | | | | | | | | | | | | |
| | aes + lzo | 18m9s | 6m19s | | | | | | | | | | | | | | | | | | |
| Borgbackup | no encryption | 4m7s | 2m45s | Yes | Yes | Yes | Yes | Yes | Yes | Yes | Yes | Yes | Yes | no | Yes | Yes | Yes | Yes | no | Yes | 16 |
| | aes | 4m58s | 3m23s | | | | | | | | | | | | | | | | | | |
| | blake2 | 4m39s | 3m19s | | | | | | | | | | | | | | | | | | |
| Restic | | 5m38s | 4m28s | Yes | Yes | Yes | Yes | no | Yes | Yes | Yes | Yes | Yes | no | Yes | no | Yes | no | Yes | Yes | 15.5 |
| Urbackup | | 8m21s | 8m19s | Yes | Yes | Yes | no | Yes | no | Yes | no | Yes | Yes | no | Yes | Yes | Yes | Yes | no | Yes | 12 |
| Amanda | | 9m3s | 2m49s | Yes | no | no | Yes | Yes | Yes | Yes | no | Yes | Yes | Yes | Yes | Yes | no | Yes | Yes | Yes | 13 |
| Backuppc | rsync | 12m22s | 7m42s | Yes | no | Yes | Yes | Yes | Yes | Yes | no | Yes | no | no | Yes | Yes | no | Yes | no | Yes | 10.5 |
| | tar | 12m34s | 12m15s | | | | | | | | | | | | | | | | | | |

Table Legend:

- Green: the operating time is less than five minutes, or the answer is “Yes” (except for the “Need a client/server?” column), 1 point

- Yellow: the operating time is five to ten minutes, 0.5 points

- Red: the operating time is more than ten minutes, or the answer is “No” (except for the “Need a client/server?” column), 0 points
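
The legend can be read as a per-cell scoring rule, sketched below. This is one interpretation only: it assumes that the exception for the client/server column means the answer is scored in reverse, and the totals in the table were assigned by the author and may reflect judgment calls that this sketch does not capture. The function names are illustrative.

```python
def time_points(seconds):
    """Score an operating time: under 5 min -> 1, 5 to 10 min -> 0.5, over 10 min -> 0."""
    if seconds < 5 * 60:
        return 1.0
    if seconds <= 10 * 60:
        return 0.5
    return 0.0


def answer_points(answer, inverted=False):
    """Score a Yes/no cell; inverted=True models the client/server column (assumption)."""
    is_yes = answer.strip().lower() == "yes"
    if inverted:
        is_yes = not is_yes
    return 1.0 if is_yes else 0.0
```

For instance, a recovery time of 4m28s (268 seconds) falls into the green band, while 7m2s (422 seconds) falls into the yellow one.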

According to the table above, the simplest, fastest, and at the same time most convenient and powerful backup tool is BorgBackup. Restic took second place, and the rest of the candidates scored roughly the same, with a spread of one or two points.

I thank everyone who read the series to the end. I suggest discussing the options and proposing your own, if you have any. As the discussion progresses, the table may be extended.

The series will conclude with a final article, which will attempt to derive an ideal, fast, and manageable backup tool that lets you quickly deploy a copy back while remaining convenient and simple to configure and maintain.

Announcement

Backup, Part 1: Why do you need a backup, an overview of methods, technologies

Backup, Part 2: Overview and Testing rsync-based backup tools

Backup, Part 3: Overview and Testing duplicity, duplicati

Backup, Part 4: Overview and Testing zbackup, restic, borgbackup

Backup, Part 5: Testing bacula and veeam backup for linux

Backup, Part 6: Comparing Backup Tools

Backup, Part 7: Conclusions
