Serverless Telegram bot in Yandex.cloud, or 4.6 kopecks per 1000 messages

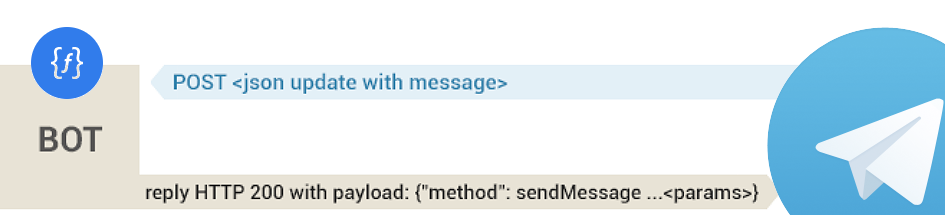

"Whenever you receive a webhook update, you have two options"

From the Telegram Bot API FAQ

Hello, Habr!

For a long time, the serverless concept (or more precisely, its implementation as the AWS Lambda service) was, for me, a fairly clear but very abstract idea. It often came up on the Radio-T podcast and in discussions on Reddit, but it never made it into my life. My work projects don't live in the cloud, and for home projects, why bother? Virtual machines keep getting cheaper, I have mastered Docker, and everything works fine.

But the presentation of Yandex Cloud Functions, and in particular the announced pricing for the service, gave me new food for thought.

TL;DR: on a rainy Friday evening we will write a simple Telegram bot in JavaScript that replies to requests with simple messages. If this is your home project, running it will almost certainly cost you much less than the cheapest VPS.

Let's go.

What is serverless, in the most general sense?

I won't go deep into the weeds; overview articles on this topic appear on Habr regularly. In short, serverless lets you put a function in the cloud, written in one of the languages the platform supports, and set the condition that triggers it. That's all. When the trigger fires, a virtual environment starts up, the function runs in it, and then it shuts down, together with the environment.

What are the advantages of this approach?

Security

You get a secure, isolated environment with the latest version of the compiler/interpreter.

Instead of tracking package updates on a real OS inside a virtual machine and configuring security policies and a firewall, you upload your program and it runs.

Stability and resiliency

Instead of configuring pm2, setting up a restart policy, watching for memory leaks, and learning the nuances of deployment, you just upload the program, and the service provider takes care of the rest.

Price, especially for light home workloads

Billing takes into account the memory reserved for the function while it runs and the number of calls. According to the documentation, 10,000,000 invocations, each running for 800 ms with a 512 MB memory limit, will cost 3,900 rubles.

What does this mean for me? My typical pet project is a bot that answers questions about the schedule of an event well known in narrow circles. It needs to run once a year, for a few days. Last year it answered 1,000 requests from participants; 128 MB of memory is more than enough for it, and the function runs for 300 ms. This use case will cost 0.046 ₽.

Yes, 4.6 kopecks. Even better, I don't have to spend time on configuration: no pm2 rules, no keeping a Dockerfile or environment up to date, and, as the cherry on top, a 99.9% SLA.
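Both prices above are consistent with a simple two-part model: a charge per call plus a charge for reserved memory multiplied by execution time (GB-hours). The sketch below assumes rates of 10 ₽ per million calls and 3.42 ₽ per GB-hour. These rates are not quoted from the price list; they are inferred because they reproduce both the 3,900 ₽ and the 4.6-kopeck figures, so check the current tariff before relying on them.

```javascript
// ASSUMPTION: rates inferred from the two figures in the text,
// not copied from the official price list.
const RUB_PER_MILLION_CALLS = 10;
const RUB_PER_GB_HOUR = 3.42;

// Estimated cost in rubles for `calls` invocations, each running
// `durationMs` milliseconds with a `memoryMb` memory limit.
function estimateCostRub(calls, durationMs, memoryMb) {
  const gbHours = (calls * (durationMs / 1000) * (memoryMb / 1024)) / 3600;
  const callCost = (calls / 1e6) * RUB_PER_MILLION_CALLS;
  const computeCost = gbHours * RUB_PER_GB_HOUR;
  return callCost + computeCost;
}

// Documentation example: 10,000,000 calls, 800 ms each, 512 MB.
console.log(estimateCostRub(10000000, 800, 512).toFixed(2)); // prints "3900.00"

// The pet-project bot: 1,000 calls, 300 ms each, 128 MB.
console.log(estimateCostRub(1000, 300, 128).toFixed(4)); // prints "0.0456"
```

For the bot, about 0.036 ₽ of the total is compute and 0.01 ₽ is the per-call charge, which rounds to the 4.6 kopecks mentioned above.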

Among the problems not yet solved (though I suppose it's only a matter of time) are binding custom domains and fine-grained control over which HTTP methods trigger the function. Right now the HTTP trigger fires on any DELETE, GET, HEAD, OPTIONS, PATCH, POST or PUT request to an auto-generated endpoint like https://functions.yandexcloud.net/xxxxxxxxxxxxxxxx .

The good news is that the endpoint is full-fledged HTTPS that meets all of Telegram's requirements for receiving updates via webhooks. AWS Lambda, by comparison, offers add-ons such as API Gateway and more flexible trigger configuration, if you need them.

An obvious limitation of the serverless approach itself, regardless of the platform: you have to use exactly what you are given. You cannot write code in unsupported programming languages or use non-standard compiler/interpreter options. There may also be additional restrictions designed to protect everyone involved.

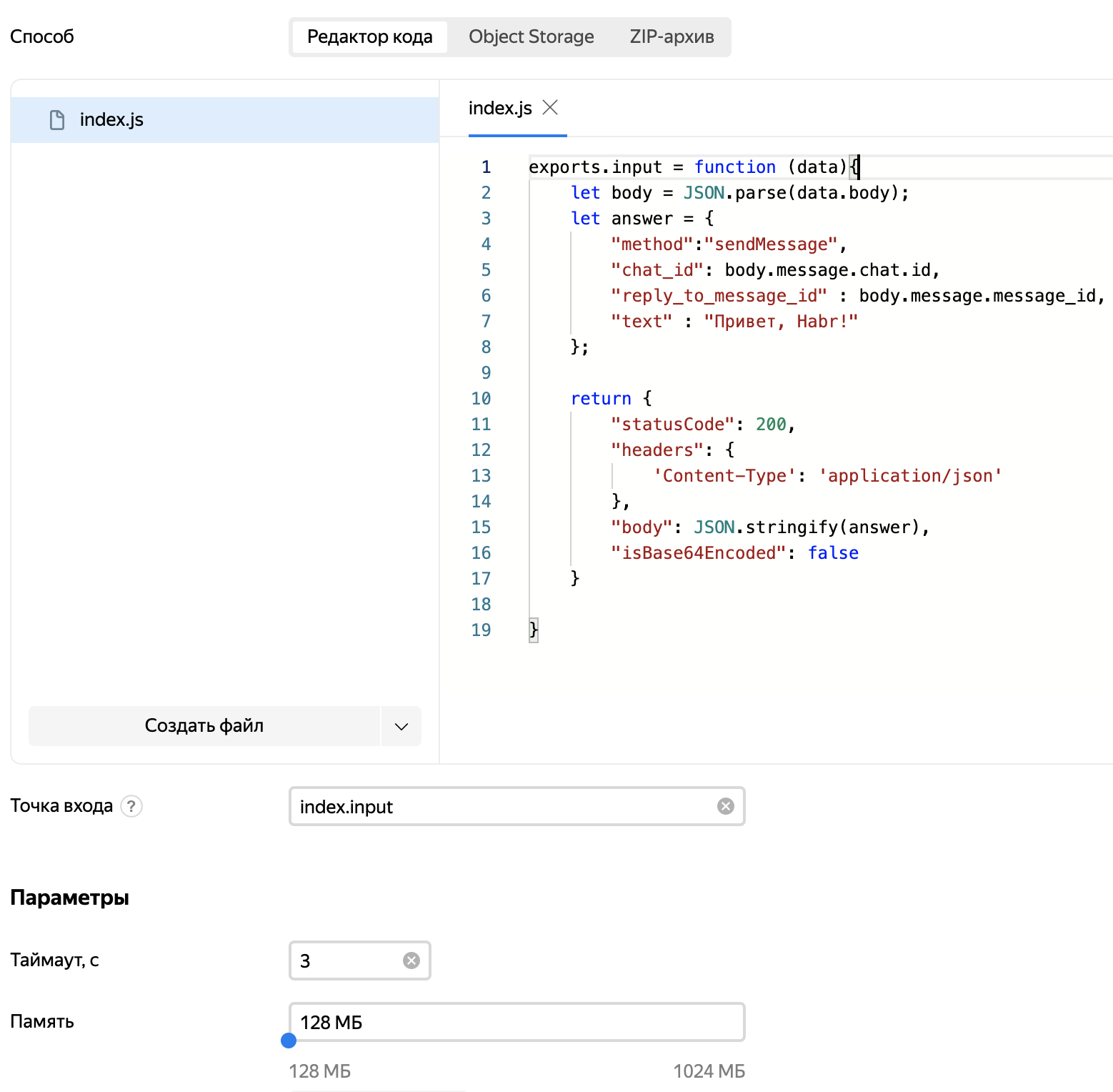

How do you create a .js file that runs in Yandex.Cloud?

A short walkthrough using the web interface:

- create a function

- in the web interface, create a file with any name and the .js extension

- choose a runtime: nodejs10 or nodejs12

- in the file, write a function that takes one parameter and assign it to exports.myFunction (or to any other field of exports)

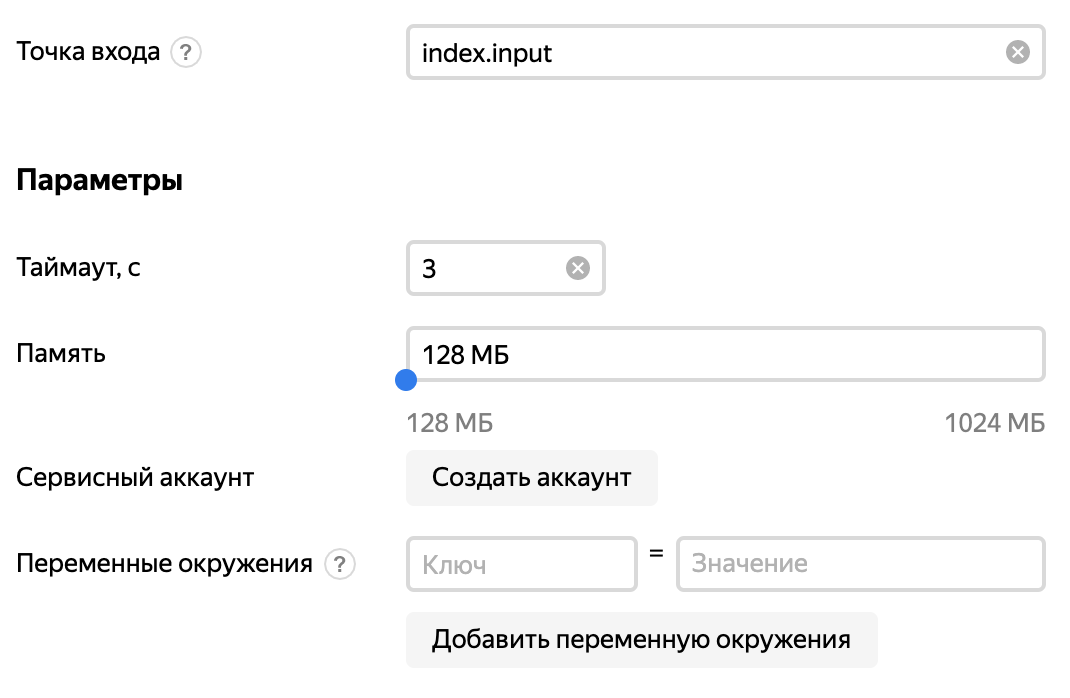

- set the function timeout, the memory limit (128 MB to 1024 MB in 128 MB increments), and the entry point (filename.myFunction)

- make the function public

A function written in a file can:

Receive the HTTP request data through its input parameter:

The function does not receive the raw request and, of course, does not control how the request is handled; instead, its only parameter is an object describing the request:

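{ "httpMethod": "<HTTP method name>", "headers": "<dictionary of HTTP header string values>", "multiValueHeaders": "<dictionary of lists of HTTP header values>", "queryStringParameters": "<dictionary of queryString parameters>", "multiValueQueryStringParameters": "<dictionary of lists of queryString parameter values>", "requestContext": "<dictionary with the request context>", "body": "<request body>", "isBase64Encoded": <true or false> }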
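Because `body` arrives as a string that may be base64-encoded, it helps to decode it in one place. A minimal sketch; `parseJsonBody` is a hypothetical helper, not part of the platform, and it lets `JSON.parse` throw on malformed input.

```javascript
// Extracts the JSON payload from the request object described above.
// When isBase64Encoded is true, body holds base64 text that must be
// decoded first; otherwise it is the raw body string.
function parseJsonBody(event) {
  if (!event.body) {
    return null; // no body, e.g. a GET request
  }
  const raw = event.isBase64Encoded
    ? Buffer.from(event.body, 'base64').toString('utf8')
    : event.body;
  return JSON.parse(raw); // throws on malformed JSON
}

const update = parseJsonBody({ body: '{"update_id":1}', isBase64Encoded: false });
console.log(update.update_id); // prints 1
```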

Reply to the HTTP request:

according to the documentation, by returning an object of the following form:

{ "statusCode": <HTTP response code>, "headers": "<dictionary of HTTP header string values>", "multiValueHeaders": "<dictionary of lists of HTTP header values>", "body": "<response body>", "isBase64Encoded": <true or false> }
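The `isBase64Encoded` flag matters when the function returns binary data. The sketch below assumes, by analogy with AWS Lambda proxy responses, that the platform decodes `body` before sending it when the flag is true; the documentation excerpt above only lists the field. `binaryResponse` is a hypothetical helper.

```javascript
// Hypothetical helper: returns binary content (for example an image)
// as base64 text and marks it with isBase64Encoded: true.
function binaryResponse(buffer, contentType) {
  return {
    statusCode: 200,
    headers: { 'Content-Type': contentType },
    body: buffer.toString('base64'),
    isBase64Encoded: true,
  };
}

// The first two bytes of a PNG signature, standing in for a real image.
const res = binaryResponse(Buffer.from([0x89, 0x50]), 'image/png');
console.log(res.body); // prints iVA=
```

Plain text or JSON bodies can be returned as-is with `isBase64Encoded: false`, as the bot below does.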

So, something Friday-ish and useless

First, let's look at what has already been written before us: there are plenty of implementations of such bots for AWS Lambda.

They share one drawback: to avoid reinventing the wheel and to provide a familiar interface, all of these implementations respond to an incoming update by making a separate POST request to the Telegram API server. But there is a simpler way.

As the title image and the quote at the beginning of the post suggest, when working through a webhook, Telegram reads the HTTP response to its update to find out whether our bot processed it. Moreover, it is ready to accept a reply, in the form of a Bot API method call, as part of that same response.

According to the documentation, the response can contain only one method call (I checked sendMessage and sendPhoto). For many projects, that is enough.
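The reply is just a JSON body in which the `method` field names the Bot API method and the remaining fields are its parameters. A minimal sketch with a hypothetical `webhookReply` helper, here asking Telegram to call sendPhoto; the chat id and photo URL are placeholders.

```javascript
// Builds an HTTP response that asks Telegram to call `method` with
// `params`: the method name sits next to the usual method parameters.
function webhookReply(method, params) {
  const body = Object.assign({ method: method }, params);
  return {
    statusCode: 200,
    headers: { 'Content-Type': 'application/json' },
    body: JSON.stringify(body),
    isBase64Encoded: false,
  };
}

const reply = webhookReply('sendPhoto', {
  chat_id: 12345,
  photo: 'https://example.com/picture.jpg',
  caption: 'Hello, Habr!',
});
console.log(reply.body);
// prints {"method":"sendPhoto","chat_id":12345,"photo":"https://example.com/picture.jpg","caption":"Hello, Habr!"}
```

The trade-off of this approach is that the function never learns whether Telegram actually executed the call.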

Following tradition, let's greet the Habr community:

exports.input = function (data){ let body = JSON.parse(data.body); let answer = { "method":"sendMessage", "chat_id": body.message.chat.id, "reply_to_message_id" : body.message.message_id, "text" : "Hello, Habr!" }; return { "statusCode": 200, "headers": { 'Content-Type': 'application/json' }, "body": JSON.stringify(answer), "isBase64Encoded": false }; };

Set the function settings to the minimum.

And tell Telegram that we will use a webhook:

curl -F "url=https://functions.yandexcloud.net/{secret_function_id}" https://api.telegram.org/bot{secret_bot_key}/setWebhook
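If you prefer to stay in Node.js, the same registration can be sketched as below. Instead of curl's form field, it passes `url` as a query parameter, which the Bot API also accepts. `buildSetWebhookUrl` and `setWebhook` are hypothetical helpers, and the placeholders mirror the curl command.

```javascript
const https = require('https');

// Builds the setWebhook URL with the function endpoint URL-encoded.
function buildSetWebhookUrl(botToken, functionUrl) {
  const params = new URLSearchParams({ url: functionUrl });
  return 'https://api.telegram.org/bot' + botToken + '/setWebhook?' + params.toString();
}

// Calls setWebhook and resolves with the parsed Bot API response.
function setWebhook(botToken, functionUrl) {
  return new Promise(function (resolve, reject) {
    https.get(buildSetWebhookUrl(botToken, functionUrl), function (res) {
      let data = '';
      res.setEncoding('utf8');
      res.on('data', function (chunk) { data += chunk; });
      res.on('end', function () { resolve(JSON.parse(data)); });
    }).on('error', reject);
  });
}

// Usage (placeholders as in the curl command):
// setWebhook('{secret_bot_key}', 'https://functions.yandexcloud.net/{secret_function_id}')
//   .then(console.log); // the Bot API answers with a JSON object containing "ok"
```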

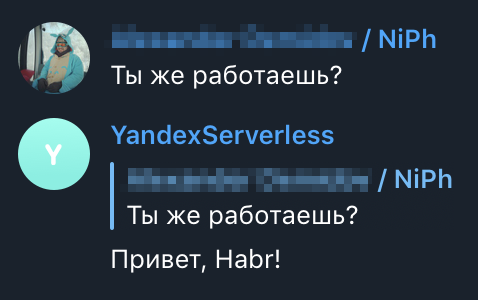

That's it. The bot works.

You can chat with it: @YandexServerlessBot

To sum up: in some cases serverless is extremely cheap and convenient and saves a lot of time, and documentation is always worth reading carefully, because it can pleasantly surprise you.

If you are interested, take a look at the Yandex Cloud Functions documentation: there is a lot of interesting material there, from integration with other cloud services to debugging, load graphs, and more.

The conference video is also available on YouTube.

UPD: As further investigation showed (thanks to IRT for the tip), the Telegram servers are reachable from the function without this trick, so you can safely use ordinary API requests as well.
