12 new Azure Media Services features with artificial intelligence

Microsoft's mission is to empower every person and organization on the planet to achieve more. The media industry is a great example of this mission in practice. We live in an era when more content is being created and consumed, in more ways and on more devices. At IBC 2019, we shared the latest innovations we are working on and talked about how they can help transform your media workflows.

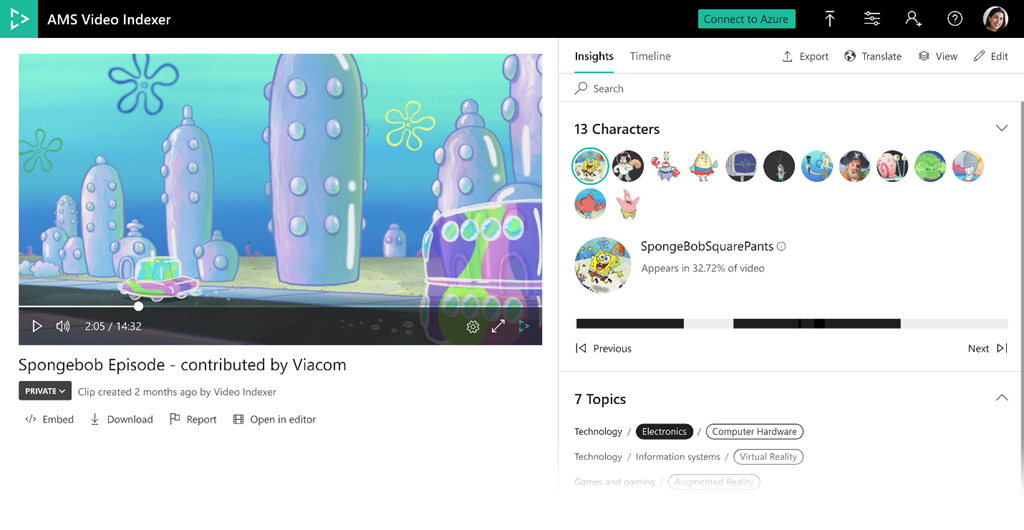

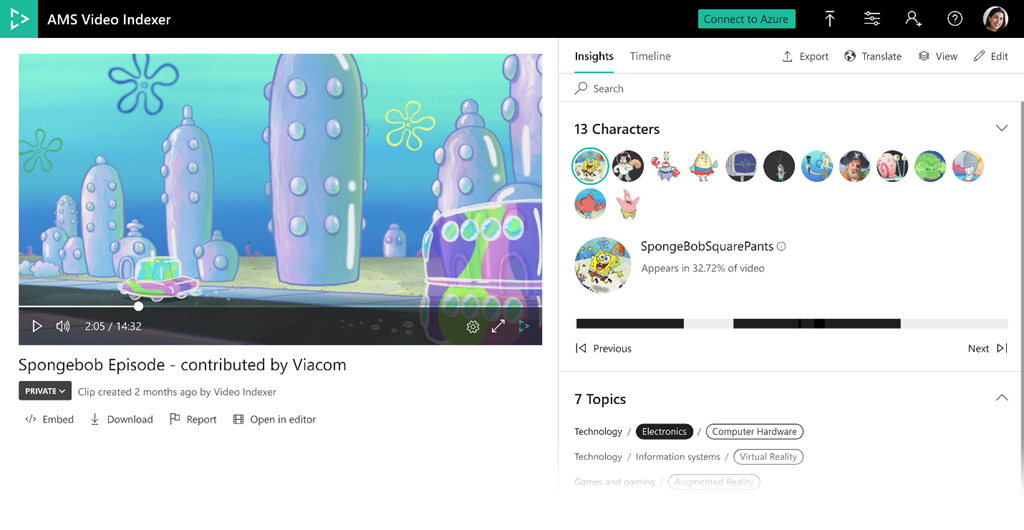

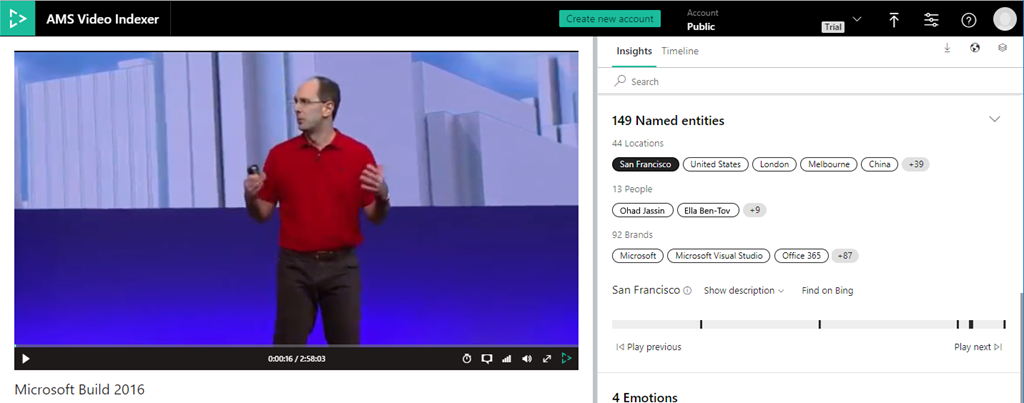

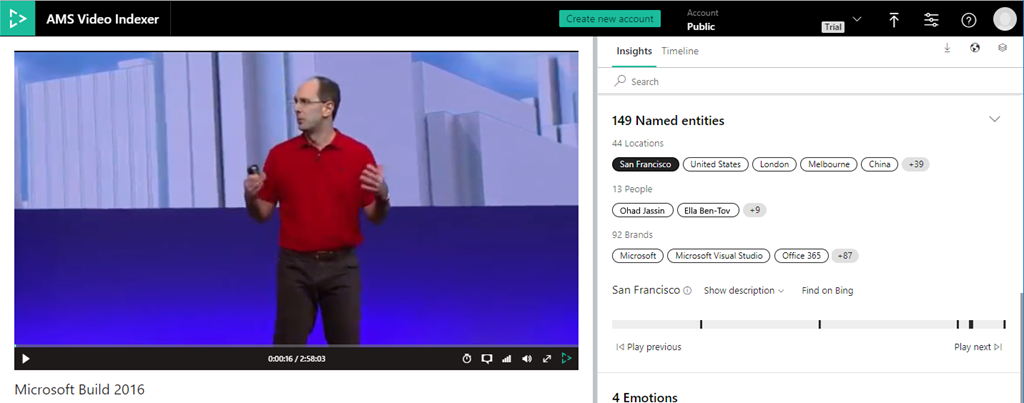

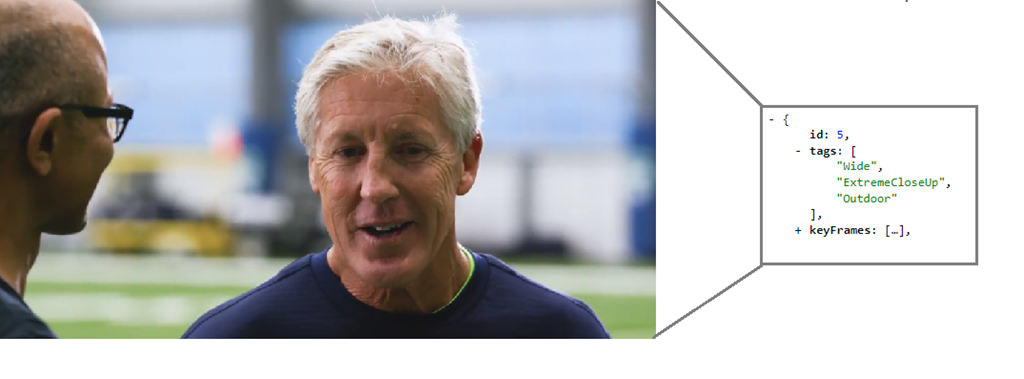

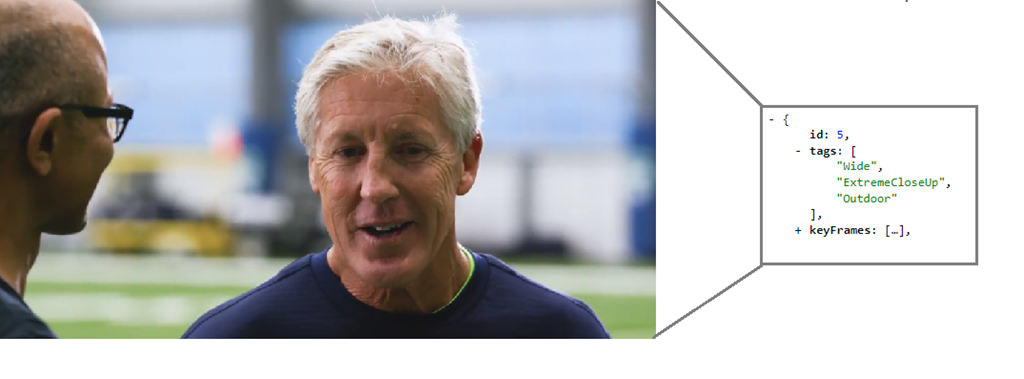

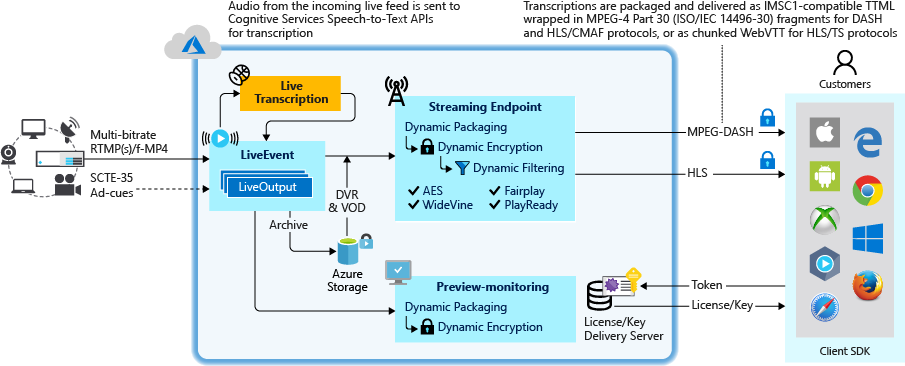

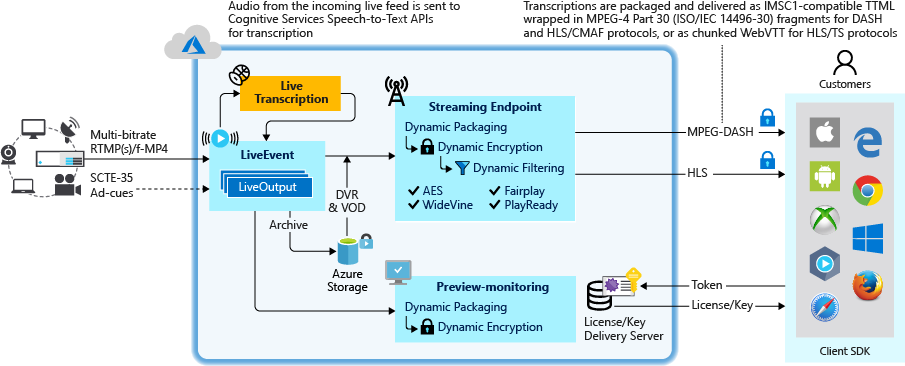

Video Indexer introduces support for animations and multilingual content

Last year at IBC, we made our award-winning Azure Media Services Video Indexer available to the public, and this year it got even better. Video Indexer automatically extracts insights and metadata from media files, such as spoken words, faces, emotions, topics, and brands, and you don't need to be a machine learning expert to use it.

Our latest announcements include previews of two highly requested and differentiated capabilities: animated character recognition and multilingual speech transcription. We are also making several additions to the models already available in Video Indexer.

Recognition of animated characters

Animated content, or cartoons, is one of the most popular content types, but standard computer vision models built to recognize human faces do not work well with it, especially when the characters have no human features. In the new preview, Video Indexer is integrated with Microsoft's Azure Custom Vision service, providing a new set of models that automatically detect and group animated characters and make it easy to tag and recognize them through integrated custom vision models.

The models are integrated into a single pipeline, so anyone can use the service without any machine learning expertise. Results are available through the Video Indexer portal, which requires no code, or through the REST API for quick integration into your own applications.

We built these animated character models together with several customers who provided real animated content for training and testing. The value of the new functionality was well described by Andy Gutteridge, senior director of studio technology and post-production at Viacom International Media Networks, which was one of the data providers: “Adding a robust AI-based animated content detection feature will allow us to quickly and efficiently find and catalog character metadata from our library content. Most importantly, it will give our creative teams the opportunity to instantly find the right content, minimize the time spent on managing the media, and allow us to focus on creativity.”

You can start exploring animated character recognition from the documentation page.

Identification and transcription of content in several languages

Some media assets, such as news, newsreels, and interviews, contain recordings of people speaking different languages. Most existing speech-to-text solutions require the spoken language to be specified in advance, which makes it difficult to transcribe multilingual videos.

Our new automatic spoken language identification feature uses machine learning to identify the languages present in a media asset, across various types of content. Once detected, each language segment is automatically transcribed in the corresponding language, and all segments are then combined into a single multilingual transcript file.
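The detect, transcribe, and combine flow described above can be sketched in a few lines. This is an illustration of the idea only, not Video Indexer's implementation: the `Segment` and `TranscriptLine` types, the `transcribe` callback, and the language codes are all hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Segment:
    """A span of audio with one detected language (hypothetical type)."""
    start: float   # seconds
    end: float     # seconds
    language: str  # for example "en-US"


@dataclass
class TranscriptLine:
    """One entry of the combined multilingual transcript (hypothetical type)."""
    start: float
    end: float
    language: str
    text: str


def merge_adjacent(segments: List[Segment]) -> List[Segment]:
    """Sort segments by start time and merge touching neighbours that share a language."""
    merged: List[Segment] = []
    for seg in sorted(segments, key=lambda s: s.start):
        last = merged[-1] if merged else None
        if last and last.language == seg.language and seg.start <= last.end:
            merged[-1] = Segment(last.start, max(last.end, seg.end), seg.language)
        else:
            merged.append(seg)
    return merged


def build_transcript(
    segments: List[Segment],
    transcribe: Callable[[Segment], str],
) -> List[TranscriptLine]:
    """Transcribe each language segment and combine the results in time order."""
    return [
        TranscriptLine(s.start, s.end, s.language, transcribe(s))
        for s in merge_adjacent(segments)
    ]
```

In a real system `transcribe` would call a speech-to-text model configured for `segment.language`; here it is left as a callback so the sketch stays self-contained.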

The resulting transcript is available as part of Video Indexer's JSON output and as subtitle files. The transcript is also integrated with Azure Search, so you can immediately search videos across the different language segments. In addition, the multilingual transcript is available in the Video Indexer portal, where you can view the transcript and the identified language over time, jump to the specific places in the video for each language, and see the multilingual transcript as captions while the video plays. You can also translate the resulting text into any of the 54 available languages through the portal and the API.

Read more about the new multilingual content recognition feature and how to use it in Video Indexer in the documentation.

Additional updated and improved models

We are also adding new models to the Video Indexer and improving existing ones, including those described below.

Extraction of people and location entities

We have expanded our existing brand detection capabilities to include well-known names and locations, such as the Eiffel Tower in Paris and Big Ben in London. When they appear in the generated transcript or on screen, where they are detected through optical character recognition (OCR), the corresponding information is added. With this new feature, you can search across all the people, places, and brands that appear in a video and view details about them, including time ranges, descriptions, and Bing search links for more information.

Editorial shot type detection model

This new feature adds a set of “tags” to the metadata attached to individual shots in the JSON insights to represent their editorial type (for example, wide shot, medium shot, close-up, extreme close-up, two-shot, multiple people, outdoor, indoor, and so on). These shot type characteristics are useful when editing video into clips and trailers, and when searching for a specific shot style for artistic purposes.
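As a rough illustration of how such tags could be used when looking for a particular shot style, the sketch below filters shots by the tags they carry. The dictionary layout, the `shots`, `id`, and `tags` field names, and the tag values are simplified assumptions for this example, not the documented Video Indexer schema.

```python
from typing import Dict, List

# Hypothetical, simplified stand-in for the shot metadata in the JSON output.
SAMPLE_INSIGHTS: Dict = {
    "shots": [
        {"id": 1, "tags": ["Wide", "Outdoor"]},
        {"id": 2, "tags": ["CloseUp", "Indoor"]},
        {"id": 3, "tags": ["CloseUp", "Outdoor"]},
    ]
}


def shots_with_tags(insights: Dict, required: List[str]) -> List[int]:
    """Return the ids of shots that carry every requested tag (case-insensitive)."""
    wanted = {tag.lower() for tag in required}
    return [
        shot["id"]
        for shot in insights.get("shots", [])
        if wanted <= {tag.lower() for tag in shot.get("tags", [])}
    ]
```

For example, `shots_with_tags(SAMPLE_INSIGHTS, ["CloseUp", "Outdoor"])` selects only the shots tagged with both values, which is the kind of query an editor assembling a trailer might run.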

Learn more about frame type detection in the Video Indexer.

Expanded granularity of IPTC mapping

Our topic detection model determines the topic of a video based on the transcript, optical character recognition (OCR), and detected celebrities, even when the topic is not stated explicitly. We map these detected topics to four taxonomies: Wikipedia, Bing, IPTC, and IAB. This enhancement allows us to include second-level IPTC classification.

Taking advantage of these enhancements is as easy as reindexing your current Video Indexer library.

New Live Streaming Functionality

Azure Media Services also offers two new live streaming features in preview.

Live transcription with AI takes live broadcasts to the next level

When you use Azure Media Services for live streaming, you can now get an output stream that includes an automatically generated text track in addition to the audio and video content. The text is produced by AI-based transcription of the live audio. Custom processing is applied before and after the speech-to-text conversion to improve results. The text track is packaged in IMSC1, TTML, or WebVTT, depending on whether it is delivered in DASH, HLS CMAF, or HLS TS.
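To make the idea of a text track more concrete, here is a minimal sketch that renders timed transcript cues as WebVTT, one of the three formats named above. It shows only the basic file layout (a `WEBVTT` header followed by timed cues) and is not how Azure Media Services packages its tracks; the function names and the cue tuples are assumptions made for this example.

```python
from typing import Iterable, Tuple


def format_timestamp(seconds: float) -> str:
    """Format a time offset in seconds as a WebVTT timestamp (hh:mm:ss.mmm)."""
    total_ms = round(seconds * 1000)
    hours, rem = divmod(total_ms, 3_600_000)
    minutes, rem = divmod(rem, 60_000)
    secs, millis = divmod(rem, 1000)
    return f"{hours:02d}:{minutes:02d}:{secs:02d}.{millis:03d}"


def to_webvtt(cues: Iterable[Tuple[float, float, str]]) -> str:
    """Render (start, end, text) cues as the text of a WebVTT file."""
    lines = ["WEBVTT", ""]
    for start, end, text in cues:
        lines.append(f"{format_timestamp(start)} --> {format_timestamp(end)}")
        lines.append(text)
        lines.append("")  # a blank line terminates each cue
    return "\n".join(lines)
```

Calling `to_webvtt([(0.0, 2.5, "Hello")])` yields a header line, a blank line, and a single cue running from `00:00:00.000` to `00:00:02.500`.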

Live linear encoding for 24/7 OTT channels

Using our v3 APIs, you can create, manage, and stream live OTT (over-the-top) channels, and use all other Azure Media Services features, such as live to video on demand (VOD), packaging, and digital rights management (DRM).

For a preview of these features, visit the Azure Media Services community page.

New packaging features

Support for audio description tracks

Broadcast content often carries an audio track with verbal descriptions of what is happening on screen, in addition to the normal audio. This makes programs more accessible to visually impaired viewers, especially when the content is highly visual. The new audio description feature lets you annotate one of the audio tracks as the audio description (AD) track, so that players can make the AD track available to viewers.

ID3 metadata insertion

Broadcasters often use timed metadata embedded in the video to signal ad insertion or custom metadata events to the client player. In addition to SCTE-35 signaling modes, we now also support ID3v2 and other custom schemes defined by the application developer for use by the client application.
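For readers unfamiliar with ID3v2, the sketch below shows how a client application might read the 10-byte header that starts every ID3v2 tag: the `ID3` marker, two version bytes, a flags byte, and a four-byte "synchsafe" size that uses only the lower seven bits of each byte. It follows the public ID3v2 tag layout; the function and type names are this example's own, and it says nothing about how Azure Media Services itself inserts or carries the tags.

```python
from typing import NamedTuple


class Id3Header(NamedTuple):
    major: int     # for example 4 for ID3v2.4
    revision: int
    flags: int
    size: int      # tag size in bytes, excluding the 10-byte header


def synchsafe_to_int(data: bytes) -> int:
    """Decode a synchsafe integer: 7 significant bits per byte, high bit clear."""
    value = 0
    for byte in data:
        if byte & 0x80:
            raise ValueError("synchsafe bytes must have the high bit clear")
        value = (value << 7) | byte
    return value


def parse_id3v2_header(data: bytes) -> Id3Header:
    """Parse the fixed 10-byte header at the start of an ID3v2 tag."""
    if len(data) < 10 or data[:3] != b"ID3":
        raise ValueError("not an ID3v2 tag")
    return Id3Header(
        major=data[3],
        revision=data[4],
        flags=data[5],
        size=synchsafe_to_int(data[6:10]),
    )
```

A player receiving a timed metadata payload could use the decoded `size` to know how many bytes of frames follow the header before handing them to application-specific logic.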

Microsoft Azure partners showcase end-to-end solutions

Bitmovin introduces Bitmovin Video Encoding and Bitmovin Video Player for Microsoft Azure. Customers can now use these encoding and playback solutions in Azure and take advantage of advanced features such as three-pass encoding, support for AV1 / VC codecs, multilingual subtitles, and pre-integrated video analytics for QoS, advertising, and video tracking.

Evergent showcases its User Lifecycle Management Platform on Azure. As a leading provider of revenue and customer lifecycle management solutions, Evergent leverages Azure AI to help premium entertainment providers improve customer engagement and retention by creating targeted service packages and offers at critical times in their lifecycle.

Haivision will showcase its intelligent cloud-based media routing service, SRT Hub, which helps customers transform end-to-end workflows using Azure Data Box Edge and Hublets from Avid, Telestream, Wowza, Cinegy, and Make.tv.

SES has developed an Azure-based, broadcast-grade package of media services for its satellite and managed media services customers. SES will showcase solutions for fully managed playout services, including master playout, localized playout, ad detection and replacement, and high-quality 24x7 real-time multi-channel encoding on Azure.

SyncWords makes its convenient cloud-based tools and caption automation technology available on Azure. These offerings will make it easier for media organizations to automatically add subtitles, including foreign-language subtitles, to their real-time and offline video processing workflows on Azure.

Tata Elxsi, an international technology services company, has integrated its OTT SaaS platform, TEPlay, with Azure Media Services to deliver OTT content from the cloud. Tata Elxsi has also migrated its Falcon Eye QoE solution, which provides analytics and metrics for decision making, to Microsoft Azure.

Verizon Media makes its streaming platform available on Azure as a beta. Verizon Media Platform is an enterprise-grade OTT solution that includes DRM, ad insertion, personalized sessions, dynamic content replacement, and video delivery. The integration simplifies workflows, global support, and scaling, and provides access to a number of unique features available in Azure.
