Intel Nervana NNP-T and NNP-I - specialized chips for AI

Recognizing the importance of artificial intelligence, Intel is taking another step in that direction. A month ago, at the Hot Chips 2019 conference, the company officially introduced two specialized chips designed for training and inference of neural networks: Intel Nervana NNP-T (Neural Network Processor for Training) and Intel Nervana NNP-I (for Inference). Below are the specifications and block diagrams of the new products.

Intel Nervana NNP-T (Spring Crest)

Neural network training time, along with energy efficiency, is one of the key parameters of an AI system and determines the scope of its application. The computing power used to train the largest models doubles every three months. At the same time, neural networks rely on a limited set of operations, mainly convolutions and matrix multiplication, which leaves a great deal of room for optimization. Ideally, a training device should be balanced in terms of power consumption, communication, compute, and scalability.
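Convolutions show why this narrow operation set matters. They can be lowered to a single matrix multiplication, so hardware built around matrix-multiply units covers both. The pure-Python sketch below uses the common im2col technique on a single-channel input with stride 1 and no padding. It illustrates the general idea only. The source does not say whether NNP-T software lowers convolutions this way, and all function names are hypothetical.

```python
# Sketch: single-channel 2D convolution (stride 1, no padding) lowered to a
# matrix multiplication with im2col. Pure Python, for illustration only.

def conv2d_direct(image, kernel):
    """Reference convolution (cross-correlation, as in most NN frameworks)."""
    kh, kw = len(kernel), len(kernel[0])
    oh, ow = len(image) - kh + 1, len(image[0]) - kw + 1
    return [[sum(image[i + a][j + b] * kernel[a][b]
                 for a in range(kh) for b in range(kw))
             for j in range(ow)]
            for i in range(oh)]


def im2col(image, kh, kw):
    """Flatten every kh x kw patch of the image into one matrix row."""
    oh, ow = len(image) - kh + 1, len(image[0]) - kw + 1
    return [[image[i + a][j + b] for a in range(kh) for b in range(kw)]
            for i in range(oh) for j in range(ow)]


def matmul(a, b):
    """Plain matrix product of a (n x k) and b (k x m)."""
    return [[sum(a[i][t] * b[t][j] for t in range(len(b)))
             for j in range(len(b[0]))]
            for i in range(len(a))]


def conv2d_as_matmul(image, kernel):
    kh, kw = len(kernel), len(kernel[0])
    oh, ow = len(image) - kh + 1, len(image[0]) - kw + 1
    patches = im2col(image, kh, kw)                  # (oh*ow) x (kh*kw)
    weights = [[w] for row in kernel for w in row]   # (kh*kw) x 1
    flat = matmul(patches, weights)                  # (oh*ow) x 1
    # Fold the single output column back into an oh x ow feature map.
    return [[flat[i * ow + j][0] for j in range(ow)] for i in range(oh)]


image = [[1, 2, 3, 0],
         [4, 5, 6, 1],
         [7, 8, 9, 2]]
kernel = [[1, 0],
          [0, -1]]
print(conv2d_as_matmul(image, kernel))
print(conv2d_direct(image, kernel))
```

With several input channels and output filters, the same lowering yields a patches-by-weights product with one column per filter. That is a dense matrix multiplication, the workload that matrix-multiply cores are built for.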

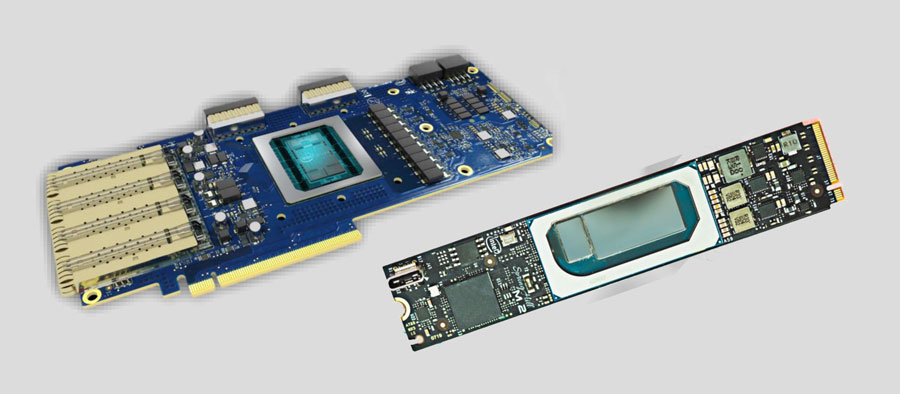

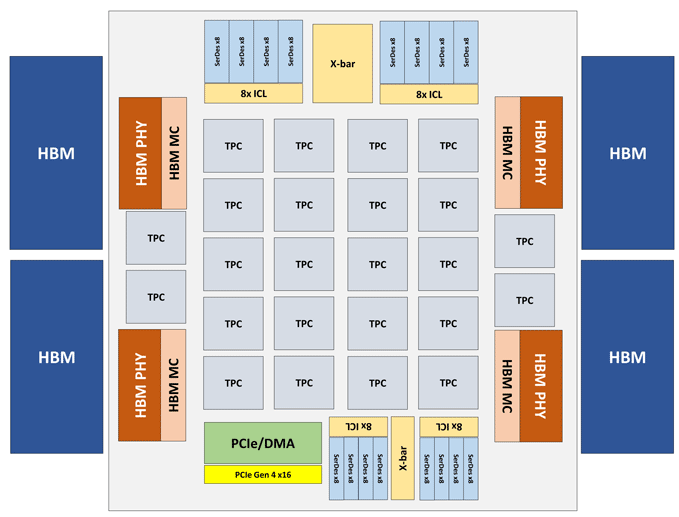

The Intel Nervana NNP-T is available as a PCIe 4.0 x16 card or as an OCP Accelerator Module (OAM). Its main compute elements are 24 Tensor Processing Clusters (TPCs), which together deliver up to 119 TOPS. A total of 32 GB of HBM2-2400 memory is connected through 4 HBM ports. The chip also integrates 64 SerDes (serializer/deserializer) lanes and SPI, I2C, and GPIO interfaces. It carries 60 MB of distributed on-chip memory (2.5 MB per TPC).
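The memory figures can be sanity-checked with simple arithmetic. The sketch below makes two assumptions that the source does not state. First, each of the 4 HBM ports drives one HBM2 stack with the standard 1024-bit interface. Second, "HBM2-2400" means 2400 MT/s per pin. Bandwidth is given in decimal GB/s.

```python
# Back-of-the-envelope NNP-T memory figures.
# Assumptions (not from the source): one HBM2 stack per HBM port, each with
# the standard 1024-bit interface, and "2400" meaning 2400 MT/s per pin.

HBM_STACKS = 4
BUS_WIDTH_BITS = 1024        # per HBM2 stack
TRANSFER_RATE_MTS = 2400     # mega-transfers per second per pin

# bits per transfer * transfers per second / 8 bits per byte -> MB/s -> GB/s
per_stack_gbs = BUS_WIDTH_BITS * TRANSFER_RATE_MTS / 8 / 1000
total_gbs = HBM_STACKS * per_stack_gbs

TPC_COUNT = 24
SRAM_PER_TPC_MB = 2.5
total_sram_mb = TPC_COUNT * SRAM_PER_TPC_MB

print(f"HBM2 bandwidth per stack: {per_stack_gbs:.1f} GB/s")
print(f"Total HBM2 bandwidth:     {total_gbs:.1f} GB/s")
print(f"Total on-chip SRAM:       {total_sram_mb:.0f} MB")
```

Under these assumptions the peak HBM2 bandwidth comes to about 1.23 TB/s. The 24 TPCs at 2.5 MB each account exactly for the 60 MB of on-chip memory.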

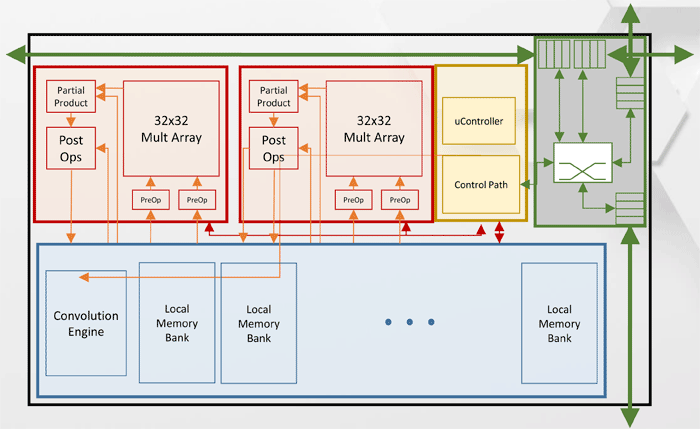

Tensor Processing Cluster (TPC) Architecture

Other Intel Nervana NNP-T specifications:

| Parameter | Value |
| --- | --- |
| Process technology | TSMC CLN16FF+ |
| Die area / interposer area | 680 mm², 1200 mm² |
| Number of transistors | 27 billion |
| SoC package size and type | 60 x 60 mm, 3325-pin BGA |
| Base frequency | 1.1 GHz |
| Typical power consumption | 150-250 W |

As the diagram shows, each TPC contains two 32x32 matrix multiplication cores with BFloat16 support. Other operations are performed in BFloat16 or FP32. Up to 8 cards can be installed in one host, and the system scales up to 1024 nodes.
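BFloat16 keeps FP32's sign bit and 8-bit exponent but only 7 explicit mantissa bits. It therefore has the same dynamic range as FP32 with lower precision, and converting to it amounts to keeping the upper 16 bits of an FP32 value. The sketch below uses round-to-nearest-even, a common choice. The source does not state which rounding mode NNP-T uses. NaN and infinity inputs are not handled, and the helper names are hypothetical.

```python
import struct


def fp32_bits(x):
    """Bit pattern of x after rounding it to IEEE 754 single precision."""
    return struct.unpack("<I", struct.pack("<f", x))[0]


def to_bfloat16_bits(x):
    """Round an FP32 value to bfloat16 (round to nearest, ties to even).

    Returns the 16-bit pattern. NaN and infinity inputs are not handled.
    """
    bits = fp32_bits(x)
    lsb = (bits >> 16) & 1           # lowest bit that bfloat16 keeps
    rounded = bits + 0x7FFF + lsb    # bias that implements ties-to-even
    return (rounded >> 16) & 0xFFFF


def bfloat16_to_float(b):
    """Widen a bfloat16 pattern back to FP32 by appending 16 zero bits."""
    return struct.unpack("<f", struct.pack("<I", b << 16))[0]


for x in (1.0, 3.14159265, 1 / 3, -2.0):
    b = to_bfloat16_bits(x)
    print(f"{x:.8f} -> 0x{b:04X} -> {bfloat16_to_float(b)}")
```

Because the exponent field is unchanged, a bfloat16 value can be widened back to FP32 losslessly. This is one reason mixed BFloat16/FP32 pipelines are cheap to build.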

Intel Nervana NNP-I (Spring Hill)

Intel Nervana NNP-I was designed to deliver maximum energy efficiency for inference at the scale of large data centers: about 5 TOPS/W.

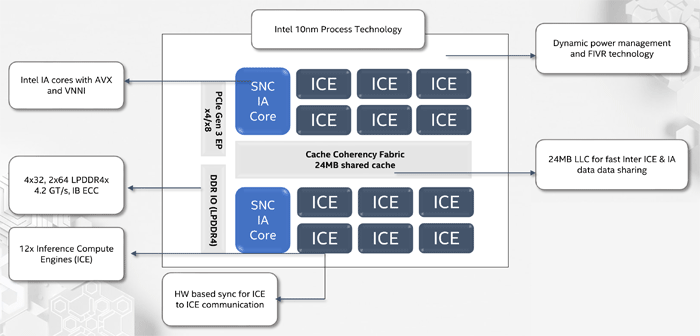

NNP-I is an SoC built on a 10 nm process. It combines two standard x86 cores with AVX and VNNI support and 12 specialized Inference Compute Engine (ICE) cores. Peak performance is 92 TOPS at a 50 W TDP, and the chip has 75 MB of on-chip memory. The device is built as an M.2 expansion card.
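VNNI (Vector Neural Network Instructions) fuses the multiply and accumulate steps of an INT8 dot product into a single instruction. The pure-Python sketch below emulates one 32-bit lane of the VNNI instruction VPDPBUSD. Four unsigned 8-bit values are multiplied by four signed 8-bit values, the products are summed, and the sum is added to a 32-bit accumulator with wrap-around. The sketch models the instruction's semantics, not NNP-I internals, and the helper names are hypothetical.

```python
def to_int32(x):
    """Wrap a Python int to a signed 32-bit value (two's complement)."""
    x &= 0xFFFFFFFF
    return x - (1 << 32) if x & 0x80000000 else x


def vpdpbusd_lane(acc, a_u8, b_s8):
    """Emulate one 32-bit lane of VPDPBUSD: acc + sum of four u8 * s8
    products, wrapped to int32 (the non-saturating form)."""
    if len(a_u8) != 4 or len(b_s8) != 4:
        raise ValueError("each lane takes exactly four bytes per operand")
    if not all(0 <= v <= 255 for v in a_u8):
        raise ValueError("first operand must be unsigned 8-bit")
    if not all(-128 <= v <= 127 for v in b_s8):
        raise ValueError("second operand must be signed 8-bit")
    return to_int32(acc + sum(a * b for a, b in zip(a_u8, b_s8)))


def dot_u8_s8(a_u8, b_s8, acc=0):
    """Hypothetical helper: INT8 dot product, four bytes per lane step.
    The vector length must be a multiple of 4."""
    for k in range(0, len(a_u8), 4):
        acc = vpdpbusd_lane(acc, a_u8[k:k + 4], b_s8[k:k + 4])
    return acc


activations = [10, 20, 30, 40, 1, 2, 3, 4]     # unsigned 8-bit
weights = [1, -1, 2, -2, 127, -128, 0, 5]      # signed 8-bit
print(dot_u8_s8(activations, weights))
```

A real AVX-512 register holds 16 such lanes, so one instruction performs 64 INT8 multiply-accumulates.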

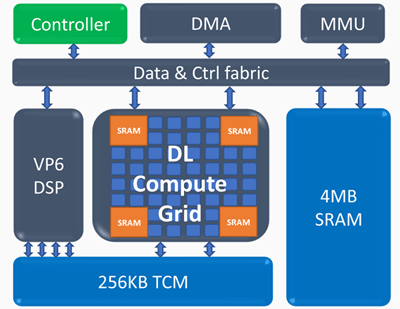

Inference Compute Engine (ICE) Architecture

Key elements of the Inference Compute Engine:

Deep Learning Compute Grid

- 4K INT8 MACs per cycle

- scalable support for FP16, INT8, INT4/2/1

- large amount of internal memory

- nonlinear operations and pooling

Programmable vector processor

- high performance: 5-way VLIW, 512-bit

- advanced NN support: FP16, 16-bit, and 8-bit
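Low-precision formats such as INT8, INT4, or INT2 are normally produced by quantizing higher-precision values with a scale factor. The sketch below shows symmetric per-tensor linear quantization, which is one common scheme. The source does not say which scheme NNP-I tooling uses. The function names are hypothetical, and the sketch accepts only 2 bits or more, because 1-bit networks usually rely on sign-based binarization instead.

```python
def quantize_symmetric(values, bits):
    """Symmetric per-tensor linear quantization to signed `bits`-bit codes.

    Codes lie in [-qmax, qmax] with qmax = 2**(bits - 1) - 1; the returned
    scale maps codes back to real values (value ~= code * scale).
    """
    if bits < 2:
        raise ValueError("use a sign-based binarization scheme for 1 bit")
    qmax = 2 ** (bits - 1) - 1
    max_abs = max(abs(v) for v in values)
    scale = max_abs / qmax if max_abs > 0 else 1.0
    codes = [max(-qmax, min(qmax, round(v / scale))) for v in values]
    return codes, scale


def dequantize(codes, scale):
    return [c * scale for c in codes]


weights = [0.9, -0.45, 0.1, -1.2, 0.6]
for bits in (8, 4, 2):
    codes, scale = quantize_symmetric(weights, bits)
    err = max(abs(w - r) for w, r in zip(weights, dequantize(codes, scale)))
    print(f"INT{bits}: codes={codes}, max abs error={err:.4f}")
```

Fewer bits mean a coarser scale and a larger reconstruction error. In exchange, the hardware can pack more values into each MAC operation and each byte of on-chip memory.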

The following results were obtained for Intel Nervana NNP-I: on ResNet-50, the chip achieved 3,600 inferences per second at 10 W, an energy efficiency of 360 images per second per watt.
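A watt is one joule per second, so "images per second per watt" is the same as images per joule. The arithmetic below uses only the figures quoted above and also converts the result into energy per inference.

```python
inferences_per_second = 3600
power_watts = 10

# (images / s) / (J / s) = images per joule
efficiency = inferences_per_second / power_watts
# joules per image, expressed in millijoules
energy_per_image_mj = power_watts / inferences_per_second * 1000

print(f"{efficiency:.0f} images/s/W, {energy_per_image_mj:.2f} mJ per image")
```

At the quoted operating point, each ResNet-50 inference therefore costs roughly 2.8 mJ.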
