Temperature Sampling

Recently I came across a question in the ODS chat: why does the letter-by-letter algorithm generate the text of a letter not from p

(the probability vector of the next letter predicted by the language model ), but from p'=softmax(log(p)/t)

( where t

is some other obscure positive scalar)?

A quick and incomprehensible answer: t

is "temperature", and it allows you to control the variety of generated texts. And for the sake of a long and detailed answer, in fact, this post was written.

Bit of math

First, softmax(x)=exp(x)/sum(exp(x))

remind you that softmax(x)=exp(x)/sum(exp(x))

(exp, log, and the division of vectors is componentwise). It turns out that if t=1

, then the logarithm and the exponent are mutually annihilated, and we get p'=p

. That is, with a unit temperature, this transformation does not change anything.

What happens if you make the temperature very high? Softmax gets (almost) zeros, and at the output we get (almost) the same numbers, about 1/n

, where n

is the dimension p

(the number of letters in the alphabet). That is, at very high temperatures, we hammer on what the language model predicted, and a sample of all letters is equally probable.

And if we set the temperature close to zero, then each component p'

will be a very-large-exponent divided by the sum of very-large-exponents. In the limit, that letter, which corresponded to the largest value of p

, will dominate all the others, and p'

will tend to unity for it and to zero for all other letters. That is, at very low temperatures, we always choose the most likely letter (even if its absolute probability is not so high - say, only 5%).

Main conclusion

That is, temperature sampling is a general view of different types of sampling, taking into account the predictions of the model to varying degrees. This is necessary to maneuver between model confidence and diversity. You can raise the temperature to generate more diverse texts, or lower it to generate texts in which the model is more confident on average. And, of course, this all applies not only to the generation of texts, but in general to any probabilistic models.

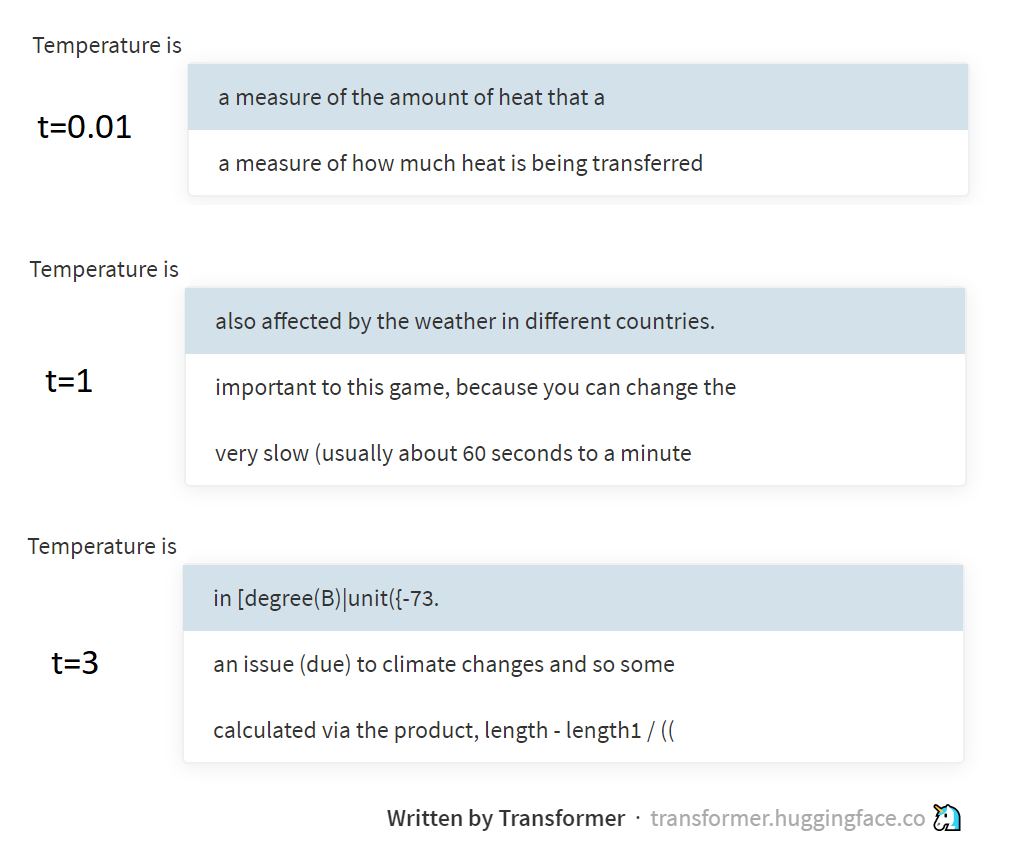

The picture above shows examples of sampling at different temperatures from the GPT-2 model through this wonderful page supported by the Hugging Face group. GPT-2 generates texts not by letters or words, but by BPE tokens (specially selected sequences of letters), but the essence of this does not really change.

A bit of physics

And what does the temperature have to do with it, you may ask. The answer is a reference to the Boltzmann distribution used in thermodynamics. This distribution describes the probability of the states in which the system is located (for example, several gas molecules locked in a bottle), depending on the temperature of the system and the level of potential energy corresponding to these states. In the transition from a high-energy state to a low-energy state, it is released (converted into heat), but on the contrary, it is spent. Therefore, the system will often end up in low-energy states (it’s easier to get into them, more difficult to leave, and impossible to forget ), but the higher the temperature, the more often the system will still jump into high-energy states too. Boltzmann, in fact, described these regularities by the formula, and got exactly the same p=softmax(-energy/t)

.

I already wrote in my essay on entropy ( 1 , 2 , 3 ), but did not really explain how statistical entropy is related to the concept of entropy in physics. But actually, through the Boltzmann distribution, they seem to be connected. But here I’m better off sending you off to read physics works, and I myself will go further with NLP. Low to you perplexions!

PS This text was written at a temperature of about 38 °, so do not be surprised if it is a little more random than you would expect ¯\_(ツ)_/¯

All Articles