Second Place History in Mini AI Cup 4: Paper IO

My name is Igor Volkov. I work in a consulting company in the positions of Java developer, architect, team leader, technical manager. Different roles depending on the current needs of the project. I drew attention to the contests from mail.ru for a long time, but it turned out to participate actively only at Paper IO.

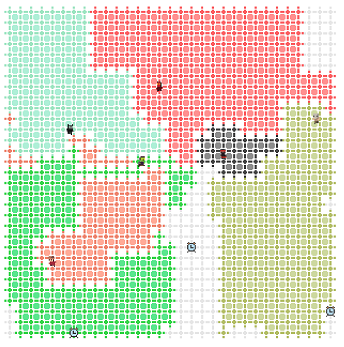

This time, the organizers proposed to implement a bot management strategy based on the popular game. Read more about the rules here . The code of my strategy can be found here , and examples of games on the championship website .

At the very beginning of the competition, as it seemed to me, the most common pop-up implementation idea was the use of MCTS . Therefore, I spent a little time experimenting with this algorithm. Having never figured out how to use it effectively to solve the problem, I decided to start by generating a lot of rectangular routes (with two, and then with three turns) and their subsequent evaluation.

Strategy Algorithm

The high-level algorithm of the strategy can be represented by the following 6 points:

- Read the state of the world

- Convert message objects to work objects

- Form a set of rectangular routes

- Rate each of the generated routes

- Choose the best route

- Send team

This algorithm has not changed during the competition. Only the method of forming bot routes and their assessment were modified.

The SimpleStrategy class contains the initial version of the strategy, and the BestStrategy class is an improved version, which took 2nd place in the competition.

Reading the state of the world

The state of the world is transmitted as a JSON object via STDIN . I saw in pom.xml that you can use the Gson library and the task of reading the state of the world has been greatly simplified. Using the Gson library , deserialized the JSON string read from the standard input stream into an instance of the Message class. The code is in Main.java (lines 44-49) .

Create work objects

Using transport objects in the main program code is usually not very convenient and architecturally wrong. For example, for some reason, the organizers may change the format of messages. Therefore, it is necessary to transform transport objects into workers, which will be used in the main program code. The Player and PlayerState classes preserve the state of the bot, and the utility class MessagePlayer2PlayerConverter helps to create these classes based on data from the transport message. The Bonus class contains information about the bonus of a cell in the playing field. The code for creating work objects is located in Main.java (lines 61-74) .

Route formation

In the first versions of the strategy ( SimpleStrategy ), the path was set using the MovePlanWithScore and Move classes. Move sets the direction of movement and how many cells the bot should move in this direction, and MovePlanWithScore contains the route specified by the Move array and an estimate of this route. An array could contain from one to four Move objects. Despite the fact that only rectangular routes with no more than three turns are considered, in fact the bot route is obtained in the form of a broken line. This is achieved by choosing a new best rectangular route on each turn. The route generation function , implemented as nested loops, forms a list from MovePlanWithScore for its further evaluation.

Such formation of the bot's trajectories was not very effective in terms of the performance of the subsequent assessment, since it was necessary to calculate the same trajectories several times, but it was very useful for understanding the mechanics of the game.

In later versions of the strategy, BestStrategy began to use the route tree. The MoveNode class reflects the node of this tree. The tree is fully formed at the start of the strategy. Pay attention to the init method of the MoveNode class . It is very similar to generating routes from the SimpleStrategy class. Fundamentally, the route in question is not much different from the first version.

The formation of routes, I think, could have been improved a bit more by adding another twist. But there was not enough time for optimization.

Route Rating

Wherever the bot was located, the best route ending on its territory was always chosen for him. To evaluate the route, I introduced two indicators: score and risk. Score - approximately reflects the number of points scored per tick of the path, and risk - the number of ticks that are not enough to complete the path (for example, due to the fact that the opponent can grab by the tail). Risk did not appear immediately. In the first version, if the bot suddenly found in the middle of the path that it did not have time to complete the route, it “went crazy,” since all the dangerous paths were equally bad for it. Of all the routes considered, the most “safe” one is selected with the maximum number of points per tick of the path.

To assess the safety of the route, I calculate the reachability matrix: for each cell of the playing field I find a tick on which an opponent's bot can appear in it. First, only a tick, and later added a tail length calculation. Bonuses that can be picked up along the way were also not taken into account in the first versions of the strategy. The TimeMatrixBuilder class calculates matrices of ticks and tail lengths of rival bots. These matrices are then used to assess risk. If my bot is on its territory at the time of calculating the next move - the maximum risk level is assigned to dangerous routes, if the bot was already on the way in a foreign or neutral territory, the risk is assessed as the difference between the ticks of the completion of the path (the bot came to its territory) and the tick when it can threaten danger (for example, someone else's bot can step on the tail).

In the first versions of the strategy, the score was considered only on the basis of the captured territory and the bonuses were slightly taken into account. I use a recursive algorithm to find the captured cells. Many contestants complained about the strangeness and excessive computational complexity of the algorithm used by the organizers at Local Runner . I suppose that this was done intentionally so as not to give the participants of the competition ready-made solutions.

Strange, but despite the primitiveness of the first versions of the strategy, it showed itself quite well: 10th place in the sandbox. True, in the prefinal round he began to quickly fall down: other participants improved their strategies.

My bot often died. First of all, due to the fact that a route was being built to the territory that was captured by rivals bots. The path unexpectedly lengthened and my bot was caught by the tail. Often died due to an incorrect prediction of the length of the tail and the speed of the opponent’s bot. For example, an opponent’s bot taking a slowdown was dangerous, since, in approximate calculation, the strategy assumed that he should have already left the cell, and he was still there. To combat these problems, I began to calculate a larger number of indicators for each cell of the playing field (classes AnalyticsBuilder and CellDetails ).

Counting cells of the playing field

- Tick at which the opponent’s bot can occupy the cage (tick grab at the tail)

- Tick at which the opponent’s bot can enter the cell

- Tail length at the entrance to the cage

- Tick at which the opponent’s bot can get out of the cage

- Tail length when leaving the cage

- Tick at which cell can be captured by the opponent's bot

- Tick at which the cell may be the target for capturing territory

- Tick on which the cell can be hit with a saw

The depth of analytics is limited to 10 moves. I think it was possible to achieve greater depth by refusing to count individual rivals or introduce floating depth, but there was not enough time for optimization. In addition to AnalyticsBuilder, he began to use SimpleTickMatrixBuilder if there was a lack of rendering depth for AnalyticsBuilder . Analytics results are used by BestStrategy .

The rating function has also improved slightly:

- I began to take into account bonuses: a penalty for taking a deceleration bonus and a bonus for taking acceleration and saw bonuses. As a result, the bot began to successfully avoid bad bonuses and picked up good ones along the way.

- He began to take into account the clash of heads. Added a few points for the victory clash. The farther a possible collision, the less points.

- To reduce the likelihood of the environment, he added a few points for taking the opponent's boundary cells.

- Reduced the value of empty cells at the border: the farther from the center, the lower the value. Watching the fights of the finals, I came to the conclusion that for the very fact of capturing an empty cell, there was no need to accrue points at all. The value of an empty cell should depend on the proximity to large clusters of enemy cells. Unfortunately, in the final it was no longer possible to edit the strategy.

- Added points for surrounding the opponent’s bot head. Not sure if this somehow helped. Maybe with the simplest strategies.

- He added points even for a futile grab by the tail (the opponent’s bot managed to capture the territory at the same tick in which my bot stepped on his tail). I’m definitely not sure, but I think that this prevented the opponent’s bots from capturing someone else’s territory and they often had to return to their own.

- In case of detection of possible death from capture greatly increased the cost of the boundary cells of the opponent’s territory.

Strategy debugging

The first versions of the strategy contained a large number of typos and errors: apparently, the result of nightly programming. For example, in the Cell class, the index was considered incorrectly: instead of this.index = x * Game.sizeY + y

, this.index = x * Game.width + y

. At first I tried to rely only on tests, but my intuition suggested that without visualization and without playing previously played matches it would be difficult to find errors in the code and analyze the reasons for making erroneous decisions. As a result, the DebugWindow visualizer appeared , in which you can view previously played games step by step, as well as start debugging on the desired tick. Not very nice, whipped up code, but it helped me a lot when debugging. For example, an error was immediately detected with an incorrect calculation of the cell index. Many contestants displayed debugging information in the console, but it seemed to me not enough.

Optimization

In order not to waste time creating objects and running the GC, I tried to create some objects in advance. These are the cells of the playing field ( Cell class). Additionally for each cell identified neighbors. Created a tree of possible paths in advance (class MoveNode ).

I assumed that many scenarios would have to be simulated, and in the process the current state would deteriorate and it would have to be restored every time. Therefore, to preserve the state of the world, I tried to use as much as possible packed structures. To store the occupied territory - BitSet (class PlayerTerritory ). Each cell of the playing field is numbered, and the cell number corresponds to the bit number in BitSet. To store the tail, I used BitSet together with ArrayDeque (class PlayerTail ).

True, I did not get to playing various scenarios due to lack of time. And since the main function of calculating the path became recursive and the whole state could be stored on the stack, the latest optimizations were not very useful to me.

Unrealized ideas

When assessing the risk of the route of my bot, I took into account each opponent independently. In fact, each of the rivals is also afraid to die. Therefore, it would be worth considering this in a risk assessment. At least, this definitely had to be taken into account in the final games.

Accounting for the future death of an opponent. Sometimes it happened that the bot captures the territory of the opponent, and the opponent unexpectedly dies. It's a shame, because as a result, you capture only empty cells.

Accounting for empty cells that will be captured in the near future as an evaluation function.

Recommendations and thanks

I recommend that all developers actively participate in AI Cups contests. This develops thinking and helps through the game to learn new algorithms. And as my experience has shown, a little zeal is enough to occupy a prize place and even a simple and not very optimal code can bring results.

Many thanks to the organizers. Despite some technical problems, the competition turned out to be interesting. I look forward to the next!

All Articles