Do not fall into the trap of premature optimization

Donald Knuth once said the words that later became famous: “The real problem is that programmers, not where they need to, and not when they need to, spend too much time caring for efficiency. Premature optimization is the root of all evils (or at least most of them) in programming. ”

The author of the material, the translation of which we are publishing today, wants to talk about how he once fell into the trap of premature optimization, and how he understood from his own bitter experience that premature optimization is the root of all evils.

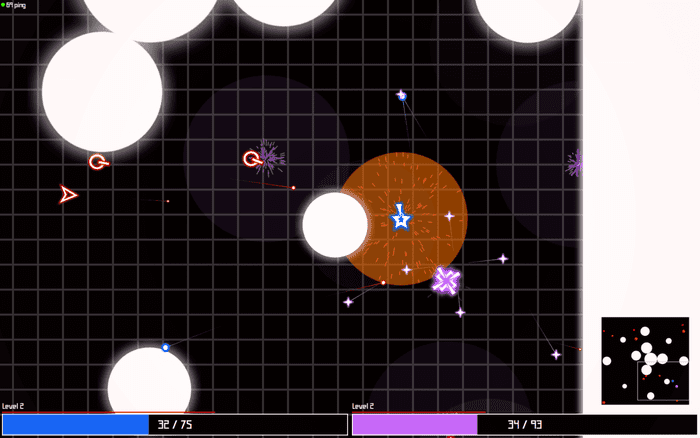

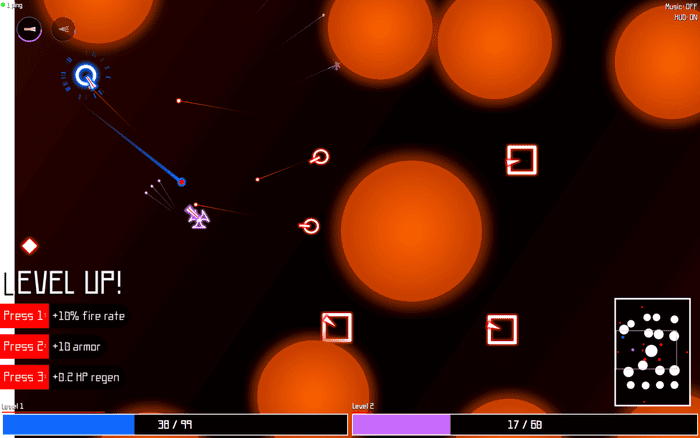

A few years ago I worked on the web game GeoArena Online (then I sold it , the new owners posted it on geoarena.io ). It was a multiplayer game in the style of "the last survivor." There, the player controlled the ship, fighting one on one against another player.

Game GeoArena Online

Game GeoArena Online

A dynamic game, the world of which is full of particles and effects, requires serious computing resources. As a result, the game on some old computers "slowed down" in particularly tense moments. I, a man who is not indifferent to productivity issues, took up with interest the solution to this problem. “How do I speed up the GeoArena client-side JavaScript part,” I asked myself.

After searching a bit on the Internet, I discovered the fast.js library. It was a "collection of micro-optimizations aimed at simplifying the development of very fast JavaScript programs." This library was accelerated by the availability of faster implementations of the built-in standard methods like Array.prototype.forEach () .

I found this extremely interesting. GeoArena used many arrays, performed many operations with arrays, so using fast.js could very well help me in speeding up the game. The following results of

performance research have been included in README for fast.js.

How can a method implemented in some external library be faster than its standard version? The thing is that there was one trick (they, these tricks, are found everywhere you look). The library was only suitable for working with arrays that were not sparse.

Here are a couple of simple examples of such arrays:

In order to understand why the library cannot work normally with sparse arrays, I looked into its source code. It turned out that the

implementation in fast.js is based on for loops. A quick implementation of the

method would look something like this:

A call to the

method

three values:

Calling

only leads to the conclusion of two values:

This difference appears due to the fact that JS specifications regarding the use of callback functions indicate that such functions should not be called on remote or uninitialized array indices (they are also called “holes”). The implementation of

did not check the array for holes. This led to an increase in speed at the cost of correct work with sparse arrays. This was perfect for me, as sparse arrays were not used in GeoArena.

At this point, I should just have a quick test on fast.js. I should install the library, change the standard methods of the

object to methods from fast.js and test the performance of the game. But instead, I moved in a completely different direction.

The manic perfectionist living in me wanted to squeeze absolutely everything out of optimizing the performance of the game. The fast.js library simply did not seem to me to be a good enough solution, since its use implied calling its methods. Then I thought: “What if I replace the standard methods of arrays by simply embedding new, faster implementations of these methods in the code? That would save me the need for library method calls. ”

It was this idea that led me to the ingenious idea, which was to create a compiler, which I brazenly called faster.js . I planned to use it instead of fast.js. For example, here is the source code snippet:

The faster.js compiler would convert this code to the following - faster, but looking worse:

The creation of faster.js was prompted by the same idea that underpinned fast.js. Namely, we are talking about micro-optimizations of performance due to the rejection of support for sparse arrays.

At first glance, faster.js seemed to me an extremely successful development. Here are some results from a faster.js performance study:

Full test results can be found here . They were held in Node v8.16.1, on the 15-inch MacBook Pro 2018.

Is my development 2000% faster than standard implementation? Such a serious increase in productivity is, without a doubt, something that can have the strongest positive impact on any program. Right?

No, not true.

Consider a simple example.

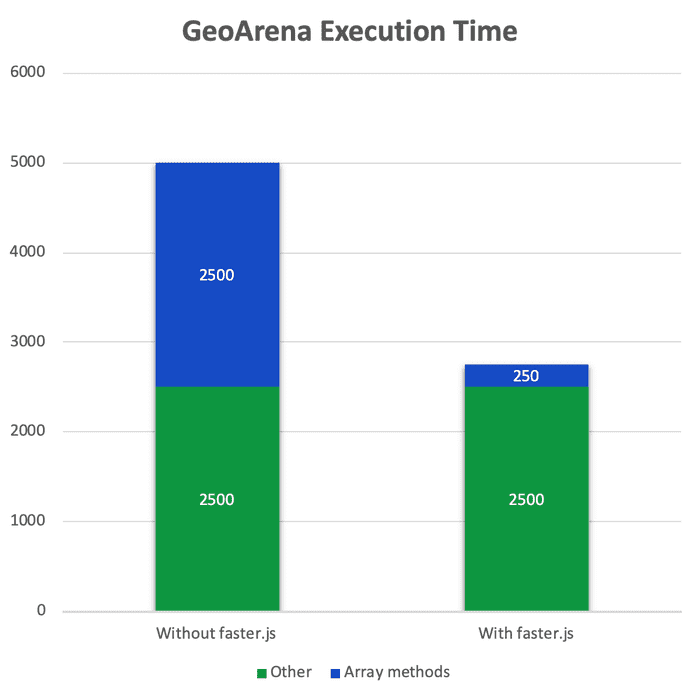

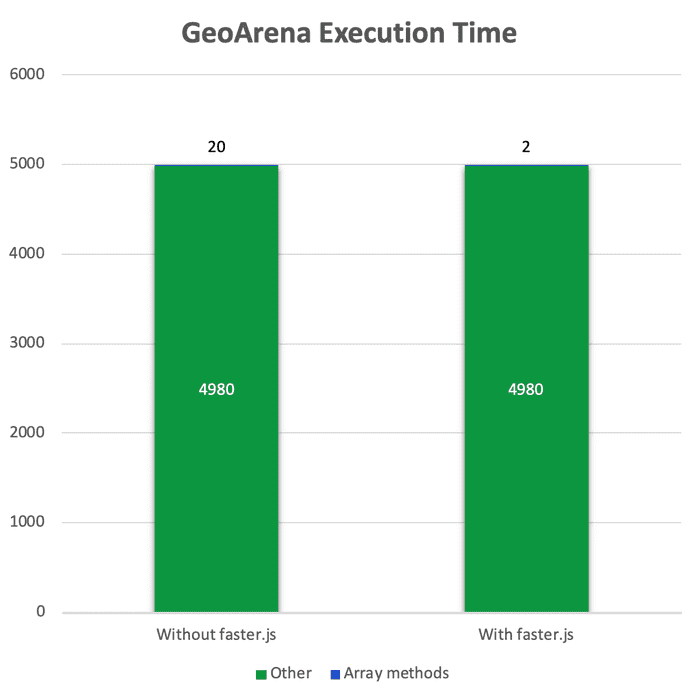

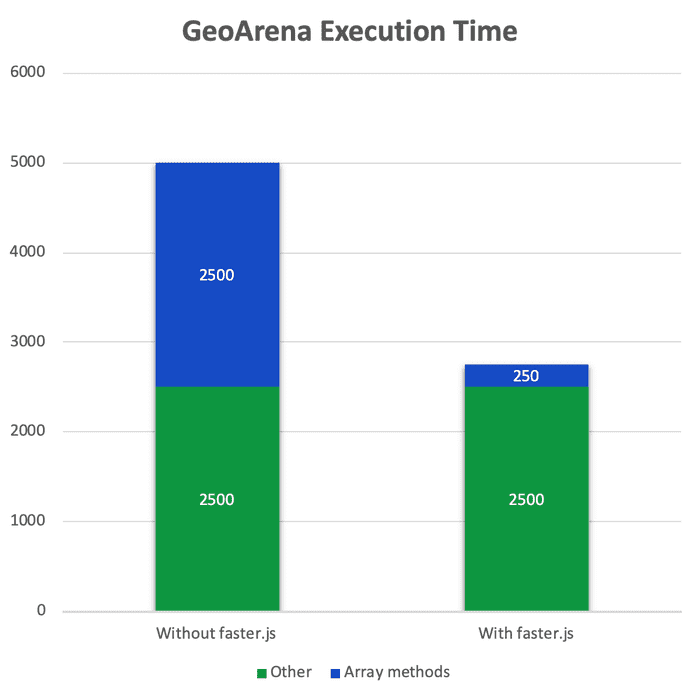

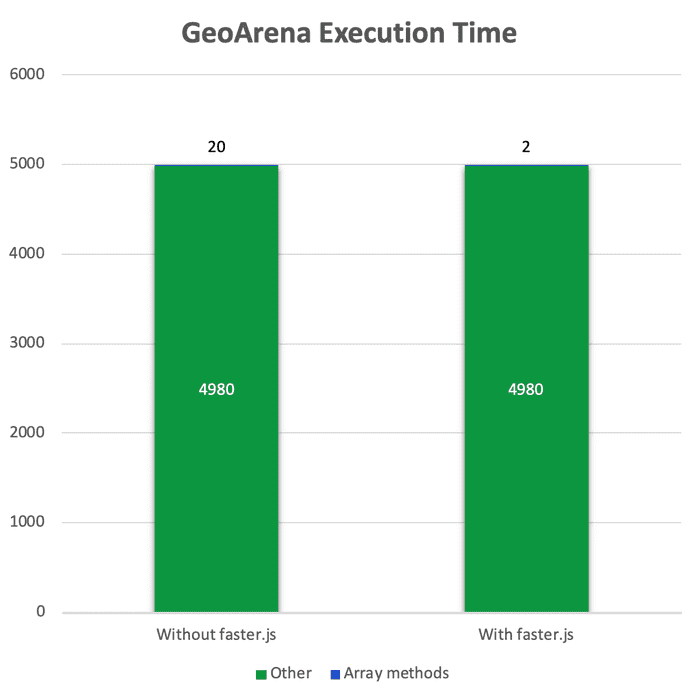

And here is the question that really interests us: “What part of these 5000 ms is spent on the implementation of array methods?”.

Suppose half. That is - 2500 ms is spent on array methods, the remaining 2500 ms - on everything else. If so, then using faster.js will give a huge performance boost.

Conditional example: the execution time of the program is very much reduced

As a result, it turns out that the total computational time has been reduced by 45%.

Unfortunately, all these arguments are very, very far from reality. GeoArena, of course, uses many array methods. But the real distribution of code execution time for different tasks looks something like the following.

Harsh reality

Sadly, what can I say.

This is exactly the mistake Donald Knuth warned about. I didn’t put my efforts into what they should be applied to, and I did not do it when it was worth doing.

Here simple mathematics comes into play. If something takes only 1% of the program’s execution time, then optimization of this will, in the best case, yield only a 1% increase in productivity.

This is exactly what Donald Knuth had in mind when he said "not where it is needed." And if you think about what “where you need it”, it turns out that these are the parts of programs that represent performance bottlenecks. These are the pieces of code that make a significant contribution to the overall performance of the program. Here the concept of "productivity" is used in a very broad sense. It may include the runtime of the program, the size of its compiled code, and something else. A 10% improvement in that part of the program that greatly affects performance is better than a 100% improvement in some little thing.

Knut also spoke of the application of efforts "not when necessary." The point of this is that you need to optimize something only when it is necessary. Of course, I had a good reason to think about optimization. But remember that I started developing faster.js and before that I didn’t even try to test the fast.js library in GeoArena? The minutes spent testing fast.js in my game would save me weeks of work. I hope you don’t fall into the same trap that I fell into.

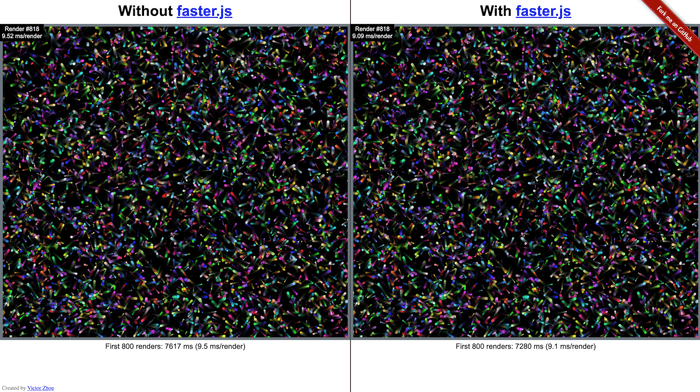

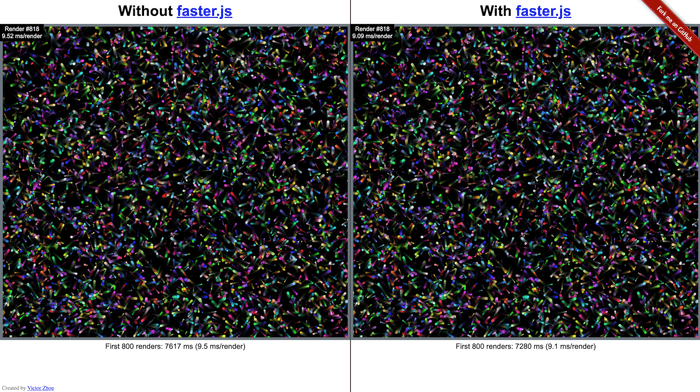

If you are interested in experimenting with faster.js, you can take a look at this demo. What results you get depends on your device and browser. Here, for example, what happened in my Chrome 76 on the 15-inch MacBook Pro 2018.

Faster.js test results

You might be interested in learning about the actual results of using faster.js in GeoArena. I, when the game was still mine (as I said, I sold it), conducted some basic research. As a result, it turned out the following:

In general, faster.js has its pros and cons, but this development of mine did not have much impact on the performance of GeoArena. I would have understood this much earlier if I had bothered to first test the game using fast.js.

May my story serve as a warning to you.

Dear readers! Did you fall into the trap of premature optimization?

The author of the material, the translation of which we are publishing today, wants to talk about how he once fell into the trap of premature optimization, and how he understood from his own bitter experience that premature optimization is the root of all evils.

Game GeoArena Online

A few years ago I worked on the web game GeoArena Online (then I sold it , the new owners posted it on geoarena.io ). It was a multiplayer game in the style of "the last survivor." There, the player controlled the ship, fighting one on one against another player.

Game GeoArena Online

Game GeoArena Online

A dynamic game, the world of which is full of particles and effects, requires serious computing resources. As a result, the game on some old computers "slowed down" in particularly tense moments. I, a man who is not indifferent to productivity issues, took up with interest the solution to this problem. “How do I speed up the GeoArena client-side JavaScript part,” I asked myself.

Fast.js library

After searching a bit on the Internet, I discovered the fast.js library. It was a "collection of micro-optimizations aimed at simplifying the development of very fast JavaScript programs." This library was accelerated by the availability of faster implementations of the built-in standard methods like Array.prototype.forEach () .

I found this extremely interesting. GeoArena used many arrays, performed many operations with arrays, so using fast.js could very well help me in speeding up the game. The following results of

forEach()

performance research have been included in README for fast.js.

Native .forEach() vs fast.forEach() (10 items) ✓ Array::forEach() x 8,557,082 ops/sec ±0.37% (97 runs sampled) ✓ fast.forEach() x 8,799,272 ops/sec ±0.41% (97 runs sampled) Result: fast.js is 2.83% faster than Array::forEach().

How can a method implemented in some external library be faster than its standard version? The thing is that there was one trick (they, these tricks, are found everywhere you look). The library was only suitable for working with arrays that were not sparse.

Here are a couple of simple examples of such arrays:

// - : 1 . const sparse1 = [0, , 1]; console.log(sparse1.length); // 3 // - const sparse2 = []; // ... - . 0 - 4 . sparse2[5] = 0; console.log(sparse2.length); // 6

In order to understand why the library cannot work normally with sparse arrays, I looked into its source code. It turned out that the

forEach()

implementation in fast.js is based on for loops. A quick implementation of the

forEach()

method would look something like this:

// . function fastForEach(array, f) { for (let i = 0; i < array.length; i++) { f(array[i], i, array); } } const sparseArray = [1, , 2]; const print = x => console.log(x); fastForEach(sparseArray, print); // print() 3 . sparseArray.forEach(print); // print() 2 .

A call to the

fastForEach()

method

fastForEach()

three values:

1 undefined 2

Calling

sparseArray.forEach()

only leads to the conclusion of two values:

1 2

This difference appears due to the fact that JS specifications regarding the use of callback functions indicate that such functions should not be called on remote or uninitialized array indices (they are also called “holes”). The implementation of

fastForEach()

did not check the array for holes. This led to an increase in speed at the cost of correct work with sparse arrays. This was perfect for me, as sparse arrays were not used in GeoArena.

At this point, I should just have a quick test on fast.js. I should install the library, change the standard methods of the

Array

object to methods from fast.js and test the performance of the game. But instead, I moved in a completely different direction.

My development called faster.js

The manic perfectionist living in me wanted to squeeze absolutely everything out of optimizing the performance of the game. The fast.js library simply did not seem to me to be a good enough solution, since its use implied calling its methods. Then I thought: “What if I replace the standard methods of arrays by simply embedding new, faster implementations of these methods in the code? That would save me the need for library method calls. ”

It was this idea that led me to the ingenious idea, which was to create a compiler, which I brazenly called faster.js . I planned to use it instead of fast.js. For example, here is the source code snippet:

// const arr = [1, 2, 3]; const results = arr.map(e => 2 * e);

The faster.js compiler would convert this code to the following - faster, but looking worse:

// faster.js const arr = [1, 2, 3]; const results = new Array(arr.length); const _f = (e => 2 * e); for (let _i = 0; _i < arr.length; _i++) { results[_i] = _f(arr[_i], _i, arr); }

The creation of faster.js was prompted by the same idea that underpinned fast.js. Namely, we are talking about micro-optimizations of performance due to the rejection of support for sparse arrays.

At first glance, faster.js seemed to me an extremely successful development. Here are some results from a faster.js performance study:

array-filter large ✓ native x 232,063 ops/sec ±0.36% (58 runs sampled) ✓ faster.js x 1,083,695 ops/sec ±0.58% (57 runs sampled) faster.js is 367.0% faster (3.386μs) than native array-map large ✓ native x 223,896 ops/sec ±1.10% (58 runs sampled) ✓ faster.js x 1,726,376 ops/sec ±1.13% (60 runs sampled) faster.js is 671.1% faster (3.887μs) than native array-reduce large ✓ native x 268,919 ops/sec ±0.41% (57 runs sampled) ✓ faster.js x 1,621,540 ops/sec ±0.80% (57 runs sampled) faster.js is 503.0% faster (3.102μs) than native array-reduceRight large ✓ native x 68,671 ops/sec ±0.92% (53 runs sampled) ✓ faster.js x 1,571,918 ops/sec ±1.16% (57 runs sampled) faster.js is 2189.1% faster (13.926μs) than native

Full test results can be found here . They were held in Node v8.16.1, on the 15-inch MacBook Pro 2018.

Is my development 2000% faster than standard implementation? Such a serious increase in productivity is, without a doubt, something that can have the strongest positive impact on any program. Right?

No, not true.

Consider a simple example.

- Imagine that the average GeoArena game requires 5,000 milliseconds (ms) of computation.

- The faster.js compiler speeds up the execution of array methods by an average of 10 times (this is an approximate estimate, moreover, it is overestimated; in most real applications there is not even double acceleration).

And here is the question that really interests us: “What part of these 5000 ms is spent on the implementation of array methods?”.

Suppose half. That is - 2500 ms is spent on array methods, the remaining 2500 ms - on everything else. If so, then using faster.js will give a huge performance boost.

Conditional example: the execution time of the program is very much reduced

As a result, it turns out that the total computational time has been reduced by 45%.

Unfortunately, all these arguments are very, very far from reality. GeoArena, of course, uses many array methods. But the real distribution of code execution time for different tasks looks something like the following.

Harsh reality

Sadly, what can I say.

This is exactly the mistake Donald Knuth warned about. I didn’t put my efforts into what they should be applied to, and I did not do it when it was worth doing.

Here simple mathematics comes into play. If something takes only 1% of the program’s execution time, then optimization of this will, in the best case, yield only a 1% increase in productivity.

This is exactly what Donald Knuth had in mind when he said "not where it is needed." And if you think about what “where you need it”, it turns out that these are the parts of programs that represent performance bottlenecks. These are the pieces of code that make a significant contribution to the overall performance of the program. Here the concept of "productivity" is used in a very broad sense. It may include the runtime of the program, the size of its compiled code, and something else. A 10% improvement in that part of the program that greatly affects performance is better than a 100% improvement in some little thing.

Knut also spoke of the application of efforts "not when necessary." The point of this is that you need to optimize something only when it is necessary. Of course, I had a good reason to think about optimization. But remember that I started developing faster.js and before that I didn’t even try to test the fast.js library in GeoArena? The minutes spent testing fast.js in my game would save me weeks of work. I hope you don’t fall into the same trap that I fell into.

Summary

If you are interested in experimenting with faster.js, you can take a look at this demo. What results you get depends on your device and browser. Here, for example, what happened in my Chrome 76 on the 15-inch MacBook Pro 2018.

Faster.js test results

You might be interested in learning about the actual results of using faster.js in GeoArena. I, when the game was still mine (as I said, I sold it), conducted some basic research. As a result, it turned out the following:

- Using faster.js speeds up the execution of the main game cycle in a typical game by about 1%.

- Due to the use of faster.js, the size of the game’s bundle grew by 0.3%. This slowed down the loading of the game page a bit. The size of the bundle has grown due to the fact that faster.js converts the standard short code into faster, but also longer code.

In general, faster.js has its pros and cons, but this development of mine did not have much impact on the performance of GeoArena. I would have understood this much earlier if I had bothered to first test the game using fast.js.

May my story serve as a warning to you.

Dear readers! Did you fall into the trap of premature optimization?

All Articles