Kafka and microservices: an overview

Hello. In this article I will tell you why we chose Kafka nine months ago in Avito, and what it is. I will share one of the use cases - a message broker. And finally, let's talk about what advantages we got from applying the Kafka as a Service approach.

Problem

First, a little context. Some time ago, we began to move away from monolithic architecture, and now in Avito there are already several hundred different services. They have their own repositories, their own technology stack and are responsible for their part of the business logic.

One of the problems with a large number of services is communication. Service A often wants to know the information that service B has. In this case, service A accesses service B through a synchronous API. Service B wants to know what happens with services G and D, and those, in turn, are interested in services A and B. When there are many such “curious” services, the connections between them turn into a tangled ball.

Moreover, at any time, service A may become unavailable. And what to do in this case, service B and all other services tied to it? And if you need to make a chain of consecutive synchronous calls to complete a business operation, the probability of failure of the entire operation becomes even higher (and it is the higher, the longer this chain).

Technology selection

OK, the problems are clear. You can eliminate them by making a centralized messaging system between services. Now each of the services is enough to know only about this messaging system. In addition, the system itself must be fault tolerant and horizontally scalable, as well as in case of accidents, accumulate a call buffer for their subsequent processing.

Let's now choose the technology on which message delivery will be implemented. To do this, first understand what we expect from her:

- Messages between services should not be lost;

- Messages may be duplicated

- messages can be stored and read to a depth of several days (persistent buffer);

- services can subscribe to data of interest to them;

- several services can read the same data;

- Messages may contain detailed, bulk payload (event-carried state transfer);

- sometimes you need a message order guarantee.

It was also crucial for us to choose the most scalable and reliable system with high throughput (at least 100k messages at a few kilobytes per second).

At this stage, we said goodbye to RabbitMQ (it’s hard to keep stable at high rps), SkyTools PGQ (not fast enough and poorly scalable) and NSQ (not persistent). All of these technologies are used in our company, but they did not fit the task at hand.

Next, we began to look at new technologies for us - Apache Kafka, Apache Pulsar and NATS Streaming.

The first to drop Pulsar. We decided that Kafka and Pulsar are fairly similar solutions. And despite the fact that Pulsar is tested by large companies, it is newer and offers lower latency (in theory), we decided to leave Kafka out of the two, as the de facto standard for such tasks. We will probably return to Apache Pulsar in the future.

And there were two candidates left: NATS Streaming and Apache Kafka. We studied both solutions in some detail, and both of them came up to the task. But in the end, we were afraid of the relative youth of NATS Streaming (and the fact that one of the main developers, Tyler Treat, decided to leave the project and start its own - Liftbridge). At the same time, the Clustering mode of NATS Streaming did not allow for strong horizontal scaling (this is probably no longer a problem after adding partitioning mode in 2017).

However, NATS Streaming is a cool technology written in Go and supported by the Cloud Native Computing Foundation. Unlike Apache Kafka, it doesn’t need Zookeeper to work (it may be possible to say the same about Kafka soon ), since inside it implements RAFT. At the same time, NATS Streaming is easier to administer. We do not exclude that in the future we will return to this technology.

Nevertheless, Apache Kafka has become our winner today. In our tests, it proved to be quite fast (more than a million messages per second for reading and writing with a message volume of 1 kilobyte), reliable enough, well-scalable and proven experience in selling by large companies. In addition, Kafka supports at least several large commercial companies (for example, we use the Confluent version), and Kafka has a developed ecosystem.

Review Kafka

Before starting, I immediately recommend an excellent book - “Kafka: The Definitive Guide” (it is also in the Russian translation, but the terms break the brain a little). In it you can find the information necessary for a basic understanding of Kafka and even a little more. The Apache documentation itself and the Confluent blog are also well written and easy to read.

So, let's take a look at how Kafka is a bird's eye view. The basic Kafka topology consists of producer, consumer, broker and zookeeper.

Broker

A broker is responsible for storing your data. All data is stored in binary form, and the broker knows little about what they are and what their structure is.

Each logical type of event is usually located in its own separate topic (topic). For example, an ad creation event may fall into the item.created topic, and a change event may fall into item.changed. Topics can be considered as classifiers of events. At the topic level, you can set such configuration parameters as:

- volume of stored data and / or their age (retention.bytes, retention.ms);

- data redundancy factor (replication factor);

- maximum size of one message (max.message.bytes);

- the minimum number of consistent replicas at which data can be written to the topic (min.insync.replicas);

- the ability to failover to a non-synchronous lagging replica with potential data loss (unclean.leader.election.enable);

- and many more ( https://kafka.apache.org/documentation/#topicconfigs ).

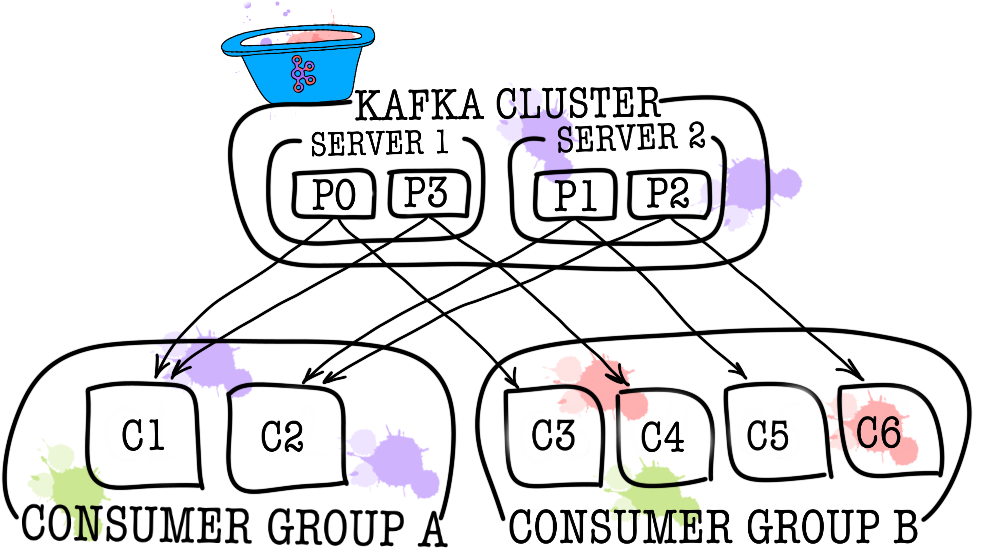

In turn, each topic is divided into one or more partitions (partition). It is in the partition that events ultimately fall. If there is more than one broker in the cluster, then partitions will be distributed equally among all brokers (as far as possible), which will allow you to scale the load on writing and reading in one topic to several brokers at once.

On disk, data for each partition is stored in the form of segment files, by default equal to one gigabyte (controlled via log.segment.bytes). An important feature is that data is deleted from partitions (when retention is triggered) just by segments (you cannot delete one event from a partition, you can delete only the whole segment, and only inactive).

Zookeeper

Zookeeper acts as a metadata repository and coordinator. It is he who is able to say whether brokers are alive (you can look at it through the eyes of a zookeeper through the zookeeper-shell command ls /brokers/ids

), which of the brokers is the controller ( get /controller

), whether the partitions are in synchronous state with their replicas ( get /brokers/topics/topic_name/partitions/partition_number/state

). Also, producer and consumer will go to zookeeper first to find out which topic and partition are stored on which broker. In cases when a replication factor greater than 1 is specified for the topic, the zookeeper will indicate which partitions are leaders (they will be written to and read from). In the event of a broker crash, it is in the zookeeper that information about new leader partitions will be written (as of version 1.1.0 asynchronously, and this is important ).

In older versions of Kafka, zookeeper was also responsible for storing offsets, but now they are stored in a special topic __consumer_offsets

on the broker (although you can still use zookeeper for these purposes).

The easiest way to turn your data into a pumpkin is just the loss of information with zookeeper. In such a scenario, it will be very difficult to understand what and where to read from.

Producer

Producer is most often a service that directly writes data to Apache Kafka. Producer selects a topic, in which his thematic messages will be stored, and begins to write information to it. For example, a producer could be an ad service. In this case, he will send events such as “ad created”, “ad updated”, “ad deleted”, etc. to thematic topics. Each event is a key-value pair.

By default, all events are distributed among the partitions of the topic round-robin if the key is not specified (losing order), and through MurmurHash (key) if the key is present (ordering within the same partition).

It is immediately worth noting here that Kafka guarantees the order of events within only one partition. But in fact, often this is not a problem. For example, you can guaranteedly add all changes of the same announcement to one partition (thereby preserving the order of these changes within the announcement). You can also pass a sequence number in one of the event fields.

Consumer

Consumer is responsible for retrieving data from Apache Kafka. If you go back to the example above, the consumer can be a moderation service. This service will be subscribed to the topic of the announcement service, and when a new advertisement appears, it will receive it and analyze it for compliance with some specified policies.

Apache Kafka remembers what recent events the consumer received (the __consumer__offsets

service topic is used for this), thereby guaranteeing that upon successful reading, the consumer will not receive the same message twice. Nevertheless, if you use the option enable.auto.commit = true and completely give the job of tracking the consumer’s position in the topic to Kafka, you can lose data . In production code, the position of the consumer is most often controlled manually (the developer controls the moment when the commit of the read event must occur).

In cases where one consumer is not enough (for example, the flow of new events is very large), you can add a few more consumers by linking them together in the consumer group. Consumer group logically is exactly the same consumer, but with the distribution of data among the group members. This allows each participant to take their share of messages, thereby scaling the reading speed.

Test results

Here I will not write a lot of explanatory text, just share the results. Testing was carried out on 3 physical machines (12 CPU, 384GB RAM, 15k SAS DISK, 10GBit / s Net), brokers and zookeeper were deployed in lxc.

Performance testing

During testing, the following results were obtained.

- The speed of recording messages in 1KB size at the same time by 9 producers - 1300000 events per second.

- Reading speed of 1KB messages at the same time by 9 consumers - 1,500,000 events per second.

Fault tolerance testing

During testing, the following results were obtained (3 brokers, 3 zookeeper).

- The abnormal termination of one of the brokers does not lead to the suspension or inaccessibility of the cluster. The work continues as usual, but the remaining brokers have a big load.

- The abnormal termination of two brokers in the case of a cluster of three brokers and min.isr = 2 leads to the inaccessibility of the cluster for writing, but for readability. In case min.isr = 1, the cluster continues to be available for reading and writing. However, this mode contradicts the requirement for high data security.

- Abnormal termination of one of the Zookeeper servers does not lead to a cluster shutdown or inaccessibility. Work continues as normal.

- An abnormal termination of two Zookeeper servers leads to a cluster inaccessibility until at least one of the Zookeeper servers is restored. This statement is true for a Zookeeper cluster of 3 servers. As a result, after research, it was decided to increase the Zookeeper cluster to 5 servers to increase fault tolerance.

Kafka as a service

We made sure that Kafka is an excellent technology that allows us to solve the task set for us (implementing a message broker). However, we decided to prohibit the services from directly accessing Kafka and blocking it from above with the data-bus service. Why did we do this? There are actually several reasons.

Data-bus took over all the tasks related to integration with Kafka (implementation and configuration of consumers and producers, monitoring, alerting, logging, scaling, etc.). Thus, integration with the message broker is as simple as possible.

Data-bus allowed to abstract from a specific language or library to work with Kafka.

The data bus allowed other services to abstract from the storage layer. Maybe at some point we will change Kafka to Pulsar, and no one will notice anything (all services know only about the data-bus API).

The data bus took over the validation of event schemas.

Using data-bus authentication is implemented.

Under the cover of data-bus, we can, without downtime, discreetly update Kafka versions, centralize configurations of producers, consumers, brokers, etc.

Data-bus allowed us to add features we need that are not in Kafka (such as topic audit, monitoring anomalies in the cluster, creating DLQ, etc.).

Data-bus allows failover to be implemented centrally for all services.

At the moment, to start sending events to the message broker, it is enough to connect a small library to the code of your service. It's all. You have the opportunity to write, read and scale with one line of code. The whole implementation is hidden from you, only a few sticks like the size of the batch sticks out. Under the hood, the data-bus service raises the necessary number of producer and consumer instances in Kubernetes and adds the necessary configuration to them, but all this is transparent for your service.

Of course, there is no silver bullet, and this approach has its limitations.

- Data-bus needs to be supported on its own, unlike third-party libraries.

- Data-bus increases the number of interactions between services and the message broker, which leads to lower performance compared to bare Kafka.

- Not everything can be so simply hidden from services, we don’t want to duplicate the functionality of KSQL or Kafka Streams in the data-bus, so sometimes you have to allow services to go directly.

In our case, the pros outweighed the cons, and the decision to cover the message broker with a separate service was justified. During the year of operation, we did not have any serious accidents and problems.

PS Thanks to my girlfriend, Ekaterina Oblyalyaeva, for the cool pictures for this article. If you like them, there are even more illustrations.

All Articles