The story of a monolith

Part one, in which the reader gets a brief history of how 2GIS's internal products came about and how the data delivery system evolved from a handful of scripts into a full-fledged application.

Today I'd like to tell you a story that began nine years ago at DoubleGIS.

I had just joined the company, and I was put in charge of the module that exported data from a large internal system to our external products. In this article I want to share how we split this monolith into several parts, how one monster gave rise to several dozen products, and how all these products and projects were integrated with one another at the data level.

I should say up front that this is an overview article without technical details. Those will come in the second part, which covers data export. For now, expect light reading with no heavy theory and only a passing mention of the technologies involved.

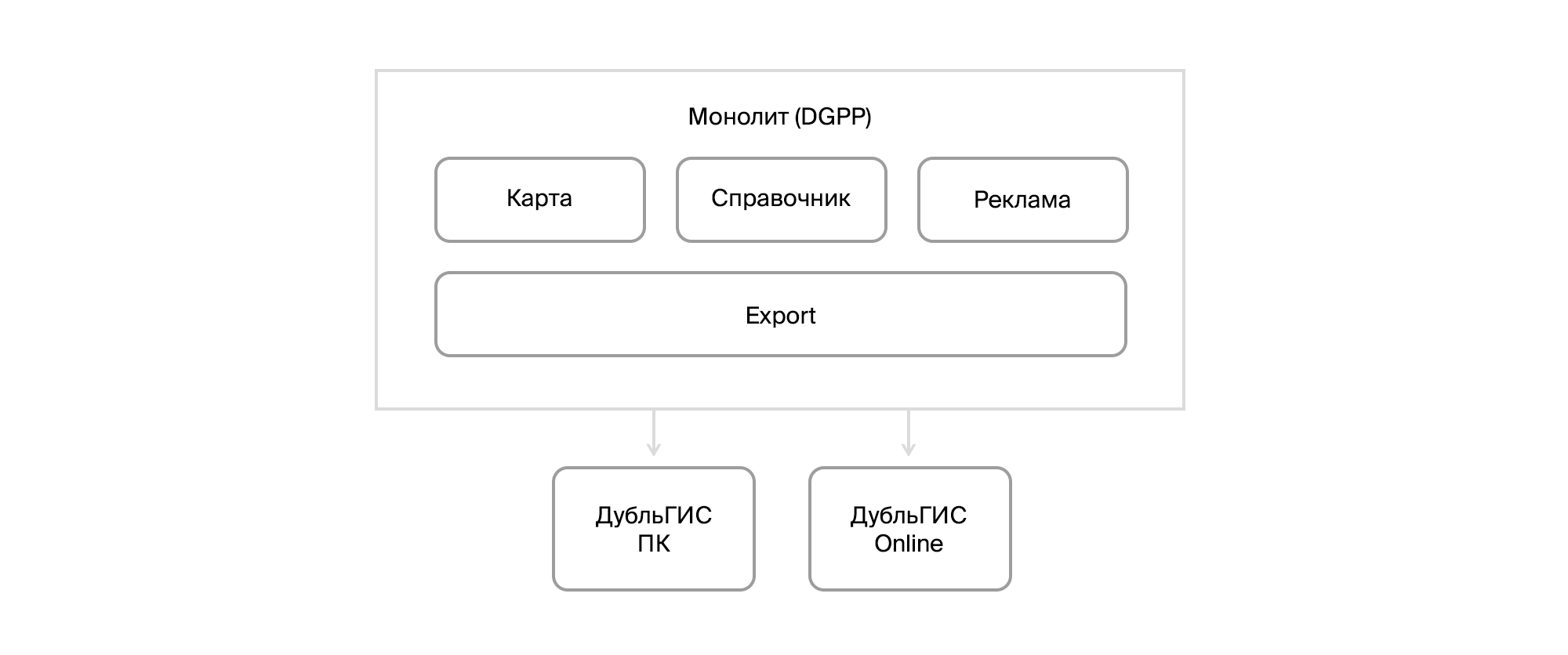

Let's go. The distant year 2010. Back then, 2GIS was still the old-school DoubleGIS. Its external products were a desktop version, a primitive online version, and a version for PDAs. Internally, there was an update delivery system for users of our PC version and a monster called DGPP, which combined tools for editing the directory of organizations and the maps, a CRM, and data export to end products. The database ran on Firebird. The system was decentralized: each city had its own installation and its own database. Basic integration was done through file exports and imports. And that, by and large, was it.

DGPP itself was a C++ application with a set of VBA scripts. The system was originally developed for DoubleGIS by a third-party company, and only over time did DoubleGIS bring its development in-house, forming its own R&D department. Developing DGPP was becoming harder and harder.

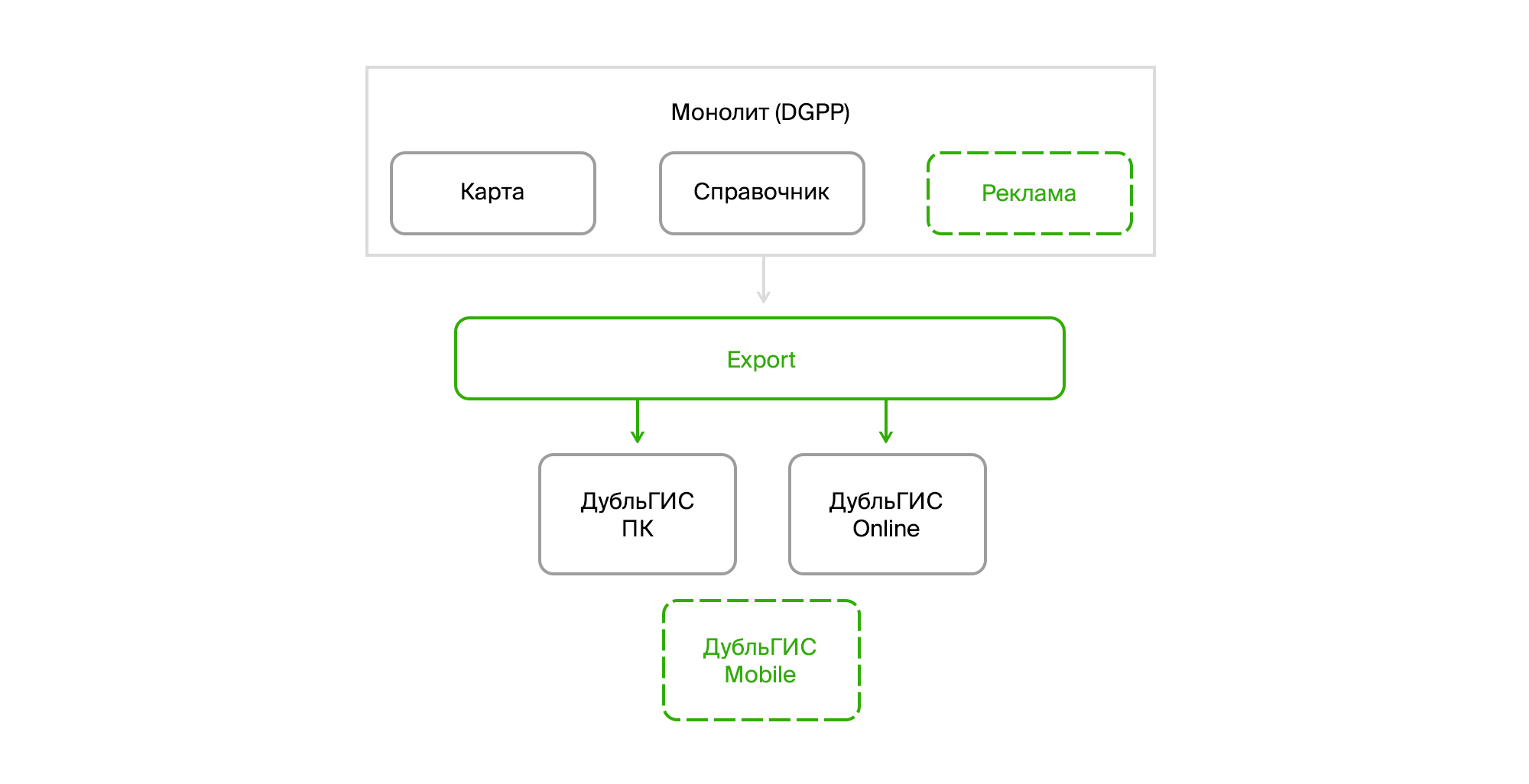

This was a time of rapid growth for DoubleGIS. New cities were being launched. A mobile version for smartphones was in the works. New features kept appearing, and the advertising model was evolving. In short, a lot had to change, and it had to change quickly.

The first piece we carved out of DGPP was export. We moved it into a separate application: a Windows service with a WPF front end. In parallel, work was underway on a new CRM. To save time, Microsoft Dynamics CRM was chosen as its base platform.

For export, we had to learn how to extract data from Firebird and move all the data preparation logic out of the VBA scripts. There were also some algorithms for transport data implemented in C++, and these had to be rewritten in C#.

One point is worth highlighting here. Our desktop DoubleGIS consumed data in a special binary format, which was produced by a C++ library wrapped in a COM object. At the time it seemed perfectly logical to reuse it by simply adding it to the project as a reference. Not the best solution, but I'll come back to that later.

Time went on, and we launched the mobile version and the new CRM. A new external product appeared on the horizon: the public 2GIS API. We had also begun separating out of DGPP a subsystem for working with the directory of organizations, code-named InfoRussia.

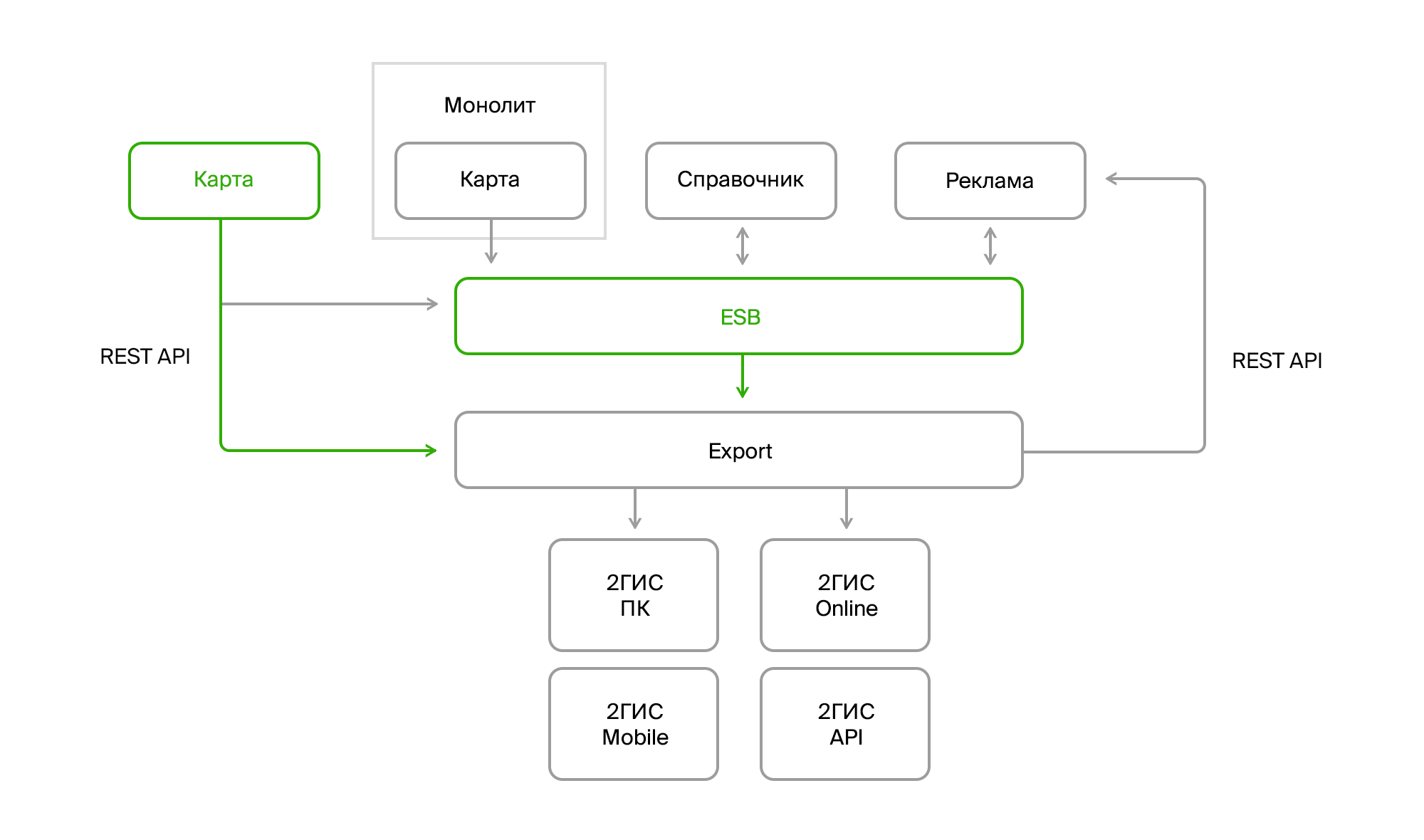

Export now had to read advertising data from two systems: the old DGPP and the new CRM. Moreover, CRM was being rolled out gradually, so some cities still ran only DGPP, while in others DGPP handled the directory and the map and CRM handled commercial information. On top of that, the release of InfoRussia, which was to take place over several months, was looming.

The new systems added complexity not just by existing. They also changed the deployment model. Unlike the decentralized DGPP, which was installed in every city, these systems were centralized, each with its own heavyweight DBMS. And they needed to exchange data with one another.

To solve this problem, we built our own Enterprise Service Bus (ESB). At the time, there were practically no mature solutions that met several functional requirements that were important to us: the ability to transmit XML, preserved message ordering, and guaranteed delivery. We settled on SQL Server Service Broker, which provided everything we needed out of the box. Its delivery speed was rather mediocre, but at that point it suited us fine.

The final step was the release of a map service called Fiji. It published part of its data to the bus, namely reference data and classifiers. Geodata could be retrieved from it through a REST API, which was also used by the Fiji client itself, written in WPF.

In this architecture, export was central. Through it, all data streams merged into a single repository and were then distributed to end products and our users.

And this was just the tip of the iceberg. Automation of internal processes, new requirements, and new features led to a huge number of products. We needed analytics, handling of user feedback, new sales models and commercial products, search, transport algorithms and, of course, continued development of the external products.

As a result, export had a large number of data providers on one side and a large number of consumers on the other, each of which wanted to receive data in a specific form and run it through its own preparation algorithms.

And that is where my story ends.

In the second part of the article I will talk about Export and how it survived all these changes. There will be no more stories, but there will be concrete approaches we applied to solve a specific set of problems.
