Neural Networks and Deep Learning: An Online Tutorial, Chapter 6, Part 2: Recent Progress in Image Recognition

Content

In 1998, when the MNIST database appeared, it took weeks to train the most advanced computers, which achieved much worse results than today's computers, which take less than an hour to get using the GPU. Therefore, MNIST is no longer a task pushing the boundaries of technology; the speed of training suggests that this task is well suited for studying this technology. In the meantime, research goes further, and modern work studies much more complex problems. In this section, I will briefly describe some examples of ongoing work related to image recognition using neural networks.

This section is different from the rest of the book. In the book, I focused on presumably long-lived ideas - backpropagation, regularization, convolutional networks. I tried to avoid the results considered fashionable at the time of writing, whose long-term value seemed dubious. In science, such results most often turn out to be one-day events, quickly disappear and do not have a long-term effect. Given this, the skeptic would say: “Of course, recent progress in image recognition can be considered an example of such a one-day trip? In two or three years, everything will change. So, are these results likely to be of interest to a small number of professionals competing in the foreground? Why discuss them at all? ”

Such a skeptic will be right in that the small details of recent works are gradually losing perceived importance. However, over the past few years there have been incredible improvements in solving particularly complex problems of image recognition using deep neural networks (GNS). Imagine a historian of science writing material about computer vision in 2100. They will define the years 2011-2015 (and probably several years after that) as a period of significant breakthroughs driven by deep convolution networks (GSS). This does not mean that the GOS will still be used in 2100, not to mention such details as an exception, ReLU, and more. But this all the same means that there is an important transition in the history of ideas at the current moment. It is like observing the discovery of an atom, the invention of antibiotics: the invention and discovery of a historical scale. Therefore, without going into details, it is worth getting some idea of the interesting discoveries being made today.

Work 2012 LRMD

Let me start with the work of 2012, authored by a group of researchers from Stanford and Google. I will call her LRMD, by the first letters of the names of the first four authors. LRMD used NS to classify images from the ImageNet database, which is a very difficult task of pattern recognition. The data they used from 2011 ImageNet included 16 million full-color images, divided into 20,000 categories. Images were downloaded from the Internet and classified by Amazon's Mechanical Turk. Here are some of them:

They belong to the categories, respectively: mumps, brown root fungus, pasteurized milk, roundworms. If you want to practice, I recommend you visit the list of hand tools from ImagNet, where differences are made between mounds, end planers, planers for chamfering and dozens of other types of planers, not to mention other categories. I don’t know about you, but I can’t distinguish with certainty all these tools. This is obviously far more challenging than MNIST! The LRMD network got a decent result of 15.8% image recognition accuracy from ImageNet. This may not seem like such an impressive result, but it was a huge improvement over the previous result of 9.3%. Such a leap suggests that NSs can offer an effective approach to very complex image recognition tasks, such as ImageNet.

Work 2012 KSH

The work of LRMD in 2012 was followed by the work of Krizhevsky, Sutskever and Hinton (KSH). KSH trained and validated GSS using a limited subset of ImagNet data. This subset is defined by the popular machine learning competition - ImageNet Large-Scale Visual Recognition Challenge (ILSVRC). Using this subset gave them a convenient way to compare their approach with other leading techniques. The ILSVRC-2012 set contains about 1.2 million images from 1000 categories. The verification and confirmation sets contain 150,000 and 50,000 images, respectively, from the same 1000 categories.

One of the challenges of the ILSVRC competition is that many images from ImageNet contain multiple objects. For example, in the image, the Labrador Retriever runs after a soccer ball. T.N. The “correct” classification from ILSVRC may correspond to the Labrador Retriever label. Is it necessary to take away points from the algorithm if it marks the image like a soccer ball? Due to such ambiguity, the algorithm was considered correct if ImageNet classification was among the 5 most probable guesses of the algorithm regarding the content of the image. According to this criterion, out of the top 5, KSH's GSS achieved an accuracy of 84.7%, much better than the previous opponent, which achieved an accuracy of 73.8%. When using a more stringent metric, when the label must exactly match the prescribed, the accuracy of KSH reached 63.3%.

It is worth briefly describing the KSH network, as it inspired so much work that followed. It is also, as we shall see, closely connected with the networks that we trained in this chapter, although it is more complex. KSH used GSS trained on two GPUs. They used two GPUs because their particular card (NVIDIA GeForce GTX 580) did not have enough memory to store the entire network. Therefore, they split the network into two parts.

The KSH network has 7 layers of hidden neurons. The first five hidden layers are convolutional (some use max pooling), and the next 2 are fully connected. The output softmax layer consists of 1000 neurons corresponding to 1000 image classes. Here is a sketch of the network, taken from the work of KSH. Details are described below. Note that many layers are divided into 2 parts corresponding to two GPUs.

In the input layer there is a 3x224x224 neuron indicating the RGB values for an image of 224x224 size. Recall that ImageNet contains images of various resolutions. This presents a problem, since the input network layer is usually of a fixed size. KSH dealt with this by scaling each image so that its short side had a length of 256 pixels. Then they cut out an area of 256x256 pixels from the middle of the resized image. Finally, KSH retrieves random 224x224 image pieces (and their horizontal reflections) from 256x256 images. This random cut is a way of expanding training data to reduce retraining. This especially helps to train such a large network as KSH. And finally, these 224x224 images are used as input to the network. In most cases, the cropped image contains the main object from the original image.

We pass to the hidden layers of the KSH network. The first hidden layer is convolutional, with a max-pulling step. It uses local receptive fields of size 11x11, and a step of 4 pixels. In total, 96 feature cards are obtained. Feature cards are divided into two groups of 48 pieces, with the first 48 cards being on one GPU, and the second on the other. Max-pooling in this and subsequent layers is carried out by 3x3 sections, but the pooling sections can overlap, and are located at a distance of only 2 pixels from each other.

The second hidden layer is also convolutional, with max pooling. It uses local 5x5 receptive fields, and it has 256 feature cards, broken into 128 pieces for each GPU. Feature maps use only 48 incoming channels, and not all 96 exits from the previous layer, as usual. This is because any feature card receives input from the GPU on which it is stored. In this sense, the network is moving away from convolutional architecture, which we described earlier in this chapter, although, obviously, the basic idea remains the same.

The third, fourth and fifth layers are convolutional, but without max pooling. Their parameters: (3) 384 feature maps, local receptive fields 3x3, 256 incoming channels; (4) 384 attribute maps, local receptive fields 3x3, 192 incoming channels; (5) 256 feature maps, local receptive fields 3x3, 192 incoming channels. On the third layer, data is exchanged between the GPUs (as shown in the picture) so that the feature maps can use all 256 incoming channels.

The sixth and seventh hidden layers are fully connected, 4096 neurons each.

The output layer is softmax, consists of 1000 units.

The KSH network takes advantage of many techniques. Instead of using sigmoid or hyperbolic tangent as an activation function, it uses ReLUs, which greatly accelerate learning. The KSH network contains about 60 million learning parameters, and therefore, even with a large set of training data, it is subject to retraining. To cope with this, the authors expanded the training set by randomly cropping pictures, as described above. They then used the L2-regularization variant and the exception. The network was trained using stochastic gradient descent based on momentum and with mini-packets.

This is a brief overview of many of KSH's key insights. I omitted some details; look for them in the article yourself. You can also look at the project of Alex Krizhevsky cuda-convnet (and his followers), containing code that implements many of the ideas described. A version of this network based on Theano has also been developed . You can recognize ideas in the code that are similar to those that we developed in this chapter, although using multiple GPUs complicates matters. The Caffe framework has its own version of the KSH network, see their " zoo models " for details.

2014 ILSVRC Competition

Since 2012, progress has been quite rapid. Take the 2014 ILSVRC competition. As in 2012, participants had to train networks for 1.2 million images from 1000 categories, and one of the 5 probable predictions in the correct category was a quality criterion. The winning team , consisting mainly of Google employees, used the GSS with 22 layers of neurons. They named their network GoogLeNet, after LeNet-5. GoogLeNet achieved 93.33% accuracy in terms of the five best options, which seriously improved the results of the winner of 2013 (Clarifai, from 88.3%) and the winner of 2012 (KSH, from 84.7%).

How good is GoogLeNet 93.33% accuracy? In 2014, a research team wrote a review of the ILSVRC competition. One of the issues addressed was how well people would be able to cope with the task. For the experiment, they created a system that allows people to classify images with ILSVRC. As one of the authors of the work, Andrei Karpaty, explains, in an informative entry on his blog, it was very difficult to bring the effectiveness of people to GoogLeNet indicators:

The task of marking up images with five categories out of 1000 possible quickly became extremely difficult, even for those of my friends in the laboratory who had been working with ILSVRC and its categories for some time. First, we wanted to submit the task to Amazon Mechanical Turk. Then we decided to try to hire students for money. Therefore, I organized a marking party among experts in my laboratory. After that, I developed a modified interface that used GoogLeNet predictions to reduce the number of categories from 1000 to 100. Still, the task was difficult - people skipped categories, giving errors of the order of 13-15%. In the end, I realized that in order to even get closer to the result of GoogLeNet, the most effective approach would be for me to sit down and go through an impossible long learning process and the subsequent process of thorough markup. At first, the marking was at a speed of the order of 1 piece per minute, but accelerated over time. Some images were easy to recognize, while others (for example, certain dog breeds, species of birds or monkeys) required several minutes of concentration. I got very good at distinguishing between dog breeds. Based on my sample of images, the following results were obtained: GoogLeNet was mistaken in 6.8% of cases; my error rate was 5.1%, which was about 1.7% better.

In other words, an expert who worked very carefully, only by making serious efforts, was able to slightly get ahead of the STS. Karpaty reports that the second expert, trained on fewer images, managed to reduce the error by only 12% when selecting up to 5 labels per image, which is much less than GoogLeNet.

Awesome results. And since the advent of this work, several teams have reported on the development of systems whose error rate when choosing the 5 best tags was even less than 5.1%. Sometimes these achievements were covered in the media as the emergence of systems capable of recognizing images better than people. And although the results are generally striking, there are many nuances that cannot be considered that computer vision works better on these systems than on humans. In many ways, the ILSVRC competition is a very limited task - the results of an image search in an open network will not necessarily correspond to what the program meets in a practical task. And, of course, the criterion “one of the five best marks” is quite artificial. We still have a long way to go to solve the problem of image recognition, not to mention the more general task of computer vision. But still it’s very cool to see how much progress has been achieved in solving such a difficult task in just a few years.

Other tasks

I focused on ImageNet, however, there are quite a few other projects using NS for image recognition. Let me briefly describe some interesting results obtained recently, just to get an idea of modern work.

One inspiring practical set of results was obtained by a team from Google, which applied the GSS to the task of address plate recognition in Google Street View. In their work, they report how they found and automatically recognized nearly 100 million address plates with accuracy comparable to human work. And their system is fast: it was able to decrypt data from all Google Street View images in France in less than an hour! They write: “Getting this new dataset has significantly increased the quality of Google Maps geocoding in several countries, especially where there were no other geocoding sources.” Then they make a more general statement: “We believe that, thanks to this model, we solved the problem of optical recognition of short sequences in a way that is applicable in many practical applications.”

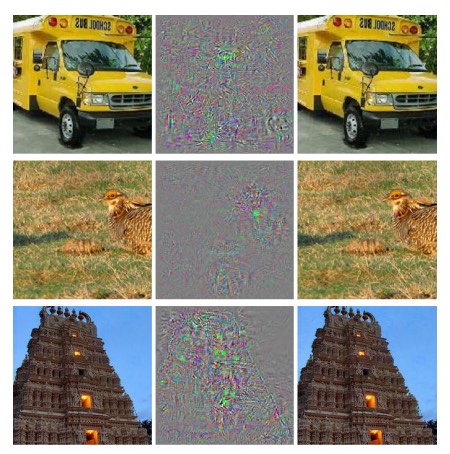

Perhaps I created the impression of a parade of victorious and inspiring results. Of course, the most interesting reports concern fundamental things that are not yet clear to us. For example, in the work of 2013 it was shown that the National Assembly has, in fact, blind spots. Take a look at the images below. On the left is the image from ImageNet, which the network of researchers classified correctly. On the right is a slightly modified image (in the middle the differences are shown), which the network was no longer able to correctly recognize. And the authors found that such “competitive” changes can be selected for any image from the database, and not just for the elite.

Unpleasant result. We used a network based on the same code as the KSH network - that is, it is such a network that is used more and more. And although such NSs calculate, in principle, continuous functions, similar results indicate that they probably calculate almost discrete functions. Worse, they turn out to be discrete in a way that violates our intuitive understanding of rational behavior. This is problem. In addition, it is not very clear what exactly leads to discreteness, what is the problem: in the loss function? Which activation functions to use? In network architecture? In something else? We do not know.

But these results are not as bad as they seem. Although such adversarial changes are quite common, they are unlikely to be found in practice. As indicated in the work:

The existence of adversarial negatives contradicts the network’s ability to achieve high generalizability. Indeed, if the network could generalize well, how could it be deceived by such adversarial negatives indistinguishable from ordinary examples? The explanation is that a set of adversarial negatives is extremely unlikely, and therefore not observed (or almost not observed) in the training data set, however, it has a high density (approximately like rational numbers), and therefore it can be found for almost any case .

Nevertheless, it is unpleasant that we understand the work of the National Assembly so poorly that this result was discovered recently. Of course, the main advantage of such results will be that they stimulated the appearance of subsequent work on this topic. A recent work in 2014 showed that for a trained network it is possible to create images that look like white noise to a person, and the network will classify them into well-known categories with a high degree of confidence. This is another demonstration that we still have a lot to understand in the work of the NS and in their use for image recognition.

But, despite the presence of similar results, the overall picture is inspiring. We are seeing rapid progress in performing extremely complex tests such as ImageNet. We are also seeing rapid progress in solving problems from the real world, such as recognizing address plates in StreetView. But, despite the inspiration, it is not enough just to observe the improvements in the performance of speed tests or even real-world tasks. There are fundamental phenomena, the essence of which we still poorly understand, for example, the existence of competitive images. And while such fundamental problems still open up (not to mention solving them), it would be premature to talk about approaching the solution of the image recognition problem. But at the same time, such problems are excellent incentives for further work.

Other approaches to deep neural networks

In this book, we focused on one task: the classification of numbers MNIST. An excellent task that made us understand many effective ideas: stochastic gradient descent, back propagation, convolution networks, regularization, etc. However, this is also a rather narrow task. After reading the literature on neural networks, you will come across many ideas that we did not discuss: recurring NSs, Boltzmann machines, generative models, transfer of training, reinforced learning, and so on and so forth! Neural networks are a vast area. However, many important ideas are variations of those ideas that we have already discussed, and they are quite easy to understand. In this section, I will slightly open the curtain over these vast expanses. Their discussion would not be detailed and comprehensive - this would greatly inflate the book. It will be an impressionistic attempt to show the conceptual richness of this area, and to connect some concepts with those that we have already seen. In the text I will give several references to other sources, as to materials for further training. Of course, many of them will soon be supplanted by others, and you might want to look for more recent literature. Nevertheless, I believe that many basic ideas will remain interesting for a long time to come.

Recurrent Neural Networks (RNS)

In the direct propagation networks that we used, there is one input that completely determines the activation of all neurons in subsequent layers. This is a very static picture: everything in the network is fixed, and has a frozen, crystalline character. But suppose we allow network elements to change dynamically. For example, the behavior of hidden neurons can be determined not only by activations in previous layers, but also by activations that happened earlier in time. The activation of a neuron can be partially determined by its earlier activation. In networks with direct distribution, this definitely does not happen. Or, perhaps, the activation of hidden and output neurons will be determined not only by the current input to the network, but also by previous ones.

Neural networks with this type of time-varying behavior are known as recurrent neural networks, or RNS. There are many ways to mathematically formalize the informal description of the previous paragraph. You can get an idea of them by reading the Wikipedia article . At the time of writing, in the English version of the article, at least 13 different models are described [at the time of translation in 2019, already 18 / approx. transl.]. But, if we set aside the mathematical details, the general idea of the RNS is the presence of dynamic changes in the network that occur over time. And, unsurprisingly, they are especially useful for analyzing data or processes that change over time. Such data and processes naturally appear in tasks such as speech analysis or natural language.

One of the current ways to use RNS is to more closely combine neural networks with traditional methods of representing algorithms, with concepts such as a Turing machine and common programming languages.In 2014, a RNS was developed that could accept a letter-by-letter description of a very simple python program and predict the result of its work. Informally speaking, the network is learning to “understand” certain python programs. The second work from 2014 used the RNS as a starting point for the development of a Turing neuromachine (BDC). This is a universal computer, the entire structure of which can be trained using gradient descent. They trained their BDC to build algorithms for several simple tasks, such as sorting or copying.

These, of course, are very simple, toy models. Learning how to run a python program like print (398345 + 42598) does not make the neural network a full-fledged interpreter of the language! It is not clear how much stronger these ideas will be. Nevertheless, the results are quite interesting. Historically, neural networks did a good job of recognizing patterns on which conventional algorithmic approaches stumbled. And vice versa, conventional algorithmic approaches do a good job of solving problems that are complex for NS. Today, no one is trying to implement a web server or a database based on NS! It would be great to develop integrated models that integrate the strengths of both NS and traditional algorithmic approaches. RNS, and ideas inspired by them, can help us do this.

In recent years, RNS has been used to solve many other problems. They were especially useful in speech recognition. RNS-based approaches set records for phoneme recognition quality. They were also used to develop improved models of the language used by people. Improved language models help you recognize ambiguities in speech that sound similar. A good language model can tell us that the phrase “forward to infinity” is much more likely than the phrase “forward without limb”, although they sound similar. RNS was used to obtain record achievements in certain language tests.

This work is part of the wider use of NS of all kinds, not just RNS, for solving the problem of speech recognition. For example, a GNS-based approach has shown excellent results in recognizing continuous speech with a large vocabulary. Another GNS-based system is implemented in the Android OS from Google.

I talked a little about what the RNCs are capable of, but did not explain how they work. You might not be surprised to learn that many of the ideas from the world of direct distribution networks can also be used in RNS. In particular, we can train the RNS by modifying the gradient descent and back propagation in the forehead. Many other ideas used in direct distribution networks will also come in handy, from regularization techniques to convolutions and activation and cost functions. Also, many of the ideas that we developed as part of the book can be adapted for use in the RNS.

Long-Term Short Term Memory (DCT) Modules

One of the problems of RNS is that early models were very difficult to train, more complicated than even GNS. The reason was the problems of the unstable gradient, which we discussed in chapter 5. Recall that the usual manifestation of this problem was that the gradient decreases all the time when propagating through the layers in the opposite direction. This extremely slows down the learning of the early layers. In RNS, this problem becomes even worse, since the gradients propagate not only in the opposite direction along the layers, but also in the opposite direction in time. If the network works for a rather long time, the gradient can become extremely unstable and on its basis will be very hard to learn. Fortunately, an idea known as long-term short-term memory (DCT) modules can be included in the RNS . For the first time, the modules introducedHochreiter and Schmidguber in 1997 , specifically to help solve the problem of an unstable gradient. DCTs make it easier to get good results when learning RNS, and many recent works (including those that I have already referenced) use DCT or similar ideas.

Deep trust networks, generative models and Boltzmann machines

Nowadays, interest in deep learning has gained a second wind in 2006, after the publication of works ( 1 , 2 ) explaining how to teach a special kind of NS called deep trust network (GDS). GDS for several years influenced the field of research, but then their popularity began to decline, and direct distribution networks and recurrent NSs became fashionable. Despite this, some of the properties of GDS make them very interesting.

First, GDSs are an example of a generative model. In a direct distribution network, we specify input activations, and they determine the activation of traits neurons further down the network. The generative model can be used in a similar way, but it is possible to set the values of neurons in it, and then run the network “in the opposite direction”, generating the values of the input activations. More specifically, a GDS trained on handwritten digit images can itself generate images similar to handwritten digits (potentially, and after certain actions). In other words, GDM in a sense can learn to write. In this sense, generative models are similar to the human brain: they can not only read numbers, but also write them. Jeffrey Hinton's famous saying states that for pattern recognition, you first need to learn how to generate images.

Secondly, they are capable of learning without a teacher and almost without a teacher. For example, when training on images, GDSs can learn signs that are useful for understanding other images, even if there were no marks on the training images. The ability to learn without a teacher is extremely interesting both from a fundamental scientific point of view and from a practical one - if it can be made to work well enough.

Given all these attractive points of the GDS as models for deep learning, why did their popularity decline? Partly due to the fact that other models, such as direct distribution and recurrent networks, have achieved stunning results, in particular, breakthroughs in the areas of image recognition and speech. It is not surprising that these models have received such attention, and very deserved. However, an unpleasant conclusion follows from this. The market of ideas often works according to the “winner gets everything” scheme, and almost all the attention goes to what is most fashionable in this area now. It can be extremely difficult for people to work on currently unpopular ideas, even if it is obvious that they may be of long-term interest. My personal opinion is that the GDS and other generative models deserve more attention than they get.I won’t be surprised if the GDM or similar model ever outstrips today's popular models. ReadThis article is for introduction to the field of GDM. This article may also be useful . It is not entirely about GDM, but it has a lot of useful things about limited Boltzmann machines, a key component of GDM.

Other ideas

What else is happening in the field of National Assembly and Civil Defense? A huge amount of interesting work. Among the active areas of research is the use of NS for processing natural language , machine translation , and more unexpected applications, for example, music informatics . There are many other areas. In many cases, after reading this book, you will be able to understand recent work, although, of course, you may need to fill in some gaps in knowledge.

I will end this section with a mention of a particularly interesting work. She combines deep convolutional networks with a technique called reinforcement learning to learn how to play video games (and another articleabout it). The idea is to use a convolution network to simplify pixel data from the game screen, turn it into a simpler set of attributes that can then be used to make decisions about further actions: “go left”, “go right”, “shoot”, and etc. Particularly interesting is that one network pretty well learned to play seven different classic video games, ahead of experts in three of them. This, of course, looks like a trick, and the work was actively advertised under the heading “Playing Atari Games with Reinforcement Learning”. However, behind a superficial gloss it is worth considering the fact that the system takes raw pixel data - it does not even know the rules of the game - and on their basis is trained to make good quality decisions in several very different and very competitive situations,each of which has its own complex set of rules. Pretty good.

The future of neural networks

User Intent Interfaces

In an old joke, an impatient professor tells a confused student: "Do not listen to my words, listen to what I mean." Historically, computers often did not understand, like a confused student, what a user means. However, the situation is changing. I still remember the first time I was surprised when I mistakenly wrote a request to Google, and the search engine said to me, “Did you mean [correct request]?” Google Director Larry Page once described the perfect search engine as a system that understands what exactly your requests mean, and giving you exactly what you want.

This is the idea of an interface based on user intent. In it, instead of responding to the user's literal requests, the search engine will use the MO to take a vague user request, understand exactly what it means, and act on this basis.

The idea of an interface based on user intent can be applied more widely than just in a search. Over the next several decades, thousands of companies will create products in which MO will be used for user interfaces, calmly referring to inaccurate user actions, and guessing their true intentions. We already see early examples of such intent-based interfaces: Apple Siri; Wolfram Alpha; IBM Watson systems that automatically tag photos and videos, and more.

Most of them will fail. Interface development is a complicated thing, and I suspect that instead of inspiring interfaces, many companies will create lifeless interfaces on the basis of MO. The best MO in the world will not help you if your interface sucks. However, some products will succeed. Over time, this will lead to a serious change in our relationship with computers. Not so long ago, say, back in 2005, users took for granted that interacting with computers requires high accuracy. The literal nature of the computer served to spread the idea that computers are very literal; the only forgotten semicolon could completely change the nature of the interaction with the computer. But I believe that in the next few decades we will develop several successful interfaces based on user intent,and this will radically change our expectations when working with computers.

,

Of course, MO is not only used to create interfaces based on user intent. Another interesting application of MO is data science, where it is used to search for “known unknowns” hidden in the obtained data. This is already a fashionable topic, about which many articles have been written, so I will not extend to it for a long time. I want to mention one consequence of this fashion, which is not often noted: in the long run, it is possible that the biggest breakthrough in the Moscow Region will not be just one conceptual breakthrough. The biggest breakthrough will be that research in the field of MO will become profitable through the use of data in science and other areas. If a company can invest a dollar in MO research and get a dollar and ten cents of revenue rather quickly, then a lot of money will be poured into the MO region. In other words, MO is an engine,driving us towards the emergence of several large markets and areas of technology growth. As a result, there will be large teams of people experts in this field who will have access to incredible resources. This will move the MO even further, create even more markets and opportunities, which will be the immaculate circle of innovation.

The role of neural networks and deep learning

I described MO in general terms as a way to create new opportunities for technology development. What will be the specific role of the National Assembly and Civil Society in all this?

To answer the question, it is useful to turn to history. In the 1980s, there was an active joyful revival and optimism associated with neural networks, especially after the popularization of back propagation. But the recovery subsided, and in the 1990s, the MO baton was passed on to other technologies, for example, the support vector method. Today, the National Assembly is again on the horse, setting all kinds of records, and overtaking many rivals in various problems. But who guarantees that tomorrow a new approach will not be developed that will again overshadow the NA? Or, perhaps, the progress in the field of the National Assembly will begin to stall, and nothing will replace them?

Therefore, it is much easier to reflect on the future of the Defense Ministry as a whole than specifically on the National Assembly. Part of the problem is that we understand the NA very poorly. Why are NS so good at compiling information? How do they avoid retraining so well, given the sheer number of options? Why does stochastic gradient descent work so well? How well will NS work when scaling datasets? For example, if we expand ImageNet base 10 times, will the performance of the NS improve more or less than the effectiveness of other MO technologies? All these are simple, fundamental questions. And so far we have a very poor understanding of the answers to these questions. In this regard, it is difficult to say what role the National Assembly will play in the future of the Moscow Region.

I will make one prediction: I think that GO will not go anywhere. The ability to study hierarchies of concepts, to build different layers of abstractions, apparently, is fundamental to the knowledge of the world. This does not mean that tomorrow’s GO networks will not radically differ from today’s. We may encounter major changes in their constituent parts, architectures, or learning algorithms. These changes may turn out to be dramatic enough for us to stop considering the resulting systems as neural networks. However, they will still engage in civil defense.

Will NS and GO soon lead to the appearance of artificial intelligence?

In this book, we focused on the use of NS in solving specific problems, for example, image classification. Let's expand our queries: what about general-purpose thinking computers? Can the National Assembly and Civil Society help us solve the problem of creating a general-purpose AI? And if so, given the high speed of progress in the field of civil defense, will we see the emergence of AI in the near future?

A detailed answer to such a question would require a separate book. Instead, let me offer you one observation based on Conway’s law :

Organizations designing systems are limited to a design that copies the communications structure of this organization.

That is, for example, Conway’s law states that the layout of the Boeing 747 aircraft will reflect the expanded structure of Boeing and its contractors at the time the 747 model was being developed. Or another, simple and concrete example: consider a company developing complex software. If the software control panel should be connected with the MO algorithm, then the panel developer should communicate with the company’s MO expert. Conway’s law simply formalizes this observation.

For the first time they heard Conway’s law, many people say either “Isn’t this a banal evidence?” Or “Is it so?” I will begin with a remark about his infidelity. Let's think: how is Boeing accounting reflected in the model 747? What about the cleaning department? What about feeding staff? The answer is that these parts of the organization most likely do not appear anywhere else in Scheme 747 explicitly. Therefore, you need to understand that Conway’s law applies only to those parts of the organization that are directly involved in design and engineering.

What about the remark about banality and evidence? This may be true, but I don’t think so, because organizations often work to reject Conway’s law. Teams developing new products are often inflated due to the excessive number of employees, or, conversely, they lack a person with critical knowledge. Think of all products with useless and complicating features. Or think of products with obvious flaws - for example, with a terrible user interface. In both classes of programs, problems often arise due to the mismatch of the team needed to release a good product and the team that really assembled. Conway’s law may be obvious, but that doesn’t mean that people cannot regularly ignore it.

Conway’s law is applicable to the design and creation of systems in cases where, from the very beginning, we imagine what components the product will consist of and how to make them. It cannot be applied directly to the development of AI, since AI is not (so far) such a task: we do not know what parts it consists of. We are not even sure what basic questions you can ask. In other words, at the moment, AI is more a problem of science than engineers. Imagine that you need to start developing the 747th without knowing anything about jet engines or the principles of aerodynamics. You would not know which experts to hire in your organization. As Werner von Braun wrote, “basic research is what I do when I don’t know what I do.” Is there a version of Conway’s law that applies to tasks that are more related to science than engineers?

To find the answer to this question, let us recall the history of medicine. In the early days, medicine was the domain of practitioners, such as Galen or Hippocrates , who studied the entire human body. But with the growth in the volume of our knowledge, I had to specialize. We have discovered many deep ideas - recall the microbial theory of diseases, or understanding the principle of the functioning of antibodies, or the fact that the heart, lungs, veins and arteries form the cardiovascular system. Such deep ideas formed the foundation for narrower disciplines, such as epidemiology, immunology, and the accumulation of overlapping areas related to the cardiovascular system. This is how the structure of our knowledge formed the social structure of medicine. This is especially noticeable in the case of immunology: the idea of the existence of an immune system worthy of a separate study was very nontrivial. So we have a whole field of medicine - with specialists, conferences, awards, and so on - organized around something that is not just invisible, but perhaps not even separate.

Such a development of events was often repeated in many established scientific disciplines: not only in medicine, but also in physics, mathematics, chemistry, and others. Regions are born monolithic, having only a few deep ideas in stock. The first experts are able to cover them all. But over time, solidity changes. We discover many new deep ideas, and there are too many of them for someone to be able to truly master them all. As a result, the social structure of the region is being reorganized and divided, concentrating around these ideas. Instead of a monolith, we have fields divided by fields divided by fields - a complex, recursive social structure that refers to itself, whose organization reflects the connections between the most profound ideas. This is how the structure of our knowledge forms the social organization of science. However, this social form in turn limits and helps determine what we can detect. This is the scientific analogue of Conway’s law.

But what does all this have to do with deep learning or AI?

Well, from the early days of AI development , there has been a debate that everything will go either “not too complicated, thanks to our superweapon”, or “superweapon will not be enough.” Deep learning is the latest example of a superweapon that has been used in the disputes I have seen. In the early versions of such disputes, logic, or Prolog, or expert systems, or some other technology, which was then the most powerful, was used. The problem with such disputes is that they do not give you the opportunity to say exactly how powerful any of the candidates for superweapons will be. Of course, we just spent a whole chapter reviewing evidence that civil defense can solve extremely complex problems. It definitely looks very interesting and promising. But this was the case with systems such as Prolog, or Eurisko, or with expert systems. Therefore, only the fact that a set of ideas looks promising does not mean anything special. How do we know that GO is actually different from these early ideas? Is there a way to measure how powerful and promising a set of ideas is? It follows from Conway’s law that as a crude and heuristic metric we can use the complexity of the social structure associated with these ideas.

Therefore, we have two questions. First, how powerful is the set of ideas related to civil society according to this metric of social complexity? Secondly, how powerful a theory do we need to create a general-purpose AI?

On the first question: when we look at civil defense today, this field looks interesting and rapidly developing, but relatively monolithic. It has some deep ideas, and several major conferences are held, some of which overlap a lot. Work at work uses the same set of ideas: stochastic gradient descent (or its close equivalent) to optimize the cost function. It’s great that these ideas are so successful. What we are not observing so far is a large number of well-developed smaller areas, each of which would explore its own set of deep ideas, which would move civil defense in many directions. Therefore, according to the metric of social complexity, deep learning, I'm sorry for the pun, while it remains a very shallow area of research. One person is still able to master most of the deep ideas from this area.

On the second question: how complex and powerful a set of ideas will be needed to create AI? Naturally, the answer will be: no one knows for sure. But in the afterword to the book, I studied some of the existing evidence on this subject. I concluded that even according to optimistic estimates, the creation of AI will require many, many, many deep ideas. According to Conway’s law, in order to reach this point, we must see the emergence of many interrelated disciplines, with a complex and unexpected structure that reflects the structure of our deepest ideas. We do not yet observe such a complex social structure when using NS and civil defense. Therefore, I believe that we, at least, are several decades away from using GO to develop general-purpose AI.

I spent a lot of effort on creating a speculative argument, which, perhaps, seems quite obvious, and does not lead to a certain conclusion. This will surely disappoint certainty-loving people. I meet a lot of people online who publicly announce their very specific and confident opinions about AI, often based on shaky arguments and non-existent evidence. I can honestly say: I think it's too early to judge. As in the old joke: if you ask a scientist how much more we need to wait for any discovery, and he says “10 years” (or more), then in fact he means “I have no idea”. Before the advent of AI, as in the case of controlled nuclear fusion and some other technologies, “10 years” have remained for more than 60 years. On the other hand, what we definitely have in the field of civil defense is an effective technology, the limits of which we have not yet discovered, and many open fundamental tasks. And it opens up amazing creative opportunities.

All Articles