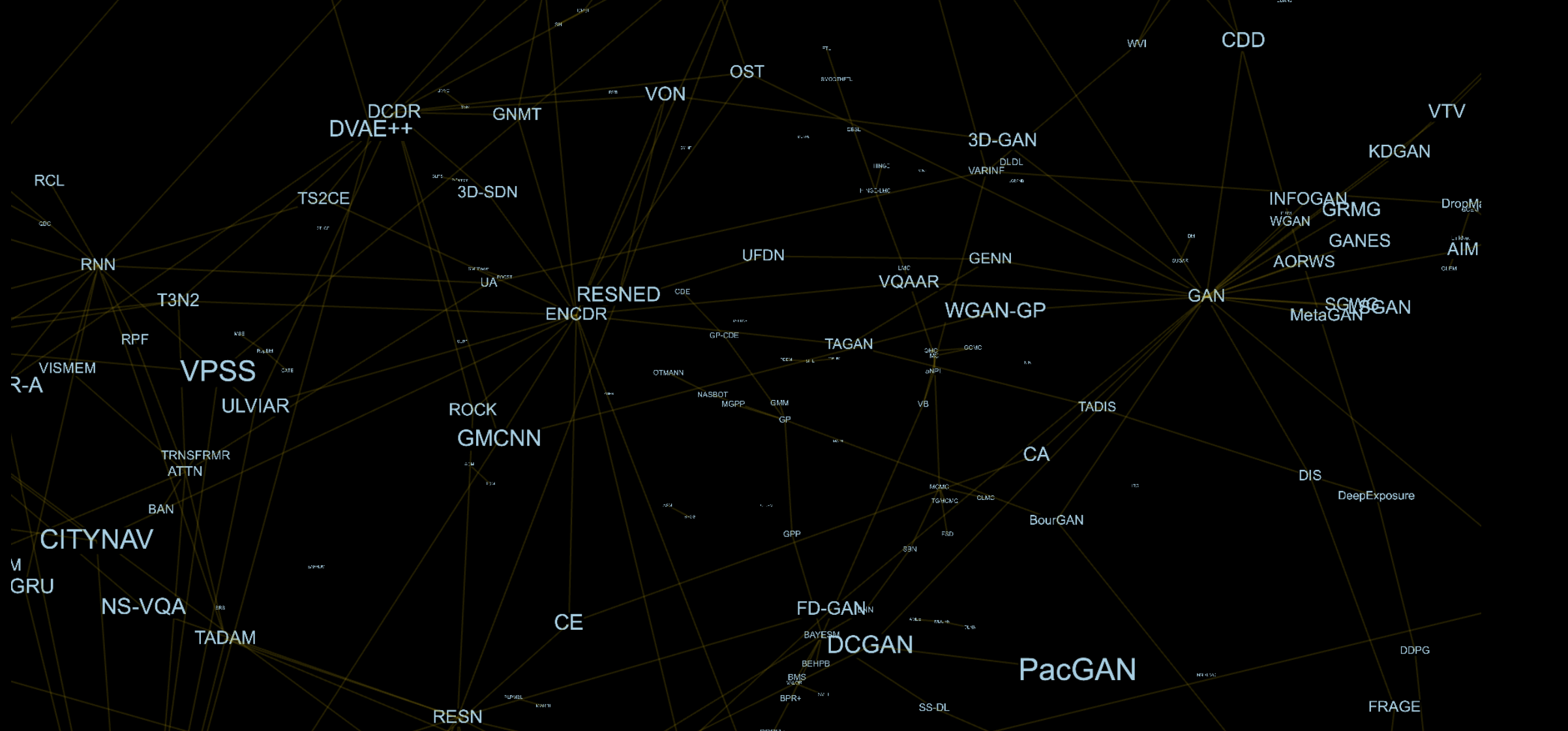

Visualization of dependencies and inheritance between machine learning models

A few months ago, I ran into a problem, my model based on machine learning algorithms just simply did not work. I thought for a long time about how to solve this problem and at some point I realized that my knowledge is very limited and my ideas are scarce. I know a couple of dozen models, and this is a very small part of those works that can be very useful.

The first thought that came to mind is that if I know and understand more models, my qualities as a researcher and engineer as a whole will increase. This idea prompted me to study articles from the latest machine learning conferences. Structuring such information is quite difficult, and it is necessary to record the dependencies and relationships between the methods. I did not want to present the dependencies in the form of a table or a list, but I wanted something more natural. As a result, I realized that having a three-dimensional graph for myself with edges between models and their components seems quite interesting.

For example, architecturally GAN [1] consists of a generator (GEN) and discriminator (DIS), Adversarial Auto-Encoder (AAE) [2] consists of Auto-Encoder (AE) [3] and DIS ,. Each component is a separate vertex in this graph, so for AAE we will have an edge with AE and DIS.

Step by step, I analyzed the articles, wrote out what methods they consist of, in which subject area they are applied, on what data they were tested, and so on. In the process, I realized how many very interesting solutions remain unknown, and do not find their application.

Machine learning is divided into subject areas, where each area tries to solve a specific problem using specific methods. In recent years, the borders have been almost erased, and it is practically difficult to identify the components that are used only in a certain area. This trend generally leads to improved results, but the problem is that with the increase in the number of articles, many interesting methods go unnoticed. There are many reasons for this, and the popularization by large companies of only certain areas plays an important role in this. Realizing this, the graph, which was previously developed as something strictly personal, became public and open.

Naturally, I conducted research and tried to find analogues to what I was doing. There are enough services that allow you to monitor the emergence of new articles in this area. But all these methods are aimed primarily at simplifying the acquisition of knowledge, and not at helping to create new ideas. Creativity is more important than experience, and tools that can help you think in different directions, and see a more complete picture, it seems to me that should become an integral part of the research process.

We have tools that make experimenting, launching and evaluating models easier, but we don’t have methods that allow us to quickly generate and evaluate ideas.

In just a few months, I sorted out about 250 articles from the last NeurIPS conference and about 250 other articles on which they are based. Most of the areas were completely unfamiliar to me, it took several days to understand them. Sometimes I just could not find the correct description for the models, and of what components they consist of. Proceeding from this, the second logical step was to create the possibility for the authors to add and change methods in the graph themselves, because no one except the authors of the article knows how to parse and describe their method in the best way.

An example of what happened as a result is presented here.

I hope that this project will be useful to someone, if only because it, perhaps, will allow someone to get associations that can lead to a new interesting idea. I was surprised when I heard how the idea of generative competitive networks is based. At the MIT machine Intelligence podcast [4], Yan Goodfellow said that the idea of adversarial networks is associated with the “positive” and “negative” phases of training for the Boltzman Machine [5].

This project is community-driven. I would like to develop it and motivate more people to add information about their methods there, or edit what has already been made. I believe that more accurate method information and better visualization technologies will really help make this a useful tool.

There is a lot of space for the development of the project, starting from improving the visualization itself, ending with the ability to build an individual graph with the possibility of obtaining recommendations for improvement methods.

Some technical details can be found here .

[1] Ian J. Goodfellow, Jean Pouget-Abadie, Mehdi Mirza, Bing Xu, David Warde-Farley, Sherjil Ozair, Aaron Courville, Yoshua Bengio. Generative Adversarial Nets.

[2] Alireza Makhzani, Jonathon Shlens, Navdeep Jaitly, Ian Goodfellow, Brendan Frey. Adversarial Autoencoders.

[3] Dana H. Ballard. Autoencoder.

[4] Ian J. Goodfellow: Artificial Intelligence podcast at MIT.

[5] Ruslan Salakhutdinov, Geoffrey Hinton. Deep boltzmann machines

All Articles