Go Product Development: One Project History

Hello! My name is Maxim Ryndin, and I am the team lead of two teams at Gett: Billing and Infrastructure. I want to talk about web product development, for which we at Gett primarily use Go. I will tell you how we switched to this language in 2015-2017, why we chose it in the first place, what problems we encountered during the transition, and what solutions we found. I’ll cover the current situation in the next article.

For those who don’t know: Gett is an international taxi service founded in Israel in 2011. Gett now operates in 4 countries: Israel, Great Britain, Russia and the USA. Our main products are mobile applications for clients and drivers, a web portal where corporate clients can order a car, and a set of internal admin pages through which our employees set up tariff plans, onboard new drivers, monitor fraud cases and much more. At the end of 2016, a global R&D office was opened in Moscow, which works for the entire company.

How we came to Go

In 2011, the company's main product was a monolithic Ruby on Rails application, because the framework was very popular at the time. There were examples of businesses that had been built and launched quickly on Ruby on Rails, so it was associated with business success. The company grew, new drivers and users joined, and the load increased. Then the first problems began to appear.

For the client application to display a car's location, and for its movement to look like a smooth curve, drivers must send their coordinates frequently. The endpoint that receives coordinates from drivers was therefore almost always the most heavily loaded one, and the Ruby on Rails web server handled it poorly. The only way to scale was horizontally, by adding new application servers, which is expensive and inefficient. As a result, we moved coordinate collection into a separate service, originally written in JS (Node.js). For a while this solved the problem. However, as the load grew and we reached 80 thousand RPM, the Node.js service stopped saving us.

Then we announced a hackathon. Every employee in the company had the chance to write, in one day, a prototype that would collect drivers' coordinates. Here are the benchmarks of two versions of that service: the one running in production and the one rewritten in Go.

In almost all respects, the Go service showed better results. The Node.js service used cluster, a mechanism for utilizing all the cores of a machine, so the experiment was more or less fair. Node.js does have the disadvantage of a single-threaded runtime, but in this case that did not affect the results.

Gradually, our product requirements grew. We kept adding functionality, and at some point we ran into a familiar problem: in a tightly coupled project, adding code in one place can break something in another. We decided to tackle this by switching to a service-oriented architecture. But performance deteriorated as a result: when the Ruby interpreter encounters a network request while executing code, it blocks and the worker sits idle. And there were more and more network I/O operations.

As a result, we decided to adopt Go as one of the main development languages.

Features of our product development

Firstly, we have very different product requirements. Since our cars operate in three countries with completely different laws, we have to implement very different sets of functionality. For example, in Israel the law requires that the cost of a trip be calculated by a taximeter - a device that undergoes mandatory certification every few years. When the driver starts the trip, he presses the “go” button; when he finishes, he presses the “stop” button and enters the price shown by the taximeter into the application.

There are no such strict laws in Russia. Here we can configure the pricing policy ourselves, for example by tying it to the duration of the trip or to the distance. Sometimes, when we want to implement the same functionality everywhere, we first roll it out in one country, and then adapt it and roll it out in the others.

Our product managers set requirements in the form of product stories, and we try to stick to this approach. This naturally affects testing: we use the behavior-driven development methodology so that incoming product requirements can be mapped onto test cases. That makes it easier for people who are far from programming to simply read the test results and understand what is going on.

We also wanted to avoid duplicating work. If we have a service that implements some functionality and we need to write a second one, it would be inefficient to solve again all the problems we already solved in the first, and to integrate again with the monitoring and migration tools.

We solve problems

Framework

Ruby on Rails is built on the MVC architecture. At the time of the transition, we really did not want to abandon it, to make life easier for developers who had only programmed with this framework. Changing tools is uncomfortable enough as it is, and if you also change the application architecture, it is like pushing a person who cannot swim out of a boat. We did not want to hurt developers this way, so we took one of the few MVC frameworks available at the time, Beego.

We tried using Beego for server-side rendering, as in Ruby on Rails. However, we did not much like the pages rendered on the server. We had to throw that component out, and today Beego only returns JSON from the backend, while all rendering is done by React on the frontend.

Beego can build a project automatically. It was very difficult for some developers to move from a scripting language to one that needs compiling. There were funny stories when a person implemented a feature and only at code review, or even by accident, found out that you need to run go build. And the task had already been closed.

In Beego, the router is generated from comments in which the developer writes the paths to the controller actions. We have mixed feelings about this idea, because if there is a typo, for example in the router annotation, the mistake is hard to find for anyone not experienced with this approach. At times people could not figure out the cause even after several hours of exciting debugging.

Database

We use PostgreSQL as the database. There is a practice of managing the database schema from the application code through migrations. This is convenient for several reasons: everyone knows about the migrations, they are easy to deploy, and the database is always in sync with the code. We wanted to keep these perks as well.

When you have several projects and teams, implementing a feature sometimes means going into other people's projects. It is very tempting to add a column to a table that may hold 10 million records, and a person who is not immersed in the project may not be aware of the table's size. To prevent this, we added a warning about dangerous migrations that could lock the database for writes, and gave developers a way to suppress this warning.
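A warning of this kind can be sketched as a small static check over the migration text. This is only an illustration: the two rules, the CheckMigration function and the opt-out comment are assumptions made for the example, not a description of how Gett's tooling actually works.

```go
package main

import (
	"fmt"
	"regexp"
	"strings"
)

// allowMarker is a hypothetical opt-out comment a developer adds after
// confirming that the migration is safe for the tables it touches.
const allowMarker = "-- allow-dangerous-migration"

var (
	createIndex      = regexp.MustCompile(`\bcreate (unique )?index\b`)
	addColumnDefault = regexp.MustCompile(`\balter table .* add column .* default\b`)
)

// CheckMigration returns one warning per statement that may hold a long
// write lock on a large table. The two rules are illustrative, not a
// complete list, and splitting on ";" is deliberately naive.
func CheckMigration(sql string) []string {
	if strings.Contains(sql, allowMarker) {
		return nil // the author explicitly accepted the risk
	}
	var warnings []string
	for _, stmt := range strings.Split(sql, ";") {
		// Normalize case and whitespace so the patterns stay simple.
		s := strings.ToLower(strings.Join(strings.Fields(stmt), " "))
		switch {
		case createIndex.MatchString(s) && !strings.Contains(s, "concurrently"):
			warnings = append(warnings, "index built without CONCURRENTLY blocks writes: "+s)
		case addColumnDefault.MatchString(s):
			warnings = append(warnings, "ADD COLUMN with DEFAULT can rewrite the table on older PostgreSQL: "+s)
		}
	}
	return warnings
}

func main() {
	migration := `
		ALTER TABLE rides ADD COLUMN note text DEFAULT '';
		CREATE INDEX rides_driver_idx ON rides (driver_id);
	`
	for _, w := range CheckMigration(migration) {
		fmt.Println("WARNING:", w)
	}
}
```

Making the opt-out an explicit comment in the migration file keeps the decision visible at code review, which is the point of such a warning: the author has to state that they checked the table size.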

Migration

We decided to run migrations with Swan, which is a patched goose with a couple of our improvements. Both tools, like many migration tools, want to do everything in one transaction so that you can easily roll back if something goes wrong. Sometimes you need to build an index, and the table gets locked. PostgreSQL has a CONCURRENTLY option that avoids this. The problem is that if you start building an index with CONCURRENTLY inside a transaction, PostgreSQL raises an error. At first we wanted to add a flag to skip opening a transaction. In the end, we did this:

COMMIT;
CREATE INDEX CONCURRENTLY huge_index ON huge_table (column_one, column_two);
BEGIN;

Now, when someone adds an index with the CONCURRENTLY option, they get this hint. Note that COMMIT and BEGIN are not mixed up: the code closes the transaction that the migration tool opened, builds the index with CONCURRENTLY, and then opens another transaction so that the tool has something to close.

Testing

We try to adhere to behavior-driven development. In Go, this can be done with the Ginkgo tool. It is good because it has the usual BDD keywords, such as “describe” and “when”, and it lets you simply map the text written by a product manager onto test cases stored in the source code. But we ran into a problem: people coming from the Ruby on Rails world believe that every programming language has something similar to factory_girl, a factory for creating initial conditions. There was nothing like it in Go. In the end we decided not to reinvent the wheel: in the hooks before and after each test, we fill the database with the necessary data and then clean it up so that there are no side effects.

Monitoring

If you have a production service that people are using, you need to monitor it: are there any 500 errors, are requests being processed quickly? In the Ruby on Rails world, NewRelic is very often used for this, and many of our developers knew it well. They understood how the tool worked and where to look when there were problems. NewRelic lets you analyze HTTP request processing time, identify slow external calls and database queries, and monitor data flows, and it provides intelligent error analysis and alerts.

NewRelic has an aggregate metric, Apdex, which depends on the histogram of response durations and on threshold values that you consider normal and set at the very beginning. The metric also depends on the application's error rate. NewRelic computes Apdex and raises an alert if its value falls below a certain level.

Another advantage of NewRelic is that it recently got an official agent for Go. This is what the general monitoring overview looks like:

On the left is a request processing chart, broken down into segments. The segments include request queuing, middleware processing, time spent in the Ruby on Rails interpreter, and access to data stores.

The Apdex chart is displayed at the top right. At the bottom right is the request rate.

The catch is that in Ruby on Rails, connecting NewRelic takes one line of code plus your credentials in the configuration, and everything magically works. This is possible because Ruby has monkey patching, which Go does not, so a lot has to be done manually.

First of all, we wanted to measure the duration of request processing. This was done using the hooks provided by Beego.

beego.InsertFilter("*", beego.BeforeRouter, StartTransaction, false)
beego.InsertFilter("*", beego.AfterExec, NameTransaction, false)
beego.InsertFilter("*", beego.FinishRouter, EndTransaction, false)

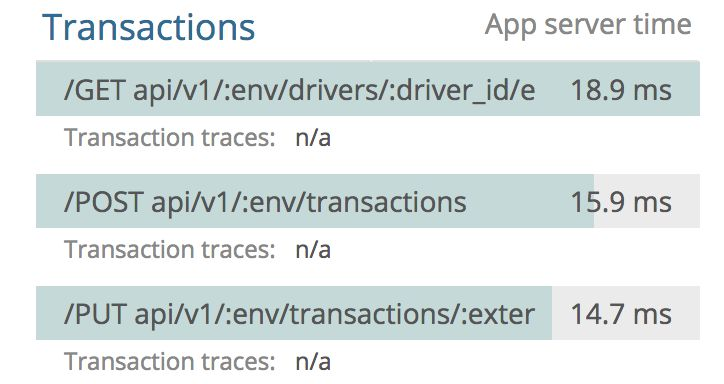

The only non-trivial point was that we separated opening a transaction from naming it. Why? We wanted to measure request processing time including the time spent on routing. At the same time, we need reports aggregated by the endpoints the requests came to. But at the moment the transaction is opened, the URL pattern that will match has not yet been determined. So when a request arrives we open a transaction, then name it in the hook that runs after the controller executes, and close it once processing is finished. As a result, our reports now look like this:

We used an ORM called GORM because we wanted to keep the abstraction and not force developers to write raw SQL. This approach has both advantages and disadvantages. In the Ruby on Rails world there is the Active Record ORM, which has really spoiled people. Developers forget that raw SQL is an option and work only through ORM calls.

db.Callback().Create().Before("gorm:begin_transaction").
    Register("newrelicStart", startSegment)
db.Callback().Create().After("gorm:commit_or_rollback_transaction").
    Register("newrelicStop", endSegment)

To measure the duration of database queries when using GORM, you take the db object. Callback() says that we want to register a callback. Create() says it should be called when a new entity is created. Then we indicate exactly when to run the callback: Before is responsible for this, and its argument gorm:begin_transaction is the point at which the transaction is opened. Finally, under the name newrelicStart we register the startSegment function, which simply calls the Go agent and opens a new segment for database access.

The ORM will call this function before it opens the transaction, and thereby open the segment. We do the same to close the segment: just attach a callback.

In addition to PostgreSQL we use Redis, where things are not smooth either. To monitor it, we wrote a wrapper over the standard client, and did the same for calls to external services. Here is the result:

This is what monitoring looks like for an application written in Go. On the left is a report on request processing time, broken into segments: execution of the Go code itself and access to the PostgreSQL and Replica databases. Calls to external services are not shown on this graph because there are very few of them and they are simply invisible after averaging. We also have Apdex and request rate information. Overall, the monitoring turned out to be quite informative and useful.

As for data flows, thanks to our wrappers over the HTTP client we can track requests to external services. The request map of the promotion service is shown here: it calls four of our other services and two data stores.

Conclusion

Today more than 75% of our production services are written in Go; we no longer do active development in Ruby and only maintain it. In this regard, I want to note:

- Fears that development speed would drop were not confirmed. Programmers got into the new technology each at their own pace, but on average, after a couple of weeks of active work, development in Go became as predictable and fast as in Ruby on Rails.

- The performance of Go applications under load is a pleasant surprise compared to our past experience. We significantly reduced the number of instances we use in AWS and so saved on infrastructure.

- The change of technology noticeably energized the programmers, and this is an important part of a successful project.

- Today we have already moved away from Beego and GORM; more about this in the next article.

To sum up: if you are not writing in Go, are suffering from high-load problems, and are tired of struggling with traffic, switch to this language. Just do not forget to agree on it with the business.
