QUIC in action: how Uber implemented it to optimize performance

The QUIC protocol is fascinating to watch, so we love writing about it. Our previous publications on QUIC were mostly historical in nature (local history, if you like); today we are pleased to publish something different: a look at how the protocol was actually applied in 2019. And this is not about a small infrastructure hosted in a proverbial garage, but about Uber, which operates almost all over the world. How the company's engineers decided to use QUIC in production, how they tested it, and what they saw after the rollout is covered below.

Uber is a global scale, namely 600 cities of presence, in each of which the application relies entirely on wireless Internet from more than 4,500 mobile operators. Users expect the application to work not just fast, but in real time - to ensure this, the Uber application needs low latency and a very reliable connection. Alas, the HTTP / 2 stack does not feel well in dynamic and loss-prone wireless networks. We realized that in this case, low performance is directly related to TCP implementations in the kernels of operating systems.

To solve the problem, we applied QUIC , a modern protocol with channel multiplexing, which gives us more control over the performance of the transport protocol. The IETF working group is currently standardizing QUIC as HTTP / 3 .

After detailed tests, we came to the conclusion that the implementation of QUIC in our application will make “tail” delays less compared to TCP. We observed a decrease in the range of 10-30% for HTTPS traffic on the example of driver and passenger applications. QUIC also gave us end-to-end control over custom packages.

In this article, we share our experience on optimizing TCP for Uber applications using a stack that supports QUIC.

Today, TCP is the most used transport protocol for delivering HTTPS traffic on the Internet. TCP provides a reliable stream of bytes, thereby coping with network congestion and link layer loss. The widespread use of TCP for HTTPS traffic is explained by the ubiquity of the former (almost every OS contains TCP), availability on most infrastructure (for example, load balancers, HTTPS proxies and CDNs) and out-of-box functionality, which is available on most platforms and networks.

Most users use our application on the go, and TCP tail delays are far from real-time requirements of our HTTPS traffic. Simply put, users all over the world have faced this - Figure 1 shows delays in large cities:

Figure 1. The magnitude of the “tail” delays varies in the main cities where Uber is present.

Despite the fact that there were more delays in Indian and Brazilian networks than in the USA and Great Britain, tail delays are much larger than average delays. And this is true even for the USA and Great Britain.

TCP was created for wired networks, that is, with an emphasis on well-predictable links. However, wireless networks have their own characteristics and difficulties. First, wireless networks are susceptible to loss due to interference and signal attenuation. For example, Wi-Fi networks are sensitive to microwaves, bluetooth and other radio waves. Cellular networks suffer from signal loss ( path loss ) due to signal reflection / absorption by objects and buildings, as well as interference from neighboring cell towers . This leads to more significant (4-10 times) and a variety of round-trip delays (RTT) and packet loss compared to a wired connection.

To combat bandwidth fluctuations and losses, cellular networks typically use large buffers for bursts of traffic. This can lead to excessive priority, which means longer delays. Very often, TCP treats such a sequence as loss due to an increased timeout, so TCP is inclined to relay and thereby fill the buffer. This problem is known as bufferbloat ( excessive network buffering, buffer swelling ), and it is a very serious problem of the modern Internet.

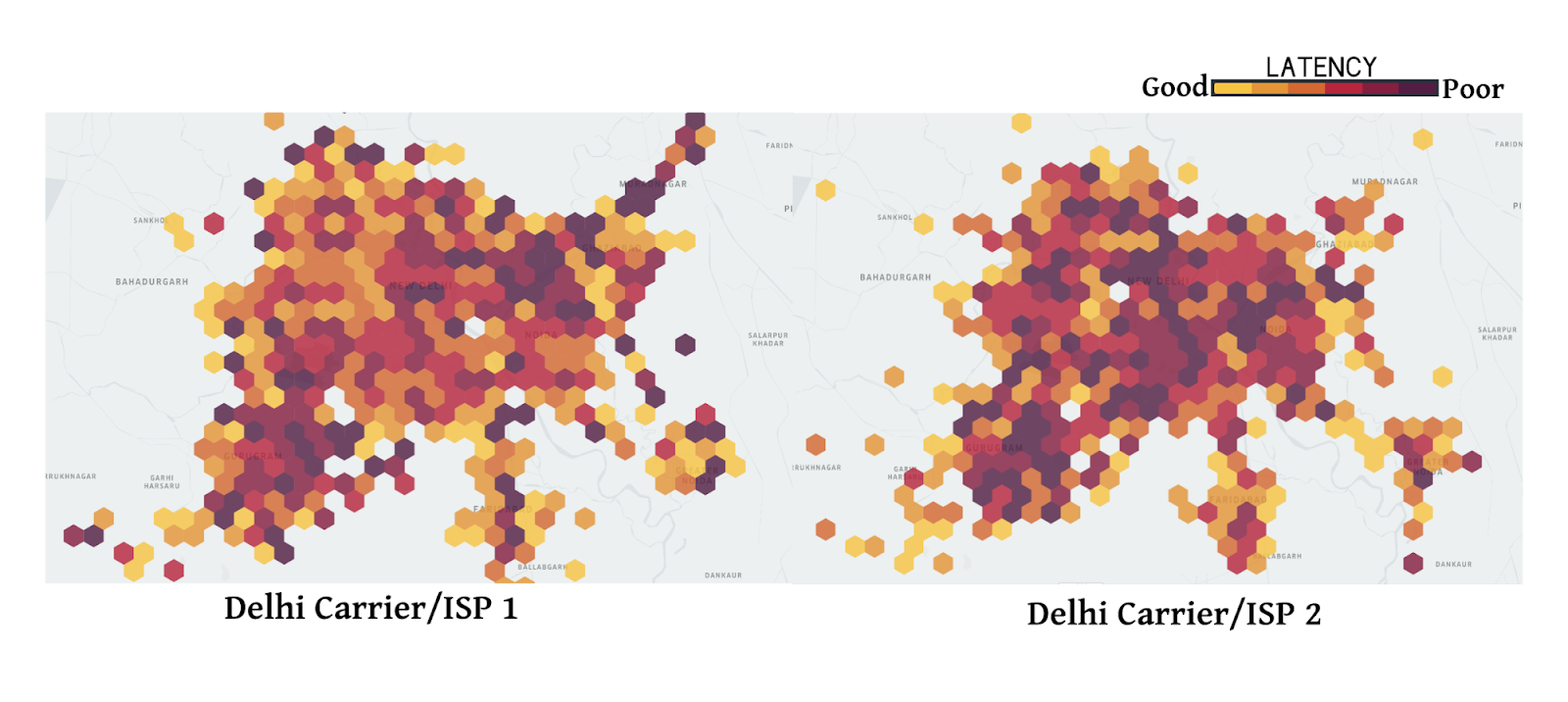

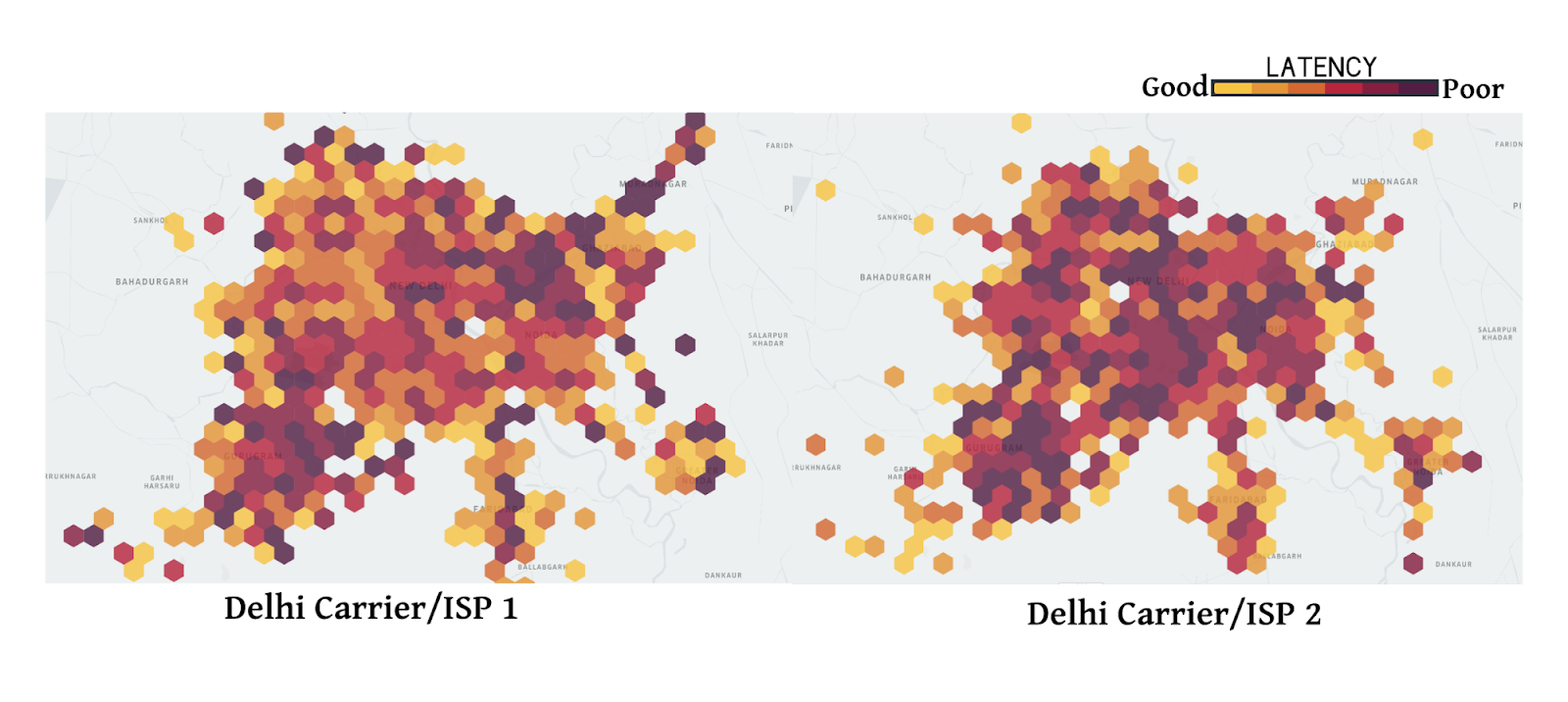

Finally, cellular network performance varies depending on the carrier, region, and time. In Figure 2, we collected the median delays of HTTPS traffic over cells in a range of 2 kilometers. Data is collected for the two largest mobile operators in Delhi, India. As you can see, performance varies from cell to cell. Also, the performance of one operator is different from the performance of the second. This is influenced by factors such as network entry patterns taking into account time and location, user mobility, as well as network infrastructure, taking into account the density of towers and the ratio of network types (LTE, 3G, etc.).

Figure 2. Delays for an example of a 2 km radius. Delhi, India.

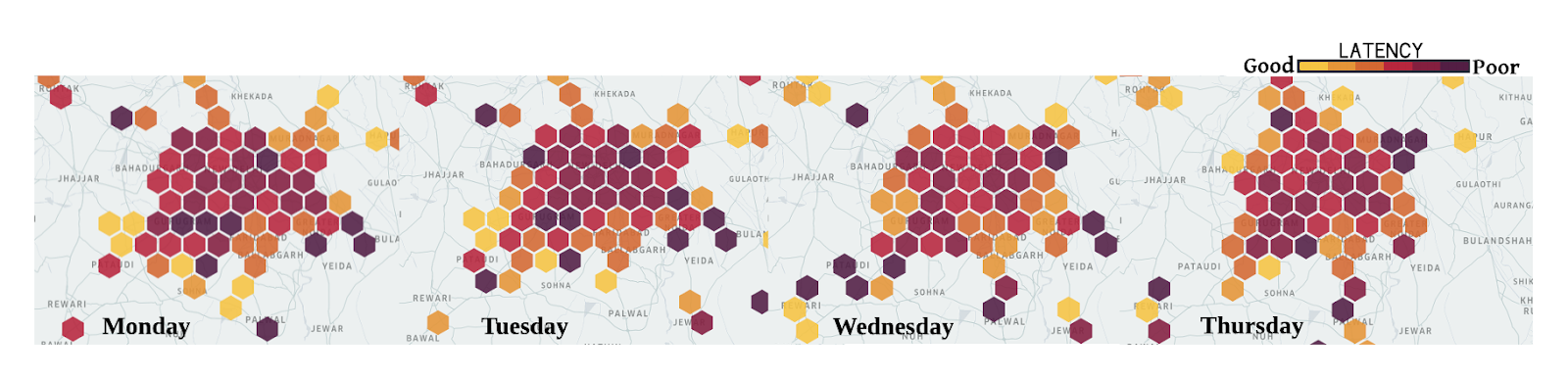

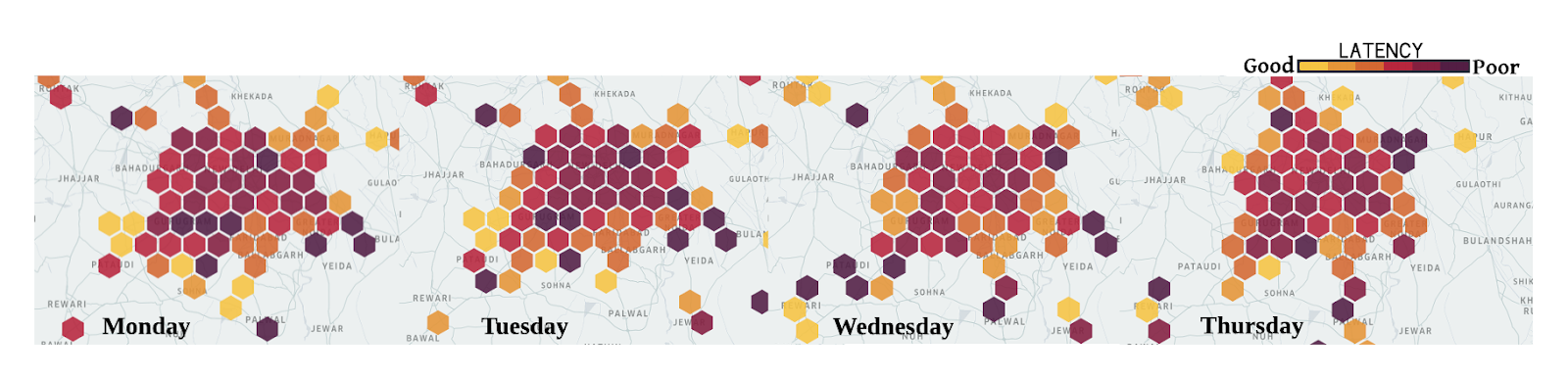

Also, the performance of cellular networks varies over time. Figure 3 shows the median delay by day of the week. We also observed a difference on a smaller scale — within one day and one hour.

Figure 3. Tail delays can vary significantly on different days, but with the same operator.

All of the above leads to the fact that TCP performance is inefficient in wireless networks. However, before looking for alternatives to TCP, we wanted to develop an accurate understanding of the following points:

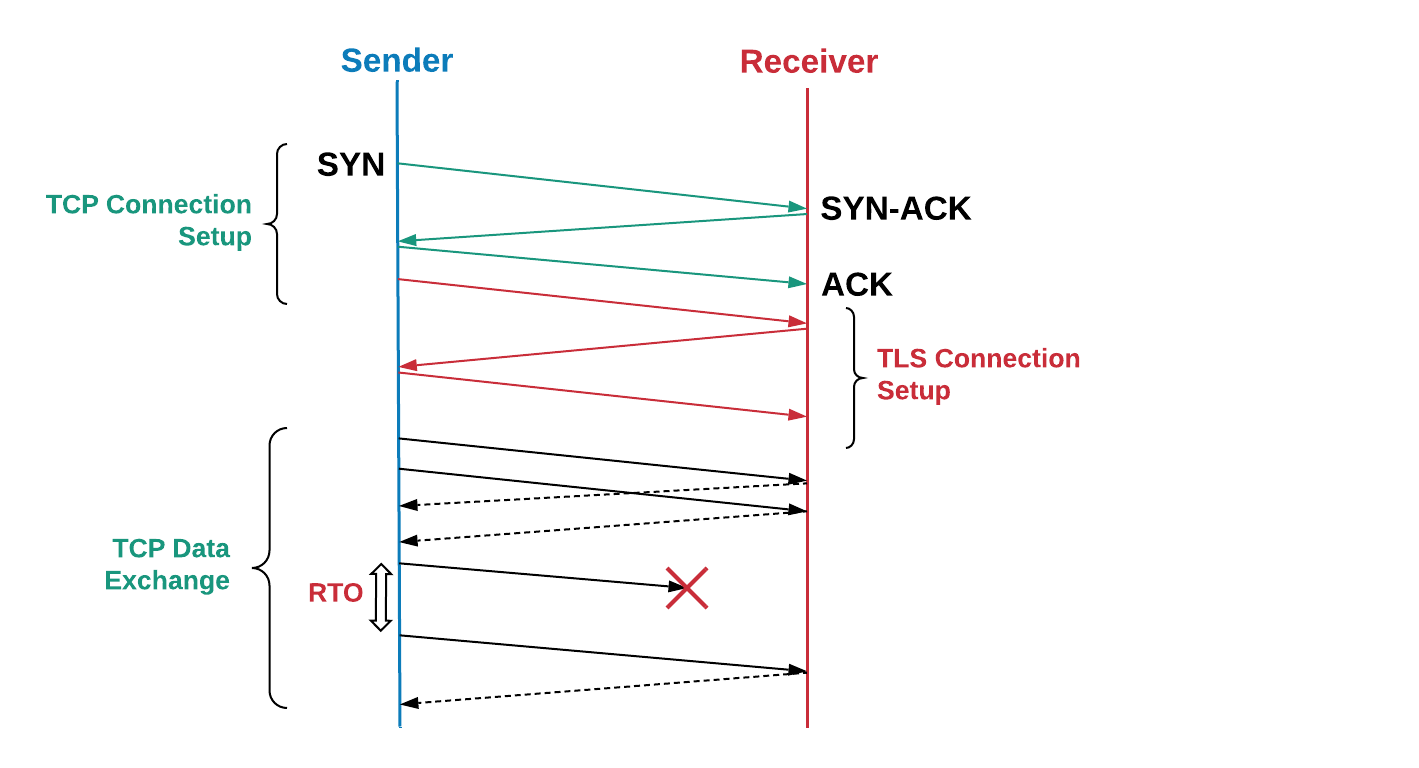

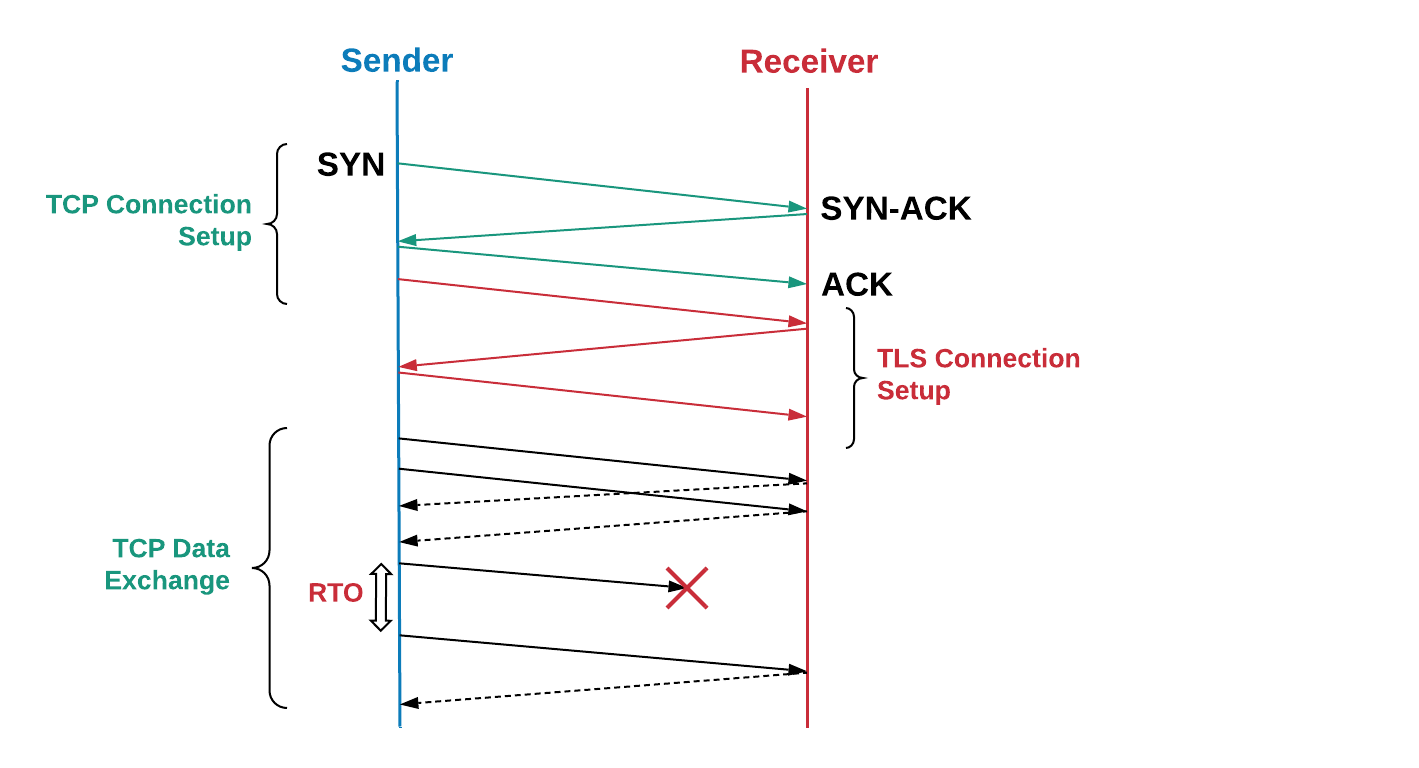

To understand how we analyzed TCP performance, let's briefly recall how TCP transfers data from a sender to a receiver. First, the sender establishes a TCP connection by performing a three-way handshake : the sender sends a SYN packet, waits for a SYN-ACK packet from the recipient, then sends an ACK packet. Additional second and third passes go into creating a TCP connection. The recipient acknowledges receipt of each packet (ACK) to ensure reliable delivery.

If a packet or ACK is lost, the sender retransmit after a timeout (RTO, retransmission timeout ). The RTO is calculated dynamically based on various factors, for example, on the expected RTT delay between the sender and the receiver.

Figure 4. TCP / TLS packet exchange includes a retransmit mechanism.

To determine how TCP worked in our applications, we monitored TCP packets with tcpdump for a week on combat traffic coming from Indian border servers. Then we analyzed the TCP connections using tcptrace . In addition, we created an Android application that sends emulated traffic to a test server, imitating the real traffic as much as possible. Smartphones with this application were handed out to several employees who collected logs for several days.

The results of both experiments were consistent with each other. We saw high RTT delays; tail values were almost 6 times higher than the median value; arithmetic mean value of delays - more than 1 second. Many connections were lossy, causing TCP to retransmit 3.5% of all packets. In areas with congestion, such as airports and train stations, we observed a 7% loss. Such results cast doubt on the conventional wisdom that advanced retransmission schemes used in cellular networks significantly reduce transport loss. Below are the test results from the “simulator” application:

Almost half of these connections had at least one packet loss, most of which were SYN and SYN-ACK packets. Most TCP implementations use an RTO value of 1 second for SYN packets, which increases exponentially for subsequent losses. Application loading time may increase due to the fact that TCP will require more time to establish connections.

In the case of data packets, high RTOs greatly reduce useful network utilization in the presence of temporary losses in wireless networks. We found that the average retransmit time is about 1 second with a tail delay of almost 30 seconds. Such high delays at the TCP level caused HTTPS timeouts and retries, which further increased latency and network inefficiency.

While the 75th percentile of the measured RTT was around 425 ms, the 75th percentile for TCP was almost 3 seconds. This hints that the loss forced TCP to make 7-10 passes in order to successfully transmit data. This may be due to an ineffective RTO calculation, the inability of TCP to respond quickly to the loss of the last packets in the window, and the inefficiency of the congestion control algorithm, which does not distinguish between wireless losses and losses due to network congestion. Below are the TCP loss test results:

Originally designed by Google, QUIC is a multi-threaded, modern transport protocol that runs on top of UDP. At the moment, QUIC is in the process of standardization (we already wrote that there are, as it were, two versions of QUIC, the curious can follow the link - approx. Translator). As shown in Figure 5, QUIC is hosted under HTTP / 3 (actually, HTTP / 2 on top of QUIC - this is HTTP / 3, which is now heavily standardized). It partially replaces the HTTPS and TCP layers, using UDP to form packets. QUIC only supports secure data transfer, since TLS is fully integrated into QUIC.

Figure 5: QUIC works under HTTP / 3, replacing TLS, which used to work under HTTP / 2.

Below we list the reasons that convinced us to use QUIC to strengthen TCP:

We looked at alternative approaches to solving the problem before choosing QUIC.

First of all, we tried deploying TPC PoPs (Points of Presence) to complete TCP connections closer to users. Essentially, PoPs terminates the TCP connection with the mobile device closer to the cellular network and proxies traffic to the original infrastructure. Closing TCP closer, we can potentially reduce RTT and make sure that TCP will be more responsive to dynamic wireless environments. However, our experiments showed that for the most part, RTT and losses come from cellular networks and the use of PoPs does not provide a significant performance improvement.

We also looked towards tuning TCP parameters. Configuring the TCP stack on our heterogeneous edge servers was difficult because TCP has disparate implementations in different OS versions. It was difficult to implement this and test various network configurations. Configuring TCP directly on mobile devices was not possible due to a lack of authority. More importantly, chips such as connections with 0-RTT and improved RTT prediction are critical to the protocol architecture, and therefore it is not possible to achieve significant benefits just by configuring TCP.

Finally, we evaluated several UDP-based protocols that troubleshoot video streaming - we wanted to find out if these protocols would help in our case. Alas, they lacked many security settings, and they also needed an additional TCP connection for metadata and control information.

Our research has shown that QUIC is almost the only protocol that can help with the problem of Internet traffic, while taking into account both security and performance.

To successfully integrate QUIC and improve application performance in poor communication conditions, we replaced the old stack (HTTP / 2 over TLS / TCP) with the QUIC protocol. We used the Cronet network library from Chromium Projects , which contains the original, Google version of the protocol - gQUIC. This implementation is also constantly being improved to follow the latest IETF specification.

First, we integrated Cronet into our Android apps to add QUIC support. Integration was carried out in such a way as to minimize migration costs. Instead of completely replacing the old network stack that used the OkHttp library, we integrated Cronet UNDER the OkHttp API framework. By integrating in this way, we avoided changes in our network calls (which Retrofit uses) at the API level.

Similar to the approach to Android devices, we implemented Cronet in Uber applications for iOS, intercepting HTTP traffic from network APIs using NSURLProtocol . This abstraction, provided by the iOS Foundation, processes protocol-specific URL data and ensures that we can integrate Cronet into our iOS apps without significant migration costs.

On the backend side, QUIC termination is provided by the Google Cloud Load balancing infrastructure, which uses alt-svc headers in responses to support QUIC. In general, the balancer adds the alt-svc header to each HTTP request and it already validates QUIC support for the domain. When the Cronet client receives an HTTP response with this header, it uses QUIC for subsequent HTTP requests to this domain. As soon as the balancer completes QUIC, our infrastructure explicitly sends this action via HTTP2 / TCP to our data centers.

Outstanding performance is the main reason for our search for a better protocol. To begin with, we created a stand with network emulation to find out how QUIC will behave with different network profiles. To test the operation of QUIC in real networks, we experimented around New Delhi, using emulated network traffic very similar to HTTP calls in the passenger’s application.

Inventory for experiment:

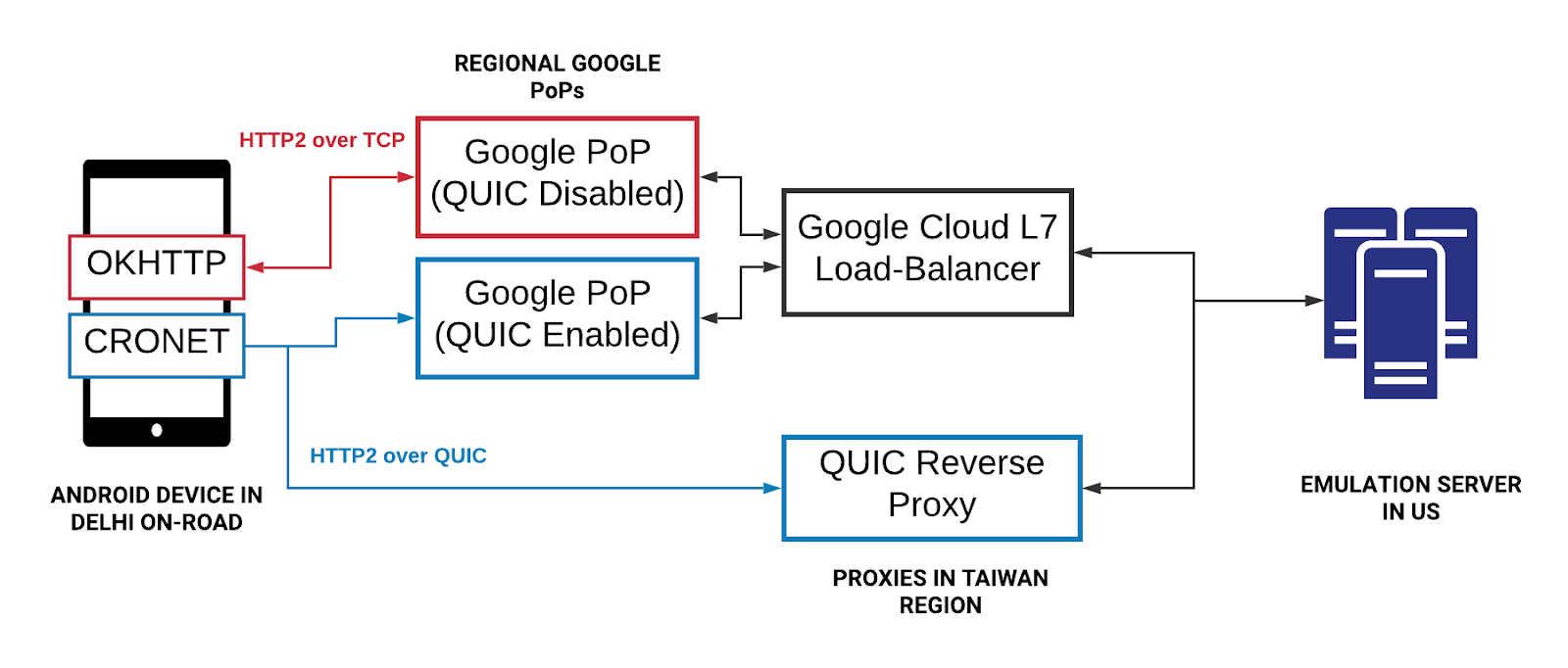

Figure 6. The travel set for TCP vs QUIC tests consisted of Android devices with OkHttp and Cronet, cloud proxies for terminating connections, and an emulation server.

When Google made QUIC available using Google Cloud Load Balancing , we used the same inventory, but with one modification: instead of NGINX, we took Google balancers to complete TCP and QUIC connections from devices, as well as to direct HTTPS traffic to the emulation server . Balancers are distributed around the world, but use the PoP server closest to the device (thanks to geolocation).

Figure 7. In the second experiment, we wanted to compare the TCP completion delay and QUIC: using Google Cloud and using our cloud proxy.

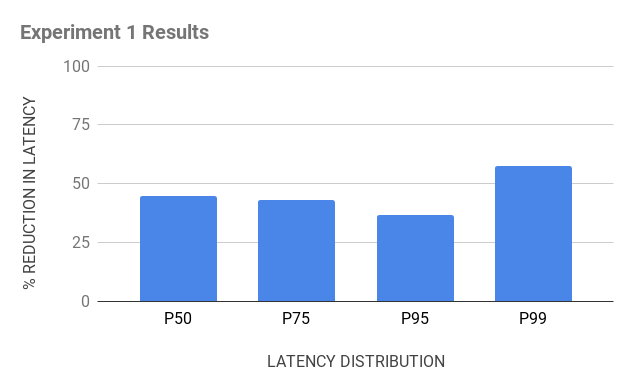

As a result, several revelations awaited us:

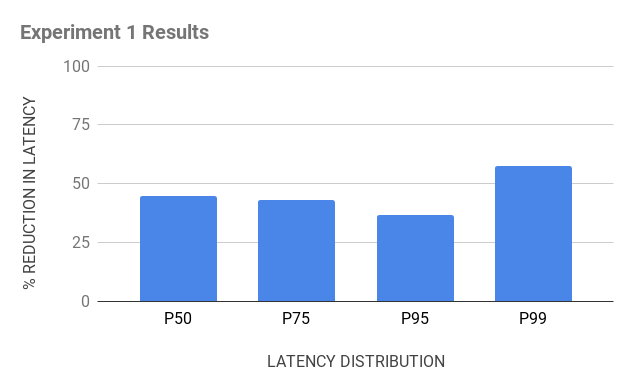

Figure 8. The results of two experiments show that QUIC is significantly superior to TCP.

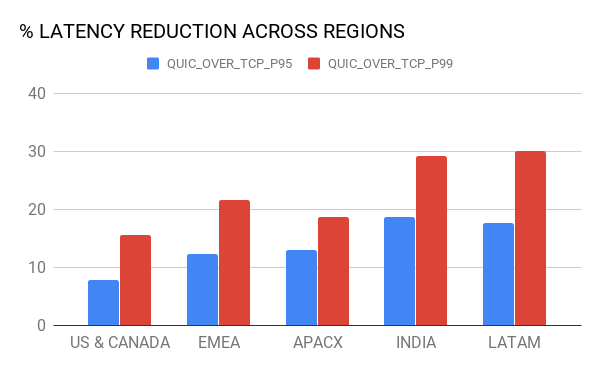

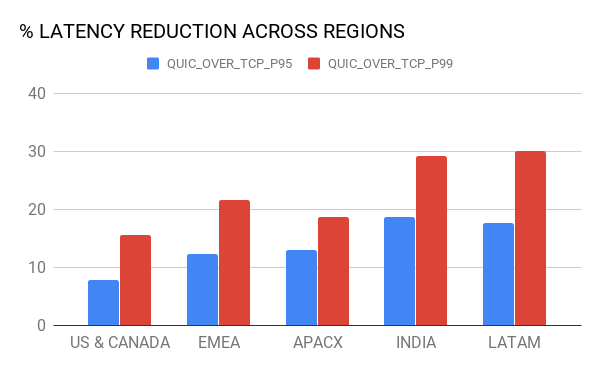

Inspired by experimentation, we have implemented QUIC support in our Android and iOS applications. We conducted A / B testing to determine the impact of QUIC in the cities where Uber operates. In general, we saw a significant reduction in tail delays across regions, as well as telecom operators and network type.

The graphs below show the percentage improvements in the tails (95 and 99 percentile) for macro regions and different types of networks - LTE, 3G, 2G.

Figure 9. In combat tests, QUIC outperformed TCP in latency.

Perhaps this is just the beginning - rolling out QUIC in production gave tremendous opportunities to improve application performance in both stable and unstable networks, namely:

After analyzing the protocol performance on real traffic, we saw that approximately 80% of the sessions successfully used QUIC for all requests, while 15% of the sessions used a combination of QUIC and TCP. We assume that the combination appeared because the Cronet library switches back to TCP by timeout, since it cannot distinguish between real UDP failures and bad network conditions. Now we are looking for a solution to this problem, as we are working on the subsequent implementation of QUIC.

Traffic from mobile applications is sensitive to delays, but not to bandwidth. Also, our applications are mainly used in cellular networks. Based on experiments, tail delays are still large, even though using a proxy to complete TCP and QUIC is close to users. We are actively looking for ways to improve congestion management and increase the efficiency of QUIC loss recovery algorithms.

With these and some other improvements, we plan to improve the user experience regardless of network and region, making convenient and seamless package transport more accessible around the world.

Pictures are clickable. Enjoy reading!

Uber operates at global scale: 600 cities, in each of which the application relies entirely on wireless Internet from more than 4,500 mobile operators. Users expect the application to be not just fast but real-time; to deliver that, the Uber application needs low latency and a very reliable connection. Alas, the HTTP/2 stack performs poorly in dynamic, loss-prone wireless networks. We found that in this case the poor performance is directly tied to the TCP implementations in operating system kernels.

To solve the problem, we adopted QUIC, a modern protocol with stream multiplexing that gives us more control over the performance of the transport protocol. The IETF working group is currently standardizing QUIC, with HTTP over QUIC named HTTP/3.

After detailed testing, we concluded that adopting QUIC in our application would yield lower tail latencies than TCP. We observed a 10-30% reduction in tail latency for HTTPS traffic in the driver and rider applications. QUIC also gave us end-to-end control over packet flow in user space.

In this article, we share our experience of optimizing transport performance for Uber applications by using a stack that supports QUIC.

State of the art: TCP

Today, TCP is the most widely used transport protocol for delivering HTTPS traffic on the Internet. TCP provides a reliable byte stream and copes with network congestion and link-layer losses. The widespread use of TCP for HTTPS traffic is explained by its ubiquity (almost every OS includes TCP), its availability on most infrastructure (for example, load balancers, HTTPS proxies, and CDNs), and out-of-the-box functionality that is available on most platforms and networks.

Most users use our application on the go, and TCP tail latencies fall far short of the real-time requirements of our HTTPS traffic. Simply put, users all over the world have experienced this; Figure 1 shows latencies in major cities:

Figure 1. The magnitude of tail latencies varies across the main cities where Uber operates.

Although latencies in Indian and Brazilian networks were higher than in the USA and Great Britain, tail latencies are much larger than average latencies everywhere, and this holds even for the USA and Great Britain.

TCP performance over the air

TCP was designed for wired networks, that is, for highly predictable links. Wireless networks, however, have their own characteristics and difficulties. First, wireless networks are susceptible to losses from interference and signal attenuation. For example, Wi-Fi networks are sensitive to microwaves, Bluetooth, and other radio waves. Cellular networks suffer from signal loss (path loss) caused by reflection and absorption by objects and buildings, as well as interference from neighboring cell towers. This results in much higher (4-10 times) and more variable round-trip times (RTT) and packet loss compared to a wired connection.

To combat bandwidth fluctuations and losses, cellular networks typically use large buffers to absorb traffic bursts. This can lead to excessive queuing, which means longer delays. Very often TCP treats such queuing as loss because the timeout has been exceeded, so TCP tends to retransmit and thereby fill the buffer even further. This problem is known as bufferbloat (excessive network buffering), and it is a very serious problem of the modern Internet.

Finally, cellular network performance varies with carrier, region, and time. Figure 2 shows the median latencies of HTTPS traffic across cells within a 2-kilometer range. The data was collected for the two largest mobile operators in Delhi, India. As you can see, performance varies from cell to cell, and one operator's performance differs from the other's. This is influenced by factors such as network access patterns by time and location, user mobility, and network infrastructure, including tower density and the mix of network types (LTE, 3G, etc.).

Figure 2. Latencies within an example 2 km radius. Delhi, India.

The performance of cellular networks also varies over time. Figure 3 shows the median latency by day of the week. We also observed differences on a smaller scale: within a single day and a single hour.

Figure 3. Tail latencies can vary significantly between days, even for the same operator.

All of the above makes TCP perform inefficiently in wireless networks. However, before looking for alternatives to TCP, we wanted a precise understanding of the following points:

- Is TCP the main culprit behind tail latencies in our applications?

- Do modern networks have significant and highly variable round-trip times (RTT)?

- What is the effect of RTT and loss on TCP performance?

TCP performance analysis

To understand how we analyzed TCP performance, let's briefly recall how TCP transfers data from a sender to a receiver. First, the sender establishes a TCP connection with a three-way handshake: the sender sends a SYN packet, waits for a SYN-ACK packet from the receiver, then sends an ACK packet. A second and third round trip then go into establishing the TLS connection. The receiver acknowledges each packet it receives (ACK) to ensure reliable delivery.

If a packet or an ACK is lost, the sender retransmits after a timeout (RTO, retransmission timeout). The RTO is calculated dynamically from various factors, such as the expected RTT between the sender and the receiver.

Figure 4. TCP/TLS packet exchange includes a retransmission mechanism.
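To make the dynamic RTO calculation more concrete, here is a minimal sketch of the standard estimator described in RFC 6298 (smoothed RTT plus four times the RTT variation). It is not taken from Uber's analysis: the class name, the 1-second floor, and the clock granularity are illustrative assumptions, and real kernels deviate from the RFC in details such as the minimum RTO.

```python
class RtoEstimator:
    """Sketch of the RFC 6298 retransmission timeout estimator (times in seconds)."""

    ALPHA = 1 / 8  # gain for the smoothed RTT
    BETA = 1 / 4   # gain for the RTT variation
    K = 4          # multiplier applied to the RTT variation

    def __init__(self, min_rto=1.0, clock_granularity=0.001):
        self.srtt = None      # smoothed RTT, unknown until the first sample
        self.rttvar = None    # RTT variation
        self.min_rto = min_rto
        self.granularity = clock_granularity
        self.rto = 1.0        # initial RTO before any RTT has been measured

    def on_rtt_sample(self, rtt):
        """Update the estimator with one RTT measurement and return the new RTO."""
        if self.srtt is None:
            # First measurement: seed both estimators from the sample.
            self.srtt = rtt
            self.rttvar = rtt / 2
        else:
            # RTTVAR is updated first, using the previous SRTT.
            self.rttvar = (1 - self.BETA) * self.rttvar + self.BETA * abs(self.srtt - rtt)
            self.srtt = (1 - self.ALPHA) * self.srtt + self.ALPHA * rtt
        self.rto = max(self.min_rto,
                       self.srtt + max(self.granularity, self.K * self.rttvar))
        return self.rto


if __name__ == "__main__":
    estimator = RtoEstimator()
    # A typical RTT followed by a tail RTT (sample values are illustrative).
    for sample in (0.350, 2.300):
        print(f"RTT sample {sample:.3f} s -> RTO {estimator.on_rtt_sample(sample):.3f} s")
```

A single slow sample inflates both the smoothed RTT and its variation, so on links with highly variable RTT the timeout grows quickly and a lost packet waits correspondingly longer before it is retransmitted.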

To determine how TCP behaved in our applications, we captured TCP packets with tcpdump for a week on production traffic coming from Indian edge servers. We then analyzed the TCP connections using tcptrace. In addition, we built an Android application that sends emulated traffic to a test server, mimicking real traffic as closely as possible. Smartphones with this application were handed out to several employees, who collected logs over several days.

The results of both experiments were consistent with each other. We saw high RTTs; tail values were almost 6 times higher than the median, and the average RTT variance was more than 1 second. Many connections were lossy, causing TCP to retransmit 3.5% of all packets. In congested areas, such as airports and train stations, we observed 7% loss. These results cast doubt on the conventional wisdom that the advanced retransmission schemes used in cellular networks significantly reduce transport-level losses. Below are the test results from the emulation application:

| Network metric | Value |
|---|---|
| RTT, milliseconds [50%, 75%, 95%, 99%] | [350, 425, 725, 2300] |
| RTT variance, seconds | ~1.2 s on average |
| Packet loss on unstable connections | ~3.5% on average (7% in congested areas) |

Almost half of these connections had at least one packet loss, and a significant share of the losses were SYN and SYN-ACK packets. Most TCP implementations use an RTO of 1 second for SYN packets, which grows exponentially on subsequent losses. Application load times can increase because TCP needs more time to establish connections.
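The cost of losing handshake packets can be illustrated with a few lines of arithmetic. The sketch below assumes the 1-second initial SYN timeout mentioned above and a doubling of the timeout after every loss; the function name and the retry count are hypothetical, and actual retry limits differ between operating systems.

```python
def syn_retransmit_schedule(initial_rto=1.0, retries=5):
    """Return (timeout, total_wait) pairs for consecutive lost SYN packets.

    Each lost SYN costs the current timeout, after which the timeout doubles
    (exponential backoff). Times are in seconds.
    """
    schedule = []
    total_wait = 0.0
    rto = initial_rto
    for _ in range(retries):
        total_wait += rto
        schedule.append((rto, total_wait))
        rto *= 2
    return schedule


if __name__ == "__main__":
    for attempt, (rto, waited) in enumerate(syn_retransmit_schedule(), start=1):
        print(f"loss #{attempt}: timeout {rto:.0f} s, waited {waited:.0f} s so far")
```

Under these assumptions, two consecutive SYN losses already add 3 seconds before the connection is even established, which is why handshake losses are so visible in application load time.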

For data packets, high RTOs greatly reduce useful network utilization in the presence of transient losses in wireless networks. We found that the average retransmission time is about 1 second, with a tail of almost 30 seconds. Such high latencies at the TCP level caused HTTPS timeouts and retried requests, which further increased latency and network inefficiency.

While the 75th percentile of the measured RTT was around 425 ms, the 75th percentile of the TCP retransmission delay was almost 3 seconds. This suggests that loss forced TCP to take 7-10 round trips to deliver the data successfully. This may be due to inefficient RTO calculation, TCP's inability to react quickly to the loss of the last packets in a window, and the inefficiency of the congestion control algorithm, which does not distinguish wireless losses from losses caused by network congestion. Below are the TCP loss test results:

| TCP packet loss statistics | Value |
|---|---|
| Percentage of connections with at least 1 packet loss | 45% |
| Percentage of connections with loss during connection establishment | 30% |
| Percentage of connections with loss during data exchange | 76% |
| Retransmission delay distribution, seconds [50%, 75%, 95%, 99%] | [1, 2.8, 15, 28] |
| Distribution of retransmissions for one packet or TCP segment | [1, 3, 6, 7] |
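The round-trip estimate quoted before the table follows from dividing the retransmission delay by the RTT at the same percentile. The short sketch below reproduces that arithmetic with the 75th-percentile values from the two tables; comparing equal percentiles of two different distributions is only a rough approximation, and the function name is hypothetical.

```python
# 75th-percentile values taken from the tables above.
RTT_P75_MS = 425
RETRANSMIT_DELAY_P75_S = 2.8


def equivalent_round_trips(delay_s, rtt_ms):
    """Express a delay in seconds as a number of round trips of rtt_ms each."""
    return delay_s * 1000 / rtt_ms


if __name__ == "__main__":
    trips = equivalent_round_trips(RETRANSMIT_DELAY_P75_S, RTT_P75_MS)
    print(f"about {trips:.1f} round trips at the 75th percentile")
```

The result lands just under 7, the lower end of the range given in the text.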

QUIC application

Originally designed by Google, QUIC is a modern, multiplexed transport protocol that runs on top of UDP. At the moment, QUIC is in the process of standardization (as we have written before, there are effectively two versions of QUIC; translator's note). As shown in Figure 5, QUIC sits under HTTP/3 (in fact, HTTP/2 on top of QUIC is what is now being actively standardized as HTTP/3). It partially replaces the HTTPS and TCP layers, using UDP to form packets. QUIC supports only secure data transfer, since TLS is fully integrated into QUIC.

Figure 5. QUIC runs under HTTP/3, replacing TLS, which previously ran under HTTP/2.

Below we list the reasons that convinced us to use QUIC to complement TCP:

- 0-RTT connection establishment. QUIC allows credentials from previous connections to be reused, reducing the number of security handshakes. In the future, TLS 1.3 will support 0-RTT, but the three-way TCP handshake will still be required.

- Overcoming HoL blocking. HTTP/2 uses a single TCP connection per client to improve performance, but this can lead to head-of-line (HoL) blocking. QUIC simplifies multiplexing and delivers requests to the application independently of one another.

- Congestion control. QUIC runs at the application layer, which makes it easier to update the core transport algorithm that governs sending based on network parameters (loss rate or RTT). Most TCP implementations use the CUBIC algorithm, which is not optimal for latency-sensitive traffic. Recently developed algorithms such as BBR model the network more accurately and optimize latency. QUIC lets you use BBR and update the algorithm as it improves.

- Loss recovery. QUIC sends two TLPs (tail loss probes) before the RTO fires, even when losses are very noticeable. This differs from TCP implementations. A TLP retransmits mainly the last packet (or a new one, if available) to trigger fast recovery. Handling tail losses is especially useful for the way Uber uses the network, namely short, sporadic, latency-sensitive data transfers.

- Optimized ACK. Since each packet has a unique sequence number, there is no ambiguity in telling packets apart when they are retransmitted. ACK packets also carry the time taken to process the packet and generate the ACK on the client side. Together these features let QUIC calculate RTT more accurately. QUIC ACKs support up to 256 NACK ranges, helping the sender be more resilient to packet reordering and use fewer bytes in the process. Selective ACK (SACK) in TCP does not solve this problem in all cases.

- Connection migration. QUIC connections are identified by a 64-bit ID, so if the client changes IP address, the old connection ID can continue to be used on the new IP address without interruption. This is a very common situation for mobile applications, where a user switches between Wi-Fi and cellular connections.
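The connection migration item in the list above comes down to which key the server uses to look up a session. The toy sketch below contrasts a table keyed by the client address (roughly how a TCP connection is identified) with one keyed by a connection ID. All class and field names are hypothetical, and a real QUIC stack would also validate the new network path before trusting it.

```python
class AddressKeyedTable:
    """TCP-like lookup: a session is identified by the client's (ip, port)."""

    def __init__(self):
        self.sessions = {}

    def on_packet(self, client_addr, payload):
        # A new address means a new key, so earlier state is not found.
        session = self.sessions.setdefault(client_addr, {"bytes": 0})
        session["bytes"] += len(payload)
        return session


class ConnectionIdTable:
    """QUIC-like lookup: a session is identified by its connection ID."""

    def __init__(self):
        self.sessions = {}

    def on_packet(self, connection_id, client_addr, payload):
        session = self.sessions.setdefault(
            connection_id, {"addr": client_addr, "bytes": 0, "migrations": 0}
        )
        if session["addr"] != client_addr:
            # Same connection ID from a new address: keep the state, note the move.
            session["addr"] = client_addr
            session["migrations"] += 1
        session["bytes"] += len(payload)
        return session


if __name__ == "__main__":
    wifi, cellular = ("10.0.0.5", 50000), ("100.64.1.9", 41000)

    by_addr = AddressKeyedTable()
    by_addr.on_packet(wifi, b"request-1")
    by_addr.on_packet(cellular, b"request-2")
    print("address-keyed sessions:", len(by_addr.sessions))

    by_id = ConnectionIdTable()
    by_id.on_packet(0x1A2B3C4D, wifi, b"request-1")
    state = by_id.on_packet(0x1A2B3C4D, cellular, b"request-2")
    print("id-keyed sessions:", len(by_id.sessions), "migrations:", state["migrations"])
```

In the address-keyed table the switch from Wi-Fi to cellular produces a second, empty session, whereas the ID-keyed table keeps accumulating state in the original one.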

Alternatives to QUIC

We looked at alternative approaches to solving the problem before choosing QUIC.

First of all, we tried deploying TCP PoPs (Points of Presence) to terminate TCP connections closer to users. Essentially, a PoP terminates the TCP connection with the mobile device closer to the cellular network and proxies the traffic to the origin infrastructure. By terminating TCP closer to users, we can potentially reduce RTT and make TCP more responsive to dynamic wireless environments. However, our experiments showed that most of the RTT and loss comes from the cellular networks themselves, and using PoPs does not provide a significant performance improvement.

We also looked at tuning TCP parameters. Tuning the TCP stack on our heterogeneous edge servers was difficult because TCP implementations differ across OS versions. It was hard to roll this out and to test various network configurations. Tuning TCP directly on mobile devices was not possible due to a lack of privileges. More importantly, features such as 0-RTT connections and improved RTT estimation are fundamental to the protocol's architecture, so significant gains cannot be achieved by tuning TCP alone.

Finally, we evaluated several UDP-based protocols designed for video streaming to find out whether they would help in our case. Alas, they lacked many security features, and they also needed an additional TCP connection for metadata and control information.

Our research showed that QUIC is practically the only protocol that can help with the problems of Internet traffic while taking both security and performance into account.

QUIC integration into the platform

To successfully integrate QUIC and improve application performance under poor connectivity, we replaced the old stack (HTTP/2 over TLS/TCP) with the QUIC protocol. We used the Cronet network library from the Chromium Projects, which contains the original Google version of the protocol, gQUIC. This implementation is also continuously being updated to follow the latest IETF specification.

First, we integrated Cronet into our Android apps to add QUIC support. The integration was done in a way that minimizes migration costs. Instead of completely replacing the old network stack, which used the OkHttp library, we integrated Cronet under the OkHttp API framework. This way we avoided changes to our network calls (which use Retrofit) at the API level.

As on Android, we integrated Cronet into Uber's iOS applications by intercepting HTTP traffic from the network APIs using NSURLProtocol. This abstraction, provided by the iOS Foundation framework, handles protocol-specific URL loading and ensures that we can integrate Cronet into our iOS apps without significant migration costs.

QUIC termination on Google Cloud load balancers

On the backend side, QUIC termination is provided by the Google Cloud Load Balancing infrastructure, which uses alt-svc headers in responses to support QUIC. In general, the load balancer adds the alt-svc header to each HTTP response, and this header confirms QUIC support for the domain. When the Cronet client receives an HTTP response with this header, it uses QUIC for subsequent HTTP requests to this domain. Once the load balancer terminates QUIC, our infrastructure explicitly forwards the traffic over HTTP/2 / TCP to our data centers.
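To show what a client has to extract from such a header, here is a minimal sketch of parsing an Alt-Svc value in the general format defined by RFC 7838. It is not Cronet's parser: the function name and the sample header values are illustrative assumptions, only the `ma` (max age) parameter is interpreted, and every alternative is assumed to carry an explicit port.

```python
import re

# One alternative: protocol-id="host:port" followed by optional ;name=value parameters.
_ALTERNATIVE = re.compile(
    r'\s*([A-Za-z0-9%._~+-]+)="([^"]*)"'
    r'((?:\s*;\s*[A-Za-z0-9_-]+=(?:"[^"]*"|[^;,\s]*))*)'
)
_PARAMETER = re.compile(r';\s*([A-Za-z0-9_-]+)=(?:"([^"]*)"|([^;,\s]*))')

DEFAULT_MAX_AGE_S = 24 * 60 * 60  # RFC 7838 default freshness lifetime


def parse_alt_svc(value):
    """Parse an Alt-Svc header value into a list of alternative services."""
    if value.strip() == "clear":
        return []
    alternatives = []
    pos = 0
    while pos < len(value):
        match = _ALTERNATIVE.match(value, pos)
        if not match:
            break
        protocol, authority, raw_params = match.groups()
        host, _, port = authority.rpartition(":")
        params = {}
        for p in _PARAMETER.finditer(raw_params):
            params[p.group(1)] = p.group(2) if p.group(2) is not None else p.group(3)
        alternatives.append({
            "protocol": protocol,
            "host": host,  # empty string means "same host as the origin"
            "port": int(port),
            "max_age_s": int(params.get("ma", DEFAULT_MAX_AGE_S)),
            "params": params,
        })
        # Alternatives are separated by commas outside quoted strings.
        rest = value[match.end():].lstrip()
        if not rest.startswith(","):
            break
        pos = len(value) - len(rest) + 1
    return alternatives


if __name__ == "__main__":
    # Illustrative header values, not captured from Uber's traffic.
    print(parse_alt_svc('quic=":443"; ma=2592000; v="46,43"'))
    print(parse_alt_svc('h3=":443"; ma=86400, h3-29="alt.example.com:8443"'))
```

A client that finds a protocol it supports in this list can cache the entry for `max_age_s` seconds and attempt that protocol on later requests to the same origin.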

Performance: Results

Better performance is the main reason we went looking for a better protocol. To begin with, we built a test bench with network emulation to find out how QUIC behaves under different network profiles. To test QUIC on real networks, we ran experiments around New Delhi, using emulated network traffic very similar to the HTTP calls in the rider application.

Experiment 1

Experiment setup:

- Android test devices with the OkHttp and Cronet stacks, so that we send HTTPS traffic over TCP and QUIC, respectively;

- A Java-based emulation server that, on receiving requests from client devices, returns responses with the same type of HTTPS headers and load;

- Cloud proxies physically located close to India to terminate TCP and QUIC connections. While we used an NGINX reverse proxy to terminate TCP, it was difficult to find an open source reverse proxy for QUIC. We built a reverse proxy for QUIC ourselves, using the base QUIC stack from Chromium, and published it in Chromium as open source.

Figure 6. The road-test kit for the TCP vs QUIC tests consisted of Android devices with OkHttp and Cronet, cloud proxies for terminating connections, and an emulation server.

Experiment 2

When Google made QUIC available through Google Cloud Load Balancing, we used the same setup with one modification: instead of NGINX, we used Google load balancers to terminate TCP and QUIC connections from devices and to route HTTPS traffic to the emulation server. The load balancers are distributed around the world but use the PoP server closest to the device (thanks to geolocation).

Figure 7. In the second experiment, we wanted to compare the latency of TCP and QUIC termination using Google Cloud and using our cloud proxy.

The results brought several insights:

- PoP termination improved TCP performance. Since the load balancers terminate the TCP connection closer to users and are highly optimized, RTT is lower, which improves TCP performance. Although the effect on QUIC was smaller, QUIC still outperformed TCP in reducing tail latencies (by 10-30 percent).

- Tails are affected by network hops. Although our QUIC proxy was farther from the devices than the Google load balancers (latency was about 50 ms higher), it delivered similar performance: a 15% reduction in 99th-percentile latency relative to TCP, compared with a 20% reduction through the Google load balancers. This suggests that the last-mile hop is the bottleneck in the network.

Figure 8. The results of two experiments show that QUIC is significantly superior to TCP.

Production traffic

Encouraged by the experiments, we rolled out QUIC support in our Android and iOS applications. We ran A/B tests to determine the impact of QUIC in the cities where Uber operates. Overall, we saw a significant reduction in tail latencies across regions, carriers, and network types.

The graphs below show the percentage improvement in the tails (95th and 99th percentiles) by macro-region and network type: LTE, 3G, and 2G.

Figure 9. In production tests, QUIC outperformed TCP on latency.
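For readers who want to compute the same kind of metric on their own A/B data, the sketch below shows one way to obtain a percentage improvement in tail latency using a nearest-rank percentile. The function names are hypothetical and the latency samples are made-up numbers chosen for easy arithmetic; they are not Uber's measurements.

```python
import math


def percentile(samples, p):
    """Nearest-rank percentile: the smallest value with at least p% of samples at or below it."""
    ordered = sorted(samples)
    rank = math.ceil(p * len(ordered) / 100)
    return ordered[max(rank, 1) - 1]


def tail_improvement(control, treatment, p):
    """Percentage reduction of the p-th percentile latency, treatment vs control."""
    baseline = percentile(control, p)
    return (baseline - percentile(treatment, p)) * 100 / baseline


if __name__ == "__main__":
    # Synthetic latencies in milliseconds: 90 fast requests and 10 slow ones per group.
    tcp_group = [100] * 90 + [1000] * 10
    quic_group = [100] * 90 + [800] * 10
    for p in (95, 99):
        print(f"p{p} improvement: {tail_improvement(tcp_group, quic_group, p):.1f}%")
```

Comparing percentiles rather than means keeps the focus on the slowest requests, which is where the article reports the largest effect.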

Moving forward

Perhaps this is just the beginning: rolling out QUIC in production opened up tremendous opportunities to improve application performance on both stable and unstable networks, namely:

Increasing coverage

After analyzing protocol performance on real traffic, we saw that approximately 80% of sessions successfully used QUIC for all requests, while 15% of sessions used a combination of QUIC and TCP. We assume the combination appears because the Cronet library falls back to TCP on a timeout, since it cannot distinguish real UDP failures from poor network conditions. We are now looking for a solution to this problem as we work on the subsequent rollout of QUIC.
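The ambiguity behind the mixed sessions can be shown with a toy model. The sketch below is not Cronet's logic: the function names and the 0.3-second timeout are hypothetical, and the only point is that a timeout-based fallback reacts the same way to blocked UDP and to a slow handshake.

```python
def pick_transport(quic_handshake_s, timeout_s=0.3):
    """Choose a transport for one request.

    quic_handshake_s is the time the QUIC handshake would take, or None when
    UDP is blocked and the handshake never completes. The timeout value is a
    hypothetical placeholder.
    """
    if quic_handshake_s is not None and quic_handshake_s <= timeout_s:
        return "quic"
    # Blocked UDP and a merely slow network both end up here.
    return "tcp"


def classify_session(handshake_times, timeout_s=0.3):
    """Label a session by the transports its requests ended up using."""
    if not handshake_times:
        raise ValueError("a session needs at least one request")
    used = {pick_transport(t, timeout_s) for t in handshake_times}
    if used == {"quic"}:
        return "quic-only"
    if used == {"tcp"}:
        return "tcp-only"
    return "mixed"


if __name__ == "__main__":
    print(classify_session([0.10, 0.20]))        # good network throughout
    print(classify_session([0.10, 2.50, 0.15]))  # one slow spell
    print(classify_session([None, None]))        # UDP blocked
```

In this model a single slow spell is enough to turn a session into a mixed one, even though UDP was never actually blocked.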

QUIC optimization

Traffic from mobile applications is latency-sensitive but not bandwidth-sensitive. Also, our applications are used mainly on cellular networks. Based on our experiments, tail latencies are still high even when a proxy terminates TCP and QUIC close to users. We are actively looking for ways to improve congestion control and make QUIC loss recovery algorithms more efficient.

With these and other improvements, we plan to improve the user experience regardless of network and region, making convenient and seamless packet transport more accessible around the world.
