Science that is never cited

“Citations wanted”

The Nobel Prize-winning geneticist Oliver Smithies, who died in January 2017 at the age of 91, was a modest inventor who preferred to keep a low profile. He liked to tell the story of his biggest flop: a paper on measuring osmotic pressure, published in 1953 [Smithies, O. Biochem. J. 55, 57–67 (1953)], which, as he put it, had “the dubious distinction of never having been cited”.

“No one has ever cited it, and no one has ever used the method,” he told students at a meeting in Lindau, Germany, in 2014.

In fact, Smithies' paper attracted more attention than he thought: nine papers cited it in the ten years after its publication. But his mistake is understandable. Many scientists misunderstand uncitedness, both how widespread it is and what it means for science.

One frequently repeated estimate, cited in a controversial article published in Science in 1990, holds that more than half of all scientific articles remain uncited five years after publication. Scientists genuinely worry about this, says Jevin West, an information scientist at the University of Washington in Seattle who studies large-scale patterns in the research literature. After all, citations are a widely accepted measure of academic influence: a sign that a paper was not only read but also found useful for later research. Researchers fear that a large body of uncited work points to a mountain of useless or irrelevant research. “I can't tell you how many times people have asked me at dinner: what fraction of the literature is never cited?” says West.

In reality, uncited research is not always useless. What's more, there is not much of it, says Vincent Larivière, an information scientist at the University of Montreal in Canada.

To get a better grasp of this dark, forgotten corner of the published literature, Nature dug into the numbers to find out how many papers actually go uncited. It is impossible to know for sure, because citation databases are incomplete. But it is clear that, at least for the core set of some 12,000 journals in the Web of Science, a large database owned by Clarivate Analytics, uncited papers are much rarer than is commonly believed.

Web of Science records suggest that fewer than 10% of scientific papers remain uncited. The true figure is probably lower still, because many papers that the database records as uncited have in fact been cited somewhere, by someone.

This does not mean that low-quality papers are rare: thousands of journals are not indexed in the Web of Science, and the concern that scientists pad their CVs with meaningless papers remains real.

But the new figures may reassure those frightened by tales of oceans of abandoned work. Moreover, a closer look at some papers that nobody has cited shows that they are useful, and are being read, despite their apparent obscurity. “Uncitedness cannot be interpreted as uselessness or worthlessness,” says David Pendlebury, a senior citation analyst at Clarivate.

Myths of uncitedness

The notion that the scientific literature is awash with uncited research goes back to a pair of articles in Science, from 1990 and 1991 [Pendlebury, D. A. Science 251, 1410–1411 (1991)]. The 1990 report found that 55% of articles published between 1981 and 1985 had not been cited in the five years after publication. But these analyses are misleading, mainly because they counted publication types such as letters, corrections, meeting reports and other editorial material that is not usually cited. Strip these out, leaving only research papers and reviews, and the share of uncited papers collapses. Extend the window beyond five years, and it falls further still.
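The two corrections described above (dropping non-research document types and lengthening the citation window) can be illustrated with a minimal Python sketch. Everything in it is hypothetical: the `Record` type, its fields and the toy data are invented for illustration and are not the Web of Science schema or the data behind the 1990 report.

```python
from dataclasses import dataclass, field


@dataclass
class Record:
    """Hypothetical, simplified database record (not a real Web of Science schema)."""
    doc_type: str                 # e.g. "article", "review", "letter", "correction"
    year: int                     # publication year
    citing_years: list[int] = field(default_factory=list)  # year of each incoming citation


def uncited_share(records, allowed_types=None, window=None):
    """Return the fraction of records with no counted citations.

    allowed_types: if given, keep only records of these document types.
    window: if given, count only citations received within `window` years of publication.
    """
    pool = [r for r in records if allowed_types is None or r.doc_type in allowed_types]
    if not pool:
        return 0.0
    uncited = 0
    for r in pool:
        counted = [y for y in r.citing_years if window is None or y - r.year <= window]
        if not counted:
            uncited += 1
    return uncited / len(pool)


# Invented toy data, for illustration only.
records = [
    Record("article", 1983, [1985, 1990]),
    Record("article", 1984, [1992]),      # first cited eight years after publication
    Record("review", 1982, [1983, 1984]),
    Record("article", 1985, []),
    Record("letter", 1983, []),
    Record("correction", 1984, []),
    Record("editorial", 1985, []),
    Record("meeting report", 1981, []),
]

research = {"article", "review"}
print(uncited_share(records, window=5))                           # all types, 5-year window: 0.75
print(uncited_share(records, allowed_types=research, window=5))   # research only, 5 years: 0.5
print(uncited_share(records, allowed_types=research))             # research only, no cut-off: 0.25
```

On this toy set, the uncited share drops from 75% to 50% when editorial material is excluded, and to 25% when the five-year cut-off is removed; the real magnitudes depend entirely on the database.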

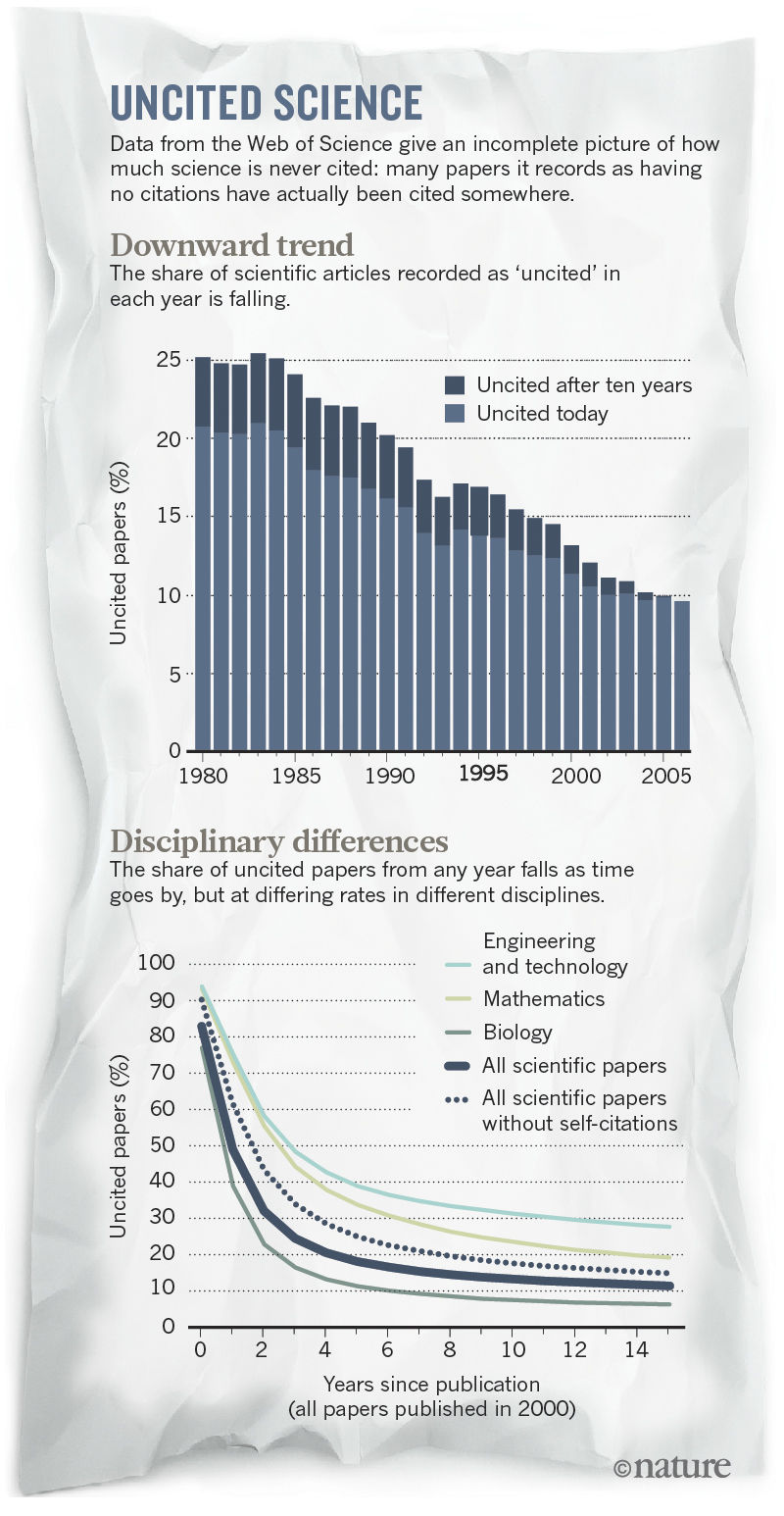

In 2008, Larivière and his colleagues took a fresh look at the Web of Science and reported not only that the share of completely uncited papers was lower than had been thought, but also that it had been declining for several decades. Nature asked Larivière and Cassidy Sugimoto of Indiana University Bloomington to update their analysis and comment on it for this article.

The new figures, which count research articles and reviews, suggest that in most fields the proportion of papers that attract no citations at all levels off five to ten years after publication, although the proportion differs between fields. Of all biomedical papers published in 2006, just 4% are uncited today; in chemistry the figure is 8%, and in physics it is closer to 11%. If self-citations are excluded, these numbers rise, in some disciplines by up to one and a half times. In engineering and technology, 24% of papers published in 2006 remain uncited, much more than in the natural sciences. This may reflect the applied nature of such articles, which solve specific problems rather than give other scientists a basis for further work, says Larivière.

The upper chart shows the share of uncited papers by year of publication.

The lower chart shows differences between fields; the dotted line indicates the overall curve for all fields.

If all papers are counted (39 million research papers across all fields recorded in the Web of Science from 1900 to the end of 2015), 21% of them have never been cited. Unsurprisingly, most uncited papers appeared in obscure journals. Almost all papers in well-known journals are eventually cited.

Impossible measurements

These data tell only part of the story. But filling in all the missing information on the scientific literature is a nearly impossible task.

Even checking a small set of papers is hard. In 2012, for example, Peter Heneberg, a biologist at Charles University in Prague, examined the Web of Science records of 13 Nobel laureates to test a wild-sounding claim in another article that about 10% of Nobel laureates' studies go uncited. His first Web of Science search gave a figure of about 1.6%. But then, using Google Scholar, Heneberg found that many of the remaining papers did have citations, which had gone unrecorded because of data-entry errors and typos. Further citations appeared in journals and books not indexed by the Web of Science. By the time Heneberg stopped searching, after about 20 hours of work, he had cut the share of uncited papers by another factor of five, to 0.3%.

Such shortcomings mean that the true number of never-cited papers cannot be determined: repeating Heneberg's manual check on a large scale would take far too long. The Web of Science indicates, for example, that 65% of humanities papers published in 2006 have never been cited. There is some truth to this: a great deal of humanities work goes uncited, in part because new research in the field depends less on accumulated earlier knowledge. But the Web of Science misrepresents the field, because it leaves out many of its journals and books.

The same problems undermine comparisons between countries. The Web of Science shows that papers by scientists in China, India and Russia are more likely to be ignored than those by scientists in the United States or Europe. But the database does not track many local journals whose inclusion would narrow this gap, says Larivière.

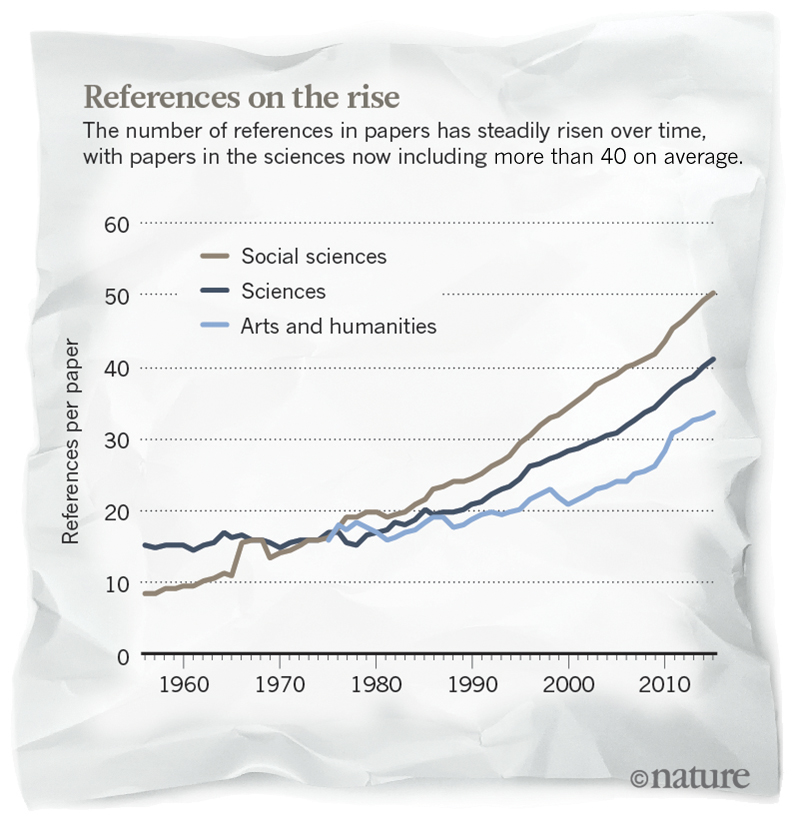

Despite the problems with absolute numbers, the decline in uncited papers in the Web of Science is a robust trend, says Larivière. The Internet has made it much easier to find and cite relevant work, he says, and the push for open access to articles may also be helping. But Larivière cautions against overstating the trend. His 2009 study [Wallace, M. L., Larivière, V. & Gingras, Y. J. Informetrics 3, 296–303 (2009)] argued that the share of uncited papers is falling because scientists publish more and more papers and pack ever more references into each of them. Ludo Waltman, a bibliometrics specialist (bibliometrics being the statistical analysis of the scientific literature) at Leiden University in the Netherlands, agrees. “I would not interpret these figures as a sign that more and more scientific papers are becoming useful.”

The number of references per paper is gradually increasing.

Waltman says that many papers only narrowly escape going uncited: independent calculations by Waltman and Larivière show that the Web of Science contains more papers cited just once or twice than papers with no citations at all. “And we know that many citations are actually perfunctory, made just to tick a box,” he says. Or they may reflect a “you cite me, I cite you” system among scientists, says Dahlia Remler, a health economist at the Marxe School of Public and International Affairs in New York. “Even highly cited studies can be part of a game that scientists play together without benefiting anyone,” she says.

Not entirely pointless

Some researchers may be tempted to dismiss uncited papers as unimportant. After all, if they had any value at all, wouldn't someone have cited them?

Perhaps, but not necessarily. Scientists are influenced by many more papers than they end up citing, says Michael MacRoberts, a botanist at Louisiana State University in Shreveport. In a 2010 article on the shortcomings of citation analysis, MacRoberts cited his own 1995 paper reporting the discovery of nodding club-moss (Palhinhaea cernua) in Texas. That was the first and only time anyone had cited the paper, yet the information in it made its way into plant atlases and large databases, and people who use those databases rely on this paper and thousands of other botanical reports. “The information in these so-called uncited articles is used; they are simply not cited,” he says.

What's more, uncited papers are still read. In 2010, researchers at the New York City Department of Health and Mental Hygiene published a study, carried out with dedicated software, that analysed problems with the performance of a saliva-based HIV test kit [Egger, J. R., Konty, K. J., Borrelli, J. M., Cummiskey, J. & Blank, S. PLoS ONE 5, e12231 (2010)]. A few years earlier, clinics had suspended use of the kit and later resumed it. The authors wanted to draw on the clinics' experience to explore whether software could monitor the kits' performance when problems arose.

Their paper, published in PLoS ONE, has never been cited. But it has been viewed more than 1,500 times and downloaded more than 500 times, says Joe Egger, a co-author who now works at the Duke Global Health Institute in Durham, North Carolina. “The purpose of this article was to improve public-health practice, not to advance science,” he says.

Other papers may go uncited because they close off unproductive lines of research, says Niklaas Buurma, a chemist at Cardiff University, UK. In 2003, Buurma and his colleagues published a paper on the “isochoric controversy”: a debate over whether it is worth trying to prevent a solvent from expanding or contracting during a reaction that takes place as the temperature changes. In theory, this technically demanding experiment could yield new insight into how solvents affect the rates of chemical reactions. But Buurma's tests showed that chemists learned nothing new from such experiments. “We set out to prove that something should not be done, and we showed it,” he says. “I am proud of this as a paper that was never meant to be cited,” he adds.

Speaking at the Lindau meeting, Oliver Smithies said that he valued his 1953 paper, even though he thought it had never been cited. He told the audience that the work helped him earn his degree and become a fully fledged scientist. In effect, it was the apprenticeship of a future Nobel laureate. “I really enjoyed doing it,” he said, “and I learned how to do science properly.” Smithies does in fact have at least one paper that has never been cited: a 1976 article showing that a certain immune-system gene is located on a particular human chromosome. But that paper mattered for other reasons, says geneticist Raju Kucherlapati of Harvard Medical School in Boston, Massachusetts, one of its co-authors. He says the article marked the start of a long collaboration with Smithies' laboratory that culminated in the work on mouse genetics that earned Smithies a share of the 2007 Nobel Prize in Physiology or Medicine. “For me,” says Kucherlapati, “the importance of this paper was that I got to know Oliver.”

Stories of uncited papers

A long wait

Any researcher hoping to be cited can take heart from the story of Albert Peck, whose 1926 paper describing a type of defect in glass was first cited in 2014. By the 1950s the paper had lost its usefulness, because manufacturers had learned how to make smooth glass free of the defects it described. But in 2014, materials scientist Kevin Knowles of the University of Cambridge, UK, came across the paper through Google while writing a review of the field; he was using these defects to scatter light. He has since cited it in four papers. “I like writing papers in which I can cite little-known articles,” he says.

Missed the wave

In 2016, Francisco Pina-Martins, a doctoral student at the University of Lisbon, published his paper on interpreting genetic-sequencing data, convinced that no one would cite it because the technology it dealt with, developed by 454 Life Sciences, was obsolete and no longer used. He had uploaded his data-analysis software to GitHub, a site where people share source code, in 2012, and the software has been cited in several papers. But publishing the study itself took four years because, he says, it dealt with a niche problem that the experts who reviewed the article simply did not understand.

Dead end

Many stories of uncited papers are sad. In 2010, neuroscientist Adriano Ceccarelli published a paper in PLoS ONE on gene regulation in the slime mould Dictyostelium. His grant applications to continue the research went unanswered, and the paper has never been cited. “Well, you know how it is with research: the work turned out to be a dead end,” he says. “My ideas are not considered worth funding. Now I just teach and wait for retirement. But I would be happy to start working again if I received funding tomorrow.”
