AI Accountability: The Role of the Explanatory Note

Artificial intelligence (AI) systems are becoming increasingly common. Lawyers and legislators are therefore debating how such systems should be regulated and who should be held responsible for their actions. The question calls for careful study and a balanced approach, because AI systems can generate huge amounts of data and are used in very different applications, from medical systems and car autopilots to predicting crimes and identifying potential offenders. At the same time, scientists are striving to create a “strong AI” capable of reasoning, which raises the question of how to establish whether its actions were intentional or should be treated as unintentional.

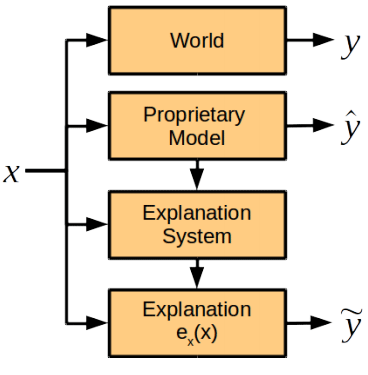

There are many possible ways to hold an AI system accountable, and several studies have been published on the topic. In a new paper, computer scientists, cognitive scientists and legal scholars from Harvard and Cambridge universities discuss one component of a future AI accountability system: the role of explanation, that is, how an AI system accounts for its actions. The authors conclude that the module responsible for explaining the system's actions should be kept separate from the rest of the AI system.

The authors describe the problems that arise when one tries to ask an AI system to explain its actions.

First, the ability to explain must be built in at the development stage; otherwise the AI may refuse, or be unable, to explain its actions.

Second, an AI system processes large amounts of data using complex proprietary algorithms or methods that it developed itself during training. If it tries to explain its actions as completely as possible, it may produce far more data than a person can understand. At the other extreme, it may offer an oversimplified model that does not reflect the actual reasons and motives behind its actions. In addition, the system's algorithms may be the intellectual property of the developer, so the method for producing an explanation should establish the reasons for the system's actions without revealing the underlying algorithms.

The researchers believe that an explanation is possible without disclosing the algorithms and rules that underlie the AI system. In the event of an incident, the system should be able to answer the following questions:

- What were the main factors behind the decision?

- Would changing a particular factor have changed the decision?

- Why did two similar cases lead to different decisions?

Answering these questions does not necessarily require disclosing trade secrets or the system's internal algorithms, yet the answers still give a clear picture of why the system acted as it did.
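The three questions above can, in principle, be answered by querying a system from the outside, without reading its internals. The following is a minimal sketch under stated assumptions, not the method from the paper: a hypothetical scoring model (`score`, `decide`, the factor names, weights and threshold are all invented) is treated as a black box, and three helper functions (also hypothetical) answer the questions by changing inputs and observing outputs. Resetting a factor to a baseline value is just one simple way to estimate its influence.

```python
from typing import Callable, Dict, List, Tuple

Case = Dict[str, float]

# --- Hypothetical proprietary model (a stand-in, not from the paper) ---
# The explanation functions below never read these weights; they only call
# score() and decide() from the outside.
_WEIGHTS = {"income": 0.5, "debt": -0.8, "years_employed": 0.3}
_THRESHOLD = 1.0


def score(case: Case) -> float:
    return sum(w * case[name] for name, w in _WEIGHTS.items())


def decide(case: Case) -> bool:
    return score(case) >= _THRESHOLD


# --- Explanation functions: black-box queries only ---
def main_factors(score_fn: Callable[[Case], float], case: Case,
                 baseline: Case, top_k: int = 2) -> List[Tuple[str, float]]:
    """Q1: rank factors by how far resetting each one to a baseline moves the score.

    Returning only the top_k factors keeps the answer short enough to read."""
    original = score_fn(case)
    effects = []
    for name in case:
        probe = dict(case)
        probe[name] = baseline[name]
        effects.append((name, original - score_fn(probe)))
    effects.sort(key=lambda item: abs(item[1]), reverse=True)
    return effects[:top_k]


def would_change(decide_fn: Callable[[Case], bool], case: Case,
                 factor: str, new_value: float) -> bool:
    """Q2: would setting one factor to new_value flip the decision?"""
    probe = dict(case)
    probe[factor] = new_value
    return decide_fn(probe) != decide_fn(case)


def explain_difference(decide_fn: Callable[[Case], bool],
                       a: Case, b: Case) -> List[str]:
    """Q3: which differing factors, copied one at a time from b into a, flip a's decision?"""
    return [name for name in a
            if a[name] != b[name] and would_change(decide_fn, a, name, b[name])]


alice = {"income": 4.0, "debt": 1.0, "years_employed": 2.0}
bob = {"income": 4.0, "debt": 2.5, "years_employed": 2.0}
zero = {name: 0.0 for name in alice}

print(decide(alice), decide(bob))                              # True False
print([name for name, _ in main_factors(score, alice, zero)])  # ['income', 'debt']
print(would_change(decide, alice, "debt", 2.5))                # True
print(explain_difference(decide, alice, bob))                  # ['debt']
```

Note that `explain_difference` only tests one factor at a time, so it can return an empty list when no single difference is decisive on its own; a fuller explainer would also need to consider combinations of factors.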

The authors also discuss when it is appropriate to demand an explanation from an AI. In essence, an explanation is warranted when its benefit outweighs the cost of obtaining it: “We believe that there are three conditions for situations where society considers it necessary to get an explanation from the decision maker. These are moral, social or legal reasons,” explains Finale Doshi-Velez, lead author of the paper.

At the same time, explanations should not be demanded from an AI in every situation. As noted above, doing so increases the risk of exposing trade secrets and places an extra burden on developers of AI systems. In other words, making AI constantly accountable to humans would slow the development of these systems, including in areas that matter to people.

Contrary to the widespread view of AI systems as black boxes whose reasoning is incomprehensible to humans, the authors are confident that a working module for explaining an AI's actions can be developed. Such a module would be integrated into the system but would operate independently of the decision-making algorithms rather than being subordinate to them.
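One way to picture a module that is integrated into the system yet independent of its decision-making algorithms is a design in which the decision-making code records every decision, while a separate explanation component reads those records and may re-query the model, but never sees the model's internals and cannot alter its outputs. The sketch below assumes exactly that design; the class names, record fields and toy model are hypothetical and are not taken from the paper. Logged records also make it possible to reproduce a past situation later.

```python
from dataclasses import dataclass
from types import MappingProxyType
from typing import Callable, Dict, List, Mapping, Tuple


@dataclass(frozen=True)
class DecisionRecord:
    """Immutable log entry with enough detail to reproduce the situation later."""
    case_id: str
    inputs: Mapping[str, float]
    decision: bool
    model_version: str


class DecisionSystem:
    """Hypothetical decision maker; its model is kept private."""

    def __init__(self, model: Callable[[Mapping[str, float]], bool], version: str):
        self._model = model
        self._version = version
        self._log: List[DecisionRecord] = []

    def decide(self, case_id: str, inputs: Dict[str, float]) -> bool:
        decision = self._model(dict(inputs))
        # Store a read-only copy so later edits to `inputs` cannot rewrite history.
        frozen = MappingProxyType(dict(inputs))
        self._log.append(DecisionRecord(case_id, frozen, decision, self._version))
        return decision

    def records(self) -> Tuple[DecisionRecord, ...]:
        # Snapshot of the log handed to the explanation module.
        return tuple(self._log)

    def query(self, inputs: Mapping[str, float]) -> bool:
        # Black-box probe: evaluates the model but is not logged as a real decision.
        return self._model(dict(inputs))


class ExplanationModule:
    """Sees only logged records and a query function, never the model itself.

    Python cannot enforce this boundary; a real system might run the explainer
    as a separate process or service."""

    def __init__(self, records: Callable[[], Tuple[DecisionRecord, ...]],
                 query: Callable[[Mapping[str, float]], bool]):
        self._records = records
        self._query = query

    def _find(self, case_id: str) -> DecisionRecord:
        for rec in self._records():
            if rec.case_id == case_id:
                return rec
        raise KeyError(case_id)

    def replay(self, case_id: str) -> bool:
        """Reproduce a logged case: does the model still give the logged decision?"""
        rec = self._find(case_id)
        return self._query(rec.inputs) == rec.decision

    def what_if(self, case_id: str, factor: str, value: float) -> bool:
        """Decision the model would give if one logged input had been different."""
        probe = dict(self._find(case_id).inputs)
        probe[factor] = value
        return self._query(probe)


def toy_model(x: Mapping[str, float]) -> bool:  # hypothetical stand-in model
    return x["income"] - x["debt"] >= 1.0


system = DecisionSystem(toy_model, version="v1")
system.decide("case-1", {"income": 3.0, "debt": 1.0})
explainer = ExplanationModule(system.records, system.query)
print(explainer.replay("case-1"))                # True
print(explainer.what_if("case-1", "debt", 2.5))  # False
print(len(system.records()))                     # 1 (probes are not logged)
```

Keeping probes out of the decision log is a design choice in this sketch: it lets the explainer ask counterfactual questions without those questions being mistaken for real decisions.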

The researchers also note that some aspects of explaining an action are easy for people and hard for machines, and vice versa.

Comparison of human and AI abilities to explain decisions

| | Person | AI |
|---|---|---|
| Advantages | Can explain an act after the fact (a posteriori) | The situation can be reproduced; no social pressure |
| Disadvantages | Explanations may be inaccurate and unreliable; subject to social pressure | The explanation module must be programmed in advance; requires additional taxonomies and expanded data storage |

Nevertheless, the expert group recommends holding AI systems to the same standard of explanation that applies to people today. This standard is set in US law: explanations are required, for example, in cases of strict liability, divorce and discrimination, for administrative decisions, and from judges and jurors, who must explain their decisions, although the level of detail required varies greatly from case to case.

However, if the reasons for an AI's actions go beyond what a person can understand, a different standard for its explanations may need to be developed in the future to keep AI accountable, the researchers believe.

The paper was published on November 3, 2017, on the arXiv.org preprint server (arXiv:1711.01134v1).
