RNNoise: Donate Your Noise to Train Mozilla's Neural Network

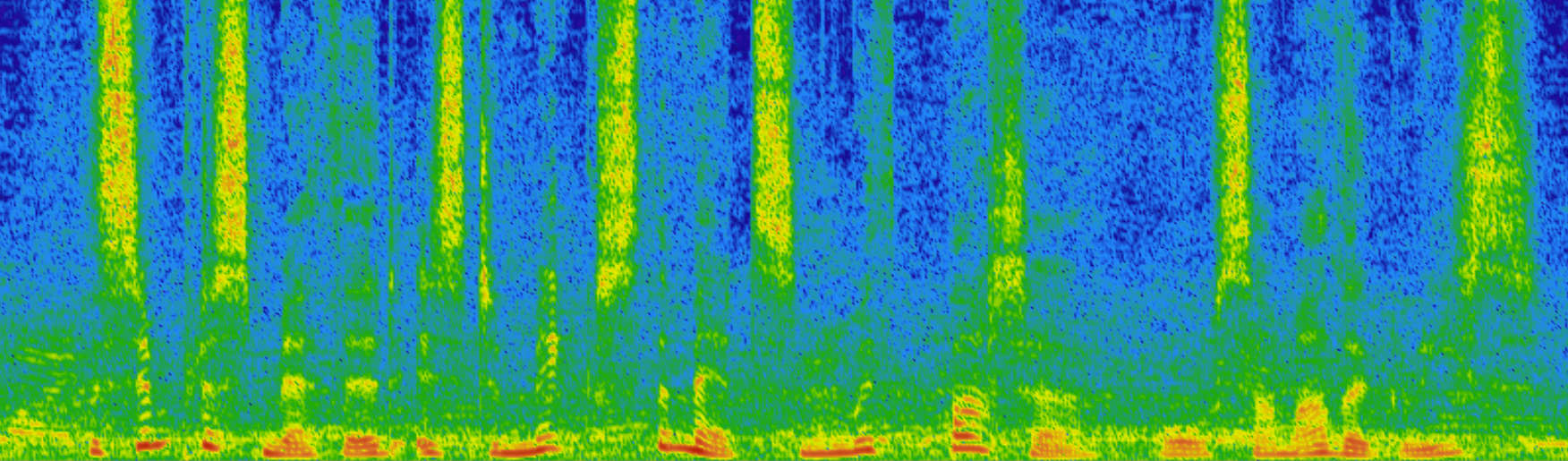

Spectrogram of recorded human speech at 15 dB SNR, before noise reduction

Spectrogram of sound after processing by the RNNoise neural network

Noise suppression has been an active research topic since at least the 1970s. Despite significant improvements in quality, the high-level architecture of these systems has remained almost unchanged. Most of them rely on a spectral estimation technique that depends on a noise spectrum estimator, which in turn is driven by a voice activity detector (VAD) or a similar algorithm. Each of the three components requires careful tuning and is hard to get right. That is why the deep-learning work by Mozilla and Xiph.org is so important. Their hybrid system, RNNoise, already achieves good noise suppression (see the source code and demo).

When creating RNNoise, the developers aimed for a small, fast algorithm that runs in real time even on a Raspberry Pi. They succeeded: RNNoise produces better results than even the most sophisticated modern filters.

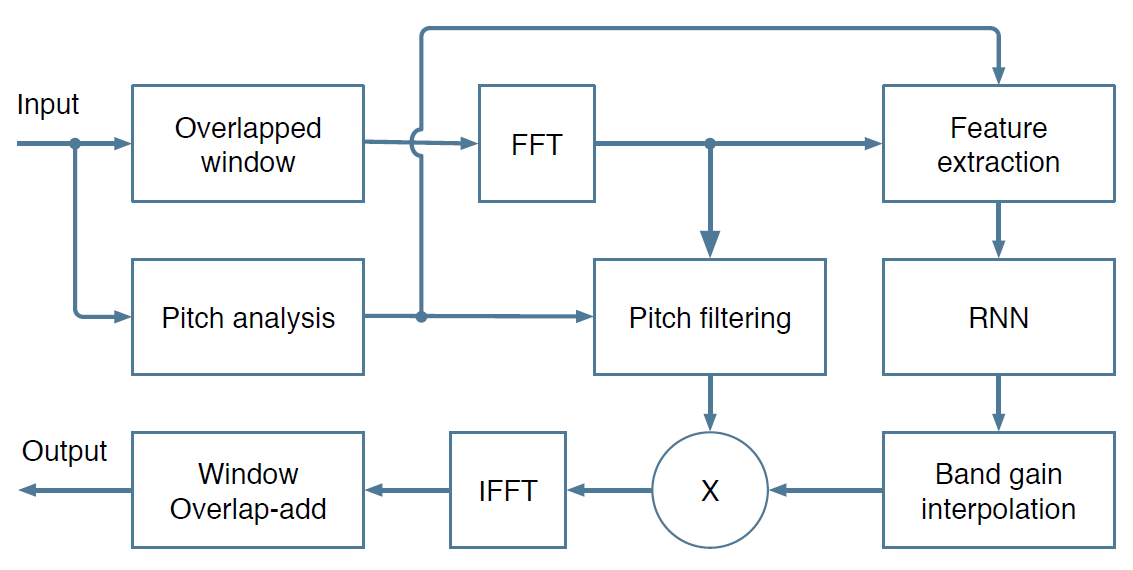

High-level structure of most noise reduction algorithms
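The conventional three-stage structure described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not code from RNNoise or SpeexDSP: the inputs are per-bin power spectra of successive frames, the VAD is a crude energy threshold, the noise estimate is a recursive average updated only in non-speech frames, and the gain is a Wiener-style rule with a floor. All function names and constants (`threshold`, `alpha`, `floor`) are hypothetical; they are exactly the kind of hand-tuned parameters that make these systems hard to adjust.

```python
# Minimal sketch of a conventional noise suppressor (not RNNoise or SpeexDSP code).
# Inputs are per-bin power spectra; all names and constants are illustrative assumptions.

def detect_voice(power, noise, threshold=3.0):
    """Crude energy VAD: speech if frame power exceeds `threshold` times the noise power."""
    return sum(power) > threshold * sum(noise)


def update_noise(noise, power, alpha=0.9):
    """Recursive (exponential) average of the noise power spectrum, bin by bin."""
    return [alpha * n + (1.0 - alpha) * p for n, p in zip(noise, power)]


def wiener_gains(power, noise, floor=0.1):
    """Wiener-style gain per bin, G = 1 - N/P, never allowed to drop below `floor`."""
    return [max(floor, 1.0 - n / p) if p > 0 else floor for n, p in zip(noise, power)]


def process_frame(power, noise):
    """VAD -> noise estimate -> gains for one frame. Returns (gains, updated_noise)."""
    if not detect_voice(power, noise):
        noise = update_noise(noise, power)  # adapt the noise estimate only when no speech is detected
    return wiener_gains(power, noise), noise


noise = [1.0, 1.0, 1.0]                                # initial noise estimate
gains, noise = process_frame([1.2, 0.9, 1.1], noise)   # quiet frame: noise estimate is updated
gains, noise = process_frame([10.0, 1.0, 4.0], noise)  # loud frame: treated as speech
print([round(g, 3) for g in gains])
```

A quiet frame updates the noise estimate, while a loud frame is treated as speech and leaves it untouched. A wrong VAD decision therefore corrupts the noise estimate, which in turn skews the gains; this coupling is one reason the three components are difficult to tune in isolation.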

Neural networks have been used for noise suppression before, and in recent years this has been a popular area of research. However, most of that work targets applications such as automatic speech recognition, where latency and computing power are not critical. The Mozilla project, in contrast, focuses on real-time applications such as video conferencing, and on processing sound at the full 48 kHz sampling rate.

To achieve this goal, Mozilla took a hybrid approach: it keeps well-established noise suppression techniques and uses deep learning to replace the components that are hard to tune in conventional systems. The method is shown in the flowchart.

Signal processing block diagram

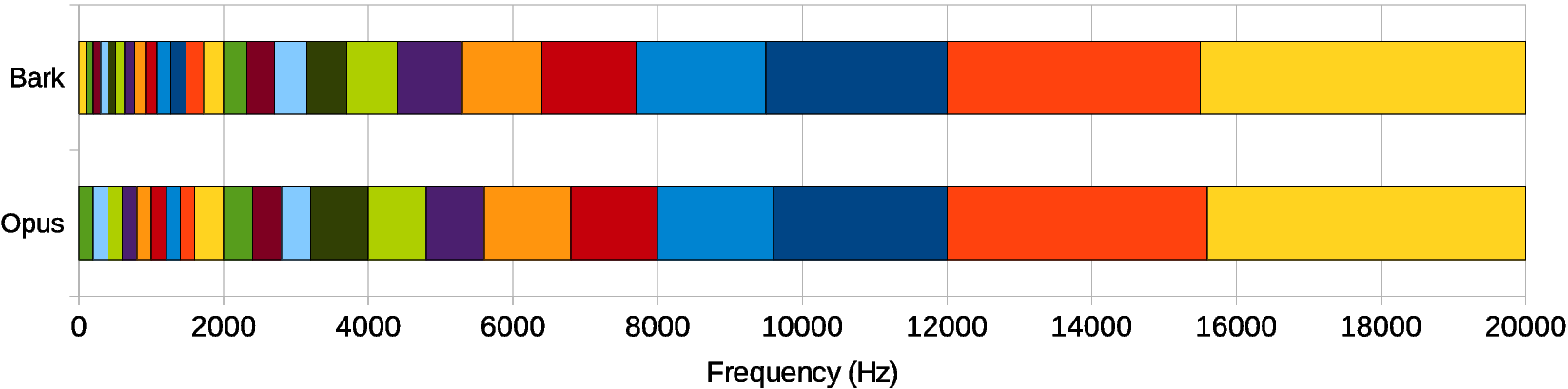
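The division of labour in the flowchart can be illustrated with a minimal Python sketch. It assumes a single frame with no windowing or overlap-add, uses a naive DFT instead of an FFT, and takes a hypothetical `estimate_gains` callback that stands in for the trained network. It also simplifies RNNoise in an important way: the real network receives band-level features and returns one gain per band, not one per frequency bin.

```python
import cmath

# Minimal sketch of the hybrid split (not RNNoise's actual code):
# classic DSP does analysis and synthesis, a model only supplies the gains.


def dft(x):
    """Naive O(N^2) discrete Fourier transform; a real system would use an FFT."""
    n = len(x)
    return [sum(x[t] * cmath.exp(-2j * cmath.pi * k * t / n) for t in range(n))
            for k in range(n)]


def idft(spectrum):
    """Inverse DFT, keeping only the real part because the input frame is real-valued."""
    n = len(spectrum)
    return [(sum(spectrum[k] * cmath.exp(2j * cmath.pi * k * t / n) for k in range(n)) / n).real
            for t in range(n)]


def denoise_frame(frame, estimate_gains):
    """Analyse one frame, ask the (hypothetical) model for gains, apply them, resynthesize."""
    spectrum = dft(frame)
    power = [abs(c) ** 2 for c in spectrum]  # features handed to the model
    gains = estimate_gains(power)            # the learned component: one gain per bin here
    return idft([g * c for g, c in zip(gains, spectrum)])


frame = [0.5, -1.0, 0.25, 2.0]
passthrough = denoise_frame(frame, lambda power: [1.0] * len(power))  # unit gains: frame is unchanged
```

Because the model only maps features to gains, it can be swapped (a hand-written Wiener rule, a trained network) without touching the transform code. For a real input frame, the gains for bins k and n - k should be equal so that the resynthesized signal stays real.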

This hybrid approach differs from end-to-end networks, in which a neural network performs all or nearly all of the sound processing. Such systems have proven effective, but the RNNoise developers consider them too complex and resource-intensive. For example, the Google noise suppression RNN (2012) estimates the frequency bins directly. To process 8 kHz audio, it uses 6144 hidden units and about 10 million weights. Scaling it to 48 kHz speech with 20 ms frames would require more than 400 outputs (covering 0 to 20 kHz), far more than a Raspberry Pi can handle. Mozilla's goal was a simple, fast model, hence the hybrid approach.

In addition, the developers avoided working directly with samples or with the raw spectrum. Instead, they divided the spectrum into 22 bands and analyzed those, rather than the 480 complex spectral values they would otherwise have to process. The 22 bands follow the Bark scale, a psychoacoustic scale that reflects how the human ear perceives frequency. The Opus codec uses a similar layout, and Mozilla borrowed it with only slight modifications.
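The arithmetic behind these numbers, and the idea of working on bands instead of bins, can be sketched as follows. A 20 ms frame at 48 kHz holds 960 samples, so its spectrum has 481 bins (DC through 24 kHz) spaced 50 Hz apart, of which 400 lie below 20 kHz. The band edges below are modeled on the Opus-style layout that RNNoise appears to use; treat the exact values, the triangular band shape, and the helper names `band_energies` and `interpolate_gains` as assumptions of this sketch rather than a reference implementation.

```python
# Sketch of 48 kHz / 20 ms frame arithmetic and 22 triangular bands (assumed layout).
SAMPLE_RATE = 48_000
FRAME_SIZE = SAMPLE_RATE * 20 // 1000  # 20 ms -> 960 samples
BIN_HZ = SAMPLE_RATE / FRAME_SIZE      # 50.0 Hz between FFT bins
NUM_BINS = FRAME_SIZE // 2 + 1         # 481 bins from DC to 24 kHz

# Band edges in 200 Hz units (assumed Opus-style layout); 100 * 200 Hz = 20 kHz.
EDGES_200HZ = [0, 1, 2, 3, 4, 5, 6, 7, 8, 10, 12, 14, 16, 20, 24, 28, 34, 40, 48, 60, 78, 100]
BINS_PER_UNIT = int(200 / BIN_HZ)      # 4 bins per 200 Hz unit
EDGES = [e * BINS_PER_UNIT for e in EDGES_200HZ]
NUM_BANDS = len(EDGES)                 # 22 overlapping triangular bands, one centred on each edge


def band_energies(power):
    """Split each bin's power between the two nearest band centres (triangular weighting)."""
    energy = [0.0] * NUM_BANDS
    for i in range(NUM_BANDS - 1):
        width = EDGES[i + 1] - EDGES[i]
        for j in range(width):
            frac = j / width
            p = power[EDGES[i] + j]
            energy[i] += (1.0 - frac) * p
            energy[i + 1] += frac * p
    return energy


def interpolate_gains(band_gains):
    """Expand 22 band gains to per-bin gains; bins from 20 kHz upward are set to zero."""
    gains = [0.0] * NUM_BINS
    for i in range(NUM_BANDS - 1):
        width = EDGES[i + 1] - EDGES[i]
        for j in range(width):
            frac = j / width
            gains[EDGES[i] + j] = (1.0 - frac) * band_gains[i] + frac * band_gains[i + 1]
    return gains


energies = band_energies([1.0] * NUM_BINS)     # 22 values, one per band
gains = interpolate_gains([0.5] * NUM_BANDS)   # e.g. 22 model outputs expanded to 481 bins
```

The network only ever has to produce 22 numbers per frame instead of roughly 400, and the interpolation step spreads each band gain smoothly over the bins it covers.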

This approach has proven its effectiveness. The program consumes only a modest share of the computing resources of the ARM Cortex-A53 processor operating at 1.2 GHz (Raspberry Pi 3).

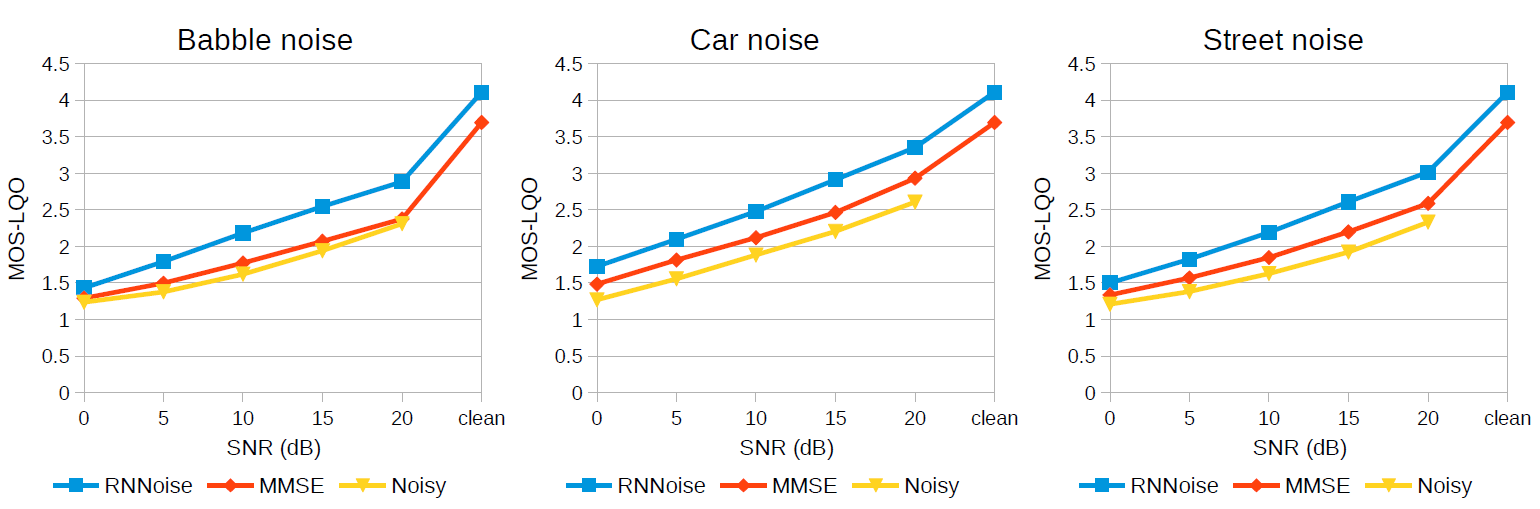

Comparative tests show that using a neural network significantly improves noise suppression quality. The charts compare RNNoise with the MMSE-based SpeexDSP library on background chatter (left), car noise (center), and street noise (right).

The developers are now asking users to donate their noise for science, that is, to help train the neural network. You can record noise directly online, in any environment where people hold voice conversations: your car, your office, the street, or anywhere else you might talk on the phone or over a computer. On the recording page, just click the “Record” button and stay silent for one minute. For the recording to be useful for training, you also need to specify the environment in which you recorded the silence (noise).

A paper (PDF) describing RNNoise has not yet been submitted for publication in a scientific journal.
