Fundamental machine learning limitations

Recently, my aunt sent e-mails to her colleagues with the subject “math problem! What is the correct answer? ”The letter was a deceptively simple puzzle:

1 + 4 = 5

2 + 5 = 12

3 + 6 = 21

8 + 11 =?

For her, the decision was obvious. But her colleagues decided that the correct decision was theirs - not congruent with her decision. Was the problem with one of their answers, or with the puzzle itself?

My aunt and her colleagues stumbled upon the fundamental problem of machine learning, a discipline that studies learning computers. Virtually all the training that we expect from computers - and which we do ourselves - is to reduce the information to the basic laws, on the basis of which it is possible to draw conclusions about something unknown. And her mystery was the same.

For a man, the task is to find any pattern. Of course, our intuition limits the range of our guesses. But computers do not have intuition. From the point of view of a computer, the difficulty in recognizing patterns is in their abundance: if there are an infinite number of equally legitimate patterns, because of which some are correct, and some are not?

And this problem has recently passed into a practical plane. Until the 1990s, AI systems were rarely engaged in machine learning. Suppose the Deep Thought chess computer, the predecessor of Deep Blue, did not learn chess by trial and error. Instead, chess grandmasters and programming wizards carefully created the rules by which it was possible to understand whether a chess position is good or bad. Such a meticulous manual adjustment was typical for the “expert systems” of that time.

In order to tackle the riddle of my aunt with the help of an expert systems approach, it is necessary for a person to squint and look at the first three rows of examples and notice the following pattern in them:

1 * (4 + 1) = 5

2 * (5 + 1) = 12

3 * (6 + 1) = 21

Then the person would give the computer a command to follow the laws x * (y + 1) = z. Applying this rule to the last result, we get the solution - 96.

Despite the early successes of expert systems, the manual labor required for their development, adjustment and updating became very heavy. Instead, the researchers paid attention to the development of machines capable of recognizing patterns on their own. The program could, for example, study a thousand photographs or market transactions and derive from them statistical signals corresponding to a person in a photo or a surge in prices in the market. This approach quickly became dominant, and has since been at the heart of everything, from automatic mail sorting and spam filtering to credit card fraud detection.

But, despite all the successes, these MO systems require a programmer somewhere in the process. Take as an example the riddle of my aunt. We assumed that in each row there are three significant components (three numbers in a row). But there is a potential fourth element in it - the result from the previous line. If this property of the string is valid, then another plausible pattern is manifested:

0 + 1 + 4 = 5

5 + 2 + 5 = 12

12 + 3 + 6 = 21

According to this logic, the final answer should be equal to 40.

What is the pattern is true? Naturally, both - and none of them. It all depends on what patterns are valid. You can, for example, build a pattern by taking the first number, multiplying it by the second, adding one-fifth of the sum of the previous answer and the three, and round it all to the nearest integer (very strange, but it works). And if we allow the use of properties related to the appearance of numbers, there may be a sequence associated with serifs and lines. The search for patterns depends on the assumptions of the observer.

The same is true for MO. Even when the machines train themselves, the preferred patterns are chosen by people: should the face recognition software contain explicit rules if / that, or should it regard each feature as additional evidence in favor of or against every possible person who owns the person? What features of the image should process software? Does it need to work with individual pixels? Or maybe with the edges between light and dark areas? The choice of such options limits what patterns the system deems probable or even possible. The search for this ideal combination has become a new work of MO specialists.

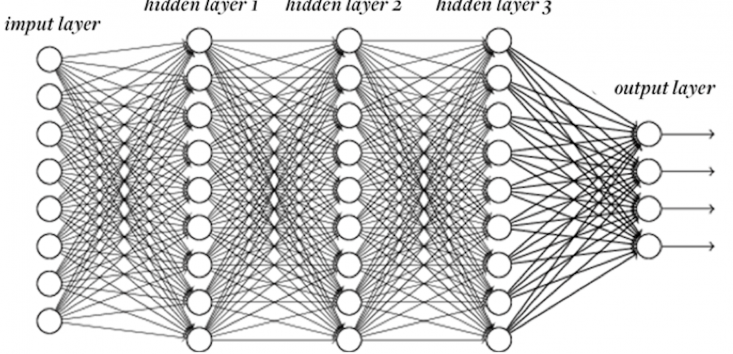

But the automation process did not stop there. In the same way as programmers used to forget to write the rules of work, now they were reluctant to develop new features. “Wouldn’t it be great if the computer itself could figure out what features it needs?” So they developed a neural network with in-depth training — MO technology that can independently draw conclusions about high-level properties based on simpler information. Feed the neural network a set of pixels, and she learns to take into account edges, curves, textures - and all this without direct instructions.

And what, the programmers have lost their jobs because of One Algorithm, To Edit All?

Not yet. Neural networks are still unable to ideally approach any tasks. Even in the best cases, they have to adjust. The neural network consists of layers of "neurons", each of which performs calculations based on input data and gives the result to the next layer. But how many neurons are needed and how many layers? Should each neuron accept input from each neuron of the previous level, or should some neurons be more selective? What transformation should each neuron perform on the input data to produce a result? And so on.

These questions limit attempts to apply neural networks to new tasks; a neural network that perfectly recognizes faces is completely incapable of automatic translation. And again, the elements of construction chosen by man clearly push the network to certain laws, leading it away from others. A knowledgeable person understands that not all patterns are created equal. Programmers will not remain without work yet.

Of course, the next logical step will be neural networks, independently guessing how many neurons need to be turned on, what connections to use, etc. Research projects on this topic have been underway for many years.

How far can it go? Will the machines learn to work independently so well that the external adjustment will turn into an old-fashioned relic? In theory, one can imagine an ideal universal student — one who can solve everything for himself, and always chooses the best scheme for the chosen task.

But in 1996, computer scientist David Walpert proved the impossibility of the existence of such a student. In his famous “free-lunch absence theorems,” he showed that for any pattern that a student learns well, there is a pattern that he will learn terribly. This returns us to the mystery of my aunt - to the infinite number of patterns that may arise from the final data. Selecting a learning algorithm means choosing patterns that the machine will handle poorly. Perhaps all tasks, for example, pattern recognition, will end up in one comprehensive algorithm. But no learning algorithm can learn everything equally well.

This makes machine learning unexpectedly similar to the human brain. Although we like to consider ourselves clever, our brains do not study perfectly either. Each part of the brain is carefully tuned by evolution to recognize certain patterns — be it what we see, the language we hear, or the behavior of physical objects. But we are not doing so well with the search for patterns in the stock market; here cars beat us.

The history of machine learning has many regularities. But the most likely will be the following: we will train the machines to learn for many more years.

All Articles