25 microchips that shook the world

In microchip design, as in life, small things sometimes add up to big things. Dream up a clever microcircuit, get it sculpted in a sliver of silicon, and your little creation may unleash a technological revolution. It happened with the Intel 8088 microprocessor. And with the Mostek MK4096 4-kilobit DRAM. And with the Texas Instruments TMS32010 digital signal processor.

Among the many great chips that have emerged from fabs during the half-century reign of the integrated circuit, a small group stands out. Their designs proved so advanced, so unusual, so ahead of their time, that we have run out of technology clichés to describe them. Suffice it to say that they gave us the technology that made our brief and otherwise tedious existence in this universe bearable.

We have compiled a list of 25 ICs that, in our opinion, deserve a place of honor on the mantelpiece of the house that Jack Kilby and Robert Noyce (the inventors of the integrated circuit) built. Some have become enduring objects of worship among chip lovers: the Signetics 555 timer, for example. Others, such as the Fairchild 741 operational amplifier, became textbook design examples. Some, like Microchip Technology's PIC microcontrollers, have sold in the billions and are still selling. A few special chips, such as Toshiba's flash memory, created new markets. And at least one has become a geek reference in pop culture. Question: what processor powers Bender, the alcoholic, chain-smoking, foul-mouthed robot from “Futurama”? Answer: the MOS Technology 6502.

What these chips have in common is that they are part of the reason engineers don't get out enough.

Of course, lists like this are bound to be contentious. Some may accuse us of capricious choices and of glaring omissions. Why did we choose the Intel 8088 and not the first microprocessor, the 4004? Where is the radiation-hardened, military-grade RCA 1802 processor, once the brain of many spacecraft?

If you take only one thing away from this introduction, let it be this: our list is what remained after many weeks of debate between the author, his trusted sources, and several IEEE Spectrum editors. We did not try to compile an exhaustive catalog of every chip that was a technological breakthrough or a notable commercial success. Nor did we include chips that were great in their own right but so obscure that only the five engineers who designed them remember them. We concentrated on chips that proved unique, intriguing, awe-inspiring. We chose chips of different types, from large and small companies, some created long ago. Above all, we looked for ICs that affected the lives of many people: chips that became part of world-shaking gadgets, symbolized technological trends, or simply delighted people.

For each chip, we describe how it came about and why it was innovative, with comments from the engineers and executives involved in its development. This selection is not meant for a historical archive, so we did not arrange the chips chronologically, by type, or by importance. We scattered them through the article arbitrarily, in a way we think makes for a good read. History, after all, is messy.

Signetics NE555 Timer (1971)

It was the summer of 1970. Chip designer Hans Camenzind probably knew a thing or two about Chinese restaurants, because his small office was squeezed between two of them in Sunnyvale, California. Camenzind was working as a consultant for Signetics, a local semiconductor firm. The economy was tanking. He was making less than $15,000 a year and had a wife and four children at home. He urgently needed to invent something worthwhile.

And so he did. In fact, he invented one of the greatest chips of all time. The 555 was a simple IC that could work as a timer or an oscillator. It would become the best seller among analog semiconductor circuits, ending up in kitchen appliances, toys, spacecraft, and thousands of other things.

“And it almost didn't get made,” recalls Camenzind, who at 75 is still designing chips, although nowhere near a Chinese restaurant.

The idea for the 555 came to him while he was working on a phase-locked loop. With minor modifications, the circuit could work as a simple timer: you trigger it, and it runs for a set period of time. It sounds simple, but at the time nothing like it existed.
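
The "runs for a set period" behavior can be made concrete with the standard textbook timing relations for 555-style circuits. The sketch below is illustrative only: the function names and component values are hypothetical, and the formulas are the usual idealized approximations rather than anything taken from Camenzind's own design notes.

```python
import math

def monostable_pulse_seconds(r_ohms, c_farads):
    """One-shot mode: the output stays high while C charges through R
    from 0 to 2/3 of the supply voltage, which takes ln(3) * R * C."""
    return math.log(3) * r_ohms * c_farads  # ln(3) is about 1.1

def astable_frequency_hz(ra_ohms, rb_ohms, c_farads):
    """Oscillator mode: C charges through RA + RB and discharges through RB,
    swinging between 1/3 and 2/3 of the supply voltage."""
    return 1.0 / (math.log(2) * (ra_ohms + 2 * rb_ohms) * c_farads)

# Hypothetical component values, chosen only for illustration.
pulse = monostable_pulse_seconds(100e3, 10e-6)   # 100 kilohms, 10 microfarads
freq = astable_frequency_hz(1e3, 10e3, 100e-9)   # 1 k, 10 k, 100 nanofarads
print(f"one-shot pulse: {pulse:.2f} s, oscillator: {freq:.0f} Hz")
```

Both modes come down to one external capacitor and one or two resistors, which is a large part of why the chip is so easy to apply.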

At first, the Signetics engineering department rejected the idea. The company was already selling components that customers could use to build timers. That could have been the end of it, but Camenzind insisted. He went to see Art Fury, the company's marketing manager. Fury liked the idea.

Camenzind spent almost a year testing prototypes on breadboards, drawing the components on paper, and cutting Rubylith masking film. “It was all done by hand, without any computers,” he says. The final circuit had 23 transistors, 16 resistors, and 2 diodes.

When the 555 hit the market in 1971, it was a sensation. In 1975, Signetics was absorbed by Philips Semiconductors, now known as NXP, which says sales have run into the billions. Engineers still use the 555 to build useful electronic modules, as well as less useful things, such as “Knight Rider”-style lights for car grilles.

Texas Instruments TMC0281 Speech Synthesizer (1978)

If it were not for the TMC0281, E.T. would never have been able to “phone home.” That is because the TMC0281, the first single-chip speech synthesizer, was the heart (or should we say the mouth?) of the Speak & Spell learning toy from Texas Instruments. In the Steven Spielberg film, the flat-headed alien uses the toy to build an interplanetary communicator. (For the record, he also uses a coat hanger, a coffee can, and a circular saw.)

The TMC0281 synthesized voice using linear predictive coding. The sound came out as a mixture of buzzing, hissing, and popping. It was an unexpected solution to a problem that was thought “impossible to solve in an integrated circuit,” says Gene Frantz, one of the four engineers who developed the toy, who still works at TI. Variants of the chip were used in Atari arcade games and in Chrysler's K-cars. In 2001, TI sold off its line of speech-synthesis chips, and production ceased in 2007. But if you ever need to make a very, very long-distance phone call, you can find Speak & Spell toys in excellent condition on eBay for under $50.
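
To give a feel for how buzzing and hissing turn into speech-like sound, here is a minimal sketch of linear predictive synthesis: an excitation signal (a pulse train for voiced "buzz", noise for unvoiced "hiss") is fed through an all-pole filter. The article does not describe the chip's actual filter structure or coefficients, so the direct-form filter, the function names, and the two coefficients below are assumptions made purely for illustration.

```python
import random

def lpc_synthesize(coeffs, excitation):
    """All-pole synthesis filter: s[n] = e[n] + sum_k coeffs[k] * s[n-1-k]."""
    out = []
    for n, e in enumerate(excitation):
        acc = e
        for k, a in enumerate(coeffs):
            if n - 1 - k >= 0:
                acc += a * out[n - 1 - k]
        out.append(acc)
    return out

def buzz(n_samples, pitch_period):
    """Voiced excitation: one unit pulse every pitch_period samples."""
    return [1.0 if n % pitch_period == 0 else 0.0 for n in range(n_samples)]

def hiss(n_samples, seed=0):
    """Unvoiced excitation: uniform white noise."""
    rng = random.Random(seed)
    return [rng.uniform(-1.0, 1.0) for _ in range(n_samples)]

# Hypothetical two-pole resonator (poles inside the unit circle, so it is stable).
coeffs = [1.3, -0.8]
voiced = lpc_synthesize(coeffs, buzz(200, 50))
unvoiced = lpc_synthesize(coeffs, hiss(200))
```

In a real synthesizer, the coefficients and the choice between buzz and hiss would change every few milliseconds to track the recorded speech.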

MOS Technology 6502 Microprocessor (1975)

When the chubby-faced geek plugged this chip into a computer and booted it up, the universe skipped a beat. The geek was Steve Wozniak, the computer was the Apple I, and the chip was the 6502, an 8-bit microprocessor developed at MOS Technology. The chip went on to become the main brain of hugely influential computers such as the Apple II, the Commodore PET, and the BBC Micro, not to mention game systems from Nintendo and Atari. Chuck Peddle, one of the chip's creators, recalls how they introduced the 6502 at a trade show in 1975. “We had two glass jars filled with chips,” he says, “and my wife sat there selling them.” Buyers came in crowds. The reason: the 6502 was not only faster than its competitors but also much cheaper. It cost $25 at a time when the Intel 8080 and the Motorola 6800 cost about $200 each.

The breakthrough, according to Bill Mensch, who created the 6502 together with Peddle, was a minimal instruction set combined with a new fabrication process “that yielded 10 times as many good chips as the competition.” The 6502 almost single-handedly forced processor prices down, which helped launch the personal computer revolution. Some embedded systems still use it. Interestingly, the 6502 also serves as the electronic brain of Bender, the robot from “Futurama,” as revealed in a 1999 episode.

Texas Instruments TMS32010 Digital Signal Processor (1983)

The great state of Texas is known for many great things, such as the cowboy hat, country-fried steak, Dr Pepper, and the TMS32010 digital signal processor. It was not the first DSP (that was Western Electric's DSP-1, which appeared in 1980), but it was the fastest. It could perform a multiplication in 200 ns, a feat that gave engineers a pleasant tingle. What's more, it could execute instructions from both on-chip ROM and external RAM, a capability competitors lacked. “It made software development for the TMS32010 flexible, just as with microcontrollers and microprocessors,” says Wanda Gass, a member of the DSP design team, who still works at TI. The chip cost $500, and 1000 units were sold in the first year. Sales gradually grew, and the DSP became part of modems, medical devices, and military systems. Oh, and one more application: Worlds of Wonder's Julie, a creepy Chucky-style doll that could talk and sing. The chip was the first of a large DSP family that earned TI a fortune.

Microchip Technology PIC 16C84 Microcontroller (1993)

In the early 1990s, the vast universe of 8-bit microcontrollers belonged to one company, the almighty Motorola. Then a small competitor came along with the unremarkable name of Microchip Technology. It developed the PIC 16C84, which incorporated a type of memory called EEPROM, for electrically erasable programmable read-only memory. Unlike its predecessor, the EPROM, it did not need ultraviolet light to be erased. “After that, users could change their code on the fly,” says Rod Drake, the chip's lead designer, now a director at Microchip. Better still, the chip cost $5, a quarter of the price of the alternatives, most of which were made by Motorola. The 16C84 found its way into smart cards, remote controls, and wireless car keys. It was the start of a line of microcontrollers that became electronics superstars among Fortune 500 companies and home hobbyists alike. Some 6 billion of these chips have been sold, for use in industrial controllers, unmanned aerial vehicles, digital pregnancy tests, chip-controlled fireworks, LED jewelry, and a septic-tank level sensor called the Turd Alert.

Fairchild Semiconductor μA741 Op-Amp (1968)

Operational amplifiers are the sliced bread of analog design. You can always use a few, and you can combine them with almost anything and get something satisfying. Designers use them to build audio and video preamplifiers, voltage comparators, precision rectifiers, and many other systems found in everyday electronics.

In 1963, a 26-year-old engineer named Robert Widlar designed the first monolithic op-amp IC, the μA702, at Fairchild Semiconductor. It sold for $300 apiece. Widlar followed up with an improved design, the μA709, cutting the cost to $70 and making the chip a huge commercial success. The story goes that the freewheeling Widlar then asked for a raise and, when he did not get it, quit. National Semiconductor was only too happy to hire a man who was helping to establish the discipline of analog IC design. In 1967, Widlar improved the op-amp again with the LM101.

While Fairchild managers worried about the sudden competition, a recent hire, David Fullagar, carefully studied the LM101 in the company's lab. He realized that the chip, however ingenious, had a couple of flaws. To avoid frequency distortions, engineers had to attach an external capacitor to it. In addition, the input stage of the IC, the so-called front end, was too sensitive to noise in some chips because of inconsistent quality in semiconductor fabrication.

“The front end looked kind of thrown together,” he says.

Fullagar set to work on his own design. He pushed the limits of semiconductor manufacturing by building a 30 pF capacitor into the chip. But how to improve the front end? The solution was simple (“it just came to me while I was driving”) and consisted of a couple of additional transistors. They made the amplification smoother and more consistent from chip to chip.

Fullagar took his design to the head of the lab, a man named Gordon Moore, who sent it on to the company's commercial division. The new chip, the μA741, became the standard for op-amps. This IC, along with variants made by Fairchild's competitors, has sold in the hundreds of millions. Today, for the $300 once asked for its predecessor, the 702, you can buy about a thousand 741 chips.

Intersil ICL8038 Waveform Generator (circa 1983)

Critics scoffed at the ICL8038's limited performance and its tendency to behave erratically. The chip, a generator of sine, square, triangular, and other waveforms, was indeed somewhat temperamental. But engineers soon learned how to use it reliably, and the 8038 became a hit, eventually selling in the hundreds of millions and finding countless applications, among them Moog synthesizers and the “blue boxes” that phreakers used to cheat the telephone companies in the 1980s. The part was so popular that the company released a document titled “Everything You Always Wanted to Know About the ICL8038.” A sample question from it: “Why does connecting pin 7 to pin 8 give the best temperature performance?” Intersil discontinued the 8038 in 2002, but hobbyists still seek it out to build homemade function generators and theremins.

Western Digital WD1402A UART (1971)

Gordon Bell is known for the PDP series of minicomputers, launched in the 1960s at Digital Equipment Corp. He also invented a less well-known but no less important device: the universal asynchronous receiver/transmitter, or UART. Bell needed circuitry to connect a Teletype to a PDP-1, which required converting parallel signals into serial ones and vice versa. His implementation consisted of 50 discrete components. Western Digital, a small company that made calculator chips, offered to build a UART on a single chip. The company's founder, Al Phillips, still recalls how his vice president of engineering showed him the film sheets with the design, ready for fabrication. “I looked at them for a minute and spotted an open circuit,” says Phillips. “The vice president got hysterical.” Western Digital introduced the WD1402A around 1971, and other versions soon followed. Today UARTs are widely used in modems, computer peripherals, and other equipment.
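
The parallel-to-serial conversion at the heart of a UART can be sketched in a few lines. The frame format below (one start bit, eight data bits sent least significant bit first, one stop bit, no parity) is a common convention assumed here for illustration; the article does not say how the WD1402A was configured, and the function names are hypothetical.

```python
def uart_frame(byte):
    """Parallel to serial: wrap one byte in a start bit (0) and a stop bit (1)."""
    if not 0 <= byte <= 0xFF:
        raise ValueError("expected a value that fits in 8 bits")
    data_bits = [(byte >> i) & 1 for i in range(8)]  # least significant bit first
    return [0] + data_bits + [1]

def uart_unframe(bits):
    """Serial to parallel: check the framing bits and rebuild the byte."""
    if len(bits) != 10 or bits[0] != 0 or bits[9] != 1:
        raise ValueError("framing error")
    return sum(bit << i for i, bit in enumerate(bits[1:9]))

frame = uart_frame(0x41)        # the ASCII code for "A"
recovered = uart_unframe(frame)
```

Because the line idles high, the falling edge of the start bit tells the receiver when a character begins, so no shared clock wire is needed; that is the "asynchronous" part of the name.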

Acorn Computers ARM1 Processor (1985)

In the early 1980s, Acorn Computers was a small company with a big product. Based in Cambridge, England, the company had sold over 1.5 million BBC Micro desktop computers. It was time to develop a new model, and the engineers decided to design their own 32-bit microprocessor. They called it the Acorn RISC Machine, or ARM. The engineers knew the task would not be easy. They half expected that insurmountable problems would force them to abandon the project. “The team was so small that every design decision had to favor simplicity, or we'd never finish it!” says one of the designers, Steve Furber, now a professor at the University of Manchester. In the end, simplicity became the product's defining feature. The ARM was small, consumed little power, and was easy to program. Sophie Wilson, who designed the instruction set, still remembers the first time they tested the chip in a computer. “We typed 'PRINT PI', and it gave the right answer,” she says. “We opened the champagne.” In 1990, Acorn spun off ARM as a separate company, and the architecture went on to dominate the field of embedded 32-bit processors. More than 10 billion ARM cores have been used in all sorts of gadgets, including one of Apple's most embarrassing flops, the Newton handheld, and one of its most resounding successes, the iPhone.

Kodak KAF-1300 Image Sensor (1986)

The Kodak DCS 100 digital camera, which appeared in 1991, cost $13,000 and required a 5 kg external storage unit that users had to carry on a shoulder strap. Still, the camera's electronics, housed inside a Nikon F3 body, included one impressive component: a fingernail-size chip capable of capturing images at a resolution of 1.3 megapixels, enough for acceptable 5-by-7-inch prints. “At that time, 1 megapixel was a magic number,” says Eric Stevens, the chip's lead designer, who still works at Kodak. The chip, a true two-phase charge-coupled device, became the basis for future CCD sensors and helped start the digital photography revolution. And what was the very first photo taken with the KAF-1300? “Um,” says Stevens, “we just pointed the sensor at the lab wall.”

IBM Deep Blue 2 Chess Chip (1997)

On one side of the board: one and a half kilograms of gray matter. On the other: 480 chess chips. Humans finally lost to computers in 1997, when IBM's chess-playing computer, Deep Blue, defeated the reigning world champion, Garry Kasparov. Each of Deep Blue's chips consisted of 1.5 million transistors arranged into specialized blocks, such as a logic array that generated moves, along with some RAM and ROM. Together, the chips could evaluate 200 million chess positions per second. This brute force, combined with clever game-evaluation functions, produced moves that Kasparov called “uncomputerlike.” “They exerted serious psychological pressure,” recalls Deep Blue's chief designer, Feng-hsiung Hsu, who now works at Microsoft.

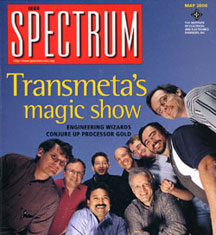

Transmeta Corp. Crusoe Processor (2000)

With great power come great heat sinks. And short battery life. And crazy electricity consumption. Hence Transmeta's goal: to design a low-power processor that would put its power-hungry counterparts from Intel and AMD to shame. The plan was for software to translate x86 instructions on the fly into Crusoe's own machine code, whose higher degree of parallelism would save time and energy. It was hyped as the greatest thing since sliced silicon, and for a while it was. “Engineering Wizards Conjure Up Processor Gold” was how the cover of the May 2000 issue of IEEE Spectrum put it. Crusoe and its successor, Efficeon, “proved that dynamic binary translation can be commercially viable,” says David Ditzel, cofounder of Transmeta, now working at Intel. Unfortunately, he adds, the chips arrived a few years before the market for low-power computers took off. And although Transmeta did not deliver on its promises, it pushed Intel and AMD, through licensing and lawsuits, toward cooler-running processors.

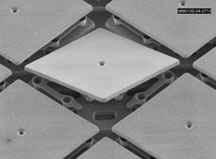

Texas Instruments Digital Micromirror Device (1987)

On June 18, 1999, Larry Hornbeck took his wife, Laura, on a date. They went to see Star Wars: Episode I at a theater in Burbank, California. Not that the graying engineer was an ardent Jedi fan. They were there because of the projector. It used a chip, the digital micromirror device, that Hornbeck had invented at Texas Instruments. The chip uses millions of hinged microscopic mirrors to direct light through the projector lens. The screening was “the first digital exhibition of a major motion picture,” says Hornbeck. Today, movie projectors based on DLP technology operate in thousands of cinemas. The technology is also used in projection televisions, office projectors, and tiny projectors for cell phones. “To paraphrase Houdini,” says Hornbeck, “micromirrors, gentlemen. The effect is created with micromirrors.”

Intel 8088 Microprocessor (1979)

Was there any single chip that propelled Intel into the Fortune 500? The company says there was: the 8088. It was the 16-bit CPU that IBM chose for its original PC line, which went on to dominate the desktop market.

By an odd twist of fate, the name of the chip that established the x86 architecture did not end in “86.” The 8088 was a slightly modified 8086, Intel's first 16-bit chip. Or, as Intel engineer Stephen Morse put it, the 8088 was “a castrated version of the 8086.” That is because the chip's main innovation was not exactly a step forward: the 8088 processed data in 16-bit words but used an 8-bit external data bus.
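
What a 16-bit processor on an 8-bit bus means in practice can be shown with a small model: every 16-bit word crosses the external bus as two byte-wide transfers. The sketch below uses hypothetical helper names and a plain `bytearray` as memory; it relies only on the fact that x86 stores the low byte at the lower address.

```python
def write_word_8bit_bus(memory, address, word):
    """Store a 16-bit word as two byte-wide bus cycles, low byte first."""
    memory[address] = word & 0xFF             # first bus cycle
    memory[address + 1] = (word >> 8) & 0xFF  # second bus cycle
    return 2  # number of bus cycles used

def read_word_8bit_bus(memory, address):
    """Fetch two bytes over the narrow bus and reassemble the 16-bit word."""
    low = memory[address]
    high = memory[address + 1]
    return (high << 8) | low

memory = bytearray(4)
cycles = write_word_8bit_bus(memory, 0, 0xBEEF)
value = read_word_8bit_bus(memory, 0)
```

A 16-bit bus could move the same word in a single cycle, which is the performance given up in exchange for cheaper 8-bit support hardware.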

Intel managers kept the 8088 project under wraps until the 8086 design was almost finished. “Management didn't want to delay the 8086 by even a day by telling us they had the 8088 in mind,” says Peter Stoll, a lead engineer on the 8086 project, who did some work on the 8088.

Only after the first working 8086 appeared did Intel send the artwork and documentation to its design unit in Haifa, Israel, where two engineers, Rafi Retter and Dany Star, altered the chip to work with an 8-bit bus.

The modification turned out to be one of the company's best decisions. The 8088 CPU, with its 29,000 transistors, required fewer and cheaper support chips and “was fully compatible with 8-bit hardware, while also providing faster processing and a smooth transition to 16-bit processors,” wrote Intel's Robert Noyce and Ted Hoff in a 1981 article for IEEE Micro magazine.

The first PC to use the 8088 was the IBM Model 5150, a monochrome machine that cost $3000. Today almost all the world's PCs are built around CPUs that can claim the 8088 as an ancestor. Not bad for a castrated chip.

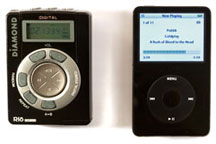

Micronas Semiconductor MAS3507 MP3 Decoder (1997)

Before the iPod, there was the Diamond Rio PMP300. Not that you would remember it. It appeared in 1998 and became an instant hit, but then the hype faded faster than Milli Vanilli. One notable thing about the player, though, was that it ran on the MAS3507 MP3 decoder, a RISC-based digital signal processor with an instruction set optimized for compressing and decompressing audio. The chip, developed by Micronas, let the Rio squeeze a little over a dozen songs into its flash memory. That is laughable today, but at the time it was enough to compete with portable CD players. Charmingly quaint, isn't it? The Rio and its successors paved the way for the iPod, and now you can carry thousands of songs in your pocket, including every Milli Vanilli album and music video.

Mostek MK4096 4-Kilobit DRAM (1973)

Mostek was not the first to make a DRAM. But its 4-kilobit DRAM contained a key innovation: a circuit trick called address multiplexing, devised by cofounder Bob Proebsting. In essence, the chip used the same pins to address both the rows and the columns of the memory by multiplexing the address signals. As a result, the chip did not need more pins as memory capacity grew, and it could be made more cheaply. There was just one small compatibility problem. The 4096 used 16 pins, whereas the memories made by Texas Instruments, Intel, and Motorola had 22 pins. What followed was one of the most epic confrontations in DRAM history. Mostek, betting its future on the chip, set out to convert customers, partners, the press, and even its own employees. Fred Beckhusen, who as a recent hire had to test the 4096 devices, recalls how Proebsting and chief executive Sevin came to his night shift and held a small seminar at 2 a.m. “They boldly predicted that in six months no one would care about 22-pin DRAM,” says Beckhusen. They were right. The 4096 and its successors dominated the DRAM market for years.
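
The pin arithmetic behind address multiplexing is easy to check: 4096 one-bit cells need a 12-bit address, and sending it in two halves over the same pins cuts the address pins from 12 to 6. The sketch below assumes an even 6-bit row and 6-bit column split and assumes the upper half is the row; those choices and the function names are illustrative, not taken from the MK4096 data sheet.

```python
ADDRESS_PINS = 6                       # pins shared by row and column phases
CELLS = 1 << (2 * ADDRESS_PINS)        # 4096 one-bit cells

def multiplex_address(address):
    """Split a full address into the two values sent over the shared pins."""
    if not 0 <= address < CELLS:
        raise ValueError("address out of range")
    row = address >> ADDRESS_PINS                 # sent first (row phase)
    column = address & ((1 << ADDRESS_PINS) - 1)  # sent second (column phase)
    return row, column

def demultiplex_address(row, column):
    """What the chip does internally: latch both halves and recombine them."""
    return (row << ADDRESS_PINS) | column

row, column = multiplex_address(0b000001000010)
```

Doubling the number of address pins under this scheme quadruples the addressable cells, which is why the package could stay small as capacities grew.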

Xilinx XC2064 FPGA (1985)

In the early 1980s, chip designers tried to squeeze the most out of every transistor in a circuit. But then Ross Freeman had a radical idea. He came up with a chip packed with transistors that formed loosely organized logic blocks, which could be configured with software. Sometimes a group of transistors would go unused - heresy! - but Freeman was betting that Moore's law would eventually make transistors very cheap. It did. To market the chip, called a field-programmable gate array, or FPGA, Freeman co-founded Xilinx. (A strange concept for a company with a strange name.) When its first product, the XC2064, came out in 1985, employees were given an assignment: they had to draw an example circuit by hand using the XC2064's logic blocks, just as the company's customers would. Bill Carter, a former chief technology officer, recalls being approached by CEO Bernie Vonderschmitt, who complained that he was “having a little difficulty doing his homework.” Carter gladly helped the boss. “And there we were, armed with paper and colored pencils, working on Bernie's assignment!” Today, FPGAs sold by Xilinx and other companies are used in so many things that it would be hard to list them here. Go reconfigure!
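
One common way to picture a software-configurable logic block is as a small lookup table (LUT) whose contents are loaded as configuration bits. The sketch below is a generic illustration under that assumption, not a description of the XC2064's actual circuitry; `LookupTable` and `configure_from_function` are hypothetical names.

```python
from itertools import product


class LookupTable:
    """Toy n-input logic block: its behaviour is just a table of bits."""

    def __init__(self, n_inputs, config_bits):
        if len(config_bits) != 1 << n_inputs:
            raise ValueError("need one configuration bit per input combination")
        self.n_inputs = n_inputs
        self.config_bits = list(config_bits)

    def evaluate(self, *inputs):
        if len(inputs) != self.n_inputs:
            raise ValueError("wrong number of inputs")
        # Pack the input bits into a table index, first input most significant.
        index = 0
        for bit in inputs:
            index = (index << 1) | (bit & 1)
        return self.config_bits[index]


def configure_from_function(n_inputs, func):
    """Build the configuration bits by tabulating any boolean function."""
    bits = [int(bool(func(*combo))) for combo in product((0, 1), repeat=n_inputs)]
    return LookupTable(n_inputs, bits)


# The same "hardware" becomes an AND gate or an XOR gate purely by
# loading different configuration bits.
and_gate = configure_from_function(2, lambda a, b: a and b)
xor_gate = configure_from_function(2, lambda a, b: a != b)
```

The point of the model is that nothing in `LookupTable` is specific to AND or XOR: the function lives entirely in the configuration data, which is what lets software repurpose the same silicon.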

Zilog Z80 Microprocessor (1976)

Federico Faggin knew well how much money and how many man-hours it took to bring a microprocessor to market. While at Intel, he had helped develop two seminal examples of the kind: the very first, the 4004, and the 8080, of Altair fame. When he founded Zilog with a former Intel colleague, Ralph Ungermann, the two decided to start with something simpler: a single-chip microcontroller.

Faggin and Ungermann rented an office in Los Altos, California, drew up a business plan, and went looking for venture capital. They ate lunch at the nearby Safeway supermarket: “Camembert cheese and crackers,” he recalls.

But the engineers soon realized that the microcontroller market was already crowded with very good chips. Even if their chip were better than the others, they would make only a slim profit and would go on eating cheese and crackers. Zilog needed to aim higher in the food chain, and so the Z80 microprocessor project was born.

Their goal was to outperform the 8080 while offering full compatibility with 8080 software, to lure customers away from Intel. For months, Faggin, Ungermann, and Masatoshi Shima, another former Intel engineer, worked 80-hour weeks, hunched over tables and drawing the Z80 circuits. Faggin soon learned that small may be beautiful [“Small Is Beautiful” is a collection of essays by the popular economist E. F. Schumacher - transl. note], but it is very hard on the eyes.

“By the end of the work, I had to buy glasses,” he says. “I became short-sighted.”

The team toiled through 1975 and into 1976. By March, they finally had a prototype chip. The Z80 was a contemporary of MOS Technology's 6502, and like that chip, it stood out not only for its elegant design but also for its low price ($25). Still, getting it into production took a great deal of persuading. “It was just an intense time,” says Faggin, who also earned himself an ulcer [an ulcer is an infectious disease, not a nervous one - transl. note].

But the sales eventually came. The Z80 ended up in thousands of products, including the Osborne I (the first portable computer), the Radio Shack TRS-80 and MSX home computers, printers, fax machines, photocopiers, modems, and satellites. Zilog still produces the Z80 because of its popularity in some embedded systems. In a basic configuration today, it costs $5.73 - even less than a lunch of cheese and crackers.

Sun Microsystems SPARC Processor (1987)

A long time ago, in the early 1980s, people wore neon-colored leg warmers and watched Dallas [a 13-season soap opera about a scheming oil magnate - transl. note], and microprocessor designers tried to make CPU instructions ever more complex, so that more could be done in a single computation cycle. But then a group at the University of California at Berkeley, a place known for its countercultural leanings, proposed the opposite: simplify the instruction set, and execute the instructions so quickly that the speed more than makes up for doing less in each cycle. The Berkeley group, led by David Patterson, called this approach RISC, for reduced instruction set computer.
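
The trade-off can be made concrete with the usual performance identity: run time = instruction count x cycles per instruction / clock frequency. The figures in the sketch below are invented purely for illustration (they are not measurements of any real processor), and the function name `run_time_seconds` is hypothetical.

```python
def run_time_seconds(instruction_count, cycles_per_instruction, clock_hz):
    """Run time = instructions * cycles per instruction / clock frequency."""
    return instruction_count * cycles_per_instruction / clock_hz


# Hypothetical complex-instruction machine: fewer instructions for the job,
# but each one takes several clock cycles.
cisc_time = run_time_seconds(1_000_000, cycles_per_instruction=6, clock_hz=10e6)

# Hypothetical RISC machine: 30% more instructions for the same job,
# but close to one cycle each, at a faster clock.
risc_time = run_time_seconds(1_300_000, cycles_per_instruction=1.5, clock_hz=15e6)

# Ratio greater than 1 means the RISC machine finishes sooner.
speedup = cisc_time / risc_time
```

With these made-up numbers the simpler machine finishes the job several times sooner, even though it executes more instructions.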

From an academic point of view, the RISC idea was not bad. But would it sell? Sun Microsystems bet that it would. In 1984, a small team of Sun engineers began developing a 32-bit RISC processor called SPARC (Scalable Processor Architecture). They wanted to use the chip in a new line of workstations. One day, Scott McNealy, then head of Sun, showed up at the SPARC lab. “He said that SPARC would turn Sun from a company with revenues of $500 million a year into a company with revenues of a billion a year,” recalls Patterson, a consultant on the SPARC project.

As if that were not pressure enough, many experts doubted that the company could pull the project off. Worse, the marketing team had an unpleasant realization: SPARC spelled backward is CRAPS! [a dice game, or the plural of “crap” - transl. note] Team members had to swear that they would not breathe a word of it even to other Sun employees, lest the news reach their main competitor, MIPS Technologies, which was also exploring the RISC concept.

The first version of the minimalist SPARC consisted of a “20,000-gate-array processor without even integer multiply and divide instructions,” says Robert Garner, the lead SPARC architect, who now works at IBM. Yet at 10 million instructions per second, it ran about three times faster than the complex instruction set (CISC) processors of the time.

Sun would go on to use SPARC in profitable workstations and servers for many years. The first SPARC-based product, introduced in 1987, was the Sun-4 line of workstations, which quickly captured the market and helped push the company's revenue past the billion-dollar mark, just as McNealy had predicted.

Tripath Technology TA2020 Audio Amplifier (1998)

There is a subset of audiophiles who insist that tube amplifiers produce the best sound and always will. So when some in the audio community declared that a solid-state class D amplifier, invented by the Silicon Valley company Tripath Technology, delivered sound as warm and vibrant as tube amplifiers, it was a big deal. The trick was to use a 50 MHz sampling system to drive the amplifier. The company boasted that its TA2020 performed better and cost much less than any comparable solid-state amplifier. To show it off at trade shows, “we played that very romantic song from Titanic,” says Adya Tripathi, founder of Tripath. Like most class D amplifiers, the 2020 was very energy efficient; it needed no heat sink and could fit in a compact package. The low-end, 15 W version of the TA2020 sold in the USA for $3 and was used in boom boxes and mini stereos. Other versions, the most powerful of which delivered 1,000 watts, were used in home theaters, high-end audio systems, and televisions from Sony, Sharp, Toshiba, and others. Eventually the large semiconductor companies entered this market, created similar chips, and sent Tripath into oblivion. But its chips developed a cult following. Amplifier kits and TA2020-based products are still sold by companies such as 41 Hz Audio, Sure Electronics, and Winsome Labs.

Amati Communications Overture ADSL Chip Set (1994)

Remember when DSL modems came out and you threw that miserable 56.6 kbps modem in the trash? You, and the two thirds of all broadband users who rely on DSL, should thank Amati Communications, a startup out of Stanford University. In the early 1990s, it invented a DSL modulation scheme called discrete multitone modulation, or DMT. In essence, it makes a single telephone line look like hundreds of subchannels and improves data transfer with a reverse Robin Hood strategy. “Bits are stolen from the poorest channels and given to the richest,” says John Cioffi, a co-founder of Amati and now a professor at Stanford. DMT beat its competitors, including proposals from the giant AT&T, and became the global standard for DSL. In the mid-1990s, Amati's DSL chipset (one analog chip and two digital) sold in modest quantities, but by 2000 sales had risen to millions. In the early 2000s, sales exceeded 100 million chips per year. Texas Instruments bought Amati in 1997.
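
A rough sketch of that reverse Robin Hood idea follows. It uses the textbook SNR-gap approximation, b = floor(log2(1 + SNR / gap)), to decide how many bits each subchannel carries; this is a common simplification and not necessarily the algorithm Amati's chips ran. The SNR values, the 9.8 dB gap, the 15-bit cap, and the function names are all assumptions chosen for illustration.

```python
import math


def bits_for_subchannel(snr_db, gap_db=9.8, max_bits=15):
    """Bits one subchannel can carry under the SNR-gap approximation."""
    effective_snr = 10 ** ((snr_db - gap_db) / 10)  # dB to linear, after the gap
    return min(max_bits, math.floor(math.log2(1 + effective_snr)))


def allocate_bits(subchannel_snrs_db):
    """Load each subchannel with as many bits as its own SNR supports."""
    return [bits_for_subchannel(snr) for snr in subchannel_snrs_db]


# Hypothetical line: clean low-frequency tones, noisy high-frequency ones.
measured_snrs_db = [40, 25, 12, 3]
loading = allocate_bits(measured_snrs_db)
total_bits_per_symbol = sum(loading)
```

In this toy line the cleanest subchannel ends up carrying most of the bits and the noisiest carries none, which is the "rich get richer" allocation the quote describes.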

Motorola MC68000 Microprocessor (1979)

Motorola was late to the 16-bit processor party, so it decided to arrive in style. The hybrid 16-bit/32-bit MC68000 contained 68,000 transistors, more than double the number in the Intel 8086. It had 32-bit internal registers, but a 32-bit bus would have made it too expensive, so the 68000 used 24-bit addressing and a 16-bit data bus. It was probably the last major processor designed by hand with pencil and paper. “I handed out reduced copies of the flowcharts, execution-unit resources, decoders, and control logic to other project members,” says Nick Tredennick, who designed the 68000's logic. The copies were small and hard to read, and his bleary-eyed colleagues found a way to let him know. “One day I came into the office and found a credit-card-sized copy of my flowcharts on the desk,” recalls Tredennick. The 68000 appeared in all the early Macs, as well as in the Amiga and the Atari ST. The big sales came from embedding the chip in laser printers, arcade machines, and industrial controllers. The 68000 was also one of history's greatest near misses, right up there with Pete Best losing his spot as drummer for the Beatles. IBM wanted to use the chip in its PC line but settled on the Intel 8088 instead because, among other things, the 68000 was still relatively scarce. As one observer later noted, had Motorola won, the Windows-Intel duopoly known as Wintel might have been called Winola.
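
The bus widths mentioned above translate into simple arithmetic, sketched below. The three constants come from the text; the function names are hypothetical, and the model is deliberately simplified (it ignores, for instance, how the real chip signals which byte of a 16-bit word is being accessed).

```python
ADDRESS_LINES = 24   # from the text: 24-bit addressing
DATA_LINES = 16      # from the text: 16-bit data bus
REGISTER_BITS = 32   # from the text: 32-bit internal registers


def addressable_bytes(address_lines=ADDRESS_LINES):
    """Number of distinct byte addresses an address bus can select."""
    return 1 << address_lines


def bus_transfers(operand_bits, data_lines=DATA_LINES):
    """Bus transfers needed to move one operand, rounded up."""
    return -(-operand_bits // data_lines)


def external_address(register_value, address_lines=ADDRESS_LINES):
    """Simplified: keep only the low address bits that leave the chip."""
    return register_value & ((1 << address_lines) - 1)


megabytes = addressable_bytes() // (1024 * 1024)
transfers_per_register = bus_transfers(REGISTER_BITS)
```

So a 24-bit address reaches 16 MB of memory, and moving one full 32-bit register over the 16-bit data bus takes two transfers: the price paid for the cheaper package.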

Chips & Technologies AT Chip Set (1985)

By 1984, when IBM introduced its 80286-based AT line of PCs, the company was already emerging as the clear leader in desktop computers, and it intended to stay dominant. But Big Blue's plans were upset by a tiny firm from San Jose called Chips & Technologies. C&T developed five chips that duplicated the functionality of the AT motherboard, which used some 100 chips. To make sure the chipset was compatible with the IBM PC, the C&T engineers figured there was only one thing to do. “We had the nerve-racking but admittedly entertaining task of playing games for weeks,” says Ravi Bhatnagar, the lead chipset designer, now a vice president at Altierre Corp. The C&T chips allowed manufacturers such as Taiwan's Acer to make cheaper PCs and launch the invasion of the PC clones. Intel bought C&T in 1997.

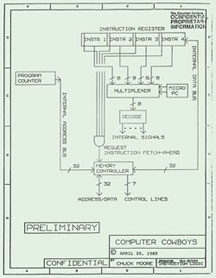

Computer Cowboys Sh-Boom Processor (1988)

Two chip designers walk into a bar. They are Russell Fish III and Chuck Moore, and the bar is called Sh-Boom. No, this is not the start of a joke; it is a real piece of technology history, full of disputes and lawsuits, lots of lawsuits. It all started in 1988, when Fish and Moore created a strange processor called Sh-Boom. The chip was so streamlined that it could run faster than the clock on the circuit board that drove the rest of the computer. So the two designers found a way to let the processor run on its own ultra-fast internal clock while staying synchronized with the rest of the computer. Sh-Boom was not a commercial success, and after patenting its innovations, Fish and Moore moved on. Fish later sold his patent rights to Patriot Scientific, a California company that remained a tiny, profitless firm until its executives had a revelation: in the years since the invention of Sh-Boom, processor speeds had far outstripped motherboard speeds, so almost every maker of computers and consumer electronics had ended up using a solution just like the one Fish and Moore had patented. Ka-ching! Patriot fired off a barrage of lawsuits against US and Japanese companies. Whether those companies' chips actually depended on the Sh-Boom ideas is a matter of controversy. But since 2006, Patriot and Moore have collected more than $125 million in licensing fees from Intel, AMD, Sony, Olympus, and others. As for the name Sh-Boom, Moore, now at IntellaSys, says: “It supposedly came from the name of the bar where Fish and I drank bourbon and scribbled on napkins. That is not quite true, but I liked the name he proposed.”

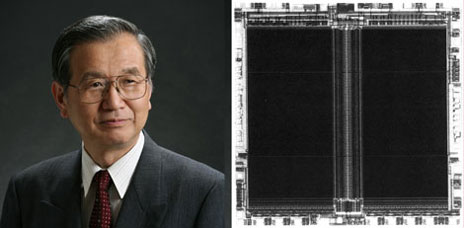

Toshiba NAND Flash Memory (1989)

The flash memory saga began when a Toshiba factory manager named Fujio Masuoka decided to reinvent semiconductor memory. But first, a little history.

Before flash memory came along, magnetic tape, floppy disks, and hard drives were the only ways to store what then counted as large amounts of data. Many companies tried to create semiconductor alternatives, but the available options, such as EPROM, which required ultraviolet light to erase data, and EEPROM, which could be erased electrically without ultraviolet light, could not store much data economically.

Enter Masuoka-san of Toshiba. In 1980, he recruited four engineers to a semi-secret project to develop a memory chip that could store large amounts of data for little money. Their strategy was simple. “We knew that the cost of the chip would keep falling as long as transistors kept shrinking,” says Masuoka, now chief technology officer at Unisantis Electronics in Tokyo.

Masuoka's team came up with a variant of EEPROM in which each memory cell consisted of a single transistor. At the time, conventional EEPROM required two transistors per cell. The difference seems small, but it had a huge effect on cost.

In search of a catchy name, they settled on “flash”, because of the chip's very fast erasing. But if you think Toshiba then rushed the memory into production and watched the money pour in, you do not know how large corporations usually treat internal ideas. As it turned out, Masuoka's bosses told him to, well, erase the idea.

Naturally, he did not. In 1984, he presented a paper on his memory design at the IEEE International Electron Devices Meeting. That prompted Intel to develop a type of flash memory based on NOR logic gates. In 1988, the company introduced a 256-kilobit chip, which found use in vehicles, computers, and other mass-market devices and opened up a nice new business for Intel.

That was enough for Toshiba to finally decide to market Masuoka's invention. His flash chip was based on NAND technology, which offered higher storage density but was harder to manufacture. Success came in 1989, when the first NAND flash hit the market. As Masuoka had predicted, prices kept falling.

In the late 1990s, digital photography gave flash memory a big boost, and Toshiba became one of the largest players in a multibillion-dollar market. At the same time, Masuoka's relationship with other executives deteriorated, and he left the company. He later sued for a share of the profits, and won.

Today NAND flash is a key component of every gadget: cell phones, cameras, music players, and, of course, the USB flash drives that techies like to wear around their necks. “Mine is 4 gigabytes,” says Masuoka.
