The tragedy of FireWire: a technology built by joint effort, destroyed by corporate wars

“Show us that the industry has adopted it, and then we will support it too.”

The rise and fall of FireWire, or IEEE 1394, an interface standard boasting high-speed communication and support for isochronous traffic (a data stream sent at a constant rate, with successive blocks tightly synchronized to one another), is one of the most tragic stories in computing. The standard was forged in the fire of teamwork. The combined efforts of several competitors, including Apple, IBM, and Sony, made FireWire a design triumph. It represented a unified standard for the whole industry: one serial bus to rule them all. Had FireWire realized its full potential, it could have replaced SCSI and the whole tangle of ports and cables crowding the back of a desktop computer.

However, FireWire's principal creator, Apple, nearly killed it before it appeared in a single device. And in the end the Cupertino company effectively did kill the standard, just as its dominance of the industry seemed within reach.

The story of how FireWire reached the market and then fell out of favor is a stark reminder that no technology, however promising, well designed, or well loved, is immune to corporate politics, internal and external, or to our reluctance to leave our comfort zone.

The beginning

“It all began in 1987,” Michael Johas Teener, FireWire's chief architect, told us. At the time he was a systems architect in National Semiconductor's marketing department, spreading technical knowledge among sales managers and marketers who had little of it. Around then, talk began about the need for a new generation of internal data bus architectures. A bus is a channel that carries data between computer components, and an internal bus serves expansion cards, such as scientific instruments or dedicated graphics processors.

The Institute of Electrical and Electronics Engineers (IEEE) quickly noticed that three incompatible standards were emerging: VME, NuBus 2, and Futurebus. The organization took a dim view of this and proposed instead that everyone work together.

Teener was appointed chairman of the new project to unify the industry around a single serial bus architecture. Serial means that bits are sent one at a time rather than several at once. Parallel transmission is faster at the same clock frequency, but it carries more overhead and runs into efficiency problems as frequencies rise.

“People showed up quickly, including a guy named David James, who was working at Hewlett-Packard's architecture lab at the time, and said, ‘Yes, we need a serial bus too,’” said Teener. “‘But we want it to have taps for connecting low-speed peripherals,’” such as floppy drives, keyboards, mice, and the like.

Apple gets involved

Teener joined Apple in 1988. Shortly afterward, the company began looking for a successor to the Apple Desktop Bus (ADB), which was used for low-speed devices such as keyboards and mice. Apple needed the next version to support audio. And Teener had just the thing.

But the early FireWire prototype was too slow. The very first versions offered 12 megabits per second (1.5 MB/s); Apple wanted 50. The company feared it would have to switch to expensive optics.

To serve this mixed use, Teener and James, who had also joined Apple, invented an isochronous transfer method, that is, transfer at regular intervals. This guarantees when data will arrive. Guaranteed timing means a device can handle high-bit-rate signals more efficiently and sees no variable delay: the few milliseconds of latency the interface needs are always the same, whatever the circumstances. That makes isochronous transfer ideal for multimedia, meaning professional audio and video work that previously required special hardware.

Apple assigned analog engineers Roger Van Brunt and Florin Oprescu to the team to develop the physical layer, the wires and the electrical signals running over them, and to build the technology into a faster interface. Van Brunt found that optics could be avoided by using twisted-pair wire instead. The extra speed came at no extra cost.
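The idea of transfer at regular intervals can be sketched as a toy bus scheduler. This is only an illustration, not the IEEE 1394 arbitration logic: the 125-microsecond cycle length comes from the standard rather than from this article, while the function name `run_bus`, the abstract bandwidth "units", and the example channels are all assumptions made for the sketch. Each cycle, the reserved isochronous channels are served first and in full; asynchronous packets only get whatever capacity is left.

```python
CYCLE_US = 125  # IEEE 1394 bus cycle length in microseconds (8,000 cycles per second)


def run_bus(cycles, iso_reservations, async_packets, cycle_budget):
    """Simulate a few bus cycles (hypothetical model, abstract bandwidth units).

    iso_reservations: dict mapping channel name -> units reserved in every cycle
    async_packets:    list of asynchronous packet sizes waiting to be sent
    cycle_budget:     total units available in one cycle
    Returns a list of (cycle_start_us, iso_sent, async_sent) tuples.
    """
    reserved_total = sum(iso_reservations.values())
    if reserved_total > cycle_budget:
        raise ValueError("isochronous reservations exceed the cycle budget")

    pending = list(async_packets)
    log = []
    for n in range(cycles):
        # Isochronous channels always get their full reservation, every cycle.
        iso_sent = dict(iso_reservations)
        remaining = cycle_budget - reserved_total

        # Asynchronous traffic is best-effort: it uses leftover capacity only.
        async_sent = []
        while pending and pending[0] <= remaining:
            size = pending.pop(0)
            remaining -= size
            async_sent.append(size)

        log.append((n * CYCLE_US, iso_sent, async_sent))
    return log


log = run_bus(3, {"audio": 20, "video": 50}, [30, 25, 10], 100)
for start_us, iso_sent, async_sent in log:
    print(start_us, iso_sent, async_sent)
```

In this model the audio and video channels receive the same amount of bandwidth at the same point in every cycle regardless of how much asynchronous traffic is queued, which is the constant-delay property the paragraph describes.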

“At about the same time, someone at IBM (surprisingly) was looking for a replacement for SCSI,” recalls Teener. “And since we used SCSI too, we thought maybe we could use our idea to replace it. We joined forces. But they wanted speeds of 100 Mbps.”

To reach the higher throughput, the team turned to STMicroelectronics. The company had a trick called DS coding that could double a cable's bandwidth through its clocking scheme (clocking, in other words, coordinates the behavior of the different elements in a circuit).
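The article does not spell out how DS coding works. "DS" is commonly expanded as data-strobe encoding, and the sketch below follows the usual textbook formulation of that scheme, not anything taken from the FireWire specification itself; the function names and the idle levels are assumptions. Two lines are driven: the data line carries the bits as-is, and the strobe line changes state only when the data line does not. Exactly one line changes per bit, so the receiver can rebuild the clock by XOR-ing the two lines.

```python
def ds_encode(bits):
    """Return (data, strobe) line levels for a bit sequence.

    Textbook data-strobe encoding: the strobe toggles whenever the data
    level repeats, so exactly one of the two lines changes for every bit.
    """
    data, strobe = [], []
    prev_data, prev_strobe = 0, 0  # assumed idle levels before the first bit
    for bit in bits:
        if bit == prev_data:
            prev_strobe ^= 1  # data did not change, so the strobe must
        prev_data = bit
        data.append(prev_data)
        strobe.append(prev_strobe)
    return data, strobe


def recovered_clock(data, strobe):
    """The receiver recovers a clock as the XOR of the two lines."""
    return [d ^ s for d, s in zip(data, strobe)]


bits = [1, 1, 0, 1, 0, 0, 0, 1]
data, strobe = ds_encode(bits)
clock = recovered_clock(data, strobe)
print(data)
print(strobe)
print(clock)
```

Because the recovered clock flips once per bit, the receiver needs no separate clock line and can tolerate more timing skew between the two wires than a conventional data-plus-clock pair would allow.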

Now they needed a connector. “We had a mandate to make it unique, so that anyone could look at it and know immediately what it was,” recalls Teener. Macs of the time had three different round connectors. PCs had a bunch of similar connectors too.

They asked an in-house Apple expert which connector to use. He pointed out that the Nintendo Game Boy cable had a distinctive look, and that they could make theirs unique by rearranging the contacts. The connector could use exactly the same technology, the same pins and so on, yet look different. Better still, the Game Boy cable was the first popular cable to put the fragile pins in the cable rather than in the device. So when the pins wore out, you could simply buy a new cable instead of replacing or repairing the device.

The final specification ran to 300 pages: a sophisticated technology with elegant functionality. Adopted in 1995 as IEEE 1394, it allowed speeds of up to 400 megabits (50 MB) per second, simultaneously in both directions, over cables up to 4.5 m long. The cables could power connected devices with up to 1.5 A of current (at up to 30 V). A single bus could hold up to 63 devices, all of which could be connected and disconnected on the fly. Everything configured itself automatically on connection, with no need to think about terminators or device addresses. And FireWire had its own microcontroller, so it was unaffected by fluctuations in CPU load.
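The headline figures are easy to check with a little arithmetic. The sketch below only converts the numbers quoted in the text; the helper names and the 1,000 MB example file are assumptions, and the results are theoretical ceilings that ignore protocol overhead.

```python
def mbit_to_mbyte(mbit_per_s):
    """Convert megabits per second to megabytes per second (8 bits per byte)."""
    return mbit_per_s / 8


def transfer_seconds(size_mbyte, rate_mbit_per_s):
    """Best-case time to move size_mbyte at the given raw line rate."""
    return size_mbyte / mbit_to_mbyte(rate_mbit_per_s)


early_prototype = mbit_to_mbyte(12)      # early FireWire prototype, in MB/s
firewire_400 = mbit_to_mbyte(400)        # IEEE 1394 as adopted in 1995, in MB/s
max_power_watts = 1.5 * 30               # 1.5 A at up to 30 V
example_copy = transfer_seconds(1000, 400)  # hypothetical 1,000 MB file

print(early_prototype, firewire_400, max_power_watts, example_copy)
```

The same conversion applies to any of the bit rates quoted for USB elsewhere in the article.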

What's in a name?

FireWire's first working name, ChefCat, came from a cartoon character on Teener's favorite mug. But on the eve of Comdex '93, a major computer industry trade show, the engineers proposed Firewire as a possible official name. Marketing liked it, though they said the “w” should be capitalized. And so it was formally unveiled at the show.

Apart from Texas Instruments, which called it Lynx, American and European manufacturers kept the name. In Japan, things were different. Sony chose the names i.LINK and DV-in, and got most of the consumer electronics industry to do the same. “Officially, this was because the Japanese are afraid of fire,” says Teener. “They have had a lot of fires and a lot of burned-down houses.”

That seemed silly to him. One evening after work he got his friends from Sony drunk, and they told him the real reason behind the name. “Sony once didn't want to use the Dolby brand, because Dolby sounded better than Sony,” says Teener. “Not as a technology, but simply as a name.” The same thing happened with FireWire. “They compared FireWire and Sony, and they decided that FireWire sounds cool and Sony is boring.”

Sony saves everyone

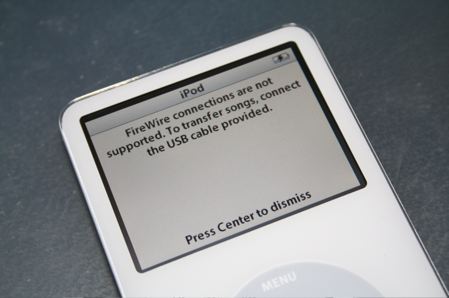

Sony may have complicated and confused the market with i.LINK and its silly four-pin connector (created, to Teener's annoyance, without consulting the other members of the consortium). But the Japanese electronics giant deserves the credit for bringing the technology to market.

Apple was in disarray for most of the 1990s. Eric Sirkin, director of Macintosh OEM in the New Media Division, said the company's behavior resembled manic depression. “One year, the company would try to compete with PCs on price, because the board of directors believed it was losing market share,” he said. The company would double down on consumer hardware and on efficiencies to cut costs. “The next year,” Sirkin continues, “having won back market share, they would realize they had no innovation. So they would swing the other way.”

Innovations like FireWire caught the attention of the tech press. Byte magazine gave it its award for most significant technology. But inside Apple, Teener recalls, keeping the project afloat took a conspiracy between colleagues at Apple and IBM. Each half of the team told its own marketing people that the other company was going to use the technology.

But getting funded is not the same as getting to market. The decision makers in engineering and marketing did not want to add FireWire to the Mac. “They said, ‘Show us that the industry has adopted it, and then we will support it too,’” explains Sirkin. It was their own technology, yet they did not want to be the first to back it.

At one point FireWire was even canceled. The team frantically looked for another sponsor. Sirkin was impressed by the technology and thought it could help the Mac stand out, so he agreed to take it under his wing and promote it to consumer electronics companies. He and evangelist Jonathan Zar took it to Japan, where Sirkin had a good base of contacts from his earlier semiconductor work at Xerox PARC and Zoran Corporation.

Sony Industrial saw the potential in FireWire. The division was trying to break into a new digital video market just below the professional tier, and it was developing a new standard, DV. Soon Sony's industrial division invited Philips and several other Japanese companies to take part. “They invited Apple,” said Sirkin, “because of FireWire.” A year later, the first DV cameras were being readied for market, with FireWire support.

“And then Apple began to wake up,” Sirkin recalls. “The computer people said, ‘Oh my God, it's becoming a standard.’” And IEEE rules required that all standards be licensed for a nominal fee.

“To build anything with FireWire, you had to pay $50,000 up front,” he told us. “Once. After that there was nothing more to pay.” At Microsoft's request (the software giant worried that Apple might try to cheat the industry on licensing), Sirkin put all of this into a formal contract.

Intel joined the project in 1996. It contributed to the Open Host Controller Interface (OHCI), the standard for implementing FireWire in computer hardware. Intel was preparing to add FireWire to its chipsets, which would have put it in almost every new computer.

Most of the FireWire team left Apple around this time, driven out by the ongoing internal chaos. Sirkin tried to build a startup around FireWire. “I failed, and I stopped trying and did something else,” he told us. Teener and several other engineers formed Zayante, which contracted with Intel to implement FireWire and with Hewlett-Packard to build FireWire printers.

The future looked bright. FireWire was faster and more versatile than the other standard, USB, whose paltry top speed of 12 Mbps also depended on CPU load (so real transfer rates were lower). The technology got good press. It even won an Emmy. It seemed that within a couple of years it would be in every new computer and in use by audio and video professionals everywhere. Hard drive makers had begun moving from SCSI to FireWire for external devices. There was already talk of putting the technology in cars, aerospace equipment, home networks, digital TVs, and almost everywhere else USB is found today.

And in January 1999, even Apple finally began to embed FireWire into Macs. Before that, you had to purchase a PCI expansion card.

The beginning of the end

Despite growing Mac sales, Apple's finances were in a deplorable state. The company needed more profit. After learning of the hundreds of millions of dollars IBM earned from patents, Apple chief Steve Jobs initiated a change in FireWire licensing policy. Apple decided to charge $1 per port (so $2 for a device with two ports).

The consumer electronics industry was furious. Everyone considered the move inappropriate and unfair. Intel sent its CTO to negotiate with Jobs, but the meeting ended badly. Intel decided to drop FireWire, end its attempts to build the interface into its chipsets, and back USB 2.0, which was to have a maximum speed of 480 Mbit/s (in practice it was about 280 Mbit/s, or roughly 30-40 MB/s).

Sirkin believes Microsoft could have reversed the new licensing policy by citing the agreement signed earlier. “Microsoft must have thrown it out,” he speculated, because “it would have stopped Apple.”

“They could have said: ‘Look, this is what your team agreed to, and now you are breaking that agreement.’”

A month later, Apple cut the fee to 25 cents per system, with the money shared among all patent holders. But it was too late: Intel was not coming back.

It was a fatal blow to FireWire in the PC market. PC vendors would happily include anything built into Intel's chipset (USB, for example), but nothing else apart from a dedicated graphics or sound card. “They are so worried about cost that adding another interface is simply not considered,” said Teener.

Faster and more advanced versions could not save the technology either (a more efficient FireWire 400, then FireWire 800, which made it into Macs, and later FireWire 1600 and 3200, which did not). Nor could Apple, which used FireWire in the first-generation iPod. The technology vanished from PCs over the course of the 2000s.

Teener tried to persuade the 1394 Trade Association to move FireWire onto Ethernet/IEEE 802 technology, because Apple, which had bought Zayante, wanted it to work over long distances. “The answer was a deafening silence,” he told us. “They didn't want to do this.” Teener is sure the underlying reason is that no one “wanted to be first and leave the comfort zone” without tacit support from Apple.

FireWire left the Mac between 2008 (when the MacBook Air shipped without a FireWire port) and 2012 (when the last Mac with a FireWire port was released). It is still supported through a Thunderbolt adapter or an external hub, but it is now a legacy technology, used by people who have not yet given up their FireWire devices.

Elegy for a dead technology

FireWire is fading away today. Thunderbolt has taken its place at the high end of the market. At the other end, where storage is the main concern, USB 2.0 has given way to the faster USB 3.0, which is now being replaced by USB-C, a standard backed and championed by Apple. It has smaller, simpler connectors that can be plugged in either way up, twice the theoretical speed (10 Gbit/s), and more versatility than USB 3.0. It can carry HDMI and DisplayPort through an adapter, and it supports the whole range of USB devices, from 1.0 to 3.1.

Speeds on networks of every size are now so high that the need for something like FireWire has gone. “Packets can arrive faster than they are needed, everything runs so fast,” notes Sirkin. “So there is no need to worry about synchronization anymore.” And yet it is interesting to consider how close FireWire came to ubiquity, and that it all fell apart because of the short-sighted actions of the most innovative company in computing and consumer electronics.

“Apple had created an image in the market that could not be changed, that of a leader and innovator,” Sirkin told us. “Steve Jobs helped create it. But in the early '90s it was no longer an innovator. It was just turning the crank. Slightly better processors, slightly better screens. Better software.”

“I think the heart of the story is that FireWire reflects the state of Apple in that era,” he continued. Apple had stopped seeing itself as an innovator. “And here was a very innovative technology that it simply refused to use in its own computers. Only when another company, in this case Sony, picked up the idea and liked it did Apple get behind it.”
