The end of the era of Moore's Law and how this may affect the future of information technology.

There is probably no need to explain Moore's Law in detail on Geektimes: we all know it, at least roughly. In short, the law is an empirical observation made by Gordon Moore. It has had several formulations, but the modern one says that the number of transistors on an integrated circuit doubles every 24 months. A variant that cites 18 months rather than two years appeared a little later. It comes not from Moore but from David House of Intel, who argued that processor performance should double every 18 months because both the number of transistors and the speed of each one grow at the same time.

Ever since the law was formulated, developers of electronic components have tried to keep pace with the time frames it set. For 1965 the law was unusual; it could even be called radical. A “mini-computer” of the time was still not very small: it took up the space of an ordinary desk, or even more. It was hard to imagine then that computers would eventually become part of a refrigerator, a washing machine, or other household appliances. Most people had never seen a computer, and those who had seen one had almost never worked with it. Those who did work with computers used punch cards and other not very convenient tools, and the machines themselves handled a fairly narrow range of tasks.

Once Moore's idea became known, magazines even began to joke about it. One of them, for example, ran a cartoon like this:

Back then it was hard to imagine that even such computers would soon not be considered small at all. Moore, by the way, saw the illustration and was quite surprised by its originality. As far as one can judge, the artist was trying to convey a somewhat skeptical attitude toward the idea of computers constantly shrinking. But 25 years later the illustration had become quite mundane reality.

The Effect of Moore's Law

As mentioned above, Moore's Law has several variations; it is not only about the steady growth in the number of transistors on a chip. One consequence of Moore's idea is the attempt to work out how fast ever-smaller transistors will run. Scientists and IT specialists have also used Moore's idea to predict how quickly the amount of RAM and main memory would grow, how much more productive chips would become, and so on.

What matters, though, is not which version of Moore's Law is more interesting or useful, but how the core idea has influenced our world. That influence takes three main forms: rivalry among developers, forecasting, and changes in the architecture of computing systems.

Rivalry

Moore's law can be used to work out how much information a single chip can store. The law, by the way, applies fully to RAM. At the dawn of the computer era, or rather the PC era, a computer chip could store

Forecasting

Knowing how the number of transistors on a chip was growing (and the formula was quite clear from the start), engineers at any electronics manufacturer could roughly estimate when each generation of chips would come out. The forecast was fairly accurate. They could also estimate in which year a processor would reach a given level of performance.
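The kind of forecast described here can be sketched in a few lines. This is only an illustration that assumes a strict doubling every 24 months; the function name and the starting figures (a chip with 1,000,000 transistors in 1990) are hypothetical and not taken from any real roadmap.

```python
def projected_transistors(base_count, base_year, target_year, doubling_years=2.0):
    """Project a transistor count, assuming one doubling every `doubling_years`."""
    doublings = (target_year - base_year) / doubling_years
    return base_count * 2 ** doublings


# Hypothetical starting point: 1,000,000 transistors in 1990.
for year in (1990, 1992, 1996, 2000):
    print(year, round(projected_transistors(1_000_000, 1990, year)))
```

With a fixed doubling period, the forecast for any year depends only on how many doubling periods have passed since the starting point, which is what made planning so predictable.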

Engineers began to draw up production plans guided mainly by Moore's Law. Computer vendors knew well when each generation of machines should leave the market and when the next should appear.

Moore's law, one might say, set the rhythm for manufacturing electronic components and systems. There were no surprises, nor could there be, because everyone worked at about the same pace, trying neither to get ahead of nor to fall behind the time frames Moore had set. Everything was perfectly predictable.

PC architecture and elements

Moore's Law also allowed engineers to develop a chip design that remained the benchmark for a long time: the Intel 4004 and its successors. It relies on a dedicated architecture that came to be called the von Neumann architecture.

In March 1945, the principles of the logical architecture were set out in a document called the “First Draft of a Report on the EDVAC”, a report for the US Army Ballistic Research Laboratory, which funded the construction of ENIAC and the development of EDVAC. Since it was only a draft, the report was not intended for publication, only for circulation within the group. But Herman Goldstine, the project supervisor from the US Army, had the work duplicated and sent it to a wide circle of scientists for review. Because only von Neumann's name appeared on the first page of the document [1], readers got the false impression that he was the author of all the ideas in it. The document gave enough information for its readers to build their own computers, similar to EDVAC, on the same principles and with the same architecture, which as a result became known as the “von Neumann architecture”.

After the Second World War ended and work on ENIAC was completed in February 1946, the team of engineers and scientists broke up. John Mauchly and J. Presper Eckert decided to go into business and build computers commercially. Von Neumann, Goldstine and Burks moved to the Institute for Advanced Study, where they decided to build their own computer, the “IAS machine”, similar to EDVAC, and use it for research. In June 1946 they [2] [3] set out their principles for building computers in the now classic paper “Preliminary Discussion of the Logical Design of an Electronic Computing Instrument”. More than half a century has passed since then, but the ideas put forward in it remain valid today. The paper makes a convincing case for using the binary system to represent numbers, whereas before that computers generally stored the numbers they processed in decimal form. The authors demonstrated the advantages of the binary system for technical implementation and how convenient and simple arithmetic and logical operations are in it. Later, computers began to process non-numeric kinds of information such as text, graphics and sound, but binary coding of data still forms the informational basis of any modern computer.
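To illustrate why binary arithmetic is so convenient to implement, here is a minimal sketch of a ripple-carry adder that adds two numbers using nothing but XOR, AND and OR on single bits. It is not taken from the 1946 paper; the function names and the 8-bit default width are assumptions made for this example.

```python
def full_adder(a, b, carry_in):
    """Add three bits using only XOR, AND and OR; return (sum_bit, carry_out)."""
    partial = a ^ b
    sum_bit = partial ^ carry_in
    carry_out = (a & b) | (partial & carry_in)
    return sum_bit, carry_out


def add_binary(x, y, width=8):
    """Add two non-negative integers bit by bit, as a ripple-carry adder would."""
    result, carry = 0, 0
    for i in range(width):
        bit, carry = full_adder((x >> i) & 1, (y >> i) & 1, carry)
        result |= bit << i
    return result, carry  # carry is 1 if the sum did not fit into `width` bits


total, overflow = add_binary(0b0101, 0b0011)
print(bin(total), overflow)
```

Each bit position needs the same tiny circuit, so a wider adder is simply more copies of it. A decimal digit, by contrast, needs hardware that can distinguish ten states instead of two.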

All of these foundations were laid several decades ago and became the basis for what followed. After that almost everything remained unchanged; developers simply tried to make computers more productive.

It is worth remembering that Moore's Law underlies all of this. Every one of its incarnations served to support the basic model of how computer technology developed, and little could break that cycle. The more actively computer technology developed, the more deeply, one might say, its developers became bound to the law. After all, creating a different computer architecture takes years, and few companies could afford the luxury of searching for alternative paths. Research organizations like MIT ran bold experiments such as the Lisp Machine and the Connection Machine, and one of the Japanese projects deserves a mention here too. But it all came to nothing, and the von Neumann architecture remained.

The work of engineers and programmers now consisted of optimizing their programs and hardware so that every square millimeter of a chip worked more and more efficiently. Developers competed to cache more and more data. Manufacturers also tried (and are still trying) to fit as many cores as possible into a single processor. In any case, all of this work focused on a small number of processor architectures: x86, ARM and PowerPC. Thirty years ago there were many more.

x86 is used primarily in desktops, laptops and cloud servers. ARM processors run in phones and tablets. PowerPC, in most cases, is used in the automotive industry.

An interesting exception to the rigid rules of the game set by Moore's Law is the GPU. GPUs were developed to process graphics very efficiently, so their architecture differs from that of CPUs, and still does. To cope with this task, the GPU had to be refined independently of the evolution of processors. The graphics card architecture was optimized for processing the large amounts of data needed to draw an image on the screen. Engineers therefore developed a different type of chip, one that did not replace existing processors but complemented them.

When will Moore's law stop working?

In the classical sense discussed above, it has already stopped working. Various sources confirm this. Even so, the race is still going on. For example, the very first commercial processor, the 4-bit Intel 4004 released in 1971, had 2,300 transistors. Forty-five years later, in 2016, Intel introduced a 24-core Xeon Broadwell-WS processor with 5.7 billion transistors, manufactured on a 14 nm process. IBM recently announced a 7 nm processor with 20 billion transistors, and then a 5 nm processor with 30 billion transistors.
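As a rough sanity check, the two data points quoted here (2,300 transistors in 1971 and 5.7 billion in 2016) can be turned into an average doubling period. The sketch below assumes smooth exponential growth between the two points; the function name is made up for this example.

```python
import math


def implied_doubling_years(count_start, count_end, years):
    """Average doubling period implied by growth from count_start to count_end."""
    doublings = math.log2(count_end / count_start)
    return years / doublings


# Figures from the text: Intel 4004 (1971) versus the 2016 Xeon.
period = implied_doubling_years(2_300, 5_700_000_000, 2016 - 1971)
print(f"{period:.2f} years per doubling")
```

The result comes out at a little over two years per doubling, which is close to the 24-month formulation of the law when averaged over the whole 45-year span.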

But 5 nm is a layer only about 20 atoms thick. Here engineering is coming close to the physical limit of further process improvement. In addition, the density of transistors in modern processors is very high: a single chip carries 5 or even 10 billion of them. Signal speed inside the transistor is very high and matters a great deal. The highest core frequency reached by the fastest modern processors is 8.76 GHz. Going faster is possible, but it is a very serious engineering problem. That is why engineers chose to build multi-core processors rather than keep raising the frequency of a single core.

This made it possible to keep increasing the number of operations per second at the pace Moore's law calls for. Still, going multi-core is a certain departure from the law. Nevertheless, a number of experts believe that it does not matter how we “keep up”; what matters is that the pace of technological development, and of computer hardware in particular, more or less matches Moore's law.

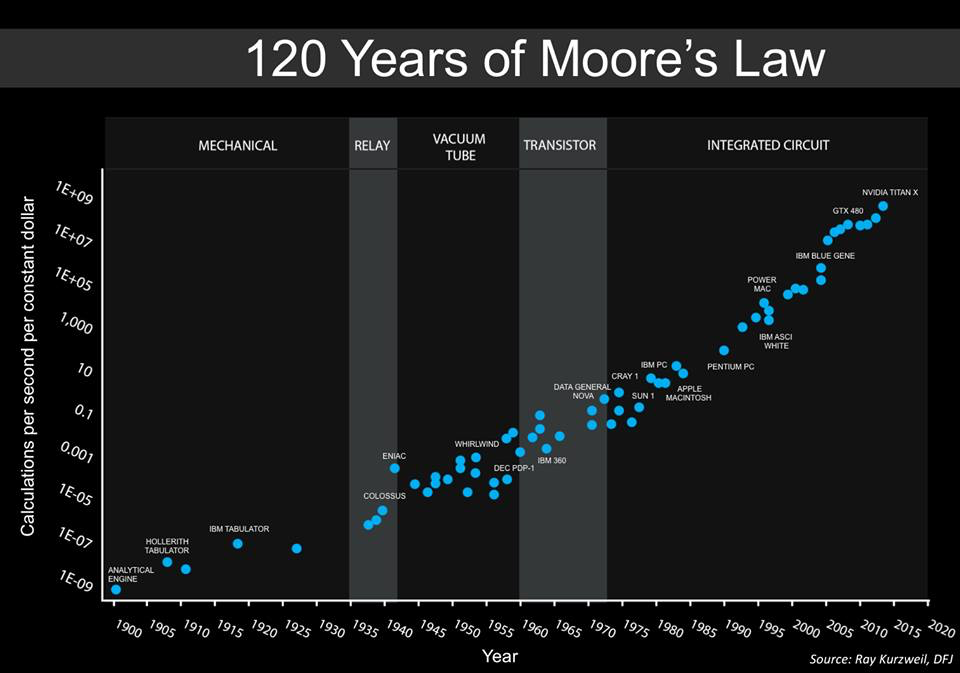

The graph here was built by Steve Jurvetson, co-founder of Draper Fisher Jurvetson. He says it is an updated version of a chart previously presented by Ray Kurzweil.

The graph shows the relative cost of a given number of operations per unit of time (1 second). In other words, it shows clearly how much cheaper computation has become over time. Computation has also become increasingly general-purpose, so to speak. In the 1940s there were specialized machines designed to break military codes. In the 1950s computers began to be used for general tasks, and the trend continues to this day.

Interestingly, the last two points on the graph are GPUs: the GTX 450 and the NVIDIA Titan X. In 2010 there were no GPUs on the graph at all, only multi-core processors.

In short, GPUs are already here, and many people are happy with them. In addition, deep learning, one of the forms neural networks take, is becoming increasingly popular. Many companies, large and small, are working on it. And GPUs are a very good fit for neural networks.
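One way to see why GPUs suit neural networks is to look at a single fully connected layer. The sketch below is plain Python with made-up toy weights, not code from any real framework. Every output is an independent dot product, so in principle all of them can be computed at the same time, and that is the kind of work a GPU spreads across its many cores.

```python
def dense_layer(inputs, weights, biases):
    """Compute one fully connected layer with a ReLU activation.

    Each output depends only on its own row of weights, so the rows
    could all be evaluated in parallel.
    """
    outputs = []
    for row, bias in zip(weights, biases):
        total = sum(w * x for w, x in zip(row, inputs)) + bias
        outputs.append(max(0.0, total))  # ReLU: negative sums become 0
    return outputs


# Hypothetical toy values, chosen only for illustration.
inputs = [1.0, 2.0, 3.0]
weights = [[0.5, -1.0, 0.25], [1.0, 1.0, 1.0]]
biases = [0.0, -1.0]
print(dense_layer(inputs, weights, biases))
```

Here the loop runs the rows one after another; on a GPU the same arithmetic would be issued for thousands of rows at once.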

Why does all this matter? The overall number of computations is still growing, but the methods and the hardware are changing.

What does all of this mean?

The very form of computing is changing now. Architects will soon no longer need to think about what else to do to keep up with Moore's law. New ideas are gradually being introduced that make it possible to reach heights inaccessible to ordinary systems with a traditional architecture. It is possible that in the near future raw computation speed will matter less, and system performance will be improved in other ways.

Self-learning systems

Many neural networks now depend on GPUs, and systems with a specialized architecture are being built for them. Google, for example, has developed its own chips, called Tensor Processing Units (TPUs). They save computing power by performing the calculations more efficiently. Google uses these chips in its data centers, and many of the company's cloud services are based on them. As a result, the systems are more efficient and consume less energy.

Specialty chips

Ordinary mobile devices now run on ARM processors, which are specialized. These processors handle information from cameras, optimize speech processing, and perform face recognition in real time. Specialization in everything is what awaits electronics.

Specialized Architecture

Yes, the von Neumann architecture is not the only option: systems with different architectures, designed for different tasks, are now being developed. This trend is not just continuing but accelerating.

Computer systems security

Cybercriminals are becoming more skilled, and hacking some systems can now bring in millions or even tens of millions of dollars. In most cases a system can be hacked because of software or hardware errors. The overwhelming majority of the techniques hackers use target systems with the von Neumann architecture and will not work on other systems.

Quantum systems

So-called quantum computers are an experimental technology that is, among other things, very expensive. They use cryogenic components and much else that conventional systems lack. Quantum computers are completely different from the computers we are used to, and Moore's law does not apply to them. According to experts, however, they can radically improve performance for some types of calculations. Perhaps it was Moore's law itself that pushed scientists and engineers to look for new ways to make computation more efficient, and they found them.

As an afterword

Most likely, within 5 to 10 years we will see entirely new computing systems; we are talking about semiconductor technology here. These systems will outstrip our boldest plans and develop at a very fast pace. Most likely, specialists trying to get around Moore's law will create new chip technologies that would seem like magic if we were told about them today. What would people who lived 50 years ago say if they were handed a modern smartphone? Few would understand how it all works. The same goes for us.
