Nvidia Era

Apparently, the era of GPU computing has arrived! Intel is in trouble. In case you haven't read my blog regularly over the past few years, I [Alex St. John] was one of the founders of the original DirectX team at Microsoft back in 1994. I created the Direct3D API together with the other original DirectX creators (Craig Eisler and Eric Engstrom) and helped promote its adoption among video game developers and graphics chip makers. My blog has many stories on this topic, but the one most directly related to this post I wrote in 2013.

Nvidia history

I think Nvidia's vision of the future of gaming is the right one, and I really enjoy living in an era when I can work with such tremendous computing power. I feel I have lived to see a time in which I can walk onto the bridge of the Enterprise and play with the warp engine. And I mean that literally: a warp is what Nvidia calls the smallest unit of parallel processes that can be run on the GPU.

Those who follow stock quotes may have noticed that Nvidia shares have recently risen sharply after many years of slow climbing. It seems to me that this sudden breakthrough signals a revolutionary shift in computing, the culmination of many years of progress in GPGPU development. Until now, Intel has held a monopoly over computing in the industrial market, successfully repelling competitors' attacks on its dominance. That dominance ends this year, and the market sees it coming. To explain what is happening and why, I will go back to my early years at Microsoft.

In the 90s, Bill Gates coined the term "coopetition" [coopetition = competition + cooperation] to describe the forced competitive partnerships with other leaders of the tech industry at that time. In conversations about Intel, the term came up especially often. While the fates and successes of Microsoft and Intel became ever more tightly intertwined, the two companies constantly fought each other for dominance. Both companies had teams of people who "specialized" in trying to gain advantages over the rival. Paul Maritz, then a senior executive at Microsoft, was very worried that Intel might try to virtualize Windows, allowing many competing operating systems to enter the market and run on a desktop PC alongside Windows. Interestingly, Paul Maritz later became CEO of VMware. And Intel was indeed actively investing in such attempts. One of its strategies was to emulate in software all the common hardware functionality that OEMs usually shipped with PCs: video cards, modems, sound cards, network equipment, and so on. By moving all of this peripheral processing onto the Intel processor, the company could choke off the sales and growth of any alternative computing platforms that might otherwise grow into a threat to Intel's CPU. Specifically, it was Intel's announcement of its 3DR technology in 1994 that prompted Microsoft to create DirectX.

I worked for a team at Microsoft that was responsible for the company's strategic positioning in the face of competitive threats in the market, the Developer Relations Group (DRG). Intel demanded that Microsoft send a representative to speak at a 3DR presentation. As Microsoft's expert on graphics and 3D, I was sent with a special mission: to assess the threat that Intel's new initiative potentially posed and to devise an effective strategy to counter it. I concluded that Intel really was trying to virtualize Windows by emulating in software every possible data processing device. I wrote a proposal called "Take Entertainment Seriously," in which I proposed to block Intel's attempts to make Windows irrelevant by creating a competitive consumer market for new hardware features. I wanted to create a new set of Windows drivers that enabled massive competition in the hardware market, so that new media capabilities in the PC game market we were creating, including audio, input, video, and networking, would depend on our own Windows drivers. Intel would be unable to keep up with the free-market competition we created among consumer hardware makers, and therefore could not build a CPU capable of effectively virtualizing all the functionality users might demand. Thus DirectX was born.

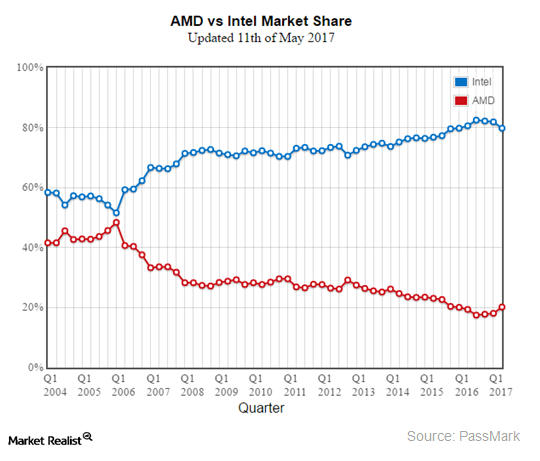

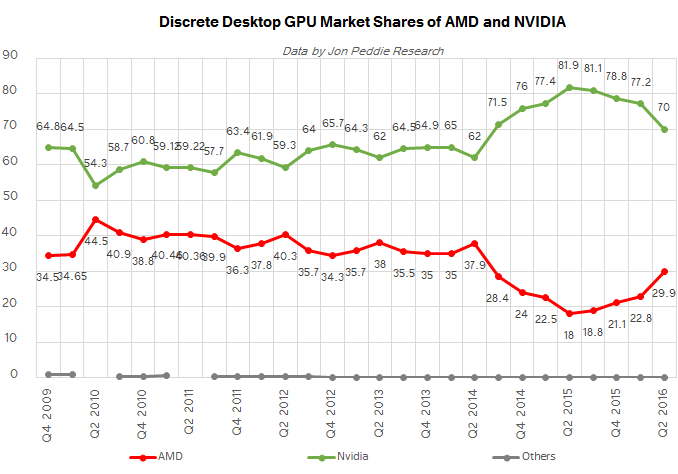

This blog has many stories about the events surrounding the creation of DirectX, but in brief, our "evil strategy" succeeded. Microsoft realized that to dominate the consumer market and contain Intel it had to focus on video games, after which dozens of 3D chip manufacturers appeared. Twenty years later, Nvidia, one of the few survivors, together with ATI (since acquired by AMD), has come to dominate first the consumer graphics market and, more recently, the industrial computing market.

This brings us back to the present, 2017, when the GPU is finally beginning to displace the once-revered x86 processor entirely. Why now, and why the GPU? The secret of x86 hegemony was the success of Windows and backward compatibility with x86 instructions reaching back to the 1970s. Intel could maintain and extend its monopoly in the industrial market because the cost of porting applications to a CPU with any other instruction set, one that held no market niche of its own, was too high. The phenomenal feature set of Windows, tied to the x86 platform, reinforced Intel's market position. The beginning of the end came when Microsoft and Intel together failed to make the leap to dominance in the emerging mobile computing market. For the first time in decades a crack appeared in the x86 CPU market. ARM processors filled it, and new operating systems from Apple and Google, alternatives to Windows, were able to capture the new market. Why couldn't Microsoft and Intel make this leap? There is a whole load of interesting reasons, but in this article I want to emphasize one: the baggage of x86 backward compatibility. For the first time, energy efficiency became more important to a CPU's success than speed. All the transistors and all the millions of lines of x86 code that Intel and Microsoft had invested in the PC became obstacles to energy efficiency. The very thing that underpinned Intel's and Microsoft's market hegemony suddenly became a hindrance.

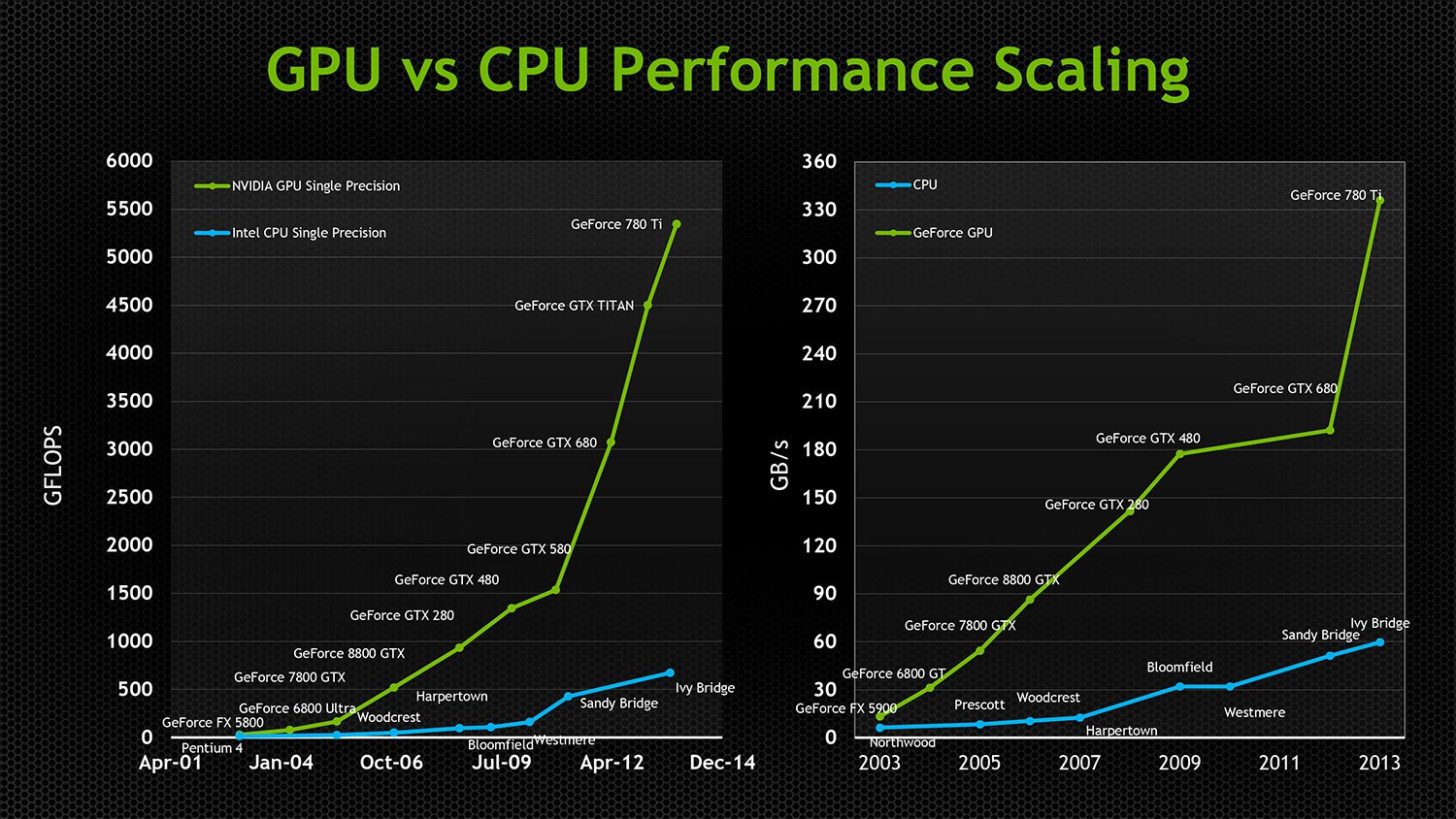

Intel's need to keep increasing speed while preserving backward compatibility forced the company to spend more and more transistors, at a growing cost in power, for ever-diminishing speed gains in each new generation of x86 processors. Backward compatibility also seriously hampered Intel's ability to parallelize its chips. The first parallel GPUs appeared in the 90s, while Intel's first dual-core CPU was released only in 2005. Even today, Intel's most powerful CPU has only 24 cores, while most modern video cards carry processors with thousands of cores. GPUs, parallel from the start, carried no backward-compatibility baggage, and thanks to architecture-independent APIs like Direct3D and OpenGL they were free to innovate and increase parallelism without compromising on compatibility or transistor efficiency. By 2005, GPUs had even become general-purpose computing platforms supporting heterogeneous parallel computation. By heterogeneity, I mean that chips from AMD and Nvidia can execute the same compiled programs despite having completely different low-level architectures and instruction sets. And while Intel's chips delivered ever smaller performance gains, the GPU doubled its speed every 12 months while halving its power consumption! Extreme parallelization made it possible to use transistors very efficiently: every transistor added to a GPU contributed directly to speed, whereas a growing share of the ever-increasing number of x86 transistors sat idle.

Although the GPU increasingly invaded the territory of industrial supercomputing, media production, and VDI, the main turning point in the market came when Google began to use GPUs effectively to train neural networks capable of very useful things. The market realized that AI would be the future of big data processing and would open up huge new automation markets. GPUs were a perfect fit for running neural networks. Up to this point, Intel had successfully relied on two approaches to suppress the growing influence of the GPU on industrial computing.

1. Intel kept PCI bus speeds low and limited the number of I/O lanes its processors supported, ensuring that GPUs would always depend on Intel processors to handle their workloads and would remain cut off from various valuable real-time, high-speed computing applications because of PCI latency and bandwidth limitations. As long as Intel's CPUs could restrict applications' access to GPU speed, Nvidia languished at the other end of the PCI bus, without access to many practically useful industrial workloads.

2. Intel bundled a cheap GPU with minimal functionality into its consumer processors, to confine Nvidia and AMD to the premium gaming market and keep them away from mass-market adoption.

The growing threat from Nvidia, and the failure of Intel's own attempts to build x86-compatible supercomputer accelerators, led Intel to choose a different tactic. It acquired Altera and wants to include programmable FPGAs in the next generation of Intel processors. This is an ingenious way to let Intel processors support greater I/O capabilities than competitors' hardware, which is limited by the PCI bus, while ensuring that the GPU gains no advantage. FPGA support lets Intel move toward parallel computing on its own chips without playing into the hands of the growing market for GPU applications. It also lets industrial computer manufacturers build highly specialized hardware that still depends on x86. This was a brilliant move by Intel, since it shut the GPU out of the industrial market on several fronts at once. Brilliant, but most likely doomed to failure.

Five consecutive news items explain why I'm sure the x86 party will end in 2017.

1. SoftBank's Vision Fund has raised $93 billion from companies eager to take Intel's place

2. SoftBank bought ARM Holdings for $32 billion

3. SoftBank bought $4 billion worth of Nvidia shares

4. Nvidia launches Project Denver [the code name for an Nvidia microarchitecture that implements the ARMv8-A 64/32-bit instruction set using a combination of a simple hardware decoder and a software binary translator with dynamic recompilation / translator's note]

5. Nvidia announced the Xavier Tegra SoC with a Volta GPU, 7 billion transistors, 512 CUDA cores, and 8 custom ARM64 cores: a mobile hybrid ARM chip whose ARM cores are accelerated by the GPU.

Why is this sequence of events important? This is the year in which the first generation of standalone GPUs becomes widely available, able to run their own OS without the obstacle of PCI. Nvidia no longer needs an x86 processor. An impressive number of consumer and industrial operating systems and applications have been ported to ARM. All the industrial and cloud markets are switching to ARM chips as controllers for a wide range of their solutions. ARM chips are already integrated with FPGAs. ARM chips consume little power at the cost of performance, but GPUs are extremely fast and efficient, so the GPU can supply the processing power while the ARM cores handle the tedious I/O and UI operations that do not require computational power. A growing number of big data, high-performance computing, and machine learning applications no longer need Windows and do not run on x86. 2017 is the year Nvidia slips its leash and becomes a truly viable competitive alternative to x86-based industrial computing in valuable new markets that x86-based solutions do not suit.

If an ARM processor is not powerful enough for your needs, IBM, in collaboration with Nvidia, is going to produce a new generation of Power9 CPUs for big data processing, with 160 PCIe lanes.

AMD is also launching the new Ryzen CPU, and, unlike Intel, AMD has no strategic interest in stifling PCI performance. Its consumer chips support 64 lanes of PCIe 3.0, and its professional chips 128. AMD is also launching a new HIP cross-compiler that makes CUDA applications compatible with AMD GPUs. Although these two companies compete with each other, both will benefit as alternative approaches to GPU computing displace Intel in the industrial market.
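To put the lane counts above in perspective, here is a rough back-of-the-envelope sketch of what they mean in bandwidth terms. The per-lane figures are my assumptions and not from the original post: PCIe 3.0 signals at 8 GT/s per lane with 128b/130b encoding, and any protocol overhead beyond that encoding is ignored. The function names and the x16 slot used for comparison are purely illustrative.

```python
# Rough theoretical PCIe 3.0 bandwidth for a given number of lanes.
# Assumptions (not from the article): 8 GT/s per lane, 128b/130b line
# encoding, one direction only, no packet/protocol overhead.

GIGATRANSFERS_PER_SEC = 8.0        # raw PCIe 3.0 signalling rate per lane
ENCODING_EFFICIENCY = 128 / 130    # 128 payload bits per 130 bits on the wire


def lane_bandwidth_gbytes() -> float:
    """Theoretical one-direction bandwidth of one PCIe 3.0 lane, in GB/s."""
    return GIGATRANSFERS_PER_SEC * ENCODING_EFFICIENCY / 8  # bits -> bytes


def total_bandwidth_gbytes(lanes: int) -> float:
    """Theoretical one-direction bandwidth of `lanes` PCIe 3.0 lanes, in GB/s."""
    return lanes * lane_bandwidth_gbytes()


if __name__ == "__main__":
    # 16 lanes is a typical single GPU slot (illustrative); 64 and 128 are
    # the lane counts quoted in the text.
    for label, lanes in [("x16 GPU slot", 16), ("64 lanes", 64), ("128 lanes", 128)]:
        print(f"{label}: ~{total_bandwidth_gbytes(lanes):.0f} GB/s per direction")
```

Under these assumptions each lane carries a little under 1 GB/s, so the number of lanes a CPU exposes directly caps how many GPUs can be fed at full speed, which is why the lane count matters to the argument.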

All this means that in the coming years GPU-based solutions will take over industrial computing at an accelerating pace, and the world of desktop interfaces will increasingly rely on cloud-based visualization or run on mobile ARM processors, since even Microsoft has announced support for ARM.

Putting it all together, I predict that in a few years we will hear only about the battle between the GPU and the FPGA for the upper hand in industrial computing, while the era of the CPU gradually comes to an end.
